This repository contains a PyTorch implementation of the MCAN paper, adapted for visual question answering (VQA) in Vietnamese.

Original MCAN: link

Using the commonly used bottom-up-attention visual features, a single MCAN model achieves 55.48% overall accuracy on the test split of the ViVQA dataset, which significantly outperforms existing state-of-the-art models for Vietnamese. Please see the paper "ViVQA: Vietnamese Visual Question Answering" for details.

June 2, 2023

You will need a machine with at least 1 GPU (>= 8GB), 20GB of memory, and 50GB of free disk space. We strongly recommend using an SSD to guarantee high-speed I/O.

You should first install some necessary packages.

- Install Python >= 3.5

- Install PyTorch >= 0.4.1 with CUDA (PyTorch 1.x and 2.0 are also supported).

$ pip install -r requirements.txt
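Before moving on, it can help to confirm that the machine meets the requirements listed above. The following is a minimal sketch, not part of this repository: the function name `check_environment` and the thresholds are assumptions taken from the requirements stated in this README (Python >= 3.5, 50GB free disk, a GPU with >= 8GB). It only reports problems and does not change anything.

```python
import importlib.util
import shutil
import sys

# Thresholds copied from the requirements in this README (assumed, not enforced by the repo).
MIN_PYTHON = (3, 5)
MIN_FREE_DISK_GB = 50
MIN_GPU_MEMORY_GB = 8


def check_environment(path="."):
    """Return a list of human-readable problems; an empty list means every check passed."""
    problems = []

    # Python version check.
    if sys.version_info < MIN_PYTHON:
        problems.append("Python >= {}.{} is required".format(*MIN_PYTHON))

    # Free disk space on the drive that holds `path`.
    free_gb = shutil.disk_usage(path).free / 1e9
    if free_gb < MIN_FREE_DISK_GB:
        problems.append("only {:.1f}GB of free disk space".format(free_gb))

    # PyTorch and GPU checks; skipped gracefully if PyTorch is missing.
    if importlib.util.find_spec("torch") is None:
        problems.append("PyTorch is not installed")
    else:
        import torch

        if not torch.cuda.is_available():
            problems.append("no CUDA-capable GPU is visible to PyTorch")
        else:
            gpu_gb = torch.cuda.get_device_properties(0).total_memory / 1e9
            if gpu_gb < MIN_GPU_MEMORY_GB:
                problems.append("GPU 0 has only {:.1f}GB of memory".format(gpu_gb))

    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

Run it from the repository root; no output means none of the checks failed.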

- Download the ViVQA dataset. For more information about relevant published papers and datasets, please visit UIT-NLP.

After setup, the dataset folder should have the following structure:

-- data

|-- train-val

| |-- 000000000001.jpg

| |-- ...

|-- test

| |-- 000000000002.jpg

| |-- ...

|-- vivqa_train.json

|--

{
  "images": [
    {
      "id": 68857,
      "filename": "000000068857.jpg",
      "filepath": "train"
    },...
  ],
  "annotations": [
    {
      "id": 5253,
      "image_id": 482062,
      "question": "cái gì trên đỉnh đồi cỏ nhìn lên bầu trời xanh sáng",
      "answers": [
        "ngựa vằn"
      ]
    },...
  ]
}

|-- vivqa_dev.json

|--

|-- vivqa_test.json

|--
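To make the annotation layout above concrete, here is a minimal sketch of how the "images" and "annotations" lists could be joined into question/answer samples. It is not the repository's own loader: the helper names `build_samples` and `load_samples` are hypothetical, the example image entry is made up for illustration, and the code assumes that "filepath" names a folder under the data root.

```python
import json
import os


def build_samples(data, data_root="data"):
    """Pair each annotation with its image entry via image_id (hypothetical helper)."""
    # Index image entries by id for constant-time lookup.
    images = {img["id"]: img for img in data["images"]}

    samples = []
    for ann in data["annotations"]:
        img = images.get(ann["image_id"])
        if img is None:
            # Skip annotations whose image is not listed in "images".
            continue
        samples.append({
            # Assumption: "filepath" is a folder name relative to data_root.
            "image_path": os.path.join(data_root, img["filepath"], img["filename"]),
            "question": ann["question"],
            "answers": ann["answers"],
        })
    return samples


def load_samples(annotation_file, data_root="data"):
    """Read an annotation file such as vivqa_train.json and build samples from it."""
    with open(annotation_file, encoding="utf-8") as f:
        return build_samples(json.load(f), data_root)


# Illustrative data only: the image entry below is invented to match the
# annotation shown in this README.
example = {
    "images": [
        {"id": 482062, "filename": "000000482062.jpg", "filepath": "train"},
    ],
    "annotations": [
        {
            "id": 5253,
            "image_id": 482062,
            "question": "cái gì trên đỉnh đồi cỏ nhìn lên bầu trời xanh sáng",
            "answers": ["ngựa vằn"],
        },
    ],
}

if __name__ == "__main__":
    for sample in build_samples(example):
        print(sample["image_path"], sample["question"], sample["answers"])
```

Indexing images by id first avoids a nested scan over both lists, which matters once the annotation file holds many thousands of questions.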

The following command starts training with the default hyperparameters in config.yaml:

$ python3 main.py --config config.yaml

To run on the test images instead of training, open main.py and comment out the training call, like this: "#STVQA_Task(config).training()". Then open config.yaml and set "images_folder" to the test images folder, like this: "images_folder: test".

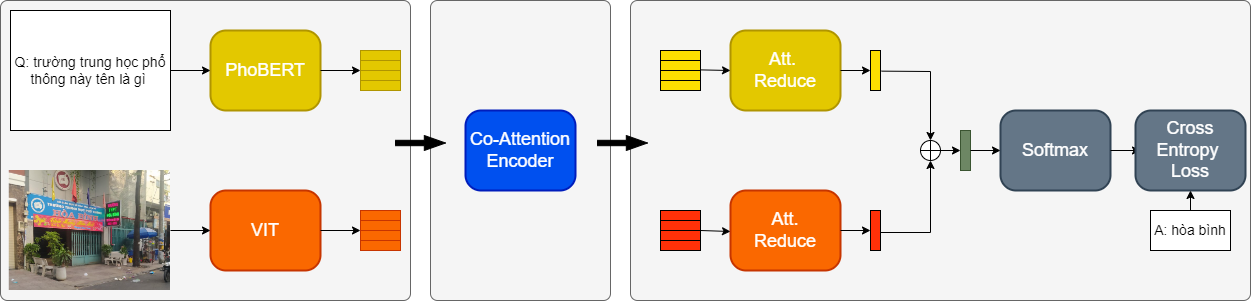

$ python3 main.py --config config.yaml

We use two pretrained models, ViT (Vision Transformer) and PhoBERT, each described in its own paper.