Mobile-use is a powerful, open-source AI agent that controls your Android or iOS device using natural language. It understands your commands and interacts with the UI to perform tasks, from sending messages to navigating complex apps.

Mobile-use is quickly evolving. Your suggestions, ideas, and reported bugs will shape this project. Join the conversation on Discord or contribute directly; we will reply to everyone! ❤️

- 🗣️ Natural Language Control: Interact with your phone using your native language.

- 📱 UI-Aware Automation: Intelligently navigates through app interfaces (note: currently has limited effectiveness with games as they don't provide accessibility tree data).

- 📊 Data Scraping: Extract information from any app and structure it into your desired format (e.g., JSON) using a natural language description.

- 🔧 Extensible & Customizable: Easily configure different LLMs for the agents that power mobile-use.

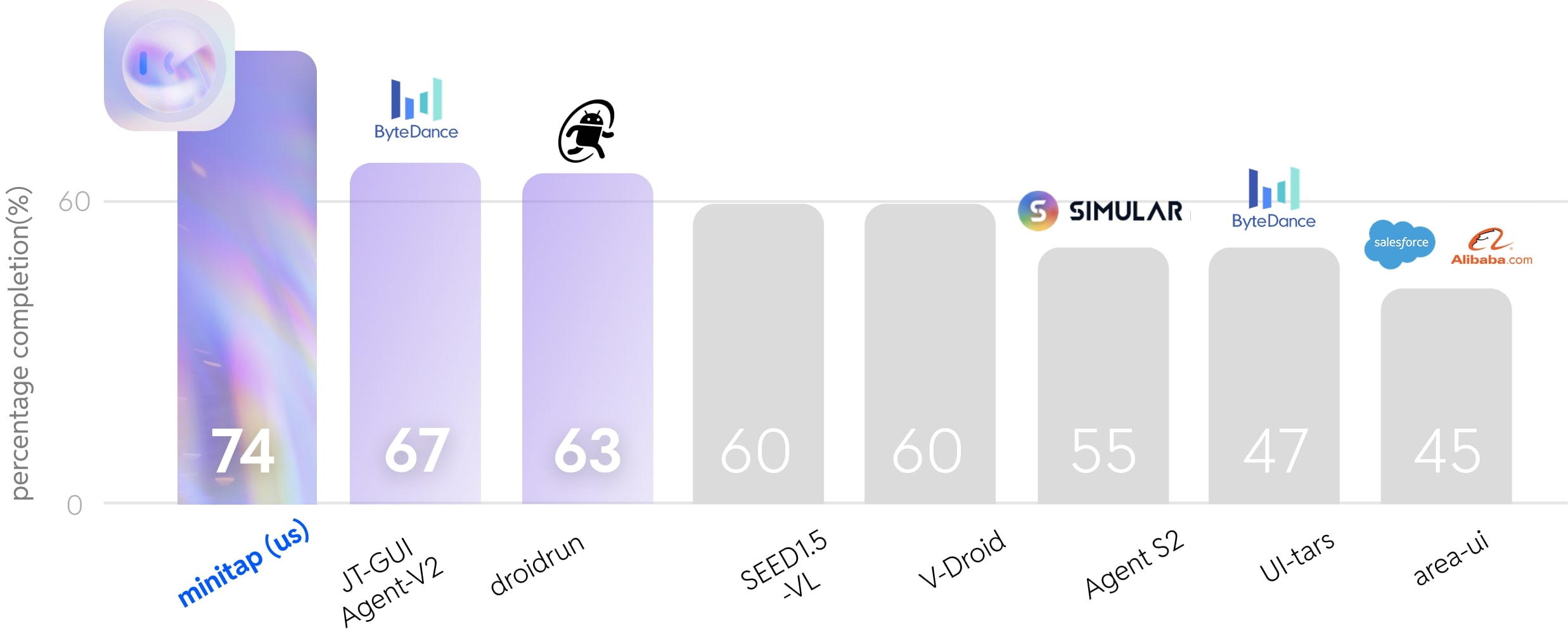

We rank number 1 globally among open-source agents for pass@1 on the AndroidWorld benchmark.

More info here: https://minitap.ai/research/mobile-ai-agents-benchmark

The official leaderboard is available here

Ready to automate your mobile experience? Follow these steps to get mobile-use up and running.

- Set up Environment Variables: Copy the example `.env.example` file to `.env` and add your API keys.

      cp .env.example .env

- (Optional) Customize LLM Configuration: To use different models or providers, create your own LLM configuration file.

      cp llm-config.override.template.jsonc llm-config.override.jsonc

  Then, edit `llm-config.override.jsonc` to fit your needs.

  You can also use local LLMs or any other OpenAI-API-compatible provider:

  - Set `OPENAI_BASE_URL` and `OPENAI_API_KEY` in your `.env`.
  - In your `llm-config.override.jsonc`, set `openai` as the provider for the agent nodes you want, and choose a model supported by your provider.
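As a quick sanity check for the `.env` step above, the sketch below parses a `.env`-style file and reports which of the two variables are missing or empty. The helper names `parse_env` and `missing_openai_vars` are hypothetical and not part of mobile-use, and the parser is deliberately simplistic: it assumes plain `KEY=VALUE` lines with `#` comments and does not handle multi-line values or `export` prefixes.

```python
from pathlib import Path

# The two variables the step above asks you to set.
REQUIRED = ("OPENAI_BASE_URL", "OPENAI_API_KEY")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_openai_vars(text: str) -> list[str]:
    """Return the required variables that are absent or empty."""
    env = parse_env(text)
    return [name for name in REQUIRED if not env.get(name)]


if __name__ == "__main__":
    env_file = Path(".env")
    text = env_file.read_text() if env_file.exists() else ""
    missing = missing_openai_vars(text)
    print("Missing:", ", ".join(missing) if missing else "none")
```

Running the script from the repository root prints `Missing: none` once both variables have non-empty values.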

Note

If you want to use Google Vertex AI, you must either:

- Have credentials configured for your environment (gcloud, workload identity, etc.)
- Store the path to a service account JSON file as the `GOOGLE_APPLICATION_CREDENTIALS` environment variable

More information:

- Credential types
- google.auth API reference
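To see which of the two Vertex AI options applies on your machine, a small standard-library check along these lines may help. The function name `check_service_account_file` is hypothetical; the only fact it relies on is that service account key files are JSON documents whose `type` field is `service_account`. It does not verify that the credentials actually work.

```python
import json
import os
from pathlib import Path


def check_service_account_file(env: dict[str, str]) -> str:
    """Describe the state of GOOGLE_APPLICATION_CREDENTIALS in `env`."""
    path = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not path:
        # First option: credentials come from the environment itself.
        return "unset: relying on ambient credentials (gcloud, workload identity, ...)"
    file = Path(path)
    if not file.is_file():
        return f"error: {path} does not exist"
    try:
        data = json.loads(file.read_text())
    except json.JSONDecodeError:
        return f"error: {path} is not valid JSON"
    # Service account key files carry a "type" field set to "service_account".
    if not isinstance(data, dict) or data.get("type") != "service_account":
        return f"warning: {path} is JSON but not a service account key"
    return f"ok: service account key found at {path}"


if __name__ == "__main__":
    print(check_service_account_file(dict(os.environ)))
```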


Note

This quickstart is currently only available for Android devices and emulators, and it requires Docker to be installed.

First:

- Either plug in your Android device and enable USB debugging via the Developer Options

- Or launch an Android emulator

Important

At some point, the terminal will HANG while Maestro prompts you with: `Maestro CLI would like to collect anonymous usage data to improve the product.`

It's up to you whether you accept (i.e., enter 'Y') or not (i.e., enter 'n').

Then run in your terminal:

- For Linux/macOS:

      chmod +x mobile-use.sh
      bash ./mobile-use.sh \
        "Open Gmail, find first 3 unread emails, and list their sender and subject line" \
        --output-description "A JSON list of objects, each with 'sender' and 'subject' keys"

- For Windows (inside a PowerShell terminal):

      powershell.exe -ExecutionPolicy Bypass -File mobile-use.ps1 `
        "Open Gmail, find first 3 unread emails, and list their sender and subject line" `
        --output-description "A JSON list of objects, each with 'sender' and 'subject' keys"

Note

If using your own device, make sure to accept the ADB-related connection requests that will pop up on your device. Similarly, Maestro will need to install its APK on your device, which will also require you to accept the installation request.

The script will try to connect to your device via IP.

Therefore, your device must be connected to the same Wi-Fi network as your computer.

If the script fails with the following message:

`Could not get device IP. Is a device connected via USB and on the same Wi-Fi network?`

Then it couldn't find one of the common Wi-Fi interfaces on your device.

In that case, determine which WLAN interface your phone is using by running `adb shell ip addr show up`.

Then add the `--interface <YOUR_INTERFACE_NAME>` option to the script.
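If the `ip addr` output is long, a short script can pick out the candidates for you. The sketch below is not part of mobile-use: the function names are hypothetical, and it assumes the usual iproute2 layout, where each interface starts with a numbered header line followed by indented `inet` lines. It lists every non-loopback interface that has an IPv4 address; the Wi-Fi one is typically named something like `wlan0`.

```python
import re
import subprocess

# Header lines look like "30: wlan0: <BROADCAST,...> mtu 1500 ..."
HEADER = re.compile(r"^\d+:\s+([^:@\s]+)")
# Address lines look like "    inet 192.168.1.42/24 brd ... scope global wlan0"
INET = re.compile(r"^\s+inet\s+(\d+\.\d+\.\d+\.\d+)/\d+")


def ipv4_interfaces(ip_addr_output: str) -> dict[str, str]:
    """Map interface name -> first IPv4 address, skipping loopback."""
    found = {}
    current = None
    for line in ip_addr_output.splitlines():
        header = HEADER.match(line)
        if header:
            current = header.group(1)
            continue
        inet = INET.match(line)
        if inet and current and current != "lo":
            found.setdefault(current, inet.group(1))
    return found


def device_ip_addr_output() -> str:
    """Run the adb command from the text above (needs adb and a device)."""
    result = subprocess.run(
        ["adb", "shell", "ip", "addr", "show", "up"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

With a device connected, `ipv4_interfaces(device_ip_addr_output())` returns a dictionary of interface names and addresses; pass the matching name to `--interface`.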

This is most probably caused by your firewall blocking the connection, so there is no single clear fix.

Since the uv Docker images rely on public ghcr.io repositories, you may have an expired token if you previously used ghcr.io for private repositories.

Try running `docker logout ghcr.io` and then run the script again.

For developers who want to set up the environment manually:

Mobile-use currently supports the following devices:

- Physical Android Phones: Connect via USB with USB debugging enabled.

- Android Emulators: Set up through Android Studio.

- iOS Simulators: Supported for macOS users.

Note

Physical iOS devices are not yet supported.

For Android:

- Android Debug Bridge (ADB): A tool to connect to your device.

For iOS:

- Xcode: Apple's IDE for iOS development.

Before you begin, ensure you have the following installed:

- uv: A lightning-fast Python package manager.

- Maestro: The framework we use to interact with your device.
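Before continuing, you may want to confirm that the tools listed above are actually on your `PATH`. The following sketch is only an illustration: `missing_tools` is a hypothetical helper, the executable names are the usual CLI entry points for each tool, and using `xcrun` as a stand-in for "Xcode is installed" is an assumption that gives only a rough signal.

```python
import shutil

# Tools needed on every platform, then per-platform extras.
COMMON_TOOLS = ["uv", "maestro"]
# Assumption: "xcrun" is used as a rough proxy for an Xcode installation.
PLATFORM_TOOLS = {"android": ["adb"], "ios": ["xcrun"]}


def missing_tools(platform: str, which=shutil.which) -> list[str]:
    """Return the required executables that are not found on PATH."""
    required = COMMON_TOOLS + PLATFORM_TOOLS[platform]
    return [tool for tool in required if which(tool) is None]


if __name__ == "__main__":
    for platform in PLATFORM_TOOLS:
        missing = missing_tools(platform)
        status = "ok" if not missing else "missing " + ", ".join(missing)
        print(f"{platform}: {status}")
```

The `which` parameter exists only so the lookup can be swapped out when testing; in normal use the default `shutil.which` is what you want.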

- Clone the repository:

      git clone https://github.com/minitap-ai/mobile-use.git && cd mobile-use

- Create & activate the virtual environment:

      # This will create a .venv directory using the Python version in .python-version
      uv venv
      # Activate the environment
      # On macOS/Linux:
      source .venv/bin/activate
      # On Windows:
      .venv\Scripts\activate

- Install dependencies:

      # Sync with the locked dependencies for a consistent setup
      uv sync

To run mobile-use, simply pass your command as an argument.

Example 1: Basic Command

    python ./src/mobile_use/main.py "Go to settings and tell me my current battery level"

Example 2: Data Scraping

Extract specific information and get it back in a structured format. For instance, to get a list of your unread emails:

    python ./src/mobile_use/main.py \
      "Open Gmail, find all unread emails, and list their sender and subject line" \
      --output-description "A JSON list of objects, each with 'sender' and 'subject' keys"

Note

If you haven't configured a specific model, mobile-use will prompt you to choose one from the available options.
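Since the data scraping example asks for "a JSON list of objects, each with 'sender' and 'subject' keys", it can be worth validating the result before feeding it to other tools. The sketch below assumes you have already captured the agent's final answer as a JSON string; how you capture it is up to you, and `parse_email_list` is a hypothetical helper, not a mobile-use API.

```python
import json


def parse_email_list(raw: str) -> list[dict[str, str]]:
    """Parse text expected to be a JSON list of {'sender', 'subject'} objects."""
    data = json.loads(raw)
    if not isinstance(data, list):
        raise ValueError("expected a JSON list")
    for index, item in enumerate(data):
        # Each entry must be an object carrying both requested keys.
        if not isinstance(item, dict) or not {"sender", "subject"}.issubset(item):
            raise ValueError(f"item {index} lacks 'sender' or 'subject'")
    return data


if __name__ == "__main__":
    # Hand-written sample input, not real agent output.
    sample = '[{"sender": "Alice", "subject": "Lunch on Friday?"}]'
    for email in parse_email_list(sample):
        print(f"{email['sender']}: {email['subject']}")
```

Language models occasionally return output that drifts from the requested shape, so failing loudly with a `ValueError` is usually preferable to silently passing malformed data downstream.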

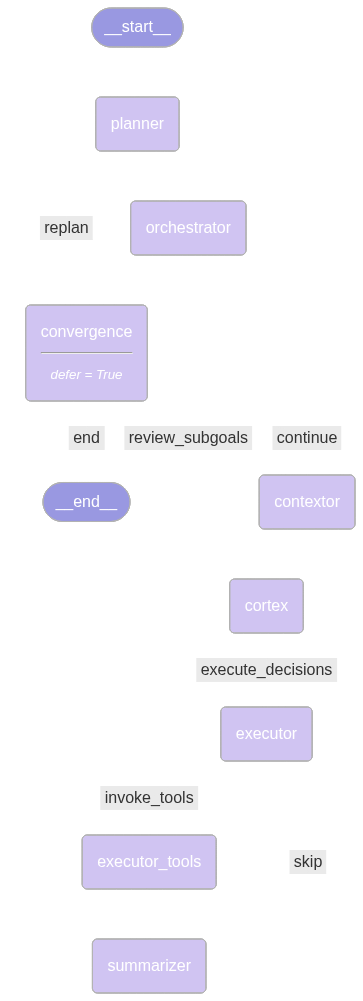

This diagram, which is automatically updated from the codebase, shows our current agentic system architecture.

We love contributions! Whether you're fixing a bug, adding a feature, or improving documentation, your help is welcome. Please read our Contributing Guidelines to get started.

This project is licensed under the MIT License - see the LICENSE file for details.