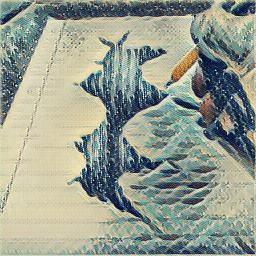

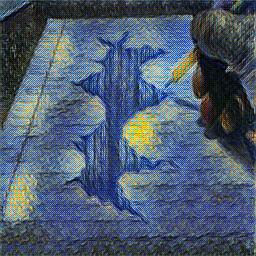

This is a TensorFlow implementation of Perceptual Losses for Real-Time Style Transfer and Super-Resolution that uses instance normalization as a regularizer to improve training efficiency and test results.<sup>1</sup>

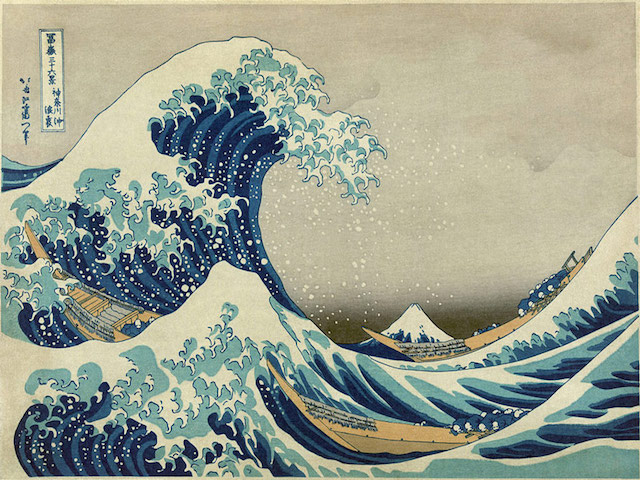

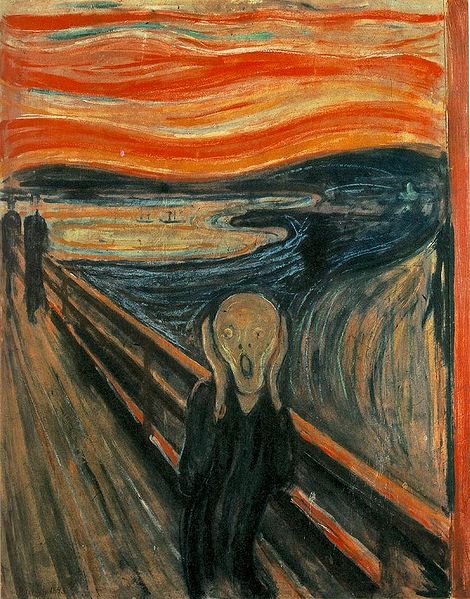

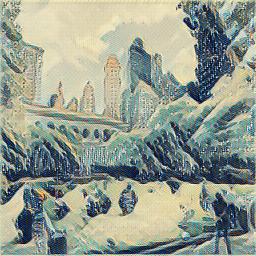

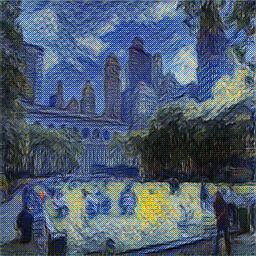

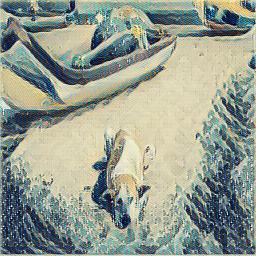

The loss derived from A Neural Algorithm of Artistic Style is used to train a generative neural network to apply artistic style transfer to an input image. Total variation denoising is used as a regularizer to reduce unrealistic contrast between adjacent pixels, which in turn reduces visible noise.
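As a rough illustration of the total variation term, the NumPy sketch below sums the squared differences between vertically and horizontally adjacent pixels. The function name `total_variation` and the choice of squared (rather than absolute) differences are assumptions made for illustration; the repository's own loss may be written and weighted differently.

```python
import numpy as np


def total_variation(image):
    """Sum of squared differences between adjacent pixels.

    image: array of shape (height, width, channels).
    Hypothetical helper; not taken from this repository.
    """
    # Differences between each pixel and the one directly below it.
    dh = image[1:, :, :] - image[:-1, :, :]
    # Differences between each pixel and the one directly to its right.
    dw = image[:, 1:, :] - image[:, :-1, :]
    return float(np.sum(dh ** 2) + np.sum(dw ** 2))


# A flat image has no variation; adding noise increases the penalty.
flat = np.full((4, 4, 3), 0.5)
noisy = flat + np.random.RandomState(0).normal(scale=0.1, size=flat.shape)
```

Adding a term like this to the training loss penalizes high-frequency noise in the generated image, since smooth regions contribute almost nothing to the sum.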

Unlike the non-feed-forward implementation, this implementation is deterministic due to the nature of the generator.

A generative convolutional neural network was used with downsampling layers followed by residual blocks. These are followed by upsampling layers implemented with fractionally strided convolutions, which have been incorrectly termed "deconvolution" in some publications. Normalization of inputs is performed to reduce internal covariate shift.
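To make the normalization step concrete, here is a minimal NumPy sketch of instance normalization next to batch normalization. It assumes a `(batch, height, width, channels)` layout and omits the learned scale and offset parameters; the function names are hypothetical and do not come from this repository.

```python
import numpy as np


def instance_norm(x, epsilon=1e-5):
    """Normalize each channel of each image over its own spatial dimensions.

    x: array of shape (batch, height, width, channels).
    """
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + epsilon)


def batch_norm(x, epsilon=1e-5):
    """Normalize each channel over the whole batch and spatial dimensions."""
    mean = x.mean(axis=(0, 1, 2), keepdims=True)
    var = x.var(axis=(0, 1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + epsilon)


# Two images with very different brightness and contrast.
rng = np.random.RandomState(0)
x = np.stack([rng.normal(0.0, 1.0, (8, 8, 3)),
              rng.normal(5.0, 3.0, (8, 8, 3))])
y = instance_norm(x)
```

The only difference between the two functions is the set of axes the statistics are computed over: instance normalization treats every image independently, so its result does not depend on the other images in the batch.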

A pretrained VGG network was used to describe the generator's output. It is provided here by machrisaa on GitHub. The VGG implementation is of the 16-layer variety and was customized to accommodate this implementation's requirements.
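In A Neural Algorithm of Artistic Style, style is compared through Gram matrices of the descriptor network's feature maps. The NumPy sketch below shows that computation on a single feature map. The helper names and the normalization by `height * width * channels` are assumptions for illustration, not this repository's code.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    features: array of shape (height, width, channels).
    Returns an array of shape (channels, channels).
    """
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    # Normalizing by the number of elements is one common convention.
    return np.dot(flat.T, flat) / (h * w * c)


def style_loss(generated_features, style_features):
    """Mean squared difference between the two Gram matrices."""
    diff = gram_matrix(generated_features) - gram_matrix(style_features)
    return float(np.mean(diff ** 2))


features = np.random.RandomState(0).normal(size=(4, 4, 3))
gram = gram_matrix(features)
```

Because the Gram matrix sums over all spatial positions, it captures which features co-occur without recording where they occur, which is why it serves as a style statistic rather than a content one.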

<sup>1</sup> The generative network can still support batch normalization if needed. Simply replace instance normalization calls with batch normalization and adjust the generator's input size.

- Python 3.5

- TensorFlow (>= r1.2)

- scikit-image

- NumPy

To train a generative model to apply artistic style transfer, invoke train.py with the file path of the image whose style you'd like to learn. A new directory with the name of the file will be created under lib/generators, and it will contain the network's trained parameter values for future use.

python train.py /path/to/style/image

To apply artistic style transfer to an image, invoke test.py with its file path through --input and specify the desired style through --style (e.g., "starry-night").

python test.py --input /path/to/input/image --style "style name"

To list available styles (trained models in lib/generators), invoke test.py with the --styles flag.

python test.py --styles
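The relationship between a style image, its directory under lib/generators, and the name passed to --style can be sketched as follows. This is only an illustration under stated assumptions: that the directory name is the image's file name without its extension (suggested by the "starry-night" example), and that each subdirectory of lib/generators is one trained style. The functions `style_name` and `available_styles` are hypothetical and are not part of train.py or test.py.

```python
import os

# Assumed location of trained models, relative to the working directory.
GENERATORS_DIR = os.path.join("lib", "generators")


def style_name(style_image_path):
    """Derive a style name from an image path (assumes the extension is dropped)."""
    return os.path.splitext(os.path.basename(style_image_path))[0]


def available_styles(generators_dir=GENERATORS_DIR):
    """List the subdirectories of generators_dir, one per trained style."""
    if not os.path.isdir(generators_dir):
        return []
    return sorted(
        name for name in os.listdir(generators_dir)
        if os.path.isdir(os.path.join(generators_dir, name))
    )
```

Under these assumptions, training on `/path/to/starry-night.jpg` would yield a style named `starry-night`, which is the value to pass to --style.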

- Stylizes an image using a generative model trained on a particular style.

- Trains a generative model for stylizing an unseen input image with a particular style.

- Trainer class for training a new generative model. This script is called from train.py after a style image path is specified.

- Generative model with the architectural specifications suited for artistic style transfer.

- Helper class with methods ranging from image retrieval to auxiliary math functions.

- Discriminative model trained on image classification, used for gathering descriptive statistics for the loss measure.

- The weights used by the VGG network. This file is not in this repository due to its size. You must download it and place it in the working directory. If the program does not find the file, it will report the problem and supply a download link.