Publish HASS events to your Elasticsearch cluster!

- Efficiently publishes Home-Assistant events to Elasticsearch using the Bulk API

- Automatically sets up Datastreams or maintains Indexes and Index Templates using Index Lifecycle Management ("ILM") depending on your cluster's capabilities

- Supports Elastic's stack security features via optional username, password, and API keys

- Exclude specific entities or groups from publishing

- Elasticsearch 8.0+, 7.11+ (Self or Cloud hosted). Version 0.4.0 includes support for older versions of Elasticsearch.

- Elastic Common Schema version 1.0.0

- Home Assistant Community Store

- Home Assistant 2024.1
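To give a feel for the Bulk API publishing mentioned in the feature list, here is a minimal sketch of how a batch of events could be serialized into the newline-delimited JSON body that the Bulk API expects. It is not the integration's actual code: the function name `build_bulk_body`, the event field names, and the data stream name `metrics-homeassistant.example-default` are all assumptions made for illustration.

```python
import json


def build_bulk_body(events, target):
    """Serialize events into a newline-delimited Bulk API body (illustrative only)."""
    lines = []
    for event in events:
        # Action line: "create" is the operation type that data streams accept.
        lines.append(json.dumps({"create": {"_index": target}}))
        # Source line: the document to be indexed.
        lines.append(json.dumps(event))
    # The Bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


# Hypothetical events; these field names are not the integration's real schema.
events = [
    {"@timestamp": "2024-01-01T00:00:00+00:00", "entity_id": "sensor.fridge_temperature", "value": 3.5},
    {"@timestamp": "2024-01-01T00:01:00+00:00", "entity_id": "sensor.freezer_temperature", "value": -18.0},
]

# Hypothetical data stream name that matches the metrics-homeassistant* pattern.
body = build_bulk_body(events, "metrics-homeassistant.example-default")
print(body)
```

Sending many events in one request like this avoids a round trip per event, which is the main reason to prefer the Bulk API for frequent state changes.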

Some usage examples inspired by real users:

- On a Raspberry Pi in kiosk mode with a 15" display, the homeassistant-elasticsearch integration drives rotating fullscreen Elasticsearch Canvas displays. These displays show metrics collected from various Home Assistant integrations, offering visually dynamic and informative dashboards for monitoring smart home data.

- To address temperature maintenance issues in refrigerators and freezers, temperature sensors in each appliance report data to Home Assistant, which is then published to Elasticsearch. Kibana's alerting framework is employed to set up rules that notify the user if temperatures deviate unfavorably for an extended period. The Elastic rule engine and aggregations simplify the monitoring process for this specific use case.

- To monitor the humidity and temperature in a snake enclosure belonging to a user's daughter, the integration makes Elastic's Alerting framework available. The framework suits these monitoring requirements and offers a more intuitive solution than Home Assistant automations.

- The integration lets users keep a smaller subset of data, focused on individual stats of interest, for much longer than is practical with Home Assistant and databases like MariaDB/MySQL. This longer retention supports very long-term trend analysis, such as for weather data.

The Elasticsearch component requires, well, Elasticsearch! This component will not host or configure Elasticsearch for you, but there are many ways to run your own cluster. Elasticsearch is open source and free to use: just bring your own hardware! Elastic has a great setup guide if you need help getting your first cluster up and running.

If you don't want to maintain your own cluster, then give the Elastic Cloud a try! There is a free trial available to get you started.

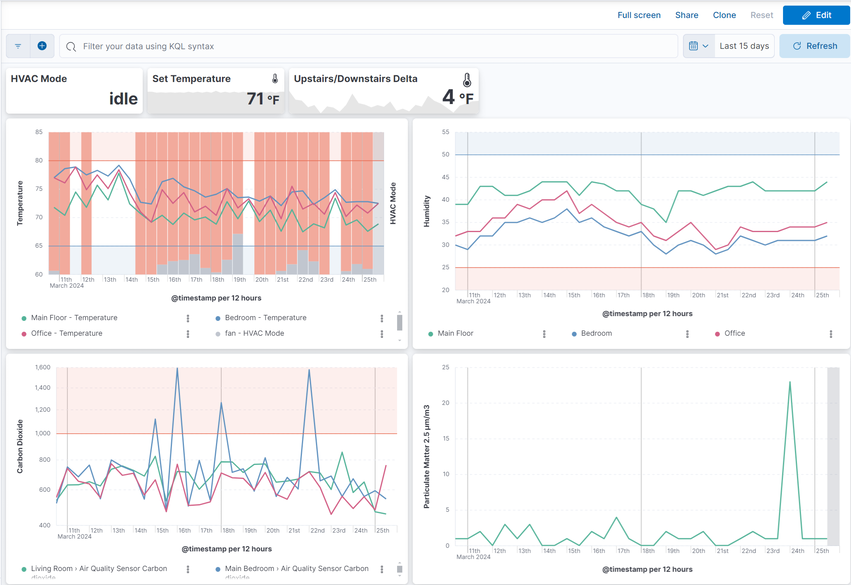

Graph your home's climate and HVAC Usage:

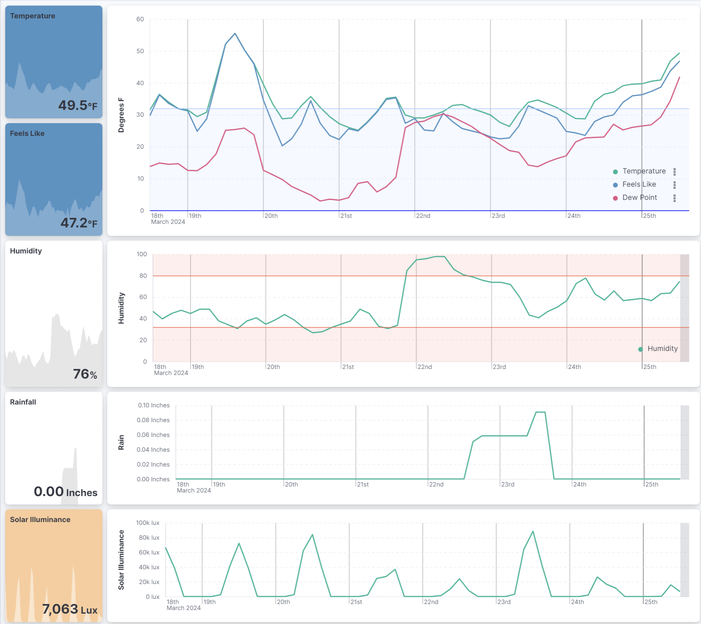

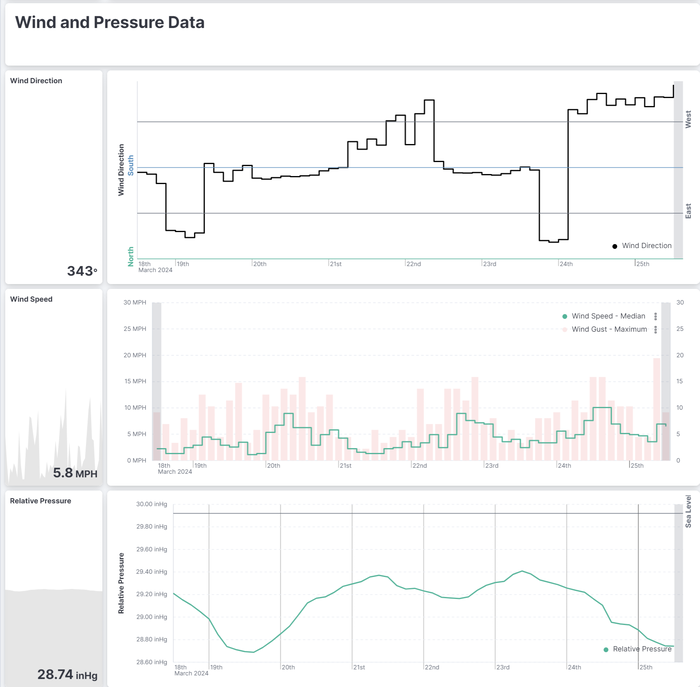

Visualize and alert on data from your weather station:

This component is available via the Home Assistant Community Store (HACS) in their default repository. Visit https://hacs.xyz/ for more information on HACS.

Alternatively, you can manually install this component by copying the contents of custom_components to your $HASS_CONFIG/custom_components directory, where $HASS_CONFIG is the location on your machine where Home-Assistant lives.

Example: /home/pi/.homeassistant and /home/pi/.homeassistant/custom_components. You may have to create the custom_components directory yourself.

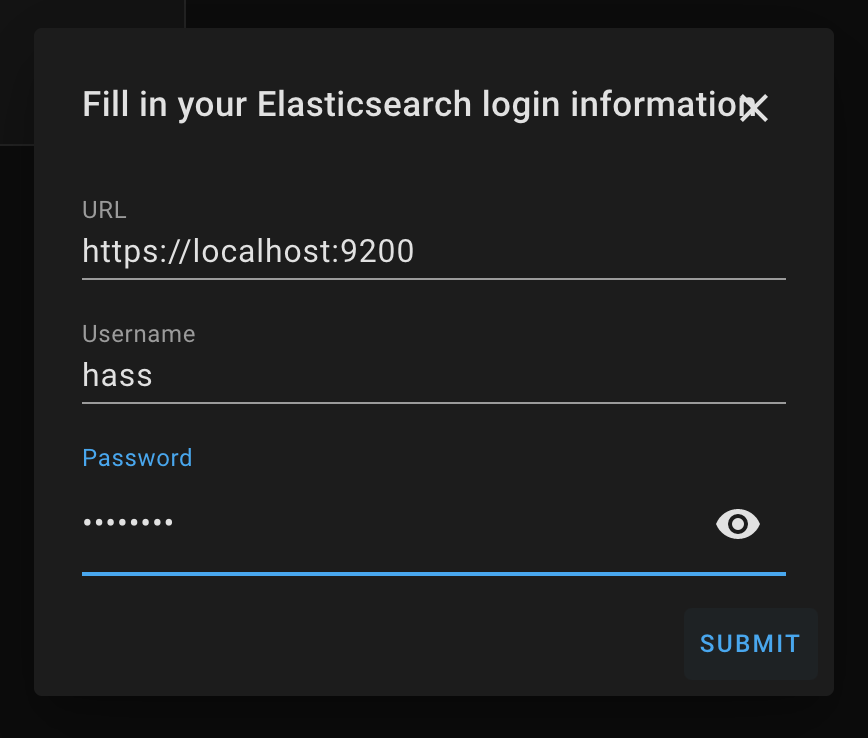

The integration supports authenticating via API Key or via Username and Password, as well as unauthenticated access.
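As a rough illustration of how these three modes differ on the wire, the sketch below builds the HTTP `Authorization` header for each one. Elasticsearch accepts `ApiKey` followed by the base64 encoding of `id:api_key`, and standard HTTP Basic authentication for a username and password. The helper name `build_auth_headers` and the example credentials are assumptions for illustration; this is not how the integration itself is implemented.

```python
import base64


def build_auth_headers(api_key_id=None, api_key=None, username=None, password=None):
    """Return request headers for the chosen authentication mode (illustrative only)."""
    if api_key_id and api_key:
        # API key auth: base64 of "<id>:<api_key>", prefixed with "ApiKey".
        token = base64.b64encode(f"{api_key_id}:{api_key}".encode("utf-8")).decode("ascii")
        return {"Authorization": f"ApiKey {token}"}
    if username and password:
        # Basic auth: base64 of "<username>:<password>", prefixed with "Basic".
        token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
        return {"Authorization": f"Basic {token}"}
    # Unauthenticated access: no Authorization header at all.
    return {}


# Placeholder credentials, not real values.
print(build_auth_headers(api_key_id="example-id", api_key="example-secret"))
print(build_auth_headers(username="hass_writer", password="changeme"))
print(build_auth_headers())
```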

You must first create an API Key with the appropriate privileges.

Note that if you adjust the index_format or alias settings, the role definition must be updated accordingly:

    POST /_security/api_key
    {
      "name": "home_assistant_component",
      "role_descriptors": {
        "hass_writer": {
          "cluster": [
            "manage_index_templates",
            "manage_ilm",
            "monitor"
          ],
          "indices": [
            {
              "names": [
                "hass-events*",
                "active-hass-index-*",
                "all-hass-events",
                "metrics-homeassistant*"
              ],
              "privileges": [
                "manage",
                "index",
                "create_index",
                "create"
              ]
            }
          ]
        }
      }
    }

If you choose not to authenticate via an API Key, you need to create a user and role with appropriate privileges.

    # Create role
    POST /_security/role/hass_writer
    {
      "cluster": [
        "manage_index_templates",
        "manage_ilm",
        "monitor"
      ],
      "indices": [
        {
          "names": [
            "hass-events*",
            "active-hass-index-*",
            "all-hass-events",
            "metrics-homeassistant*"
          ],
          "privileges": [
            "manage",
            "index",
            "create_index",
            "create"
          ]
        }
      ]
    }

    # Create user
    POST /_security/user/hass_writer
    {
      "full_name": "Home Assistant Writer",
      "password": "changeme",
      "roles": ["hass_writer"]
    }

This component is configured interactively via Home Assistant's integration configuration page.

- Restart Home-assistant once you've completed the installation instructions above.

- From the

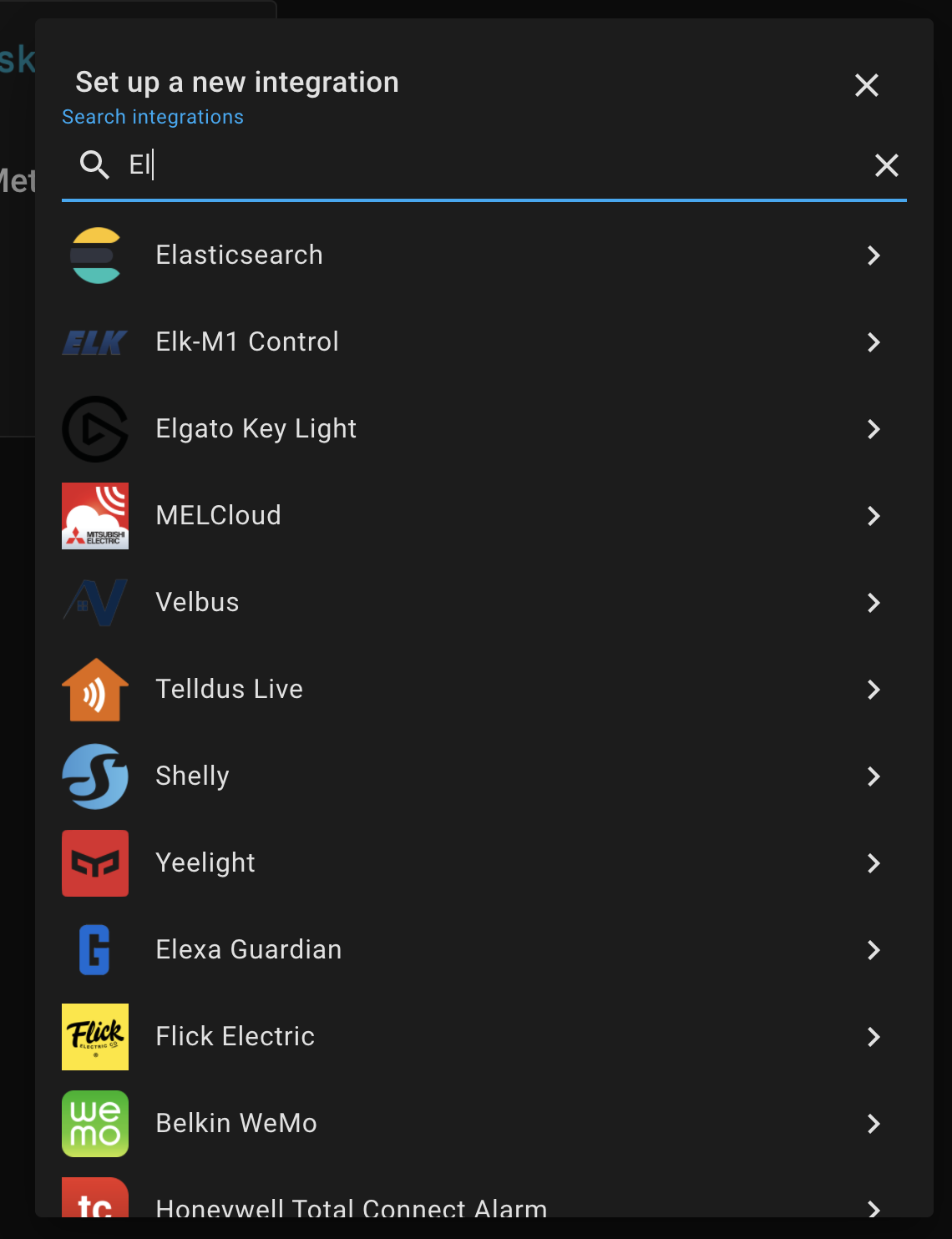

Integrationsconfiguration menu, add a newElasticsearchintegration.

- Select the appropriate authentication method

- Provide connection information and optionally credentials to begin setup.

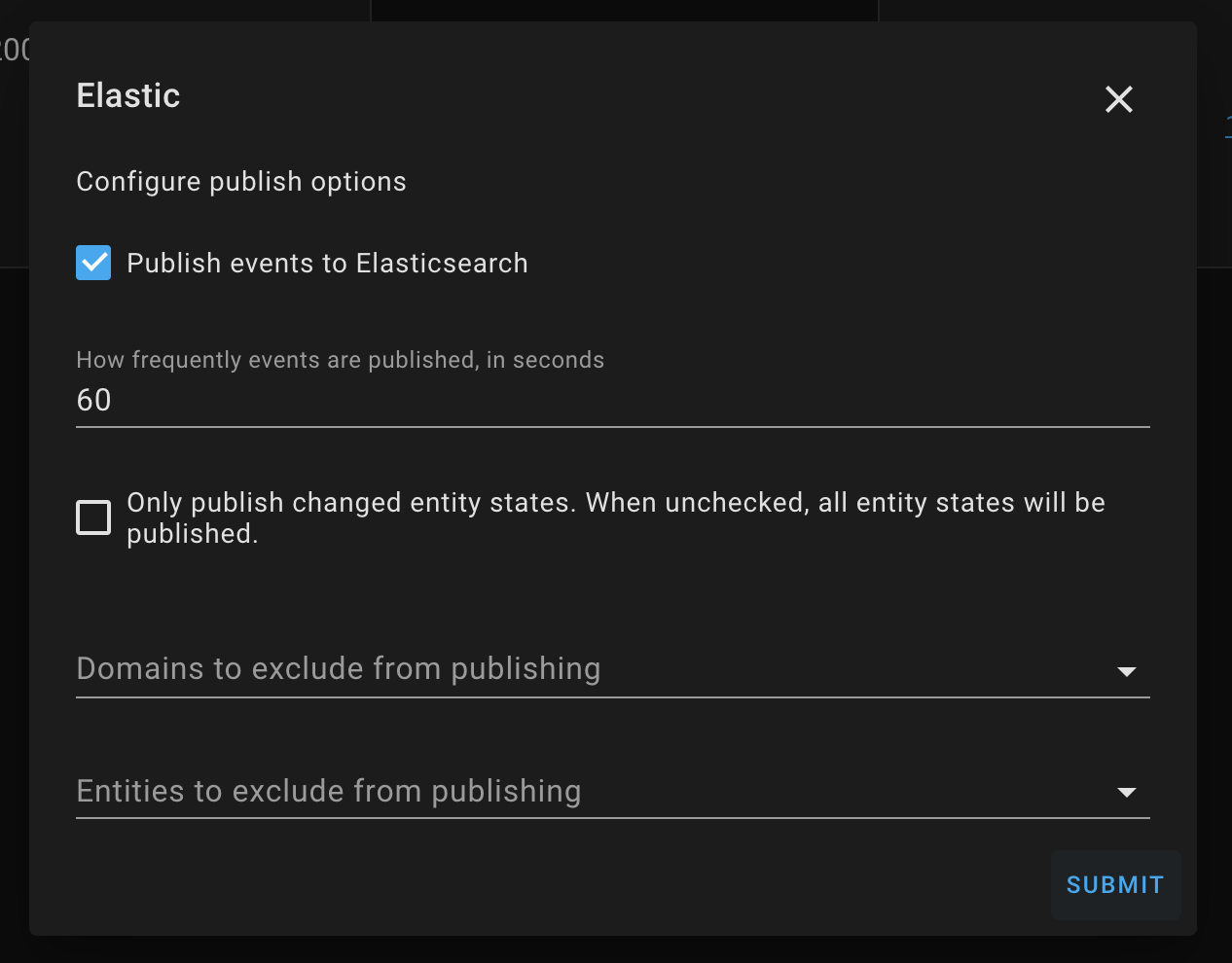

- Depending on the version of your Elasticsearch cluster, you may be offered a choice between Datastreams (preferred) and legacy indexing.

- Once the integration is set up, you may tweak all settings via the "Options" button on the integrations page.

When in Datastream mode (the default for Elasticsearch 8.7+), you can customize the mappings and settings and define an ingest pipeline by creating a component template called metrics-homeassistant@custom.

The following is an example of how to push your Home Assistant metrics into an ingest pipeline called metrics-homeassistant-pipeline:

    PUT _ingest/pipeline/metrics-homeassistant-pipeline
    {
      "description": "Pipeline for HomeAssistant dataset",
      "processors": [ ]
    }

    PUT _component_template/metrics-homeassistant@custom
    {
      "template": {
        "mappings": {},
        "settings": {
          "index.default_pipeline": "metrics-homeassistant-pipeline"
        }
      }
    }

Component template changes apply when the datastream performs a rollover, so the first time you modify the template you may need to manually initiate an ILM rollover to start applying the pipeline.
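A manual rollover is a `POST` to the `_rollover` endpoint of the data stream. The sketch below only constructs such a request, so it can be inspected before anything is sent. The helper name `build_rollover_request`, the base URL, and the data stream name `metrics-homeassistant.example-default` are placeholders; substitute the name of the data stream that actually exists in your cluster.

```python
import urllib.request


def build_rollover_request(base_url, data_stream, headers=None):
    """Build (but do not send) a rollover request for a data stream (illustrative only)."""
    url = f"{base_url.rstrip('/')}/{data_stream}/_rollover"
    return urllib.request.Request(url, method="POST", headers=headers or {})


# Placeholder URL and data stream name; replace both with your own values.
request = build_rollover_request("https://example.com", "metrics-homeassistant.example-default")
print(request.get_method(), request.full_url)

# To actually trigger the rollover, send the request with suitable credentials:
# urllib.request.urlopen(request)
```

The same request can be issued from Kibana's Dev Tools console as `POST <data stream name>/_rollover`.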

Versions prior to 0.6.0 included a cluster health sensor. This has been removed in favor of a more generic approach. You can create your own cluster health sensor by using Home Assistant's built-in REST sensor.
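As a rough picture of what such a sensor reads, the sketch below takes a response shaped like the output of `/_cluster/health`, uses the `status` field as the sensor state, and copies a chosen set of fields as attributes. The payload is a made-up sample with only a few fields, and `read_cluster_health` is a hypothetical helper, not part of Home Assistant.

```python
import json

# Illustrative payload only; a real cluster returns more fields and its own values.
sample_response = json.dumps({
    "cluster_name": "example-cluster",
    "status": "green",
    "timed_out": False,
    "number_of_nodes": 1,
    "unassigned_shards": 0,
})


def read_cluster_health(raw_json, attribute_names):
    """Extract the state and selected attributes from a cluster health response."""
    payload = json.loads(raw_json)
    # The sensor state is the cluster status: green, yellow, or red.
    state = payload["status"]
    # Keep only the requested attributes that are present in the response.
    attributes = {name: payload[name] for name in attribute_names if name in payload}
    return state, attributes


state, attributes = read_cluster_health(
    sample_response, ["cluster_name", "number_of_nodes", "active_shards"]
)
print(state, attributes)
```

Attributes that are requested but missing from the response, such as `active_shards` in this sample, are simply skipped.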

    # Example configuration
    sensor:
      - platform: rest
        name: "Cluster Health"
        unique_id: "cluster_health" # Replace with your own unique id. See https://www.home-assistant.io/integrations/sensor.rest#unique_id
        resource: "https://example.com/_cluster/health" # Replace with your Elasticsearch URL
        username: hass # Replace with your username
        password: changeme # Replace with your password
        value_template: "{{ value_json.status }}"
        json_attributes: # Optional attributes you may want to include from the /_cluster/health API response
          - "cluster_name"
          - "status"
          - "timed_out"
          - "number_of_nodes"
          - "number_of_data_nodes"
          - "active_primary_shards"
          - "active_shards"
          - "relocating_shards"
          - "initializing_shards"
          - "unassigned_shards"
          - "delayed_unassigned_shards"
          - "number_of_pending_tasks"
          - "number_of_in_flight_fetch"
          - "task_max_waiting_in_queue_millis"
          - "active_shards_percent_as_number"

This project is not endorsed or supported by either Elastic or Home-Assistant - please open a GitHub issue for any questions, bugs, or feature requests.

Contributions are welcome! Please see the Contributing Guide for more information.