Hacky Python scripts for downloading your Twitter likes and converting them to HTML. Includes support for downloading user avatars and image media in tweets. Scrapes tweets using the GraphQL API powering Twitter.com: the equivalent of scrolling through all your likes in your web browser, only saved locally forever!

Note: currently only the liked tweet itself is downloaded. If it is a quote tweet, the quoted (RT'd) tweet is not downloaded; if it is a reply or part of a thread, the other tweets in the conversation are not downloaded.

Meant to at least get you started on taking your liked tweets offline from Twitter, if not on building something much better!
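Conceptually, "scrolling through all your likes" means requesting one page of likes at a time and following a cursor to the next page until none is left. The sketch below illustrates that loop under stated assumptions. `fetch_page` is a hypothetical callable, not a function from these scripts. It takes the current cursor (`None` for the first page) and returns a `(tweets, next_cursor)` tuple. The sketch does not reproduce the real GraphQL request or response format.

```python
from typing import Callable, Iterator, List, Optional, Tuple

# Assumed page shape for illustration: (tweets_on_this_page, next_cursor_or_None).
# This is NOT Twitter's actual response format.
Page = Tuple[List[dict], Optional[str]]


def iter_all_likes(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Yield every liked tweet by following cursors until no further page exists."""
    cursor: Optional[str] = None
    page_number = 1
    while True:
        # Mirrors the progress lines shown in the sample output further down.
        print(f"Fetching likes page: {page_number}...")
        tweets, next_cursor = fetch_page(cursor)
        yield from tweets
        # Stop on an empty page, a missing cursor, or a cursor that did not advance.
        if not tweets or next_cursor is None or next_cursor == cursor:
            break
        cursor = next_cursor
        page_number += 1
```

In a real scraper, `fetch_page` would wrap the authenticated request to the `/Likes` endpoint. The three stop conditions are defensive choices, so the loop cannot spin forever if the API keeps returning the same cursor.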

Intended for use with Python 3.7. First, install the requirements (it's just requests):

    pip install -r requirements.txt

Next, you'll need to populate the config.json file with credentials that match how your web browser requests your likes from Twitter.com:

- Open your web browser and make sure the Network tab of its developer tools is open, so you can inspect network requests.
- Navigate to https://twitter.com/<your_user_handle>/likes
- Look for a network request to an api.twitter.com domain path ending in /Likes
- From the request headers:
  a. Copy the Authorization value and save it as HEADER_AUTHORIZATION in config.json
  b. Copy the Cookie value and save it as HEADER_COOKIES in config.json
  c. Copy the x-csrf-token value and save it as HEADER_CSRF in config.json
- Find your Twitter user ID (available in the /Likes request params, or elsewhere) and save it as USER_ID in config.json
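The three copied values are ordinary HTTP request headers. Below is a minimal sketch of mapping them back into headers, assuming a config.json with the keys listed above. `build_like_request_headers` is a hypothetical helper and not necessarily how download_tweets.py does it. USER_ID is not a header, so it is left out.

```python
from typing import Dict


def build_like_request_headers(config: Dict[str, str]) -> Dict[str, str]:
    """Map the values copied from the browser back onto the headers they came from."""
    # HTTP header names are case-insensitive, so lowercase names are fine.
    # .strip() drops stray whitespace or newlines picked up while copy-pasting.
    return {
        "authorization": config["HEADER_AUTHORIZATION"].strip(),
        "cookie": config["HEADER_COOKIES"].strip(),
        "x-csrf-token": config["HEADER_CSRF"].strip(),
    }
```

For example, `build_like_request_headers(json.load(open("config.json")))` would give a dict that can be passed as the `headers` argument of a `requests` call.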

By default, liked tweets will be saved to a file named liked_tweets.json in this repo's folder. To override this, set a new path as OUTPUT_JSON_FILE_PATH in config.json.
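Here is a minimal sketch of loading config.json with the defaults described in this README. OUTPUT_JSON_FILE_PATH falls back to liked_tweets.json, and images are downloaded unless DOWNLOAD_IMAGES is false. `load_config`, `REQUIRED_KEYS` and `DEFAULTS` are hypothetical names for illustration; the real scripts may read the file differently.

```python
import json
from typing import Any, Dict

# Keys that must be filled in from the browser (see the steps above).
REQUIRED_KEYS = ("HEADER_AUTHORIZATION", "HEADER_COOKIES", "HEADER_CSRF", "USER_ID")
# Defaults mirroring this README; a relative path resolves against the current directory.
DEFAULTS: Dict[str, Any] = {"OUTPUT_JSON_FILE_PATH": "liked_tweets.json", "DOWNLOAD_IMAGES": True}


def load_config(path: str = "config.json") -> Dict[str, Any]:
    """Read config.json, check required values are present, and apply defaults."""
    with open(path, encoding="utf-8") as f:
        config = json.load(f)
    missing = [key for key in REQUIRED_KEYS if not config.get(key)]
    if missing:
        raise ValueError(f"config.json is missing values for: {', '.join(missing)}")
    # Values set in config.json take precedence over the defaults.
    return {**DEFAULTS, **config}
```

Failing early on missing credentials gives a clearer error than a rejected request halfway through fetching pages.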

Run as follows:

    python download_tweets.py

This should produce output like the following:

    Starting retrieval of likes for Twitter user 1234...
    Fetching likes page: 1...
    Fetching likes page: 2...
    Fetching likes page: 3...
    Done. Likes JSON saved to: liked_tweets.json

The output JSON will be a list of dictionaries like the following:

    [
      {
        "tweet_id": "780770946428829696",
        "user_id": "265447323",
        "user_handle": "LeahTiscione",
        "user_name": "Leah Tiscione",
        "user_avatar_url": "https://pbs.twimg.com/profile_images/1563330281838284805/aUtIY2vj_normal.jpg",
        "tweet_content": "What are you hiding in your locked instagram? sandwiches? Sunsets???? let us see your nephew!!!!",
        "tweet_media_urls": [],
        "tweet_created_at": "Sun Mar 13 15:16:45 +0000 2011"
      }
    ]

If you want your tweets as a local HTML file, you can run the second script to convert the output JSON file from the step above.
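To give a feel for what that conversion involves, here is a minimal sketch, not the actual parse_tweets_json_to_html.py, that turns the list of dictionaries shown above into a single HTML page. It assumes every tweet has the user_name, user_handle, tweet_content and tweet_created_at fields from the sample. It parses tweet_created_at with the format that matches the sample value and sorts newest first. `render_likes_html` is a hypothetical name.

```python
import html
from datetime import datetime
from typing import List

# Matches the sample "tweet_created_at" value, e.g. "Sun Mar 13 15:16:45 +0000 2011".
CREATED_AT_FORMAT = "%a %b %d %H:%M:%S %z %Y"


def render_likes_html(tweets: List[dict]) -> str:
    """Return one HTML page listing the liked tweets, newest first."""
    tweets = sorted(
        tweets,
        key=lambda t: datetime.strptime(t["tweet_created_at"], CREATED_AT_FORMAT),
        reverse=True,
    )
    items = []
    for t in tweets:
        # Escape every value taken from the JSON so tweet text cannot inject markup.
        items.append(
            "<li>"
            f"<strong>{html.escape(t['user_name'])}</strong> "
            f"@{html.escape(t['user_handle'])}<br>"
            f"{html.escape(t['tweet_content'])}<br>"
            f"<small>{html.escape(t['tweet_created_at'])}</small>"
            "</li>"
        )
    return (
        '<!DOCTYPE html>\n<html><head><meta charset="utf-8"></head><body><ul>\n'
        + "\n".join(items)
        + "\n</ul></body></html>\n"
    )
```

For example, load the list with `json.load` from your OUTPUT_JSON_FILE_PATH, pass it to `render_likes_html`, and write the returned string to an .html file opened with `encoding="utf-8"`. The UTF-8 encoding preserves emoji in tweets, and escaping matters because tweet text can contain characters such as `<` and `&`.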

NOTE: By default, the conversion script will attempt to download all media images and tweet author avatars locally, to avoid relying on Twitter hosting. To disable this, set the DOWNLOAD_IMAGES boolean in config.json to false.

- Be sure the

OUTPUT_JSON_FILE_PATHvalue inconfig.jsonis pointing to the output JSON file of your tweets. - Run:

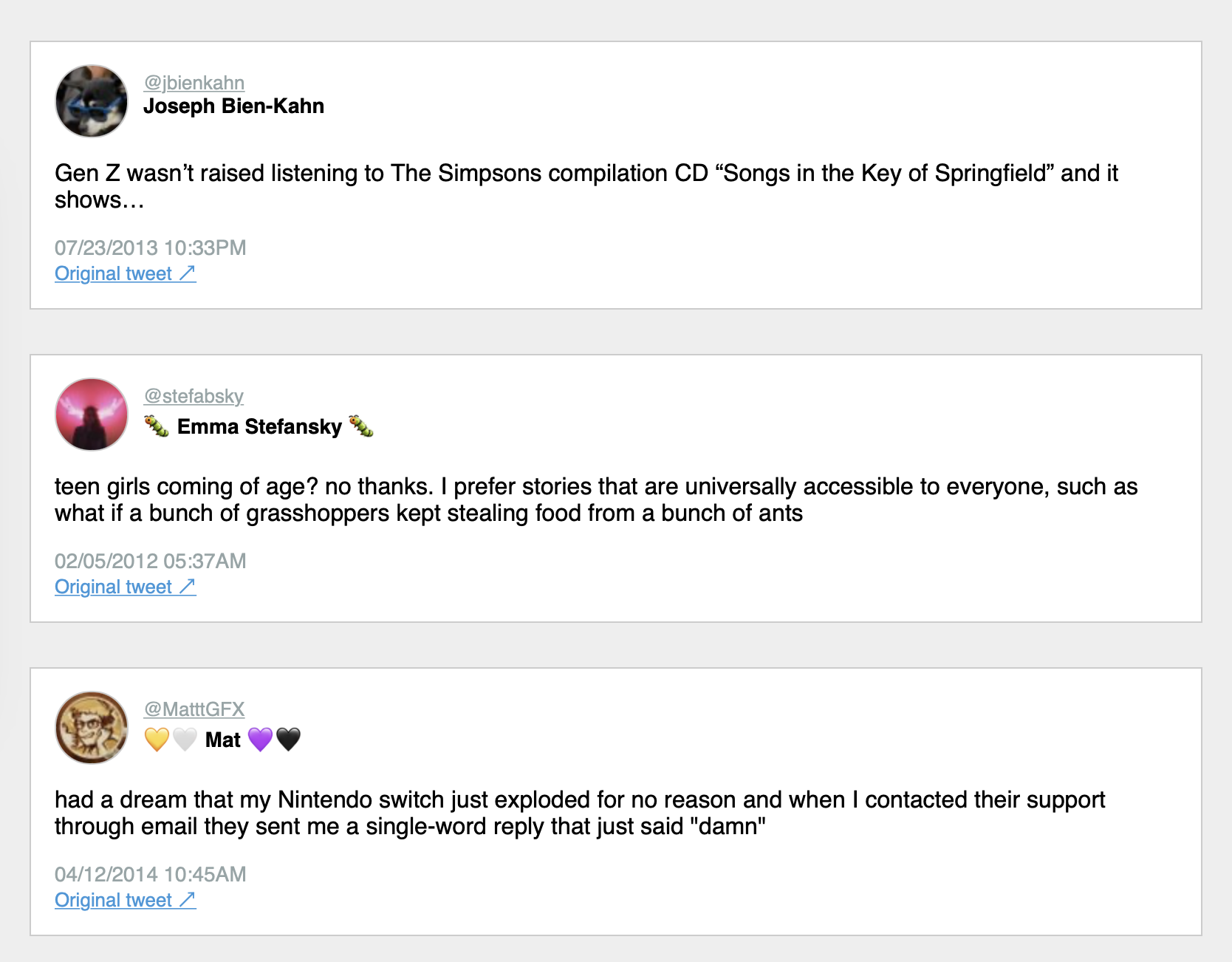

python parse_tweets_json_to_html.pyThis will download all images (if specified; saved to tweet_likes_html/images) and construct an HTML file at tweet_likes_html/index.html containing all liked tweets, as well as individual HTML files within tweet_likes_html/tweets/.
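Saving media locally comes down to mapping each remote URL to a file under tweet_likes_html/images and fetching it once. Here is a minimal sketch using only the standard library; the real script may use requests instead. `local_image_path` and `download_image` are hypothetical helpers. A converter following this approach would only call `download_image` when DOWNLOAD_IMAGES is true, and would otherwise keep linking to the original URL.

```python
import os
import shutil
import urllib.request
from urllib.parse import urlparse

IMAGES_DIR = os.path.join("tweet_likes_html", "images")


def local_image_path(url: str, images_dir: str = IMAGES_DIR) -> str:
    """Map an image URL to a path under images_dir named after the URL's last path segment."""
    # urlparse drops any query string (e.g. "?name=small") before taking the filename.
    filename = os.path.basename(urlparse(url).path)
    return os.path.join(images_dir, filename)


def download_image(url: str, images_dir: str = IMAGES_DIR) -> str:
    """Download url unless it is already on disk, and return the local path."""
    path = local_image_path(url, images_dir)
    if not os.path.exists(path):
        os.makedirs(images_dir, exist_ok=True)
        with urllib.request.urlopen(url) as response, open(path, "wb") as out:
            shutil.copyfileobj(response, out)
    return path
```

Naming files by the last URL path segment keeps them recognizable and lets reruns skip files that were already downloaded. The cost is possible collisions if two different URLs end in the same filename.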