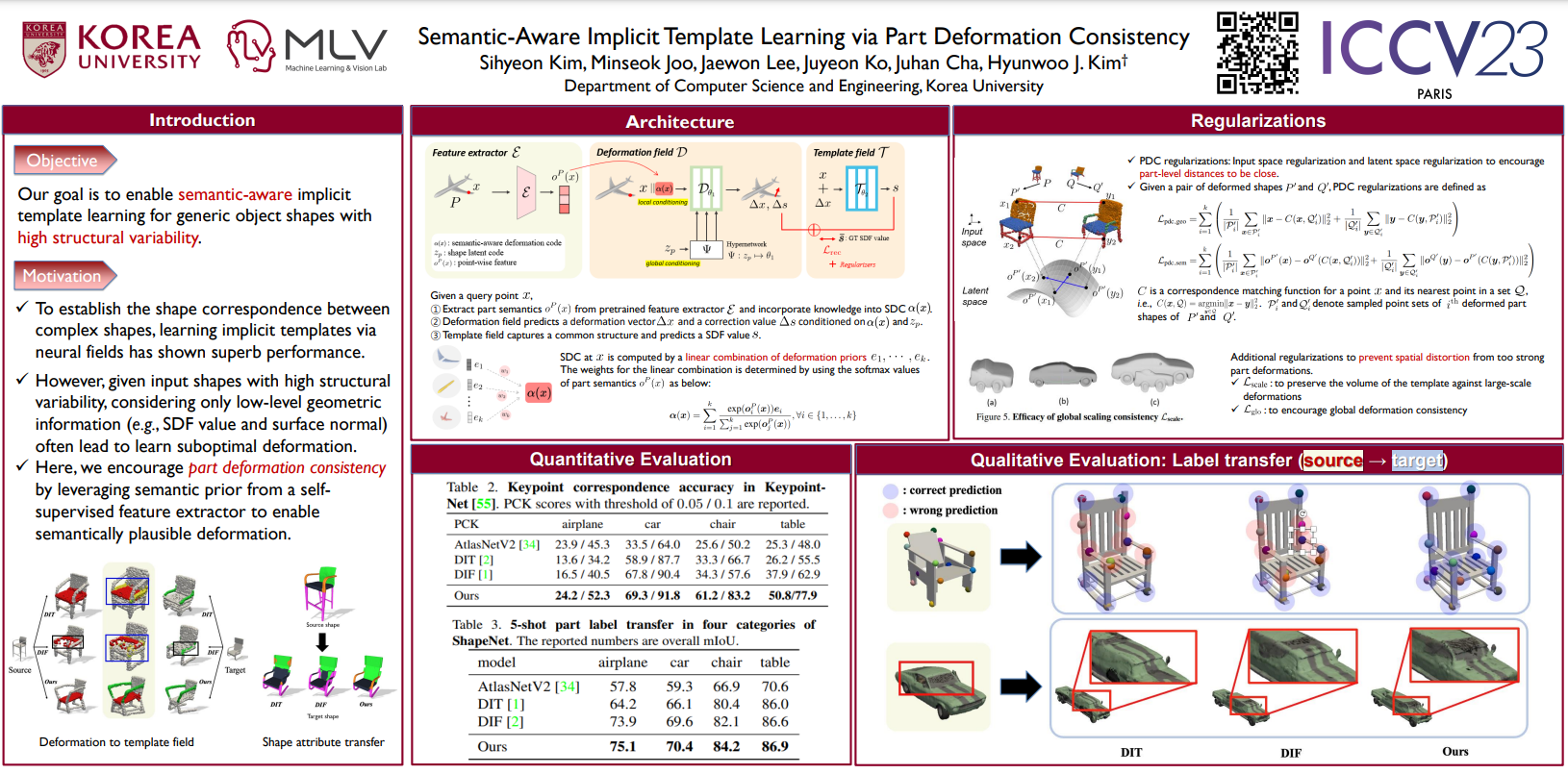

Official implementation of the ICCV 2023 paper "Semantic-Aware Implicit Template Learning via Part Deformation Consistency" by Sihyeon Kim, Minseok Joo, Jaewon Lee, Juyeon Ko, Juhan Cha, and Hyunwoo J. Kim.

We evaluate unsupervised dense correspondence between shapes with various surrogate tasks.

- Clone the repository and set up the conda environment

git clone https://github.com/mlvlab/PDC.git

cd PDC

conda create -n PDC python=3.8

conda activate PDC

- Install the packages that require manual setup (install a PyTorch build compatible with your CUDA environment)

pip install torch==1.8.1+cu111 torchvision==0.9.1+cu111 -f https://download.pytorch.org/whl/torch_stable.html

pip install setuptools==59.5.0

Additionally, install torchmeta (we used an earlier version, 1.4.0) by following the instructions in the DIF repository, and install PyTorch3D as described in its official GitHub repository.

- Install the rest of the requirements

pip install -r requirements.txt

For data preprocessing, we follow DIF and use mesh_to_sdf to generate SDF values, surface points, and normal vectors from the original meshes. See the DIF repository for example SDF datasets for ShapeNetV2.
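To make the three preprocessed quantities concrete, here is a toy sketch that computes them analytically for a sphere instead of a mesh. It does not call mesh_to_sdf and does not reproduce DIF's file layout; the function name `sample_sphere_sdf`, the sampling cube, and the array shapes are illustrative assumptions only.

```python
import numpy as np


def sample_sphere_sdf(n_surface=1000, n_space=1000, radius=0.5, seed=0):
    """Toy stand-in for mesh-based SDF preprocessing (hypothetical helper).

    Uses an analytic sphere centered at the origin and returns:
      surface_pts: (n_surface, 3) points on the surface
      normals:     (n_surface, 3) outward unit normals at those points
      space_pts:   (n_space, 3) query points sampled in the cube [-1, 1]^3
      sdf:         (n_space,) signed distance per query point (negative inside)
    """
    rng = np.random.default_rng(seed)
    # Random directions on the unit sphere.
    dirs = rng.normal(size=(n_surface, 3))
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
    surface_pts = radius * dirs
    # For a sphere at the origin, the outward normal is the unit direction.
    normals = dirs
    # Free-space query points and their exact signed distances.
    space_pts = rng.uniform(-1.0, 1.0, size=(n_space, 3))
    sdf = np.linalg.norm(space_pts, axis=1) - radius
    return surface_pts, normals, space_pts, sdf
```

For real ShapeNet meshes these values come from mesh_to_sdf as described above; the sphere only shows what each array means.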

python train.py --config=configs/train/<category>.yml

We provide pretrained BAE-Net weights for each category in pretrained_model/pn; these weights can then be used to train the PDC model. For BAE-Net training details, please refer to the supplementary material of our paper.

We provide evaluation code for keypoint transfer and part label transfer in the evaluate directory.
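Once points from two shapes have been mapped into the shared template space, one common way to use the correspondence is nearest-neighbor search in that space. The sketch below assumes this approach; the function names are hypothetical, the inputs are assumed to already be template-space (deformed) coordinates, and it is not necessarily how the code in evaluate implements the transfer.

```python
import numpy as np


def nearest_indices(queries, targets):
    """Index of the closest target point for each query (brute-force Euclidean)."""
    # Pairwise squared distances, shape (n_queries, n_targets).
    d2 = ((queries[:, None, :] - targets[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)


def transfer_keypoints(src_kp_template, tgt_pts_template, tgt_pts):
    """Map source keypoints to target points via nearest neighbors in template space.

    Returns the predicted keypoints in the target's original coordinates.
    """
    idx = nearest_indices(src_kp_template, tgt_pts_template)
    return tgt_pts[idx]


def transfer_part_labels(src_pts_template, src_labels, tgt_pts_template):
    """Assign each target point the label of its nearest source point in template space."""
    idx = nearest_indices(tgt_pts_template, src_pts_template)
    return src_labels[idx]
```

The brute-force distance matrix keeps the sketch dependency-free; for dense point clouds a KD-tree would be the usual replacement.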

See evaluate/evaluation.ipynb. After extracting the deformed coordinates, you can compute the PCK score for keypoint transfer and mIoU for part label transfer.
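For reference, a minimal sketch of the two metrics under common definitions: PCK as the fraction of predicted keypoints within a distance threshold of the ground truth, and mIoU as the mean per-part intersection-over-union. The threshold value and the set of parts averaged over are assumptions here; the notebook's exact conventions may differ.

```python
import numpy as np


def pck(pred_kp, gt_kp, threshold):
    """Fraction of predicted keypoints within `threshold` (Euclidean) of ground truth."""
    dist = np.linalg.norm(pred_kp - gt_kp, axis=-1)
    return float((dist <= threshold).mean())


def mean_iou(pred_labels, gt_labels):
    """Mean IoU over the part labels that appear in either prediction or ground truth."""
    ious = []
    for c in np.union1d(pred_labels, gt_labels):
        p, g = pred_labels == c, gt_labels == c
        # The union is non-empty because c occurs in pred or gt.
        ious.append(np.logical_and(p, g).sum() / np.logical_or(p, g).sum())
    return float(np.mean(ious))
```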

You can test the Jupyter notebook with the example datasets provided in examples/chair_kp and examples/chair_ptl.

The IDs of the source shapes we used for each task are listed in evaluate/evaluation_source_list.txt.

04.10.23. Initial code release

10.11.23. Main code release

15.04.24. Refine code and add utilities

19.04.24. Add pretrained BAE-Net weights and evaluation code/data

This repo is based on SIREN and DIF.

We especially thank Yu Deng, the author of DIF, for helping us with data preprocessing!

@inproceedings{kim2023semantic,
  title={Semantic-Aware Implicit Template Learning via Part Deformation Consistency},
  author={Kim, Sihyeon and Joo, Minseok and Lee, Jaewon and Ko, Juyeon and Cha, Juhan and Kim, Hyunwoo J},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  year={2023}
}

Code is released under the MIT License.

Copyright (c) 2023-present Korea University Research and Business Foundation & MLV Lab