This project provides educational material for the Open Neural Network Exchange (ONNX). These materials should be useful for data scientists and engineers who plan to use ONNX models in their AI projects. The notebooks will help you find answers to the following questions:

- How to convert SKLearn, XGBoost, and Tensorflow (Keras) classifiers into the ONNX format?

- What is the difference between the original SKLearn, XGBoost, and Tensorflow (Keras) models and their ONNX representation?

- Where to find and how to use already trained and serialized ONNX models?

- How to visualize the ONNX graph?

Note: These notebooks are not about data cleaning, feature selection, model optimization, etc. These notebooks are about exploring different aspects of using ONNX models! ☝️

You might be asking: why do we need this project if we already have excellent documentation here? I believe the best way to learn something new is to try the examples yourself. So I followed the existing documentation, repeated its examples, and introduced some new steps. This way I learned the technology and can share my experience with others.


Clone this project:

```bash
~$ git clone https://github.com/mmgalushka/onnx-hello-world.git
```

Initialize the virtual environment:

```bash
~$ cd onnx-hello-world
~$ ./helper.sh init
```


Open the project in VS Code:

```bash
~$ code .
```

Note: You can use any other Python environment to work with this project. If you decide to use the recommended VS Code, make sure you install the following extensions:

Let's start with a quote that defines the goal of the ONNX project:

"Our goal is to make it possible for developers to use the right combinations of tools for their project. We want everyone to be able to take AI from research to reality as quickly as possible without artificial friction from toolchains."

Developers and data scientists, from big enterprises to small startups, tend to choose different ML frameworks depending on which best suits their problem. For example, if we need to design a model for boosting sales based on structured customer data, it makes sense to use the SKLearn framework (at least as a starting point). However, if we are designing a computer vision model for classifying shopping items from a photograph, the likely choice would be TensorFlow or PyTorch. This is the flexibility data scientists want, but it comes with many challenges. All these ML frameworks have different:

- languages;

- approaches for solving the same problems;

- terminology;

- software and hardware requirements;

- etc.

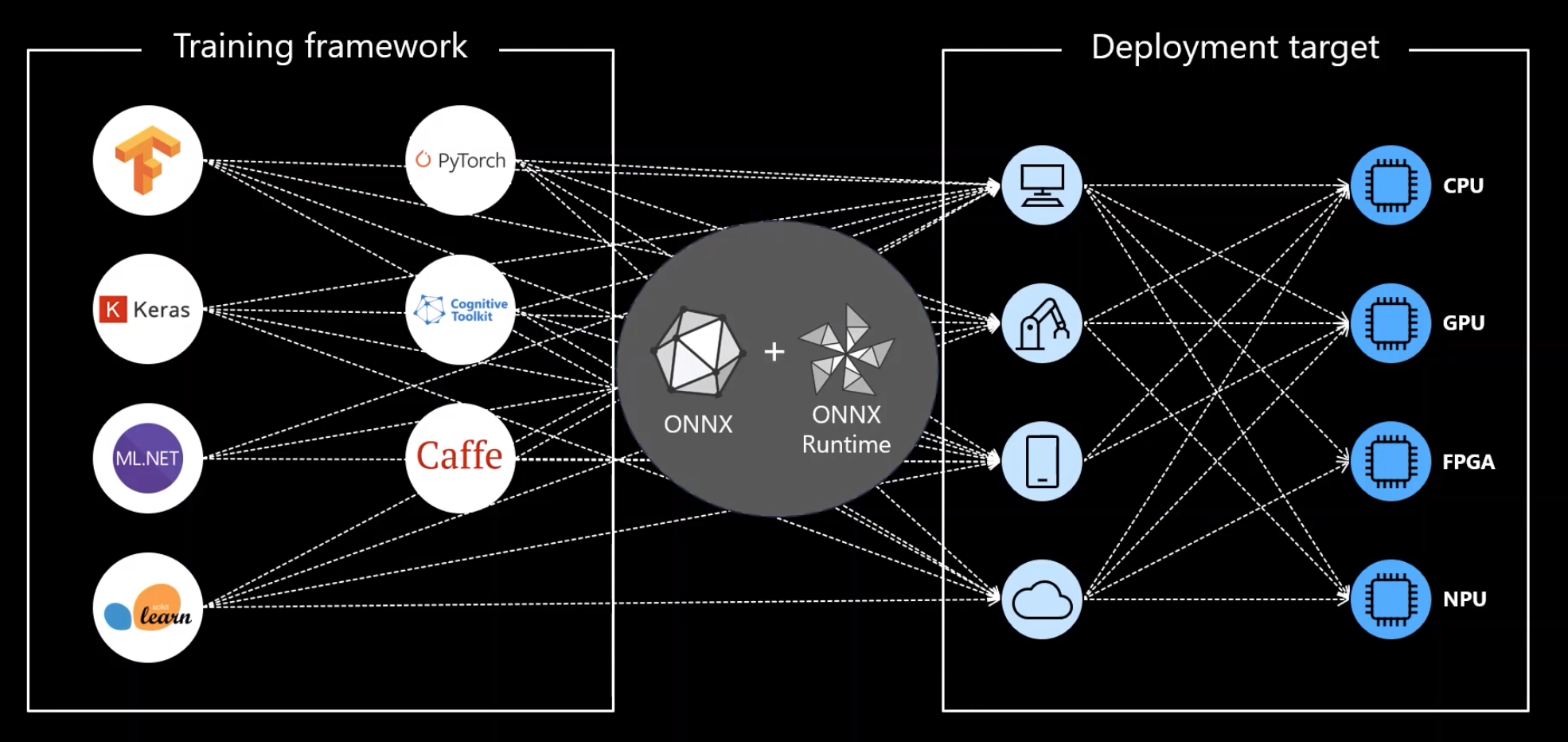

Such a diversity of ML frameworks creates a headache for engineers during the deployment phase. This is where ONNX can be an excellent solution: on the one hand, it lets us create a model with the ML framework of our choice, and on the other, it streamlines the deployment process. The visualization from this presentation captures the benefits of using ONNX well.

Most ML frameworks now have converters to the ONNX format. In this study, we explored the conversion of SKLearn, XGBoost, and Tensorflow models. You can convert a model to ONNX and serialize it as easily as this:

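```python
from skl2onnx import convert_sklearn
from skl2onnx.common.data_types import FloatTensorType

# Converts the model to the ONNX format. `my_model` is a fitted SKLearn model;
# the input is declared as a float tensor with a variable batch size and,
# for illustration only, 4 features (adjust the shape to your data).
onnx_model = convert_sklearn(
    my_model, initial_types=[("input", FloatTensorType([None, 4]))]
)

# Serializes the ONNX model to the file.
with open("my_model.onnx", "wb") as f:
    f.write(onnx_model.SerializeToString())
```

To run your model, you just need ONNX Runtime: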

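```python
import numpy as np
import onnxruntime as rt

# Creates a session for running predictions.
sess = rt.InferenceSession("my_model.onnx", providers=["CPUExecutionProvider"])

# Makes a prediction. Inputs are passed as a dict keyed by the model's input
# name; `X` stands for your data as a float32 array (4 features assumed here).
input_name = sess.get_inputs()[0].name
X = np.array([[5.1, 3.5, 1.4, 0.2]], dtype=np.float32)
y_pred, y_probas = sess.run(None, {input_name: X})
```

This example shows how to use the SKLearn model converter, but a similar approach applies to other frameworks. If you would like to learn more about ONNX, please follow this link.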
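The notebooks compare the original models with their ONNX counterparts. A small helper for such a comparison might look like the sketch below; the function name, the returned keys, and the default tolerance are our own assumptions, not part of any library. Because the converted model usually computes in float32 while SKLearn works in float64, small probability differences are to be expected. Note also that skl2onnx classifiers return probabilities as a list of dictionaries by default; passing `options={id(my_model): {"zipmap": False}}` to `convert_sklearn` should give a plain array instead.

```python
import numpy as np


def compare_predictions(ref_labels, ref_probas, onnx_labels, onnx_probas, atol=1e-4):
    """Summarize how closely ONNX outputs match the original model's outputs.

    ref_* come from the original framework (e.g. predict / predict_proba),
    onnx_* come from onnxruntime; probabilities must be 2-D arrays.
    """
    ref_labels, onnx_labels = np.asarray(ref_labels), np.asarray(onnx_labels)
    ref_probas = np.asarray(ref_probas, dtype=np.float64)
    onnx_probas = np.asarray(onnx_probas, dtype=np.float64)
    max_abs_diff = float(np.max(np.abs(ref_probas - onnx_probas)))
    return {
        # Fraction of samples where both models predict the same label.
        "label_agreement": float(np.mean(ref_labels == onnx_labels)),
        # Worst-case gap between the two probability matrices.
        "max_abs_diff": max_abs_diff,
        "probas_close": max_abs_diff <= atol,
    }
```

A typical call would be `compare_predictions(my_model.predict(X), my_model.predict_proba(X), y_pred, y_probas)`.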

This project includes the following studies (each study is a notebook exploring a different ML framework or model):

| Explore | Notebook | Problem | Summary |
|---|---|---|---|
| SKLearn | onnx_sklearm.ipynb | Tabular | SKLearn model training, conversion, and comparison with ONNX |
| XGBoost | onnx_xgboost.ipynb | Tabular | XGBoost model training, conversion, and comparison with ONNX |
| Tensorflow(Keras) | onnx_tensorflow.ipynb | Tabular | Tensorflow(Keras) model training, conversion, and comparison with ONNX |
| Resnet | onnx_resnet.ipynb | CV | Inference using ResNet (image classification) ONNX |
| MaskRCNN | onnx_maskrcnn.ipynb | CV | Inference using MaskRCNN (instance segmentation) ONNX |
| SSD | onnx_ssd.ipynb | CV | Inference using SSD (object detection) ONNX |
| BiDAF | onnx_bidaf.ipynb | NLP | Inference using BiDAF (Query/Answer) ONNX |

By looking into the GitHub repositories for different ONNX models, we can observe a common pattern. The process of loading and querying the model is the same for most models, but the processes for preparing queries and interpreting results differ. To simplify the use of published ONNX models, most authors provide two functions, preprocess and postprocess:

- the preprocess function takes raw data and transforms it into the input format (usually a NumPy array) used by the ML model.

- the postprocess function takes the model output and transforms it into a format suitable for end-user interpretation (for example, an image with rectangles drawn around the detected objects).
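The pattern can be sketched as a pair of plain NumPy functions. This is a hypothetical example for an ImageNet-style image classifier: it assumes an RGB image that has already been resized to the model's input size, the commonly used ImageNet mean/std normalization, and raw class scores as the model output. The exact steps for any published model are defined by its authors, so check its documentation before reusing them.

```python
from typing import List, Tuple

import numpy as np

# Assumed normalization constants (the ImageNet values used by many ResNet-style models).
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess(image: np.ndarray) -> np.ndarray:
    """Turn an HxWx3 uint8 RGB image into a 1x3xHxW float32 batch."""
    x = image.astype(np.float32) / 255.0  # scale pixels to [0, 1]
    x = (x - MEAN) / STD                  # per-channel normalization
    x = np.transpose(x, (2, 0, 1))        # HWC -> CHW
    return np.expand_dims(x, axis=0)      # add the batch dimension


def postprocess(scores: np.ndarray, top_k: int = 5) -> List[Tuple[int, float]]:
    """Turn raw 1xN class scores into (class index, probability) pairs."""
    logits = scores.reshape(-1)
    exp = np.exp(logits - logits.max())   # numerically stable softmax
    probs = exp / exp.sum()
    best = np.argsort(probs)[::-1][:top_k]  # indices of the top-k classes
    return [(int(i), float(probs[i])) for i in best]
```

Between the two calls, the model itself would be queried as in the ONNX Runtime example earlier, e.g. `scores = sess.run(None, {sess.get_inputs()[0].name: preprocess(image)})[0]`.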

There is one interesting observation from reading the ONNX documentation for different ML models. Some authors describe the model outputs like this: "The model has 3 outputs. boxes: (1x'nbox'x4) labels: (1x'nbox') scores: (1x'nbox')". It is not always clear, for example, what values are inside the bounding box "(1x'nbox'x4)": (x1, y1, x2, y2), (xc, yc, w, h), or something else. If the postprocess function is not provided, it might take a while to understand the meaning of some output values.
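To illustrate why the box layout matters, here is a minimal NumPy sketch that converts between the two conventions mentioned above. The helper names are our own, hypothetical ones, and the sketch only assumes that the last axis holds the four coordinates. The same box yields very different numbers in each layout, so a postprocess step written for one layout would draw the wrong rectangles if the model actually used the other.

```python
import numpy as np


def xyxy_to_cxcywh(boxes: np.ndarray) -> np.ndarray:
    """Convert corner boxes (x1, y1, x2, y2) to center boxes (xc, yc, w, h)."""
    x1, y1, x2, y2 = np.moveaxis(boxes, -1, 0)  # split along the last axis
    return np.stack([(x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1], axis=-1)


def cxcywh_to_xyxy(boxes: np.ndarray) -> np.ndarray:
    """Convert center boxes (xc, yc, w, h) back to corner boxes (x1, y1, x2, y2)."""
    xc, yc, w, h = np.moveaxis(boxes, -1, 0)
    return np.stack([xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2], axis=-1)


# A hypothetical (1 x nbox x 4) tensor holding a single box.
boxes = np.array([[[10.0, 20.0, 30.0, 60.0]]])
print(xyxy_to_cxcywh(boxes))  # → [[[20. 40. 20. 40.]]]
```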

Moving forward, it would be nice if the ONNX community adopted something like Google Model Cards to provide all the information relevant to the model itself and its usage.

As part of this study, we were able to convert SKLearn, XGBoost, and Tensorflow models to graphs using this notebook. We also attempted to convert much bigger models, such as ResNet and MaskRCNN. Unfortunately, for the larger models the graph computation took so long that we manually interrupted every attempt.

In terms of information, these graphs can be useful during the development and debugging phase. However, external users may find it quite challenging to understand from them how a specific model works. This is even harder for non-deep-learning models. For example, the entire ML logic of a Random Forest model is packaged into the "TreeEnsembleClassifier" node.

For Tensorflow(Keras), it might be better to use the native visualization tools. Even for a relatively simple model consisting of a few Dense layers, the ONNX graph looks rather cluttered compared to the native Keras visualization.

Another very good tool for visualizing ONNX models is Netron. You can try this tool online here. If you want to visualize your ONNX model with Netron inside this project, use the following command:

```bash
~$ ./helper.sh netron <path to your ONNX model>
```