Gluon implementations of several channel attention modules.

| Method | Paper |

Overview |

|---|---|---|

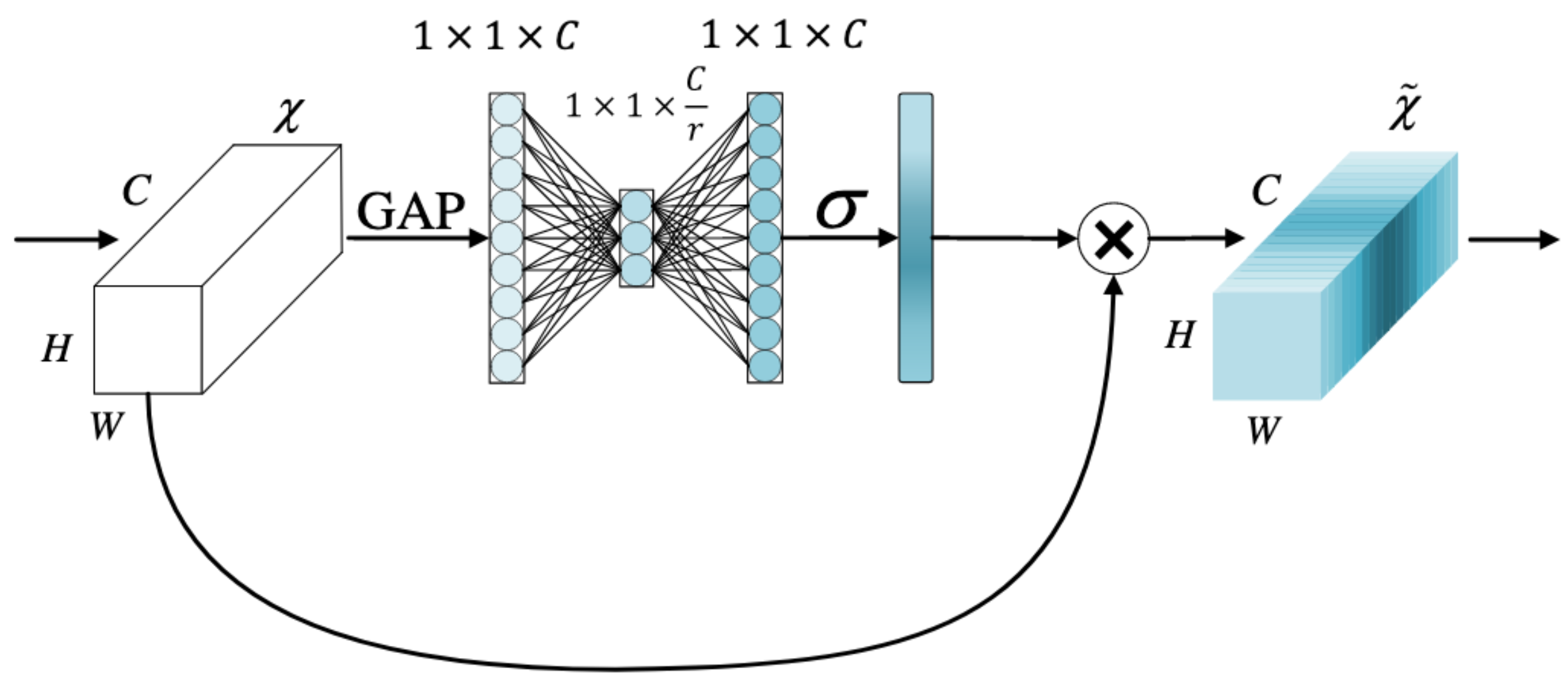

| SE | https://arxiv.org/abs/1709.01507 |  |

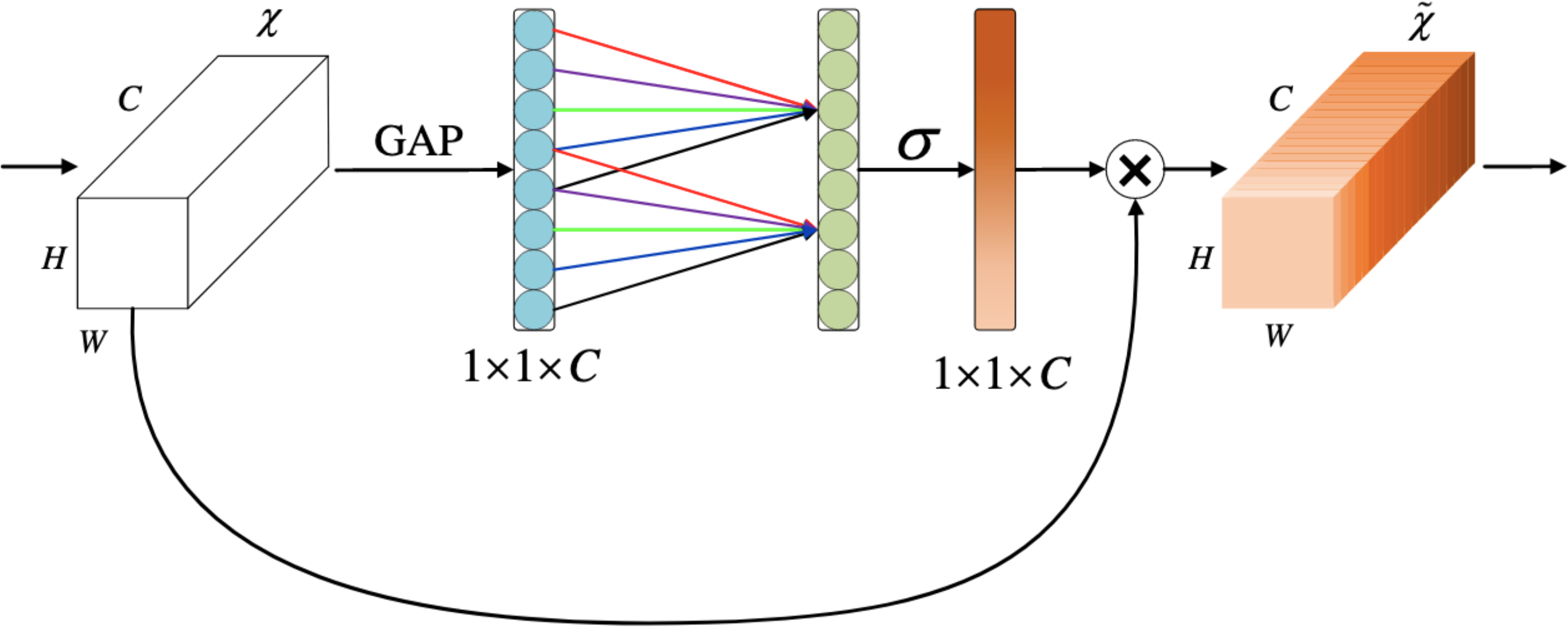

| ECA | https://arxiv.org/abs/1910.03151 |  |

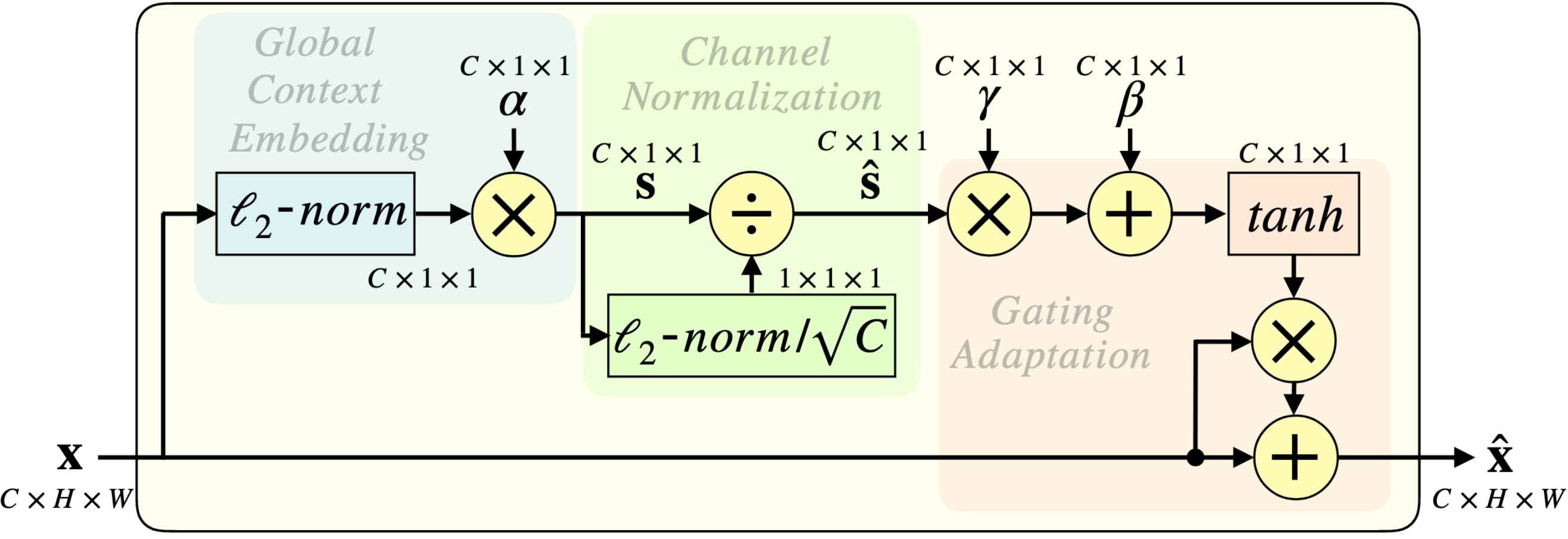

| GCT | https://arxiv.org/abs/1909.11519 |  |
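For orientation, the forward passes of the three modules can be sketched in plain Python on a single feature map stored as nested lists of shape `[C][H][W]`. This is a minimal, framework-free sketch based on the papers linked above, not the Gluon code in this repository. All function and parameter names are hypothetical, SE biases are omitted, and the ECA kernel-size rule (`gamma=2`, `b=1`) is the adaptive rule proposed in the ECA paper.

```python
import math

# Minimal, framework-free sketch (hypothetical names, not the repository's Gluon code).
# A feature map for one sample is a nested list of shape [C][H][W].

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def global_avg_pool(x):
    # Squeeze: one mean value per channel.
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]

def scale_channels(x, weights):
    # Multiply every element of channel c by weights[c].
    return [[[v * w for v in row] for row in ch] for ch, w in zip(x, weights)]

def se_block(x, w1, w2):
    # SE: GAP -> FC (C -> C/r) -> ReLU -> FC (C/r -> C) -> sigmoid -> rescale.
    # w1 has shape [C/r][C], w2 has shape [C][C/r]; biases omitted for brevity.
    z = global_avg_pool(x)
    h = [max(0.0, sum(a * b for a, b in zip(row, z))) for row in w1]
    s = [sigmoid(sum(a * b for a, b in zip(row, h))) for row in w2]
    return scale_channels(x, s)

def eca_kernel_size(channels, gamma=2, b=1):
    # Adaptive kernel size from the ECA paper, forced to be odd.
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1

def eca_block(x, kernel):
    # ECA: GAP -> 1-D convolution across channels (zero padding, no bias,
    # no dimensionality reduction) -> sigmoid -> rescale.
    z = global_avg_pool(x)
    c, pad = len(z), len(kernel) // 2
    s = []
    for i in range(c):
        acc = 0.0
        for j, k in enumerate(kernel):
            idx = i + j - pad
            if 0 <= idx < c:
                acc += k * z[idx]
        s.append(sigmoid(acc))
    return scale_channels(x, s)

def gct_block(x, alpha, gamma, beta, eps=1e-5):
    # GCT: l2 embedding per channel -> normalization by the RMS of the
    # embeddings across channels -> gate 1 + tanh(gamma * s_hat + beta) -> rescale.
    c = len(x)
    emb = [alpha[i] * math.sqrt(sum(v * v for row in x[i] for v in row) + eps)
           for i in range(c)]
    norm = math.sqrt(sum(e * e for e in emb) / c + eps)
    gate = [1.0 + math.tanh(gamma[i] * emb[i] / norm + beta[i]) for i in range(c)]
    return scale_channels(x, gate)
```

With all-zero SE or ECA weights every gate in this sketch equals `sigmoid(0) = 0.5`, while GCT with `gamma = beta = 0` reduces to the identity. Note that ECA replaces SE's two fully connected layers with a single small 1-D convolution, so its parameter count per block is just the kernel size.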

Example: training `cifar_resnet20_v1` with ECA attention on CIFAR-10:

```shell
python3 train_cifar10.py --mode hybrid --num-gpus 1 -j 8 --batch-size 128 \
    --num-epochs 186 --lr 0.003 --lr-decay 0.1 --lr-decay-epoch 81,122 \
    --wd 0.0001 --optimizer adam --random-crop \
    --model cifar_resnet20_v1 --attention eca
```
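The flags `--lr 0.003 --lr-decay 0.1 --lr-decay-epoch 81,122` describe a step learning-rate schedule. The sketch below computes the rate for a given epoch, assuming `train_cifar10.py` multiplies the rate by `--lr-decay` at the start of each listed epoch and counts epochs from 0. `step_lr` is a hypothetical helper for illustration, not part of the script.

```python
def step_lr(epoch, base_lr=0.003, decay=0.1, decay_epochs=(81, 122)):
    # Learning rate used during `epoch` (0-indexed), assuming the rate is
    # multiplied by `decay` once for every milestone epoch already reached.
    n_decays = sum(1 for e in decay_epochs if epoch >= e)
    return base_lr * decay ** n_decays

if __name__ == "__main__":
    # Print the rate around each milestone for --num-epochs 186 (epochs 0..185).
    for epoch in (0, 80, 81, 121, 122, 185):
        print(epoch, step_lr(epoch))
```

Under that assumption, the 186 epochs run at 0.003 for epochs 0 to 80, 0.0003 for epochs 81 to 121, and 0.00003 for epochs 122 to 185.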

| Model | Vanilla loss | Vanilla acc | SE loss | SE acc | ECA loss | ECA acc | GCT loss | GCT acc |
|---|---|---|---|---|---|---|---|---|
| cifar_resnet20_v1 | 0.0344 | 0.9171 | 0.0325 | 0.9161 | 0.0302 | 0.9189 | 0.0292 | 0.9150 |
| cifar_resnet20_v2 | 0.1088 | 0.9133 | 0.0316 | 0.9162 | 0.0328 | 0.9194 | 0.0354 | 0.9172 |
| cifar_resnet56_v1 | 0.0431 | 0.9154 | 0.0280 | 0.9238 | 0.0170 | 0.9243 | 0.0244 | 0.9238 |
| cifar_resnet56_v2 | 0.0629 | 0.9165 | 0.0268 | 0.9243 | 0.0235 | 0.9218 | 0.0330 | 0.9200 |
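To compare the modules at a glance, the sketch below computes each module's absolute accuracy change relative to the vanilla model, using the `acc` values copied from the table. The dictionary layout and the `gains` helper are hypothetical conveniences for illustration.

```python
# acc values copied from the table: (Vanilla, SE, ECA, GCT)
ACC = {
    "cifar_resnet20_v1": (0.9171, 0.9161, 0.9189, 0.9150),
    "cifar_resnet20_v2": (0.9133, 0.9162, 0.9194, 0.9172),
    "cifar_resnet56_v1": (0.9154, 0.9238, 0.9243, 0.9238),
    "cifar_resnet56_v2": (0.9165, 0.9243, 0.9218, 0.9200),
}
METHODS = ("SE", "ECA", "GCT")

def gains(acc):
    # Absolute accuracy change of each attention module vs. the vanilla model,
    # rounded to the table's 4 decimal places.
    base = acc[0]
    return {m: round(a - base, 4) for m, a in zip(METHODS, acc[1:])}

if __name__ == "__main__":
    for model, acc in ACC.items():
        g = gains(acc)
        print(model, g, "best:", max(g, key=g.get))
```

On these numbers ECA gives the largest accuracy gain for three of the four models and SE for `cifar_resnet56_v2`, while SE and GCT are slightly below vanilla on `cifar_resnet20_v1`.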