Official implementation of the CVPR 2021 paper (oral): arXiv Paper | GitHub Pages

M. Böhle, M. Fritz, B. Schiele. Convolutional Dynamic Alignment Networks for Interpretable Classifications. CVPR, 2021.


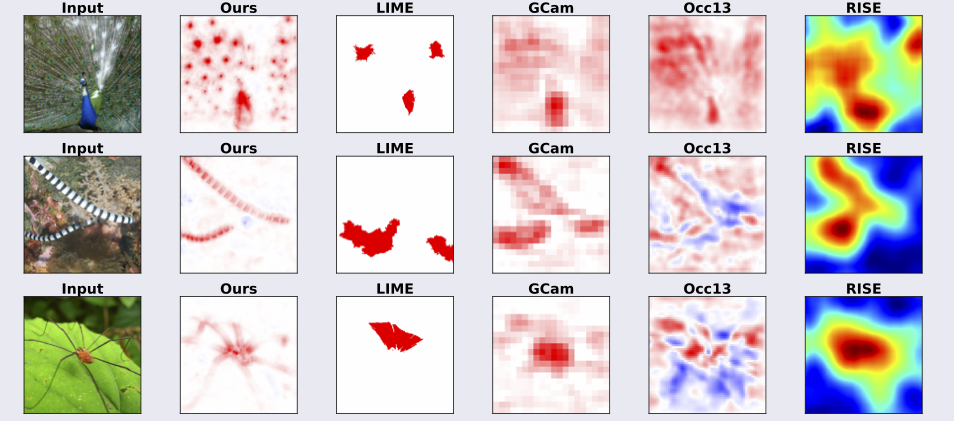

- In addition to the examples shown above, we present comparisons to post-hoc explanations evaluated on a CoDA-Net.

- To highlight the stability of the explanations, we also present contribution maps evaluated on videos here.

- For the video evaluation, see interpretability/eval_on_videos.py; for the other examples, check out the Jupyter notebook CoDA-Networks Examples.


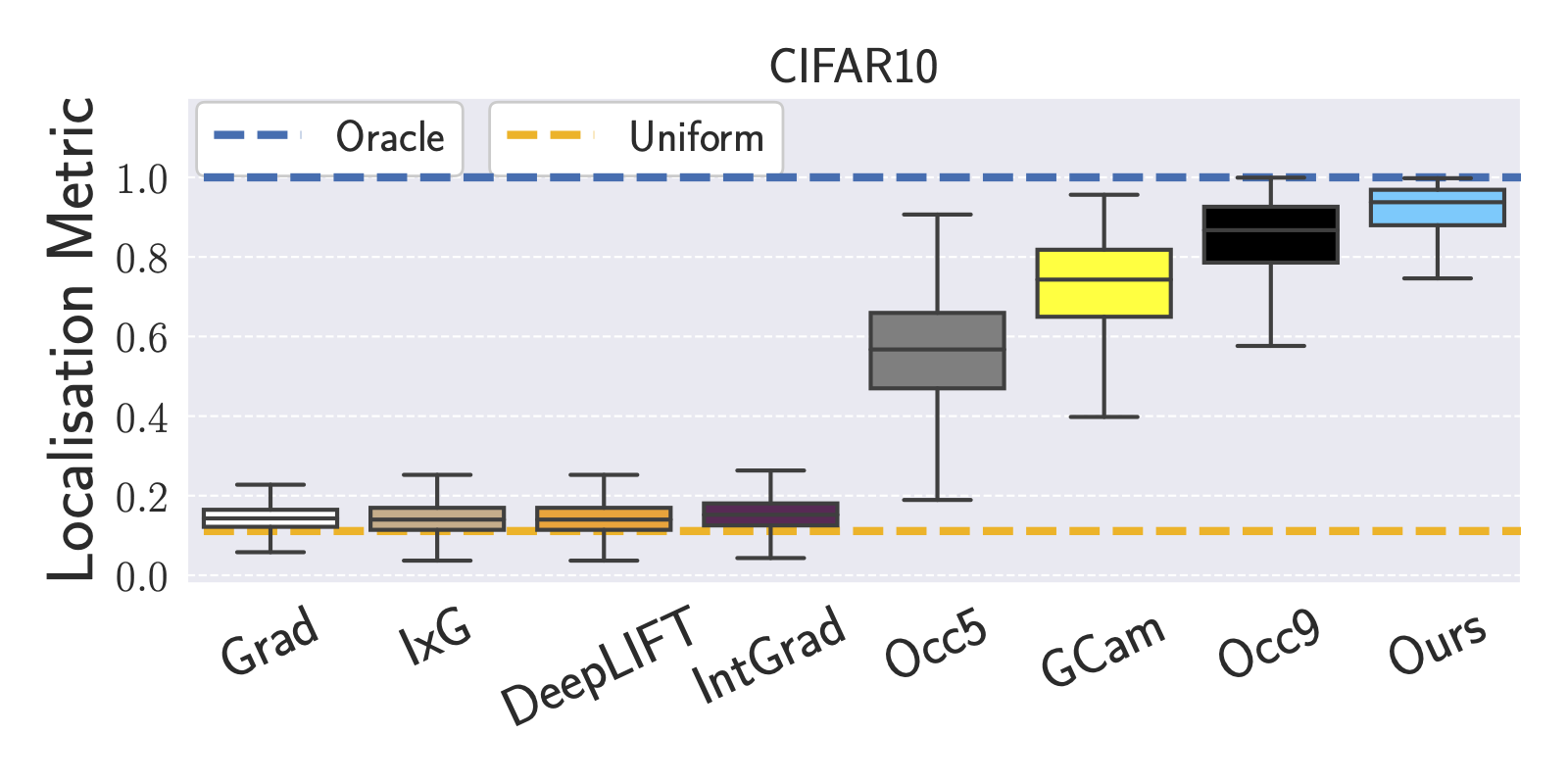

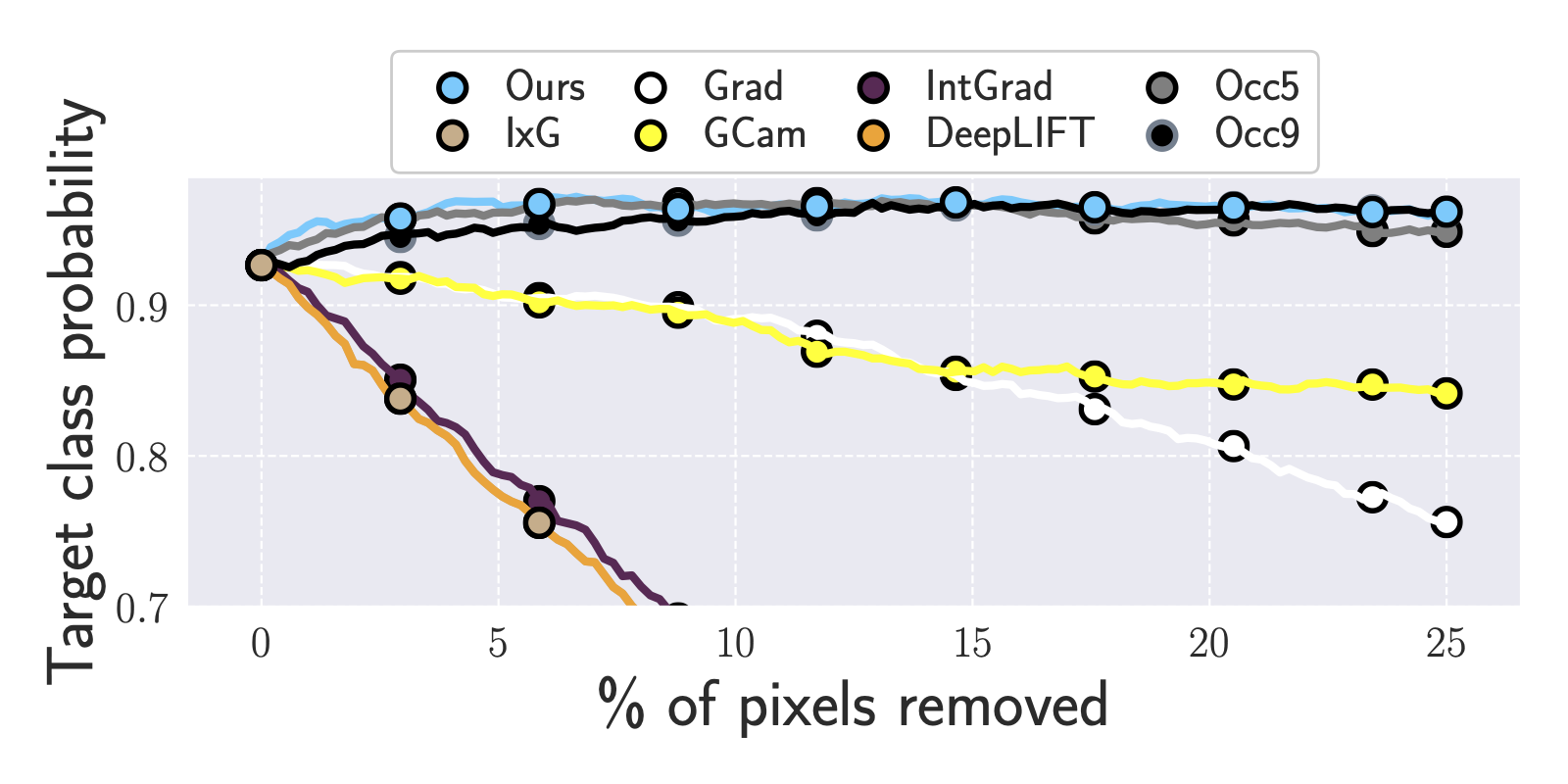

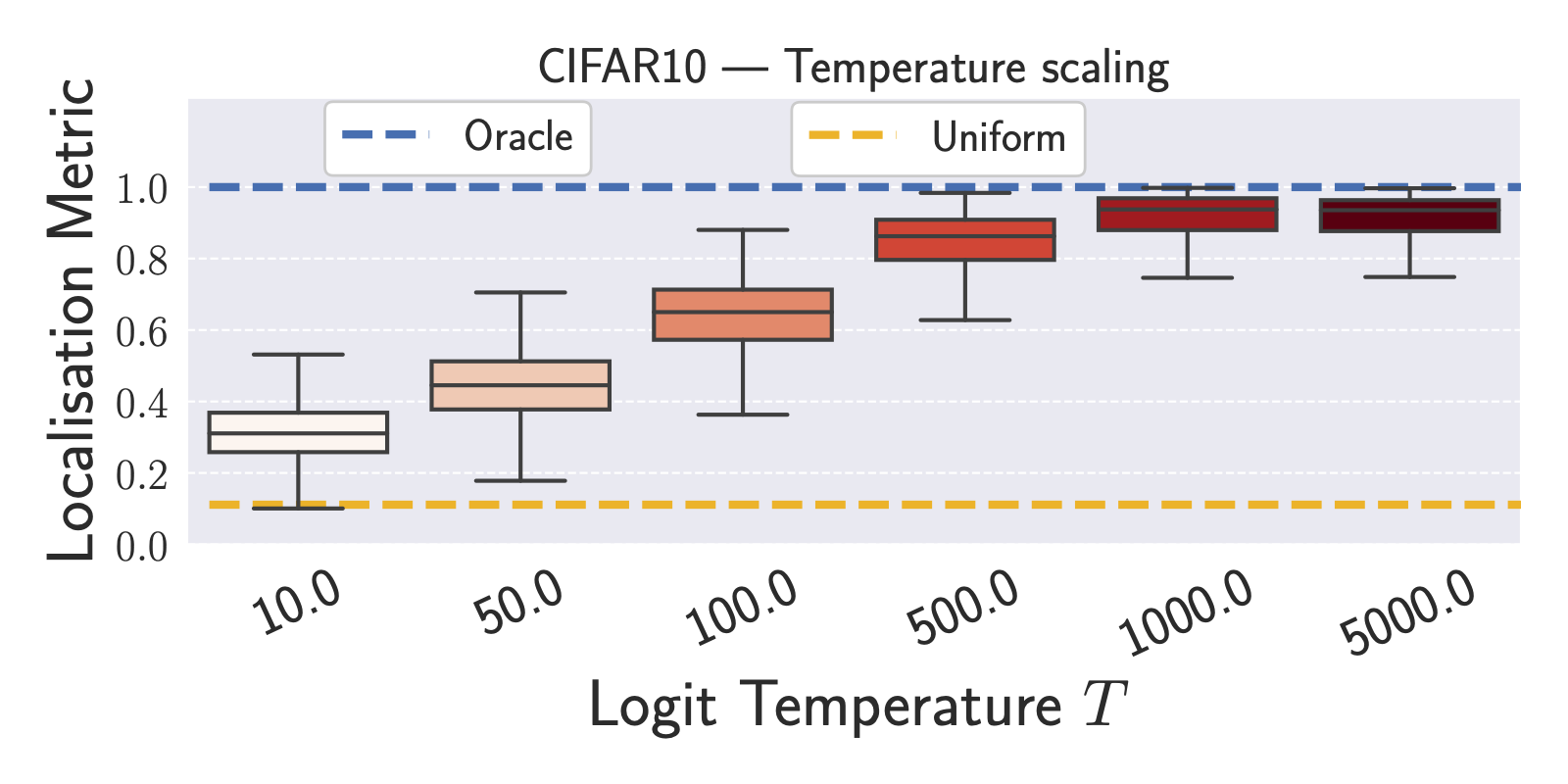

- Here we present quantitative interpretability results for a CoDA-Net trained on CIFAR10.

- To reproduce these plots, check out the Jupyter notebook CoDA-Networks Examples.

- For more information, see the code in interpretability/.


Training

- To retrain the models according to the specifications outlined in the paper, see the experiments folder.

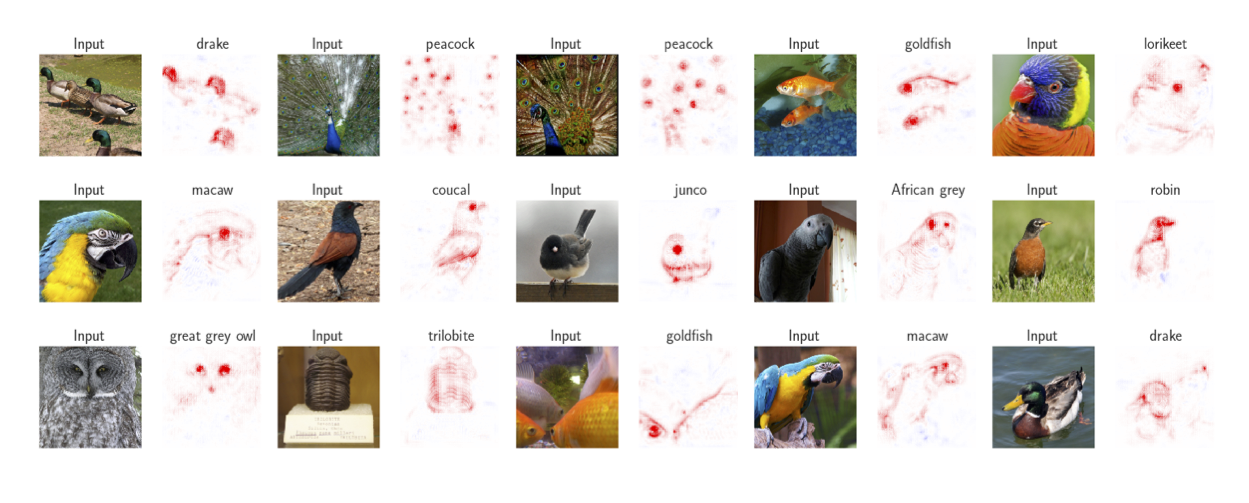

To highlight the stability of the contribution-based explanations of the CoDA-Nets, we present examples in which the CoDA-Net output for the respective class is linearly decomposed into input contributions frame by frame. For more information, see interpretability/eval_on_videos.py.
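The idea of a frame-by-frame linear decomposition can be illustrated with a minimal, self-contained sketch. It is not the code from interpretability/eval_on_videos.py: the function names, the toy frames, and the fixed `class_weights` are all hypothetical. The sketch assumes the class output is a weighted sum of the input pixels, so that each pixel's contribution is its weight times its value; in a CoDA-Net the weights would themselves be computed from each input frame rather than held fixed.

```python
# Toy illustration only: names and data are hypothetical, not the repository's API.

def contribution_map(weights, frame):
    """Per-pixel contributions of a linear model: s[i][j] = w[i][j] * x[i][j]."""
    return [[w * x for w, x in zip(w_row, x_row)]
            for w_row, x_row in zip(weights, frame)]


def total(contribs):
    """Sum of all contributions; for a linear model this equals the class output."""
    return sum(sum(row) for row in contribs)


# A toy "video" of two 2x2 grayscale frames.
frames = [
    [[1.0, 0.0], [2.0, 1.0]],
    [[1.0, 0.5], [2.0, 1.0]],
]

# Assumption: fixed weights for the class of interest. A dynamic linear model
# would instead produce a new weight map for every frame.
class_weights = [[0.5, -1.0], [0.25, 0.0]]

# Decompose the class output separately for every frame.
per_frame_maps = [contribution_map(class_weights, f) for f in frames]
per_frame_outputs = [total(m) for m in per_frame_maps]

for idx, (cmap, out) in enumerate(zip(per_frame_maps, per_frame_outputs)):
    print(f"frame {idx}: output={out}, contributions={cmap}")
```

Because the contributions sum exactly to the class output, comparing the maps of consecutive frames shows directly which pixels account for a change in the output.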

To reproduce these plots, check out the Jupyter notebook CoDA-Networks Examples. For more information, see the paper and the code in interpretability/.

Localisation metric |

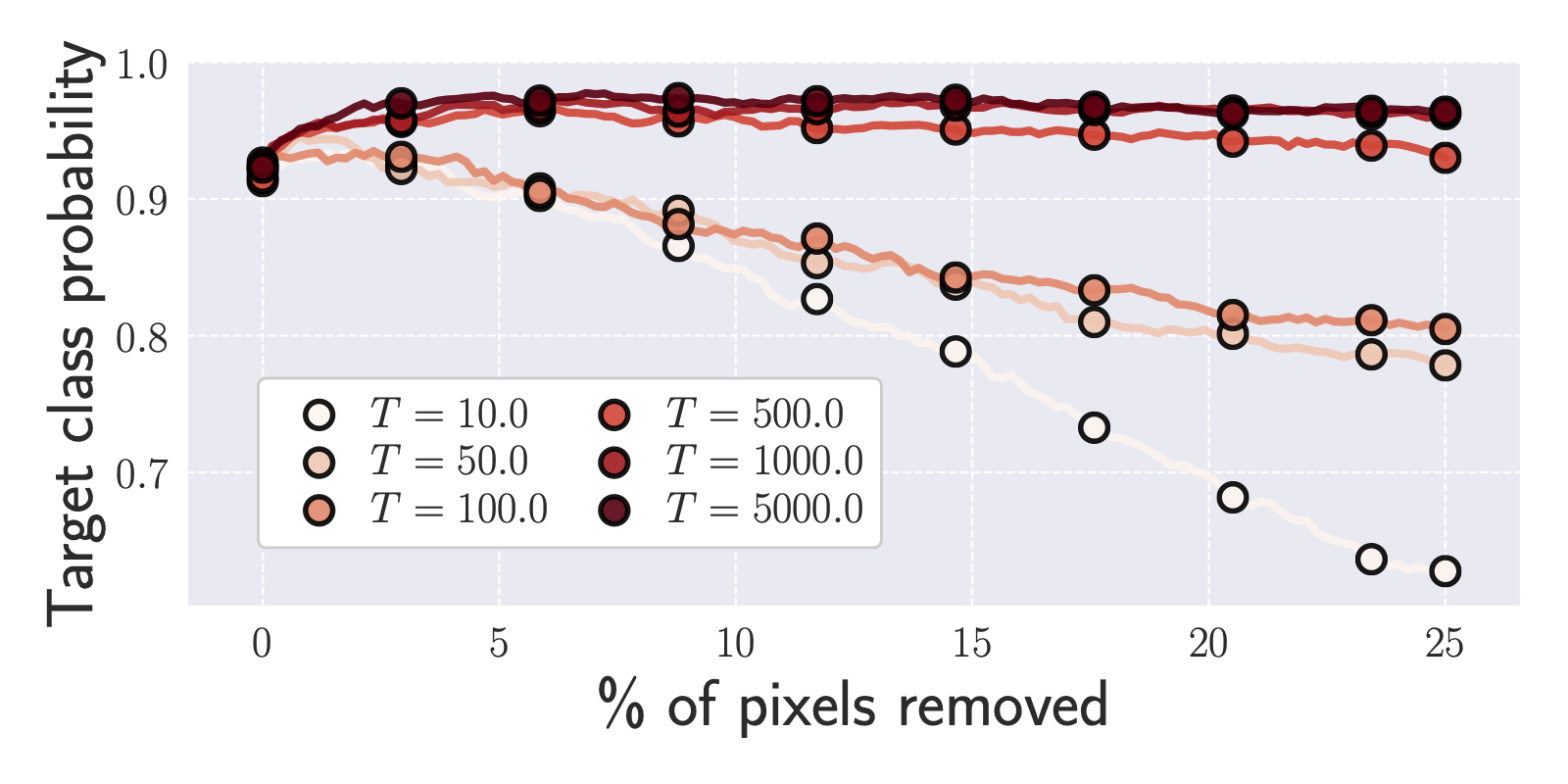

Pixel removal metric |

|

|---|---|---|

Compared to others |

|

|

Trained w/ different temperatures |

|

|
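As a rough illustration of what a localisation-style score can measure, the sketch below computes the fraction of positive contribution that falls inside a target region of a contribution map. This definition is an assumption made for illustration, and `localisation_score`, the region format, and the toy data are hypothetical; the evaluation actually used for the plots is in interpretability/ and may differ.

```python
# Toy illustration only: the metric definition and all names are assumptions.

def localisation_score(contribs, region):
    """Fraction of positive contribution inside `region`.

    `region` is (row_start, row_end, col_start, col_end) with half-open ranges.
    Returns 0.0 if the map contains no positive contribution at all.
    """
    r0, r1, c0, c1 = region
    inside = 0.0
    overall = 0.0
    for i, row in enumerate(contribs):
        for j, value in enumerate(row):
            positive = max(value, 0.0)  # negative contributions are ignored
            overall += positive
            if r0 <= i < r1 and c0 <= j < c1:
                inside += positive
    return inside / overall if overall > 0 else 0.0


# A 4x4 contribution map; the target object is assumed to occupy the top-left 2x2 block.
contribs = [
    [3.0, 1.0, 0.0, 0.0],
    [2.0, 2.0, 1.0, -1.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, -2.0, 1.0, 0.0],
]

score = localisation_score(contribs, (0, 2, 0, 2))
print(f"localisation score: {score:.2f}")
```

A score close to 1 means that almost all positive contribution lies inside the target region, while a score near the region's share of the image area is what a spatially uninformative map would achieve.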

Copyright (c) 2021 Moritz Böhle, Max-Planck-Gesellschaft

This code is licensed under the BSD License 2.0, see license.

Further, if you use any of the code in this repository for your research, please cite as:

@inproceedings{Boehle2021CVPR,

author = {Moritz Böhle and Mario Fritz and Bernt Schiele},

title = {Convolutional Dynamic Alignment Networks for Interpretable Classifications},

booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition ({CVPR})},

year = {2021}

}