scikit-learn-lambda is a toolkit for deploying scikit-learn models for realtime inference on AWS Lambda.

- Get started quickly - scikit-learn-lambda handles the boilerplate code for you: simply drop in a joblib or pickle model file and deploy.

- Cost efficient - The equivalent architecture on AWS SageMaker will cost you ~$50 per endpoint per month. Deploying on AWS Lambda allows you to pay per request and not worry about the number of models you're deploying to production.

- Built-in autoscaling - Deploying on Elastic Container Service or Kubernetes requires configuring and maintaining autoscaling groups. AWS Lambda abstracts this complexity away for you.

Read more in our blogpost: Saving 95% on infrastructure costs using AWS Lambda for scikit-learn predictions.

scikit-learn-lambda provides three components:

- scikit-learn-lambda: A Python package that includes a handler for serving scikit-learn predictions via AWS Lambda, designed for use with API Gateway.

- A repository of Lambda layers for various versions of Python (3.6 - 3.8) and scikit-learn (0.22+).

- Example Serverless template configuration for deploying a model to an HTTP endpoint.

You have two options for deploying a model with Serverless framework.

- Package your model as part of the deployment package uploaded to AWS Lambda. This option requires your model file to be under ~50 MB, but achieves the best cold-start latency.

- Store your model in Amazon S3 and load it from there on AWS Lambda initialization. This option has no model size constraints.

- Copy your model joblib file to scikit-learn-lambda/model.joblib, the same directory that serverless.yaml is in.

$ cp testdata/svm.joblib scikit-learn-lambda/model.joblib

- Deploy your model with Serverless framework.

$ serverless deploy

- Test your endpoint with some example data:

$ curl --header "Content-Type: application/json" \
    --request POST \
    --data '{"input":[[0, 0, 0, 0]]}' \
    https://<insert your api here>.execute-api.us-west-2.amazonaws.com/dev/predict
{"prediction": [0]}
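The same test request can be sent from Python. The following is a minimal sketch using only the standard library; `build_request` and `predict` are hypothetical helper names, not part of scikit-learn-lambda, and the URL you pass in must be the one printed by `serverless deploy`.

```python
import json
import urllib.request


def build_request(url, samples):
    """Build a POST request that carries a batch of samples as JSON."""
    body = json.dumps({"input": samples}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(url, samples, timeout=30):
    """Send the samples to the endpoint and return the decoded JSON reply."""
    request = build_request(url, samples)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `predict(url, [[0, 0, 0, 0]])` against a deployed endpoint should return a dictionary with a `prediction` key, mirroring the curl output above.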

- Edit the environment variable SKLEARN_MODEL_PATH in scikit-learn-lambda/serverless.yaml to specify an S3 URL.

functions:
  scikitLearnLambda:
    ...
    environment:
      SKLEARN_MODEL_PATH: "s3://my-bucket/my-model-file.joblib"

- Add an IAM Role that gives the Lambda function permission to access the model file at the specified S3 path.

functions:
  scikitLearnLambda:
    ...
    role: ScikitLearnLambdaRole

resources:
  Resources:
    ScikitLearnLambdaRole:
      Type: AWS::IAM::Role
      Properties:
        RoleName: ScikitLearnLambdaRole
        AssumeRolePolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Principal:
                Service:
                  - lambda.amazonaws.com
              Action: sts:AssumeRole
        Policies:
          - PolicyName: ModelInfoGetObjectPolicy-${self:custom.stage}
            PolicyDocument:
              Version: '2012-10-17'
              Statement:
                - Effect: Allow
                  Action: ["s3:GetObject"]
                  Resource: "arn:aws:s3:::my-bucket/my-model-file.joblib"

- Deploy your model with Serverless framework.

$ serverless deploy
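The Resource ARN in the IAM policy has to name the same object that SKLEARN_MODEL_PATH points to, and a mismatch between the two is an easy mistake to make. As a rough sketch (the helper names `split_s3_url` and `object_arn` are illustrative assumptions, not functions from this package), the ARN can be derived from the S3 URL like this:

```python
from urllib.parse import urlparse


def split_s3_url(url):
    """Split an s3:// URL into a (bucket, key) pair.

    Keys containing '?' or '#' are not handled by this simple sketch.
    """
    parsed = urlparse(url)
    key = parsed.path.lstrip("/")
    if parsed.scheme != "s3" or not parsed.netloc or not key:
        raise ValueError("not a valid s3:// object URL: {!r}".format(url))
    return parsed.netloc, key


def object_arn(url):
    """Return the ARN to use as the policy Resource for an s3:// URL."""
    bucket, key = split_s3_url(url)
    return "arn:aws:s3:::{}/{}".format(bucket, key)
```

For the example configuration above, `object_arn("s3://my-bucket/my-model-file.joblib")` yields the ARN of that same object. The bucket and key pair is also what you would pass to boto3's `download_file` if you wanted to fetch the model yourself.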

It is a good idea to match your Python version and scikit-learn version with the environment that was used to train and serialize the model.

The default template is configured to use Python 3.7 and scikit-learn 0.23.1. To use a different version, change the layer ARN in the serverless template. We have prebuilt and published a set of AWS Lambda layers you can get started with quickly. For production usage, we recommend that you use our provided scripts to build and host your own AWS Lambda layer; see Layers.

functions:
  scikitLearnLambda:
    ...
    layers:
      - "<layer ARN including version number>"

When changing the Python runtime version, make sure to also edit the runtime in the serverless template:

provider:
  ...
  runtime: "<python3.6, python3.7, or python3.8>"
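One way to act on the version-matching advice is to record the versions at training time and compare them with the versions seen at serving time. The sketch below is an assumption about how you might do that; `environment_versions` and `version_mismatches` are hypothetical helpers, and nothing like them is provided by scikit-learn-lambda.

```python
import sys


def environment_versions():
    """Return the Python and scikit-learn versions of this environment."""
    try:
        import sklearn  # only needed to read the installed version
        sklearn_version = sklearn.__version__
    except ImportError:
        sklearn_version = None  # scikit-learn is not installed here
    python_version = "{}.{}".format(*sys.version_info[:2])
    return {"python": python_version, "scikit-learn": sklearn_version}


def version_mismatches(trained, serving):
    """List the names whose versions differ between two environments."""
    return [
        name
        for name in sorted(trained)
        if trained[name] != serving.get(name)
    ]
```

You could save the output of `environment_versions()` next to the model file when training, then call `version_mismatches(saved, environment_versions())` in the serving environment and treat a non-empty result as a warning.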

To get around the 50 MB (zipped) deployment package limit, it is useful to create a distinct layer for the scikit-learn dependency. This frees up more room in your deployment package for your model or other dependencies.
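Before deploying, it can help to estimate how much of the zipped limit a model file will consume. The following is a minimal sketch that compresses the bytes in memory with the standard `zipfile` module; the helper names are hypothetical, the 50 MB constant is simply the figure quoted above, and the estimate ignores everything else in the deployment package.

```python
import io
import zipfile

# The ~50 MB zipped limit mentioned above, expressed in bytes.
ZIPPED_LIMIT_BYTES = 50 * 1024 * 1024


def zipped_size(data, name="model.joblib"):
    """Return the size in bytes of a zip archive holding only `data`."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(name, data)
    return len(buffer.getvalue())


def fits_in_package(data, limit=ZIPPED_LIMIT_BYTES):
    """Check whether the zipped model alone stays within the limit."""
    return zipped_size(data) <= limit
```

A typical use would be to read `scikit-learn-lambda/model.joblib` in binary mode and pass the bytes to `fits_in_package`. If the check fails, the S3 option described earlier is the alternative.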

scikit-learn-lambda comes with a pre-built set of AWS Lambda layers that include scikit-learn and joblib that you can use out of the box on us-west-2. These layers are hosted on the Model Zoo AWS account with public permissions for any AWS account to use. We also provide a script tools/build-layers.sh that allows you to build and upload layers owned by your own AWS account.

We have published a set of layers for combinations of Python 3.6 - 3.8 and scikit-learn 0.22 - 0.24 to different US regions. These are listed in layers.csv.

tools/build-layers.sh is a bash script that can be used to build one or more AWS Lambda layers with the scikit-learn and joblib dependencies.

This script assumes you have jq, Docker, and the AWS CLI installed on your machine.

Example 1: Build layer for Python 3.7 and scikit-learn 0.23.0.

./build-layers.sh --scikit-learn=0.23.0 --python=3.7

Example 2: Build layer for Python 3.7 and scikit-learn 0.23.0 and publish to us-west-2.

./build-layers.sh --python=3.7 --scikit-learn=0.23.0 --region=us-west-2

./build-layers.sh

scikit-learn-lambda is designed to be used with Lambda Proxy Integrations in API Gateway.

When using the provided serverless template, the HTTP endpoint will expect a POST request with a Content-Type of application/json.

The expected input fields are:

- input (string): The list of array-like values to predict. The shape should match the value that is typically fed into a model.predict call.

- return_prediction (boolean): Will return prediction in output if true. This field is optional and defaults to true.

- return_probabilities (boolean): Will return probabilities in output if true. This field is optional and defaults to false.

At least one of return_prediction and return_probabilities must be true.

The response will be JSON-encoded with the following fields:

- prediction: A list of array-like predictions from model.predict(), one for every sample in the batch. Present if return_prediction is true.

- probabilities: A list of dictionaries mapping string class names to probabilities from model.predict_proba(), one for every sample in the batch. Present if return_probabilities is true.

$ curl --header "Content-Type: application/json" \
    --request POST \
    --data '{"input":[[0, 0, 0, 0]]}' \
    https://<api-id>.execute-api.us-west-2.amazonaws.com/mlp-250-250-250/predict
{"prediction": [0]}

$ curl --header "Content-Type: application/json" \
    --request POST \
    --data '{"input":[[0, 0, 0, 0]], "return_probabilities": true}' \
    https://<api-id>.execute-api.us-west-2.amazonaws.com/mlp-250-250-250/predict
{"prediction": [0], "probabilities": [{"0": 0.3722170997279803, "1": 0.29998954257031885, "2": 0.32779335770170076}]}
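To make the request and response contract concrete, here is an illustrative sketch of how a handler could map the input fields to the output fields. It is not the package's actual handler: `handle`, `as_plain_list`, and `StubModel` are hypothetical names, the stub stands in for a fitted classifier, and the 400 status code for an invalid flag combination is an assumption.

```python
import json


class StubModel:
    """Stand-in for a fitted scikit-learn classifier (hypothetical)."""

    classes_ = [0, 1, 2]

    def predict(self, samples):
        return [0 for _ in samples]

    def predict_proba(self, samples):
        return [[0.5, 0.25, 0.25] for _ in samples]


def as_plain_list(values):
    """Convert a NumPy array or any other sequence to plain Python lists."""
    return values.tolist() if hasattr(values, "tolist") else list(values)


def handle(event, model):
    """Turn a Lambda proxy event into a Lambda proxy response."""
    body = json.loads(event["body"])
    samples = body["input"]
    want_prediction = body.get("return_prediction", True)
    want_probabilities = body.get("return_probabilities", False)
    if not (want_prediction or want_probabilities):
        # Assumed behaviour: reject requests that ask for no output at all.
        error = {"error": "return_prediction or return_probabilities must be true"}
        return {"statusCode": 400, "body": json.dumps(error)}

    result = {}
    if want_prediction:
        result["prediction"] = as_plain_list(model.predict(samples))
    if want_probabilities:
        # One dictionary per sample, keyed by the class name as a string.
        result["probabilities"] = [
            {str(label): float(p) for label, p in zip(model.classes_, row)}
            for row in model.predict_proba(samples)
        ]
    return {"statusCode": 200, "body": json.dumps(result)}
```

The `as_plain_list` conversion matters with a real model because NumPy arrays and NumPy scalar types are not JSON-serializable by the standard `json` module.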

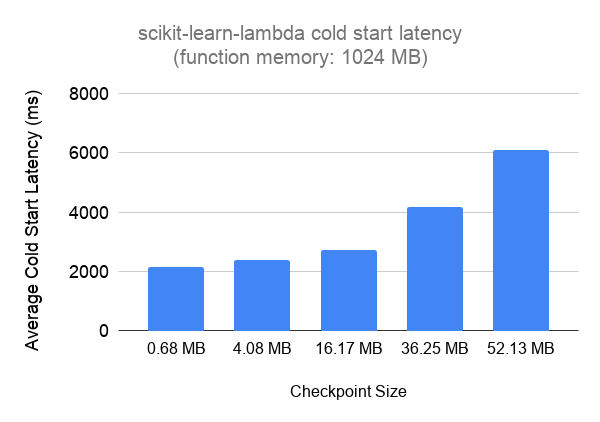

To run these benchmarks, we generate MLPClassifier models with three hidden layers each, using layer sizes of 100, 250, 500, 750, and 900. Each model is trained on the iris dataset and serialized using joblib. This results in model files sized 0.68 MB, 4.08 MB, 16.17 MB, 36.25 MB, and 52.13 MB respectively. We deploy each to a Lambda function with 1024 MB of memory and measure the average cold-start latency over three samples:

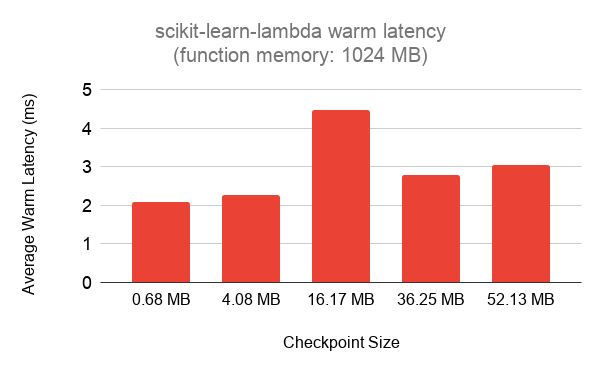

We also plot the “warm” latency below averaged over fifteen samples for each model:

https://modelzoo.dev/lambda-vs-sagemaker-cost/

We've created this interactive visualization to help us understand the cost per month under various usage scenarios. It also includes a comparison to SageMaker inference endpoint costs.
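The pay-per-request trade-off can also be approximated with a few lines of arithmetic. The sketch below is a back-of-the-envelope model, not the calculation behind the visualization: the two Lambda prices are assumed list prices that may be out of date, the $50 figure is the SageMaker estimate quoted earlier, and free-tier allowances and API Gateway charges are ignored.

```python
# Assumed Lambda list prices in USD; check current AWS pricing before relying on them.
REQUEST_PRICE = 0.20 / 1_000_000   # per request
GB_SECOND_PRICE = 0.0000166667     # per GB-second of compute

# Fixed monthly endpoint cost quoted earlier in this document.
SAGEMAKER_MONTHLY = 50.0


def lambda_monthly_cost(requests, avg_seconds, memory_mb=1024):
    """Estimate monthly Lambda cost for a request count and average duration."""
    gb_seconds = requests * avg_seconds * (memory_mb / 1024)
    return requests * REQUEST_PRICE + gb_seconds * GB_SECOND_PRICE


def break_even_requests(avg_seconds, memory_mb=1024, fixed_monthly=SAGEMAKER_MONTHLY):
    """Monthly requests at which Lambda cost equals a fixed monthly cost."""
    per_request = lambda_monthly_cost(1, avg_seconds, memory_mb)
    return fixed_monthly / per_request
```

For example, `break_even_requests(0.1)` estimates how many 100 ms requests per month a 1024 MB function can serve before it costs as much as the fixed endpoint price under these assumptions.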