This repository is dedicated to training and using the RCN (Recombinator Networks) method for landmark localization/detection.

The original source repository is https://github.com/SinaHonari/RCN; please consult it first.

The original repository depends heavily on face datasets. This repository is an example of how to deal with that and use RCN on our own dataset. For this reason, some of the RCN scripts have been modified.

I was only interested in the 68-landmark version from the paper.

- Ubuntu 16.04

- Python 2.7

- Create a Python environment if you prefer.

- Install OpenCV:

  pip install opencv-python

  pip install opencv-contrib-python

- Install PIL:

  pip install Pillow

- Install Theano.

- Create a config file for Theano:

  echo -e "\n[global]\nfloatX=float32\n" >> ~/.theanorc
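To confirm that the config file was written as intended, a small check along these lines may help. It is only a sketch: the helper name `read_floatx` is made up for illustration, and it relies solely on the standard-library config parser, not on Theano itself.

```python
import os

try:  # Python 3
    from configparser import ConfigParser
except ImportError:  # Python 2.7, the version this repository targets
    from ConfigParser import ConfigParser


def read_floatx(path=os.path.expanduser("~/.theanorc")):
    """Return the floatX value from the [global] section, or None if absent."""
    parser = ConfigParser()
    parser.read(path)  # files that do not exist are silently skipped
    if parser.has_section("global") and parser.has_option("global", "floatX"):
        return parser.get("global", "floatX")
    return None


if __name__ == "__main__":
    print(read_floatx())
```

After running the echo command above, the script would be expected to print float32.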

Make sure the terminal's working directory is this repository, e.g. ~/Desktop/Train-Predict-Landmarks-by-RCN-master

All of our data (images and their corresponding .pts keypoint files) should be placed in ./data0/Train_set/data/.
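Before generating the pickle, it can be useful to check that every image has a keypoint file. The sketch below assumes that an image and its .pts file share the same base name and that images use one of the listed extensions; both are assumptions, and `find_unpaired` is a hypothetical helper, not part of RCN.

```python
import os

# Assumed image extensions; adjust to match your data.
IMAGE_EXTS = (".png", ".jpg", ".jpeg", ".bmp")


def find_unpaired(data_dir):
    """Return (images without a .pts file, .pts files without an image)."""
    images, points = set(), set()
    for name in os.listdir(data_dir):
        stem, ext = os.path.splitext(name)
        if ext.lower() in IMAGE_EXTS:
            images.add(stem)
        elif ext.lower() == ".pts":
            points.add(stem)
    return sorted(images - points), sorted(points - images)


if __name__ == "__main__":
    missing_pts, missing_img = find_unpaired("./data0/Train_set/data/")
    print("images without .pts:", missing_pts)
    print(".pts without image:", missing_img)
```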

I have already put some data there for a quick start.
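To inspect the sample keypoints, a reader such as the following could be used. It assumes the .pts files follow the 300-W annotation layout (a few header lines, then one "x y" pair per line between braces); `read_pts` is an illustrative helper and not a function from RCN.

```python
def read_pts(path):
    """Parse a 300-W style .pts file into a list of (x, y) float tuples."""
    points = []
    inside = False
    with open(path) as handle:
        for line in handle:
            line = line.strip()
            if line == "{":
                inside = True  # coordinates start after the opening brace
            elif line == "}":
                break  # closing brace ends the coordinate list
            elif inside and line:
                x, y = line.split()[:2]
                points.append((float(x), float(y)))
    return points
```

For the 68-landmark data used here, `len(read_pts(path))` would be expected to equal 68.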

Use the following command to generate the training pickle:

python ./RCN/datasets/create_raw_300W.py --src_dir=./data0/ --dest_dir=./data0

Now move the generated pickle file to the path that RCN expects:

mv -f ./data0/300W_train_160by160.pickle ./RCN/datasets/300W/

First, configure Theano for the GPU (export makes the variable visible to the python commands that follow):

export THEANO_FLAGS=floatX=float32,device=cuda,force_device=True

or, for the CPU:

export THEANO_FLAGS=floatX=float32,device=cpu,force_device=True
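Theano reads THEANO_FLAGS from the environment of the process that imports it, so the flags can also be passed when launching a script from Python. The following is a minimal sketch; `theano_env` is a hypothetical helper, and the commented command is only a placeholder.

```python
import os


def theano_env(device="cpu", base_env=None):
    """Return a copy of the environment with THEANO_FLAGS set for `device`."""
    env = dict(os.environ if base_env is None else base_env)
    env["THEANO_FLAGS"] = (
        "floatX=float32,device={0},force_device=True".format(device)
    )
    return env


# Example use (not run here):
#   import subprocess
#   subprocess.call(["python", "./RCN/models/create_procs.py"],
#                   env=theano_env("cuda"))
```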

To train RCN on the 68-landmark dataset, use the following line:

python ./RCN/models/create_procs.py --L2_coef=1e-12 --L2_coef_ful=1e-08 --file_suffix=RCN_300W_test --num_epochs=1 --paral_conv=5.0 --use_lcn --block_img

Do not forget to set --num_epochs; the example above trains for a single epoch.

After training, the trained model and training information are placed in ./RCN/models/exp_shared_conv/

For prediction, it is assumed that the test images and ground-truth keypoints are in a pickle.

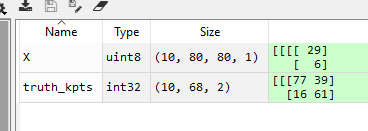

For example, Data_Test.pickle in the data0 folder has the following data structure: X contains the images (10 grayscale images of size 80x80) and truth_kpts contains the corresponding landmarks.

Note that for RCN, the test data must be prepared as in the configuration above: 80x80 grayscale images.
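A test pickle of this kind might be assembled as sketched below. Only the key names X and truth_kpts come from the description above. The use of a plain dict, the array shapes (N, 80, 80) and (N, n_landmarks, 2), and the dtypes are all assumptions, so compare the result against the provided Data_Test.pickle before relying on it. `build_test_pickle` is a hypothetical helper.

```python
import pickle

import numpy as np


def build_test_pickle(images, keypoints, out_path):
    """Save 80x80 grayscale images and their keypoints as one pickle."""
    X = np.asarray(images, dtype=np.uint8)            # assumed dtype
    truth_kpts = np.asarray(keypoints, dtype=np.float32)  # assumed dtype
    if X.ndim != 3 or X.shape[1:] != (80, 80):
        raise ValueError("expected images of shape (N, 80, 80), got %r" % (X.shape,))
    if len(truth_kpts) != len(X):
        raise ValueError("need one set of keypoints per image")
    data = {"X": X, "truth_kpts": truth_kpts}  # assumed container: a plain dict
    with open(out_path, "wb") as handle:
        # Protocol 2 is the highest pickle protocol that Python 2.7 can read.
        pickle.dump(data, handle, protocol=2)
    return data
```

Images would first need to be converted to grayscale and resized to 80x80, for example with OpenCV or Pillow.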

Use the following line to predict with the trained network. A pickle containing both the ground-truth and predicted keypoints will be generated in the outputs folder.

python ./RCN/models/export_draw_points_guide.py --img_path=./data0/Data_Test.pickle --path=./RCN/models/exp_shared_conv/shared_conv_params_RCN_300W_test_300W.pickle
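Once ground-truth and predicted keypoints are available, a simple way to compare them is the average point-to-point distance per image. The layout of the output pickle is not documented here, so the sketch below takes the two landmark lists directly. `mean_point_error` is an illustrative helper, and the value it returns is a plain pixel distance with no normalization.

```python
import math


def mean_point_error(truth, pred):
    """Average Euclidean distance between matching (x, y) landmarks of one image."""
    if not truth or len(truth) != len(pred):
        raise ValueError("truth and pred must be non-empty and of equal length")
    total = 0.0
    for (tx, ty), (px, py) in zip(truth, pred):
        total += math.hypot(px - tx, py - ty)
    return total / len(truth)
```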

If we are not interested in face landmarks, we can use

python ./RCN/models/create_procs_noface.py --L2_coef=1e-12 --L2_coef_ful=1e-08 --file_suffix=RCN_300W_test --num_epochs=1 --paral_conv=5.0 --use_lcn --block_img

which is not based on face detection; the rectangles are fixed for 256x256 images.