E2E-MLT: an Unconstrained End-to-End Method for Multi-Language Scene Text. Code base for: https://arxiv.org/abs/1801.09919

@inproceedings{buvsta2018e2e,

title={E2E-MLT-an unconstrained end-to-end method for multi-language scene text},

author={Bu{\v{s}}ta, Michal and Patel, Yash and Matas, Jiri},

booktitle={Asian Conference on Computer Vision},

pages={127--143},

year={2018},

organization={Springer}

}

- Python 3.x with
  - opencv-python
  - pytorch 0.4.1
  - torchvision
  - warp-ctc (https://github.com/SeanNaren/warp-ctc/)
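Before running the demo or training, it can help to confirm that the packages listed above are importable. The following is a minimal sketch using only the standard library. The import names in the mapping (`cv2`, `torch`, `torchvision`, `warpctc_pytorch`) are assumptions about how each package is exposed in Python, and `missing_requirements` is a hypothetical helper that is not part of this repository.

```python
import importlib.util

# Package name -> import name. The import names are assumptions:
# they can differ from the names used to install the packages.
REQUIRED_MODULES = {
    "opencv-python": "cv2",
    "pytorch": "torch",
    "torchvision": "torchvision",
    "warp-ctc": "warpctc_pytorch",
}


def missing_requirements(modules=REQUIRED_MODULES):
    """Return the package names whose import name cannot be found."""
    return [
        package
        for package, module in modules.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_requirements()
    print("missing:", ", ".join(missing) if missing else "none")
```

This only checks that the modules can be located. It does not verify versions, so the pytorch 0.4.1 requirement still has to be checked separately.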

wget http://ptak.felk.cvut.cz/public_datasets/SyntText/e2e-mlt.h5

python3 demo.py -model=e2e-mlt.h5

- ICDAR MLT Dataset

- ICDAR 2015 Dataset

- RCTW-17

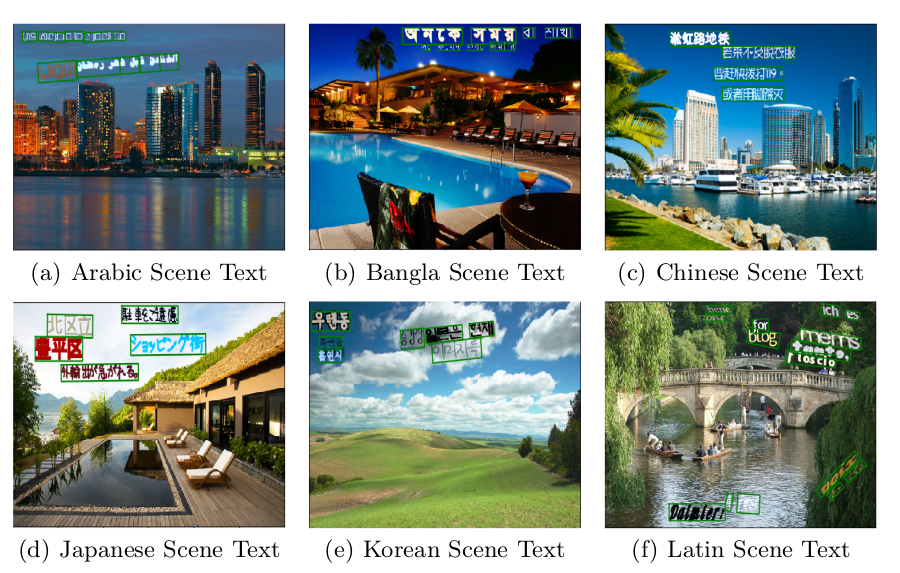

- Synthetic MLT Data (Arabic, Bangla, Chinese, Japanese, Korean, Latin, Hindi)

- Ground truth for the synthetic data, converted to ICDAR MLT format (see: http://rrc.cvc.uab.es/?ch=8&com=tasks) (Arabic, Bangla, Chinese, Japanese, Korean, Latin, Hindi)
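For readers unfamiliar with the ICDAR MLT ground-truth format: each line of a ground-truth file describes one text region as eight corner coordinates, a script name, and a transcription, separated by commas, with `###` as the transcription of regions to ignore. The sketch below parses one such line. `MLTBox` and `parse_mlt_line` are hypothetical names used for illustration and are not taken from this code base.

```python
from typing import List, NamedTuple, Tuple


class MLTBox(NamedTuple):
    points: List[Tuple[int, int]]  # four (x, y) corners of the quadrilateral
    script: str                    # e.g. "Latin", "Arabic"
    text: str                      # transcription
    ignore: bool                   # True when the transcription is "###"


def parse_mlt_line(line: str) -> MLTBox:
    """Parse one 'x1,y1,x2,y2,x3,y3,x4,y4,script,transcription' line."""
    # Drop a possible byte-order mark, then surrounding whitespace.
    cleaned = line.lstrip("\ufeff").strip()
    # Split into at most 10 fields so commas inside the transcription survive.
    parts = cleaned.split(",", 9)
    if len(parts) < 10:
        raise ValueError("expected 10 comma-separated fields: %r" % line)
    coords = [int(float(value)) for value in parts[:8]]
    points = list(zip(coords[0::2], coords[1::2]))
    script, text = parts[8], parts[9]
    return MLTBox(points, script, text, text == "###")


if __name__ == "__main__":
    print(parse_mlt_line("10,20,110,20,110,60,10,60,Latin,Hello, world"))
```

Limiting the split to nine separators keeps transcriptions that themselves contain commas intact.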

Synthetic text was generated using "Synthetic Data for Text Localisation in Natural Images", with minor changes for Arabic and Bangla script rendering.

What we have found useful:

- for generating Arabic Scene Text: https://github.com/mpcabd/python-arabic-reshaper

- for generating Bangla Scene Text: PyQt4

- having somebody who can read non-Latin scripts: we would like to thank Ali Anas for reviewing generated Arabic scene text.
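As an illustration of the Arabic step, renderers without complex-text shaping draw Arabic letters in their isolated forms, and python-arabic-reshaper replaces them with the correct contextual forms before rendering. The sketch below shows the library's `reshape` call. How it was wired into the synthetic data generator is not described here, so `shape_for_rendering` is a hypothetical wrapper, and the import is guarded so the sketch still runs when the library is not installed. Right-to-left reordering is a separate step and is not shown.

```python
try:
    import arabic_reshaper  # provided by python-arabic-reshaper
except ImportError:
    # Keep the sketch importable without the dependency.
    arabic_reshaper = None


def shape_for_rendering(text: str) -> str:
    """Return text with Arabic letters in contextual forms when possible."""
    if arabic_reshaper is None:
        # Fallback: unshaped text, which will likely render incorrectly.
        return text
    return arabic_reshaper.reshape(text)


if __name__ == "__main__":
    print(shape_for_rendering("مرحبا"))
```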

python3 train.py -train_list=sample_train_data/MLT/trainMLT.txt -batch_size=8 -num_readers=5 -debug=0 -input_size=512 -ocr_batch_size=256 -ocr_feed_list=sample_train_data/MLT_CROPS/gt.txt

Code borrows from EAST and DeepTextSpotter