Welcome to GraphRAG Local with Ollama and Interactive UI! This is an adaptation of Microsoft's GraphRAG, tailored to support local models using Ollama and featuring a new interactive user interface.

NOTE: I am currently debugging parts of the project so that everything runs more smoothly. If you encounter an error, you may need to update to the latest version by the end of today. I am also making changes so the model provider is more agnostic and does not rely solely on Ollama. Feel free to open an Issue if you run into an error, and I will try to address it immediately to keep your downtime to a minimum.

For more details on the original GraphRAG implementation, please refer to the GraphRAG paper.

- Local Model Support: Leverage local models with Ollama for LLM and embeddings.

- Cost-Effective: Eliminate dependency on costly OpenAI models.

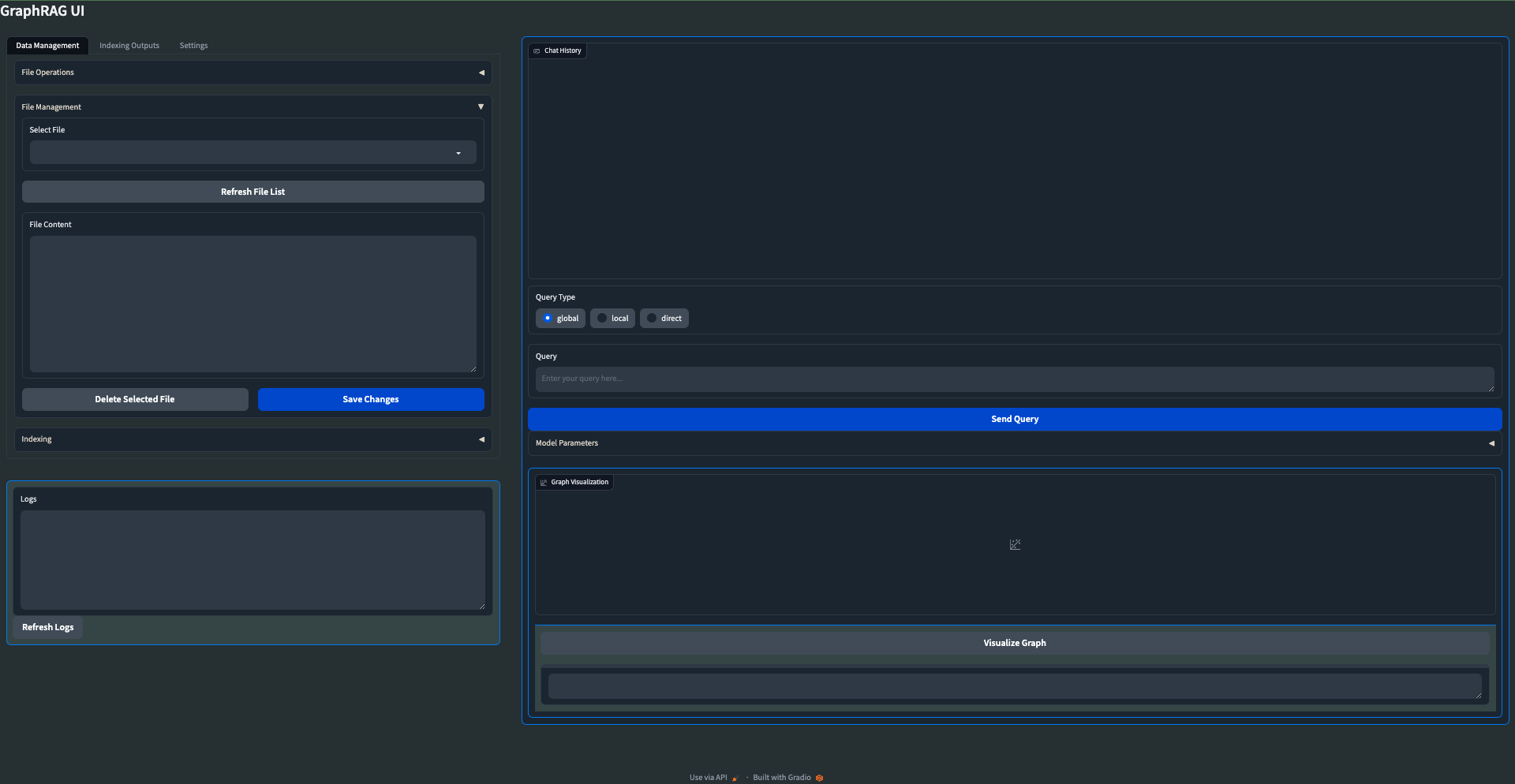

- Interactive UI: User-friendly interface for managing data, running queries, and visualizing results.

- Real-time Graph Visualization: Visualize your knowledge graph in 3D using Plotly.

- File Management: Upload, view, edit, and delete input files directly from the UI.

- Settings Management: Easily update and manage your GraphRAG settings through the UI.

- Output Exploration: Browse and view indexing outputs and artifacts.

- Logging: Real-time logging for better debugging and monitoring.

Follow these steps to set up and run GraphRAG Local with Ollama and Interactive UI:

- Create and activate a new conda environment:

      conda create -n graphrag-ollama -y
      conda activate graphrag-ollama

- Install Ollama: Visit Ollama's website for installation instructions.

- Install the required packages:

      pip install -r requirements.txt

- Launch the interactive UI:

      gradio app.py

  or

      python app.py

- Using the UI:

  - Once the UI is launched, you can perform all necessary operations through the interface.

  - This includes initializing the project, managing settings, uploading files, running indexing, and executing queries.

  - The UI provides a user-friendly way to interact with GraphRAG without needing to run command-line operations.

Note: The UI now handles all the operations that were previously done through command-line instructions, making the process more streamlined and user-friendly.
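Before launching the UI, it can help to confirm that the prerequisites from the setup steps are in place. The following is a minimal sketch, not part of this project: `check_prerequisites` is a hypothetical helper, and it assumes that installing Ollama puts an `ollama` executable on your PATH and that `gradio` is one of the packages installed from requirements.txt (suggested by the `gradio app.py` launch command).

```python
import importlib.util
import shutil


def check_prerequisites(commands=("ollama",), modules=("gradio",)):
    """Return a dict mapping each prerequisite to True if it was found.

    Hypothetical helper for illustration; the default names are assumptions.
    """
    status = {}
    for cmd in commands:
        # shutil.which returns None when the executable is not on PATH
        status[f"command:{cmd}"] = shutil.which(cmd) is not None
    for mod in modules:
        # find_spec returns None when a top-level module cannot be imported
        status[f"module:{mod}"] = importlib.util.find_spec(mod) is not None
    return status


if __name__ == "__main__":
    for name, found in check_prerequisites().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```

Run it inside the activated `graphrag-ollama` environment; any line marked `MISSING` points to the setup step that likely needs repeating.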

Users can experiment by changing the models in the settings.yaml file. The LLM model setting expects a language model such as llama3, mistral, or phi3, and the embedding model section expects an embedding model such as mxbai-embed-large or nomic-embed-text. All of these are provided by Ollama, and the complete list of available models is in Ollama's model library.
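When switching models, a common stumbling block is naming a model that has not been pulled locally yet. The sketch below is one way to check, under stated assumptions: it relies on Ollama's local REST endpoint `GET /api/tags` at the default address `http://localhost:11434`, and the helper names (`base_name`, `missing_models`, `fetch_tags`) are hypothetical. As a simplification, it compares model names without their tag, so `llama3` matches `llama3:latest`.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (an assumption;
# adjust it if your server listens elsewhere).
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def base_name(model):
    """Strip the tag from a model name, e.g. 'llama3:latest' -> 'llama3'."""
    return model.split(":", 1)[0]


def missing_models(tags_payload, wanted):
    """Return the entries of `wanted` that are absent from the payload.

    `tags_payload` is the decoded JSON from /api/tags, which lists local
    models under the "models" key, each with a "name" field.
    """
    installed = {base_name(m["name"]) for m in tags_payload.get("models", [])}
    return [w for w in wanted if base_name(w) not in installed]


def fetch_tags(url=OLLAMA_TAGS_URL):
    """Query the local Ollama server for the models it has available."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)
```

With the Ollama server running, `missing_models(fetch_tags(), ["llama3", "nomic-embed-text"])` returns the names that still need an `ollama pull` before they can be used in settings.yaml.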

The UI now includes a 3D graph visualization feature. To use it:

- Run indexing on your data

- Go to the "Indexing Outputs" tab

- Select the latest output folder and navigate to the GraphML file

- Click the "Visualize Graph" button
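If the visualization looks empty or unexpectedly sparse, a quick look at the GraphML file outside the UI can help. This is a rough sketch using only the Python standard library, not the code the UI itself uses: `summarize_graphml` is a hypothetical helper, and it assumes the file uses the standard GraphML namespace.

```python
import xml.etree.ElementTree as ET

# Standard GraphML XML namespace
GRAPHML_NS = {"g": "http://graphml.graphdrawing.org/xmlns"}


def summarize_graphml(root):
    """Count nodes and edges and compute each node's degree.

    `root` is the parsed <graphml> element.
    """
    nodes = [n.get("id") for n in root.iterfind(".//g:node", GRAPHML_NS)]
    edges = [
        (e.get("source"), e.get("target"))
        for e in root.iterfind(".//g:edge", GRAPHML_NS)
    ]
    degree = {node_id: 0 for node_id in nodes}
    for source, target in edges:
        # Each edge adds one to the degree of both of its endpoints
        for endpoint in (source, target):
            degree[endpoint] = degree.get(endpoint, 0) + 1
    return {"nodes": len(nodes), "edges": len(edges), "degree": degree}
```

To use it, parse the file you selected in the "Indexing Outputs" tab, for example `summarize_graphml(ET.parse(path_to_graphml).getroot())`, where `path_to_graphml` is a placeholder for that file's location on disk.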