This project is for educational purposes only. I completed it while following the Udacity Data Science Nanodegree.

In this project we analyze the interactions that users have with articles on the IBM Watson Studio platform, and make recommendations to them about new articles we think they will like.

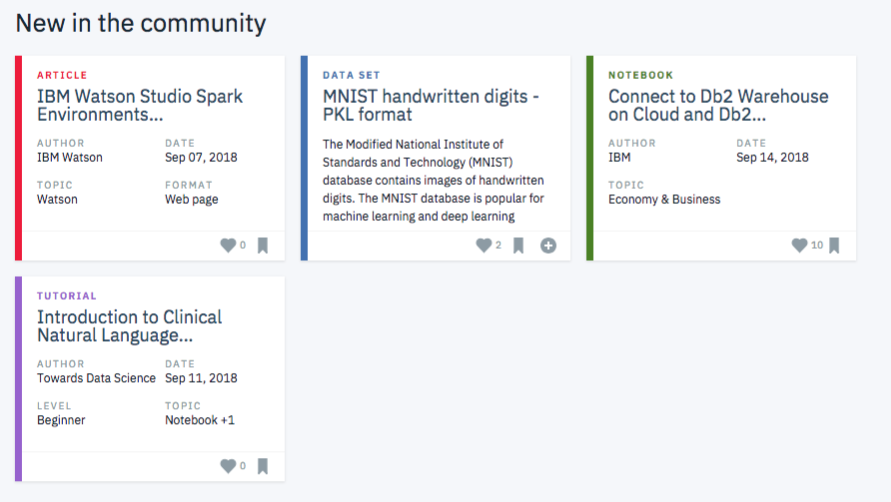

Here is an example of what the dashboard could look like displaying articles on the IBM Watson Platform:

Though the above dashboard is just showing the newest articles, we could imagine having a recommendation board available here that shows the articles that are most pertinent to a specific user.

The project is divided into several phases, and the notebook walks through each of them:

- Exploratory Data Analysis that processes message and category data from CSV files and loads them into a SQLite database.

- Rank Based Recommendations: find the most popular articles based purely on the number of interactions. Since there are no ratings for any of the articles, it is reasonable to assume that the articles with the most interactions are the most popular. These are the articles we might recommend to new users (or to anyone, depending on what we know about them).

- User-User Based Collaborative Filtering: look at users who are similar in terms of the items they have interacted with. The items one user has seen can then be recommended to similar users who have not seen them yet. This is a step towards more personal recommendations.

- Content Based Recommendations: use NLP to develop a content-based recommendation system.

- Matrix Factorization (SVD): a machine learning approach to building recommendations. Using the user-item interactions, we build a matrix decomposition, and from this decomposition we can get an idea of how well we can predict new articles an individual might interact with.
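The rank-based and user-user phases listed above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the notebook's actual code: the toy data, the column names `user_id` and `article_id`, and all function names are hypothetical.

```python
import pandas as pd

# Hypothetical interaction log: one row per (user, article) interaction.
# The column names are assumptions made for this sketch.
interactions = pd.DataFrame({
    "user_id":    [1, 1, 2, 2, 2, 3, 3, 4],
    "article_id": [10, 20, 10, 20, 30, 10, 40, 10],
})


def get_top_articles(df, n=3):
    """Rank-based: return the n article ids with the most interactions."""
    return df["article_id"].value_counts().head(n).index.tolist()


def create_user_item_matrix(df):
    """Binary matrix: 1 if the user interacted with the article, else 0."""
    counts = pd.crosstab(df["user_id"], df["article_id"])
    return (counts > 0).astype(int)


def articles_seen_by(user_id, user_item):
    """Return the article ids a user has interacted with."""
    row = user_item.loc[user_id]
    return row[row == 1].index.tolist()


def find_similar_users(user_id, user_item):
    """Order the other users by the number of articles shared with user_id."""
    similarity = user_item.dot(user_item.loc[user_id])
    similarity = similarity.drop(user_id)
    return similarity.sort_values(ascending=False).index.tolist()


def user_user_recs(user_id, user_item, m=5):
    """Recommend up to m unseen articles taken from the most similar users."""
    seen = set(articles_seen_by(user_id, user_item))
    recs = []
    for neighbor in find_similar_users(user_id, user_item):
        for article in articles_seen_by(neighbor, user_item):
            if article not in seen and article not in recs:
                recs.append(article)
            if len(recs) >= m:
                return recs
    return recs


user_item = create_user_item_matrix(interactions)
top_two = get_top_articles(interactions, n=2)
recs_for_user_1 = user_user_recs(1, user_item)
```

Similarity here is a plain dot product of binary rows, that is, the count of shared articles. Other measures (cosine similarity, for instance) would fit the same structure.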

The easiest way is to create a virtual environment with conda from the provided environment.yml file by running this command in a terminal (conda must be installed):

conda env create -f environment.yml

If you do not have conda or prefer not to use it, you can still set up your environment with pip install commands. Please refer to the environment.yml file to see which dependencies you will need to install.

This project requires Python 3.7 in addition to common data science packages (such as numpy, pandas, matplotlib).

For machine learning, this project uses the Singular Value Decomposition from the numpy package.
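As a rough sketch of how numpy's SVD can be applied to a user-item matrix, the example below decomposes a small binary matrix with `numpy.linalg.svd` and rebuilds it from a limited number of latent features. The toy matrix and the helper names `reconstruct` and `accuracy` are assumptions made for illustration, not the notebook's code.

```python
import numpy as np

# Hypothetical 4-user x 4-article binary interaction matrix (assumed data).
user_item = np.array([
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [1, 0, 0, 1],
    [1, 0, 0, 0],
], dtype=float)

# Decomposition: user_item == u @ diag(s) @ vt (up to floating-point error).
u, s, vt = np.linalg.svd(user_item, full_matrices=False)


def reconstruct(k):
    """Rebuild the matrix using only the k largest singular values."""
    return u[:, :k] @ np.diag(s[:k]) @ vt[:k, :]


def accuracy(k):
    """Share of cells predicted correctly after rounding to 0 or 1."""
    predicted = np.clip(np.round(reconstruct(k)), 0, 1)
    return float(np.mean(predicted == user_item))


# Reconstruction error for each number of latent features.
errors = [np.linalg.norm(user_item - reconstruct(k)) for k in range(1, len(s) + 1)]
```

Keeping fewer latent features gives a coarser approximation, and the reconstruction error shrinks as more are added. Plain SVD is usable here because a binary interaction matrix has no missing values.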

The project also relies on the following additional package:

- spacy: used for NLP tasks

Here is the structure of the project:

project

|__ assets (contains images displayed in notebooks or README)

|__ data (raw data)

|__ notebooks (contains all notebooks)

|__ srctests (python modules and scripts)