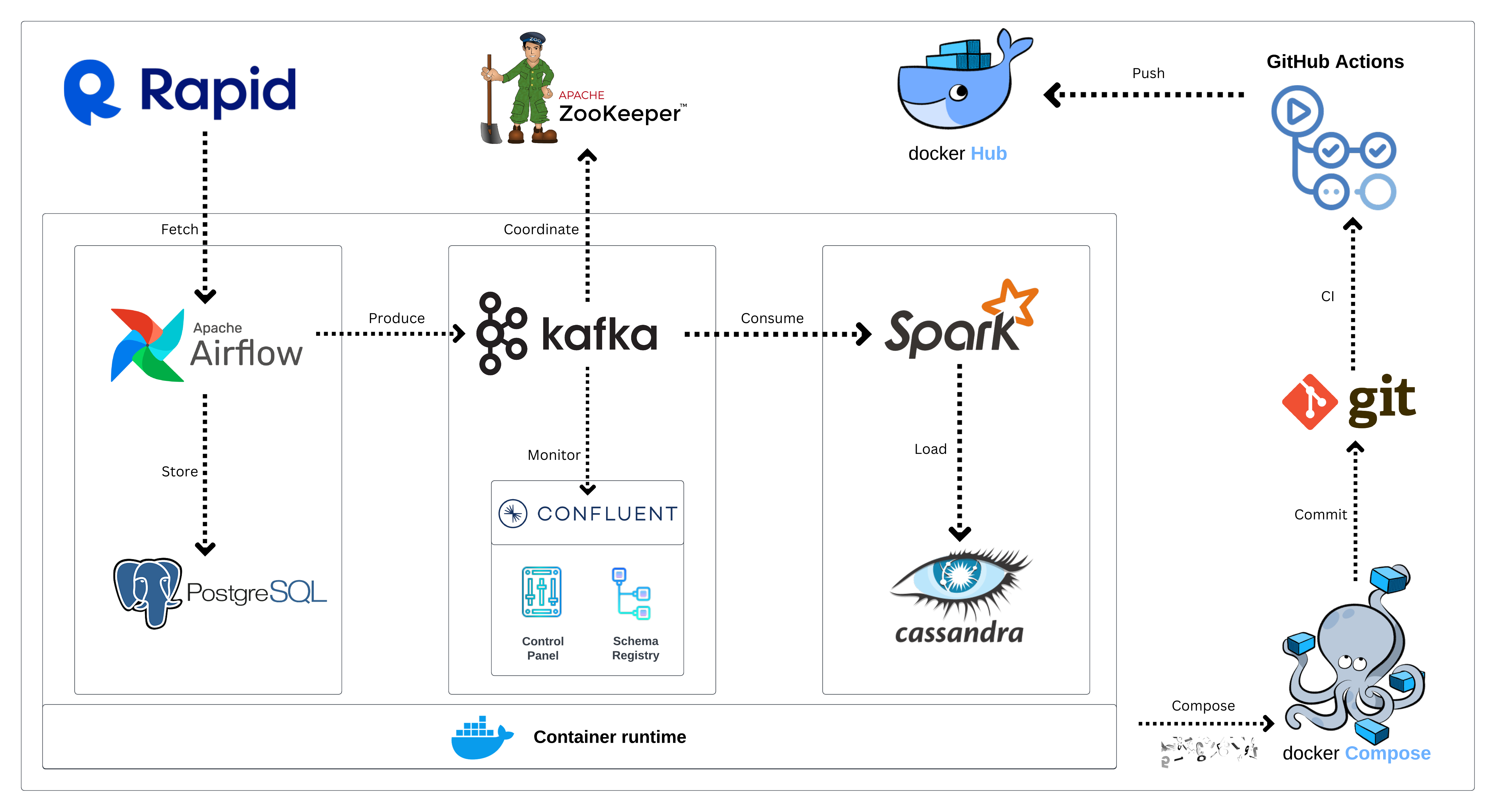

I used Airflow, PostgreSQL, Kafka, Spark, and Cassandra to build a fully automated ETL pipeline that runs entirely in containers. A GitHub Actions CI workflow automatically pushes the service's updated Docker image to Docker Hub.

- Clone the repository

git clone https://github.com/moontucer/Data-Streaming-Project/

- Go to the project folder

cd Data-Streaming-Project

- Build and start the environment with Docker Compose

docker-compose up
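
Newer Docker installations ship Compose as a plugin invoked as `docker compose`, while older ones provide the standalone `docker-compose` binary used above. The sketch below is one possible way to start the stack with whichever is installed. The helper names `compose_cmd` and `start_stack` are hypothetical and not part of this repository, and the script assumes it is run from the project folder.

```shell
#!/usr/bin/env sh
# Hypothetical helpers, not part of the repository.

# Print the Compose command available on this machine:
# the v2 plugin ("docker compose") or the standalone binary ("docker-compose").
compose_cmd() {
  if docker compose version >/dev/null 2>&1; then
    echo "docker compose"
  elif command -v docker-compose >/dev/null 2>&1; then
    echo "docker-compose"
  else
    return 1
  fi
}

# Start the services defined in the project's Compose file.
# Assumes the current directory is the cloned project folder.
start_stack() {
  cmd=$(compose_cmd) || { echo "Docker Compose not found" >&2; return 1; }
  # Left unquoted on purpose so "docker compose" splits into two words.
  $cmd up
}
```

After sourcing the script, calling `start_stack` from inside `Data-Streaming-Project` has the same effect as the `docker-compose up` step above.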

For a walkthrough of the project, see the accompanying Medium article: https://medium.com/@moontucer/data-streaming-project-real-time-end-to-end-data-pipeline-082f0d9cfbdb