This repository contains the code used in the paper "Image Segmentation Using Text and Image Prompts".

The paper has been accepted to CVPR 2022!

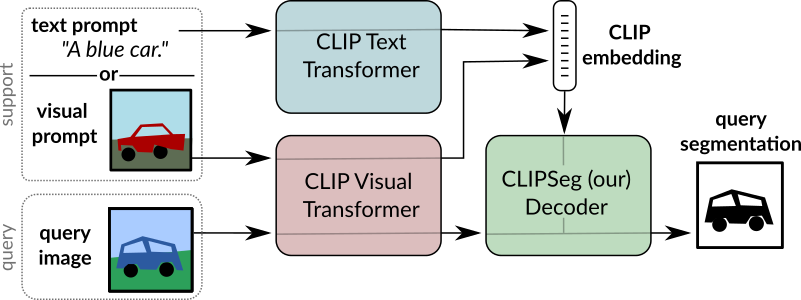

The system allows creating segmentation models without training, based on:

- An arbitrary text query

- Or an image with a mask highlighting stuff or an object.

In the `Quickstart.ipynb` notebook we provide the code for using a pre-trained CLIPSeg model. If you run the notebook locally, make sure you have downloaded the `rd64-uni.pth` weights, either manually or via the Git LFS extension.

It can also be used interactively using MyBinder (please note that the VM does not use a GPU, so inference takes a few seconds).
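Assuming the model returns per-pixel logits (one prediction map per prompt), a binary segmentation mask can be obtained by applying a sigmoid and a threshold. The minimal, dependency-free sketch below illustrates that post-processing step on a plain 2D list. The helper names and the 0.5 threshold are our own assumptions, not part of the CLIPSeg code; with real model output you would work on a PyTorch tensor instead (e.g. `torch.sigmoid(pred) > 0.5`).

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def logits_to_mask(logits: List[List[float]], threshold: float = 0.5) -> List[List[int]]:
    """Hypothetical helper: map a 2D grid of logits to a 0/1 mask."""
    return [[1 if sigmoid(v) >= threshold else 0 for v in row] for row in logits]


if __name__ == "__main__":
    example = [[-3.0, 0.2], [1.5, -0.1]]
    print(logits_to_mask(example))  # [[0, 1], [1, 0]]
```

Lowering the threshold yields larger masks at the cost of more false positives; the right value depends on the prompt and the data.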

This code base depends on PyTorch, torchvision and CLIP (`pip install git+https://github.com/openai/CLIP.git`).
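Before running the notebook or the scripts, it can help to check that these packages are importable. The sketch below is our own helper, not part of the repository; it only checks that the import names `torch`, `torchvision` and `clip` resolve on the current Python path and does not verify versions.

```python
import importlib.util
from typing import Iterable, List

# Import names of the dependencies listed above; the CLIP package
# installed from GitHub is imported as `clip`.
REQUIRED_MODULES = ("torch", "torchvision", "clip")


def missing_modules(names: Iterable[str] = REQUIRED_MODULES) -> List[str]:
    """Return the top-level module names that cannot be found (nothing is imported)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing dependencies:", ", ".join(missing))
    else:
        print("All listed dependencies are importable.")
```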

Additional dependencies are hidden for double blind review.

Datasets:

- PhraseCut and PhraseCutPlus: Referring expression dataset

- PFEPascalWrapper: Wrapper class for PFENet's Pascal-5i implementation

- PascalZeroShot: Wrapper class for PascalZeroShot

- COCOWrapper: Wrapper class for COCO

Models:

- CLIPDensePredT: CLIPSeg model with transformer-based decoder.

- ViTDensePredT: CLIPSeg model with transformer-based decoder.

Some of the datasets require third-party dependencies. Run the following commands in the `third_party` folder:

git clone https://github.com/cvlab-yonsei/JoEm

git clone https://github.com/Jia-Research-Lab/PFENet.git

git clone https://github.com/ChenyunWu/PhraseCutDataset.git

git clone https://github.com/juhongm999/hsnet.git

The MIT license does not apply to the following model weights:

- CLIPSeg-D64 (4.1MB, without CLIP weights)

- CLIPSeg-D16 (1.1MB, without CLIP weights)

If the Git LFS download from GitHub does not work, please use this link to our cloud storage to obtain the weights.
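A common symptom of a failed LFS download is that the weights file is only a small text "pointer" instead of the actual binary. Git LFS pointer files start with the line `version https://git-lfs.github.com/spec/v1`, so a quick check is possible. The sketch below is a hypothetical helper; the location `weights/rd64-uni.pth` is an assumption, so adjust it to where the file lives in your checkout.

```python
from pathlib import Path

# First line of every Git LFS pointer file (Git LFS pointer spec, v1).
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path: str) -> bool:
    """Return True if `path` contains an LFS pointer instead of the real file."""
    with open(path, "rb") as f:
        head = f.read(len(LFS_POINTER_PREFIX))
    return head == LFS_POINTER_PREFIX


if __name__ == "__main__":
    weights = Path("weights/rd64-uni.pth")  # assumed location, adjust as needed
    if not weights.exists():
        print(f"{weights} not found")
    elif is_lfs_pointer(str(weights)):
        print(f"{weights} is an LFS pointer; fetch the real file (e.g. `git lfs pull`)")
    else:
        print(f"{weights} looks like a real weights file ({weights.stat().st_size} bytes)")
```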

To train, use the `training.py` script with the experiment file and the experiment id as parameters. For example, `python training.py phrasecut.yaml 0` will train the first PhraseCut experiment, which is defined by the `configuration` parameters and the first entry of `individual_configurations`. Model weights will be written to `logs/`.
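Reading the description above, an experiment id selects one entry of `individual_configurations`, which is combined with the shared `configuration` block. The sketch below assumes that keys of the individual entry override those of the shared block; the helper name and the example keys (`lr`, `batch_size`, `name`) are hypothetical. It works on an already-parsed dict rather than reading YAML (PyYAML's `yaml.safe_load` would produce such a dict from the experiment file).

```python
from typing import Any, Dict


def experiment_config(experiment: Dict[str, Any], experiment_id: int) -> Dict[str, Any]:
    """Hypothetical sketch: shared settings, overridden by one individual configuration."""
    shared = experiment.get("configuration", {})
    individual = experiment["individual_configurations"][experiment_id]
    return {**shared, **individual}  # later keys win


if __name__ == "__main__":
    # Stand-in for a parsed experiment file; the inner keys are made up.
    phrasecut = {
        "configuration": {"lr": 0.001, "batch_size": 64},
        "individual_configurations": [{"name": "run-a"}, {"name": "run-b", "lr": 0.0001}],
    }
    print(experiment_config(phrasecut, 0))
```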

For evaluation, use `score.py`. For example, `python score.py phrasecut.yaml 0 0` will evaluate the first PhraseCut experiment of `test_configuration` and the first configuration in `individual_configurations`.
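The two indices of `score.py` pick a test setting and a model configuration. Following the order in which the sentence above names them, the sketch below assumes the first index selects an entry of `test_configuration` and the second an entry of `individual_configurations`, and that both are lists. The helper names and example keys are hypothetical and not taken from the actual script.

```python
from typing import Any, Dict, List, Tuple


def parse_score_args(argv: List[str]) -> Tuple[str, int, int]:
    """Split `score.py <experiment file> <test id> <model id>` (assumed order)."""
    if len(argv) != 4:
        raise ValueError("usage: score.py <experiment.yaml> <test_id> <model_id>")
    return argv[1], int(argv[2]), int(argv[3])


def evaluation_setup(experiment: Dict[str, Any], test_id: int, model_id: int) -> Dict[str, Any]:
    """Pair one test configuration with one trained-model configuration (hypothetical)."""
    return {
        "test": experiment["test_configuration"][test_id],
        "model": experiment["individual_configurations"][model_id],
    }


if __name__ == "__main__":
    name, test_id, model_id = parse_score_args(["score.py", "phrasecut.yaml", "0", "0"])
    # Stand-in for a parsed experiment file; the inner keys are made up.
    experiment = {
        "test_configuration": [{"split": "test"}],
        "individual_configurations": [{"name": "run-a"}],
    }
    print(name, evaluation_setup(experiment, test_id, model_id))
```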

In order to use the dataset and model wrappers for PFENet, the PFENet repository needs to be cloned to the root folder.

git clone https://github.com/Jia-Research-Lab/PFENet.git
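The clone steps can also be scripted. The sketch below uses only the repository URLs given in this README; the helper names, the skip-if-already-cloned behaviour and the dry-run default are our own assumptions. It targets `third_party/` by default, and the same helper can target the repository root for the PFENet wrappers.

```python
import subprocess
from pathlib import Path
from typing import List

# Repositories listed in this README for the third_party folder.
THIRD_PARTY_REPOS = [
    "https://github.com/cvlab-yonsei/JoEm",
    "https://github.com/Jia-Research-Lab/PFENet.git",
    "https://github.com/ChenyunWu/PhraseCutDataset.git",
    "https://github.com/juhongm999/hsnet.git",
]


def clone_commands(repos: List[str], target_dir: str) -> List[List[str]]:
    """Build one `git clone <url> <dest>` command per repository not yet present."""
    commands: List[List[str]] = []
    for url in repos:
        # Default checkout name: last URL component without a trailing ".git".
        name = url.rstrip("/").split("/")[-1]
        if name.endswith(".git"):
            name = name[: -len(".git")]
        dest = Path(target_dir) / name
        if not dest.exists():  # skip repositories that are already cloned
            commands.append(["git", "clone", url, str(dest)])
    return commands


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False (needs git on PATH)."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(clone_commands(THIRD_PARTY_REPOS, "third_party"))
```

For example, `run(clone_commands(["https://github.com/Jia-Research-Lab/PFENet.git"], "."), dry_run=False)` would clone PFENet into the root folder.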

The source code files in this repository (excluding model weights) are released under the MIT license.

@InProceedings{lueddecke22_cvpr,

author = {L\"uddecke, Timo and Ecker, Alexander},

title = {Image Segmentation Using Text and Image Prompts},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {7086-7096}

}