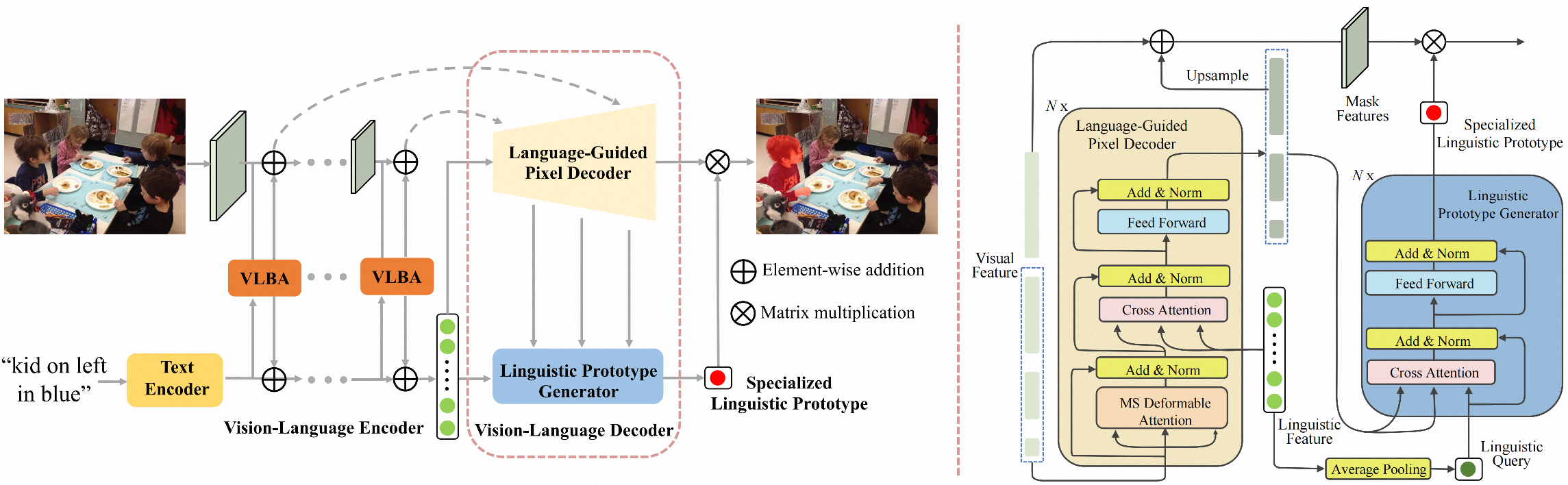

This is the official repository for the paper: "Linguistic Query-Guided Mask Generation for Referring Image Segmentation".

2023/05/26: Code is available.


Clone the repository:

    git clone https://github.com/ZhichaoWei/LGFormer.git


Navigate to the project directory:

    cd LGFormer

Install the dependencies:

    conda env create -f environment.yaml
    conda activate lgformer

Hint: Instead of using the conda packages, you can also download the pre-built Docker image, load it, and start a container:

    docker load < lgformer_pre_build.tar
    docker run -it --gpus all --name lgformer_inst --shm-size 16G lgformer_pre_build:v0.1 /bin/bash

Compile the CUDA operators for deformable attention:

    cd lib/ops
    sh make.sh
    cd ../..
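
After compiling, a quick sanity check can confirm that PyTorch sees a GPU and that the compiled operator can be imported. This is a minimal sketch, assuming the build registers a Python module named `MultiScaleDeformableAttention`, the name used by the Deformable DETR operators this code builds on; that module name is an assumption, so adjust `ext_name` if `lib/ops` produces a different one. The helpers `try_import` and `check_environment` are hypothetical and not part of the repository.

```python
import importlib
from types import ModuleType
from typing import Dict, Optional


def try_import(name: str) -> Optional[ModuleType]:
    """Import module `name`; return None if it is missing or fails to load."""
    try:
        return importlib.import_module(name)
    except ImportError:
        return None


def check_environment(ext_name: str = "MultiScaleDeformableAttention") -> Dict[str, bool]:
    """Report whether PyTorch, CUDA and the compiled extension are usable.

    `ext_name` is an assumption taken from Deformable DETR's ops; the module
    built by lib/ops/make.sh may be named differently.
    """
    # Import torch first: compiled PyTorch extensions need its shared
    # libraries loaded before they can be imported themselves.
    torch = try_import("torch")
    has_cuda = bool(torch is not None and torch.cuda.is_available())
    return {
        "torch": torch is not None,
        "cuda": has_cuda,
        "extension": try_import(ext_name) is not None,
    }


if __name__ == "__main__":
    for component, ok in check_environment().items():
        print(f"{component:<10} {'OK' if ok else 'MISSING'}")
```

Run it inside the activated `lgformer` environment (or the Docker container); any `MISSING` entry points at the step to revisit.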

Prepare the datasets following LAVT. They should be organized as follows:

    datasets/
    ├── images/
    │   ├── ...
    │   ├── mscoco/
    │   └── saiapr_tc-12/
    ├── refcoco/
    │   ├── instances.json
    │   ├── refs(google).p
    │   └── refs(unc).p
    ├── refcoco+/
    │   ├── instances.json
    │   └── refs(unc).p
    ├── refcocog/
    │   ├── instances.json
    │   ├── refs(google).p
    │   └── refs(umd).p
    └── refclef/
        ├── instances.json
        ├── refs(berkeley).p
        └── refs(unc).p
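
Before training or evaluating, it can help to check that every expected file is in place. The sketch below is a hypothetical helper, not part of the repository; its file lists are transcribed from the layout above, and it assumes `mscoco/` and `saiapr_tc-12/` sit directly under `datasets/images/`.

```python
from pathlib import Path
from typing import List

# Expected annotation files per dataset, transcribed from the layout above.
EXPECTED_FILES = {
    "refcoco": ["instances.json", "refs(google).p", "refs(unc).p"],
    "refcoco+": ["instances.json", "refs(unc).p"],
    "refcocog": ["instances.json", "refs(google).p", "refs(umd).p"],
    "refclef": ["instances.json", "refs(berkeley).p", "refs(unc).p"],
}
# Image folders assumed to sit directly under datasets/images/.
EXPECTED_IMAGE_DIRS = ["mscoco", "saiapr_tc-12"]


def missing_paths(root: str = "datasets") -> List[str]:
    """Return every expected path that does not exist under `root`."""
    base = Path(root)
    expected = [base / "images" / name for name in EXPECTED_IMAGE_DIRS]
    for dataset, files in EXPECTED_FILES.items():
        expected.extend(base / dataset / name for name in files)
    return [str(path) for path in expected if not path.exists()]


if __name__ == "__main__":
    missing = missing_paths()
    if missing:
        print("Missing:")
        for path in missing:
            print("  " + path)
    else:
        print("Dataset layout looks complete.")
```

Run it from the repository root. It only checks that the paths exist, not that the files are complete or uncorrupted.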


Create the directory where the pre-trained backbone weights will be saved:

    mkdir ./pretrained_weights


Download the pre-trained Swin Transformer weights and put them in `./pretrained_weights`:

| Swin tiny | Swin small | Swin base | Swin large |
|---|---|---|---|
| weights | weights | weights | weights |


Our pre-trained model weights on each dataset:

| ReferIt | RefCOCO | RefCOCO+ | RefCOCOg |
|---|---|---|---|
| weights | weights | weights | weights |

Evaluate our pre-trained model on a chosen split of a dataset (for example, the testA split of RefCOCO+):

    # 1. Download our pre-trained weights on RefCOCO+ and put them at checkpoints/model_refcoco+.pth.
    # 2. Set the argument --split in scripts/test_scripts/test_refcoco+.sh to testA.
    # 3. Run the evaluation.
    sh scripts/test_scripts/test_refcoco+.sh
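
The second evaluation step, editing `--split` in the test script, can also be scripted. This is a hypothetical helper, not part of the repository; it assumes the script passes the split on a single line as `--split <name>` or `--split=<name>`, so check the script before relying on it. Flags that merely start with `--split` (such as a `--splitBy` option, if present) are left untouched.

```python
import re
import sys
from pathlib import Path

# Matches "--split val" or "--split=val". Group 1 keeps the flag and its
# separator; the value stops at whitespace or a line-continuation backslash.
SPLIT_ARG = re.compile(r"(--split(?:=|[ \t]+))([^\s\\]+)")


def set_split(script_text: str, split: str) -> str:
    """Return `script_text` with the value of every --split argument replaced."""
    # A function replacement avoids re-interpreting backslashes in `split`.
    new_text, count = SPLIT_ARG.subn(lambda m: m.group(1) + split, script_text)
    if count == 0:
        raise ValueError("no --split argument found in the script")
    return new_text


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python set_split.py scripts/test_scripts/test_refcoco+.sh testA
    script = Path(sys.argv[1])
    script.write_text(set_split(script.read_text(), sys.argv[2]))
```

Raising an error when no `--split` is found, rather than silently leaving the file unchanged, makes a mismatch with the real script's argument format obvious.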


Train the model on a chosen dataset (for example, RefCOCOg):

    sh scripts/train_scripts/train_refcocog.sh


To run the pre-trained model on an arbitrary image-text pair, use the script `inference.py`.

If you find our work useful in your research, please cite it:

    @misc{wei2023linguistic,
      title={Linguistic Query-Guided Mask Generation for Referring Image Segmentation},
      author={Zhichao Wei and Xiaohao Chen and Mingqiang Chen and Siyu Zhu},
      year={2023},
      eprint={2301.06429},
      archivePrefix={arXiv},
    }

This code is largely based on LAVT, Mask2Former, and Deformable DETR.

Thanks for all these wonderful open-source projects!