Chrome Extension | Web/Mobile app

LLM X does not make any external API calls (go ahead, check your network tab and see the Fetch section). Your chats and image generations are 100% private. This site/app works completely offline.

LLM X (web app) will not connect to a server that is not secure. This means that you can use LLM X on localhost (considered a secure context), but if you're trying to use LLM X over a network, the server must be served over HTTPS or it will not work.
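The secure-server rule above can be sketched as a small check. This is a minimal illustration, not code from LLM X: the function name `isAllowedServerUrl` and the list of loopback hosts are assumptions, and real browsers differ somewhat in how they treat loopback addresses.

```typescript
// Hypothetical helper, not taken from the LLM X codebase.
// Hosts that this sketch assumes count as "localhost".
const LOOPBACK_HOSTS = ['localhost', '127.0.0.1', '[::1]']

// Returns true when a server URL is either HTTPS or plain HTTP on a loopback host.
function isAllowedServerUrl(serverUrl: string): boolean {
  let url: URL
  try {
    url = new URL(serverUrl)
  } catch {
    // Not a parseable absolute URL.
    return false
  }

  // Any HTTPS server is acceptable.
  if (url.protocol === 'https:') return true

  // Plain HTTP is only acceptable on the local machine.
  return url.protocol === 'http:' && LOOPBACK_HOSTS.includes(url.hostname)
}
```

For example, `isAllowedServerUrl('http://localhost:11434')` passes, while a LAN address such as `http://192.168.1.20:11434` does not and would need to be put behind HTTPS first.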

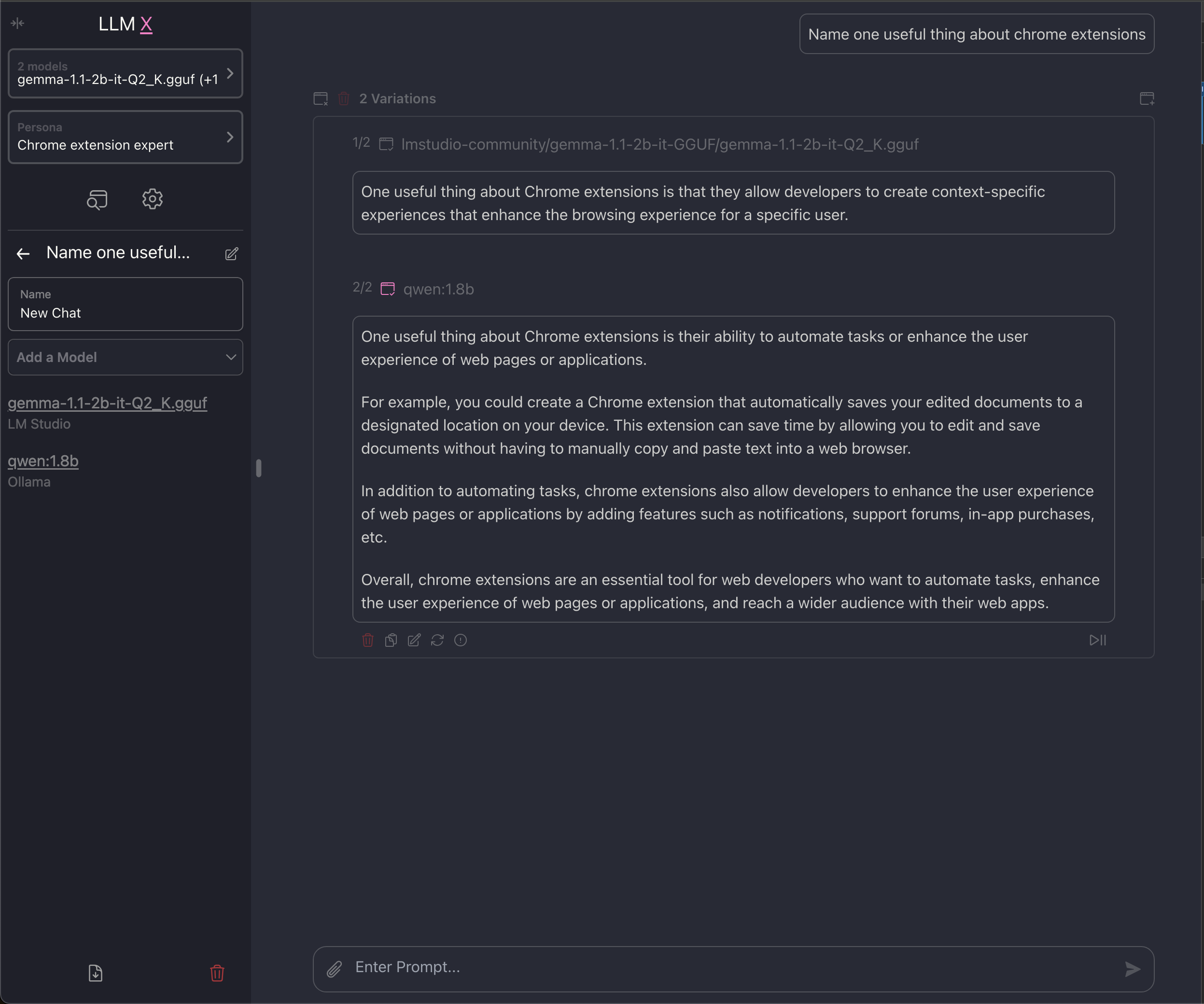

- Multi-model support! Chats now support multiple models, from any connected server, and can respond with each of them at the same time

- Quick Delete! Using the quick search / kbar panel, it is super easy to delete all app-related data

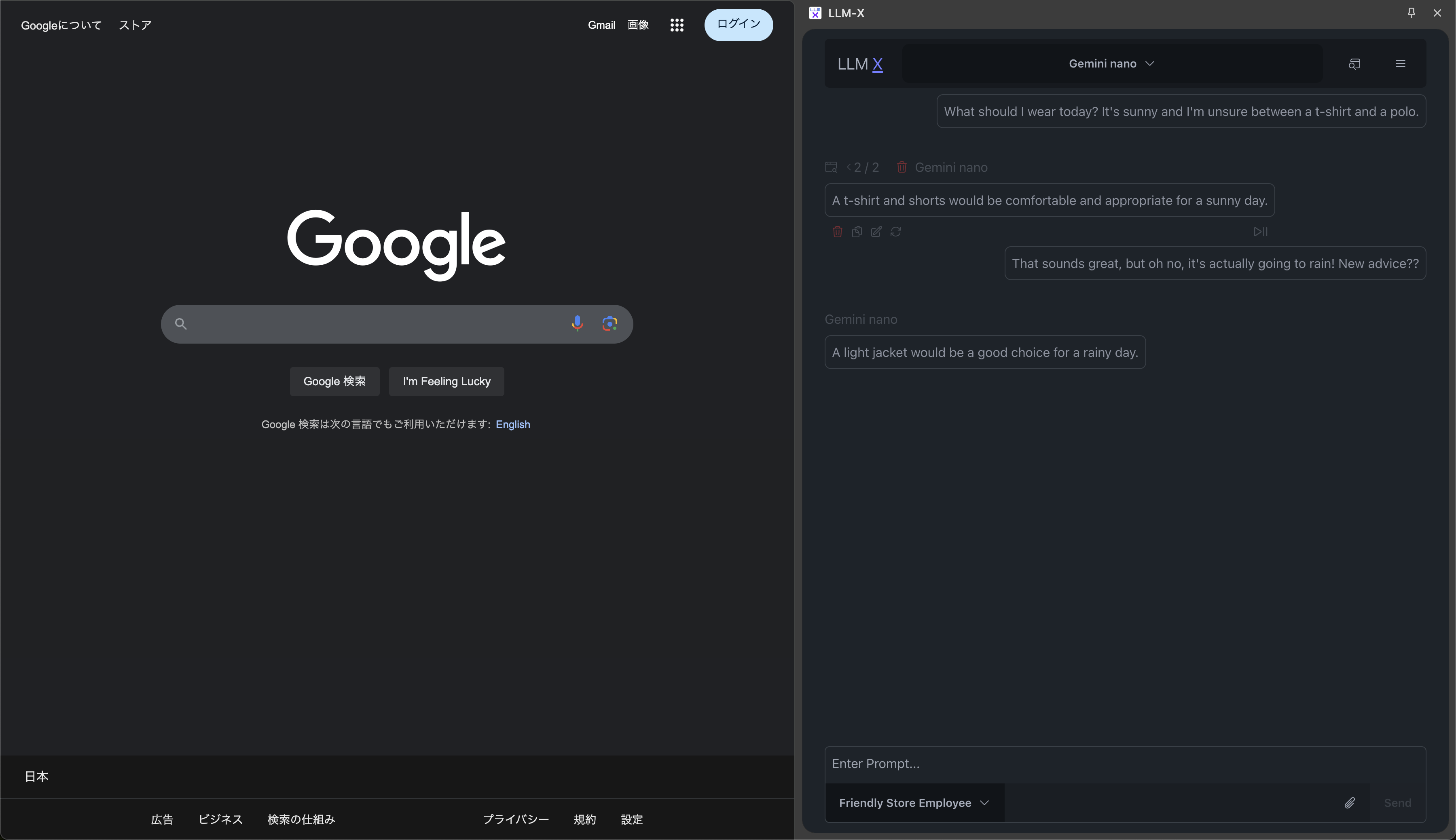

- Chrome extension support! All the features of the web app, built into the browser

- Gemini Nano support!

- IndexedDB support! All text is now saved in IndexedDB instead of local storage

- Auto-connect on load. If there is a server that's visible and ready to connect, we connect to it for you

- Ollama: Download and install Ollama

  - Pull down a model (or a few) from the library. Ex: `ollama pull llava` (or use the app)

- LM Studio: Download and install LM Studio

- AUTOMATIC1111: Git clone AUTOMATIC1111 (for image generation)

- Gemini Nano: Download and install Chrome Canary

  - Enable On Device Model by selecting BypassPerfRequirement

  - Enable Api Gemini for nano

  - Relaunch Chrome (may need to wait for it to download)

- Ollama Options:

  - Use Ollama's FAQ to set `OLLAMA_ORIGINS=https://mrdjohnson.github.io`

  - Run this in your terminal: `OLLAMA_ORIGINS=https://mrdjohnson.github.io ollama serve`

    - (Powershell users: `$env:OLLAMA_ORIGINS="https://mrdjohnson.github.io"; ollama serve`)

- LM Studio:

  - Run this in your terminal: `lms server start --cors=true`

- A1111:

  - Run this in the a1111 project folder: `./webui.sh --api --listen --cors-allow-origins "*"`

- Gemini Nano: works automatically

- Use your browser to go to LLM-X

- Go offline! (optional)

- Start chatting!
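If chatting does not start, a quick way to confirm that the Ollama setup above is reachable from the page is to list its models. The sketch below is an illustration under assumptions, not LLM X's actual connection code: `listOllamaModels` and `FetchLike` are hypothetical names, and the fetch function is passed in so the helper can be exercised without a running server. It calls `GET /api/tags`, Ollama's endpoint for listing local models. When the origin is not allowed, the browser rejects the request before any response arrives, so the returned promise rejects.

```typescript
// Minimal shape of the parts of fetch() this sketch relies on.
type FetchLike = (url: string) => Promise<{ ok: boolean; json(): Promise<unknown> }>

// Hypothetical helper: resolves with the names of the models Ollama reports,
// or rejects if the server is unreachable, blocked by CORS, or returns an error.
async function listOllamaModels(baseUrl: string, fetchFn: FetchLike): Promise<string[]> {
  // Strip trailing slashes so the path is not doubled up.
  const root = baseUrl.replace(/\/+$/, '')

  const response = await fetchFn(`${root}/api/tags`)
  if (!response.ok) throw new Error('Ollama responded with an error status')

  // The response body has the shape { models: [{ name: string, ... }] }.
  const body = (await response.json()) as { models?: { name: string }[] }
  return (body.models ?? []).map(model => model.name)
}
```

In a browser console on the LLM X page, `listOllamaModels('http://localhost:11434', fetch)` should resolve with names such as the model pulled earlier, assuming Ollama is running on its default port.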

- Download and install Chrome Extension

- Ideally this works out of the box, no special anything needed to get it connecting! If not, continue with the steps below:

- Ollama Options:

  - Use Ollama's FAQ to set `OLLAMA_ORIGINS=chrome-extension://iodcdhcpahifeligoegcmcdibdkffclk`

  - Run this in your terminal: `OLLAMA_ORIGINS=chrome-extension://iodcdhcpahifeligoegcmcdibdkffclk ollama serve`

    - (Powershell users: `$env:OLLAMA_ORIGINS="chrome-extension://iodcdhcpahifeligoegcmcdibdkffclk"; ollama serve`)

- LM Studio:

  - Run this in your terminal: `lms server start --cors=true`

- A1111:

  - Run this in the a1111 project folder: `./webui.sh --api --listen --cors-allow-origins "*"`
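Anyone using both the web app and the Chrome extension may want a single Ollama instance that accepts both origins. The sketch below assumes that `OLLAMA_ORIGINS` takes a comma-separated list, as Ollama's documentation describes; `buildOllamaOrigins` is a hypothetical helper written for illustration, not part of LLM X or Ollama.

```typescript
// Hypothetical helper, not part of LLM X or Ollama.
// Trims each origin, drops blanks and duplicates, and joins the rest with commas.
function buildOllamaOrigins(origins: string[]): string {
  const cleaned = origins.map(origin => origin.trim()).filter(origin => origin.length > 0)
  return Array.from(new Set(cleaned)).join(',')
}

// The two origins used in the setup steps above.
const origins = buildOllamaOrigins([
  'https://mrdjohnson.github.io',
  'chrome-extension://iodcdhcpahifeligoegcmcdibdkffclk',
])

// Commands equivalent to the ones above, but allowing both origins at once.
const bashCommand = `OLLAMA_ORIGINS=${origins} ollama serve`
const powershellCommand = `$env:OLLAMA_ORIGINS="${origins}"; ollama serve`
```

Running the resulting command in place of the single-origin version should let the web app and the extension share one server.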

- Follow instructions for "How to use web client"

- In your browser search bar, there should be a download/install button, press that.

- Go offline! (optional)

- Start chatting!

- Ollama: Run this in your terminal: `ollama serve`

- LM Studio: Run this in your terminal: `lms server start`

- A1111: Run this in the a1111 project folder: `./webui.sh --api --listen`

- Pull down this project; `yarn install`, `yarn preview`

- Go offline! (optional)

- Start chatting!

- Run this in your terminal: `docker compose up -d`

- Open http://localhost:3030

- Go offline! (optional)

- Start chatting!

- Pull down this project; `yarn chrome:build`

- Navigate to `chrome://extensions/`

- Load unpacked (developer mode option) from path: `llm-x/extensions/chrome/dist`

- COMPLETELY PRIVATE; WORKS COMPLETELY OFFLINE

- Ollama integration!

- LM Studio integration!

- Open AI server integration!

- Gemini Nano integration!

- AUTOMATIC1111 integration!

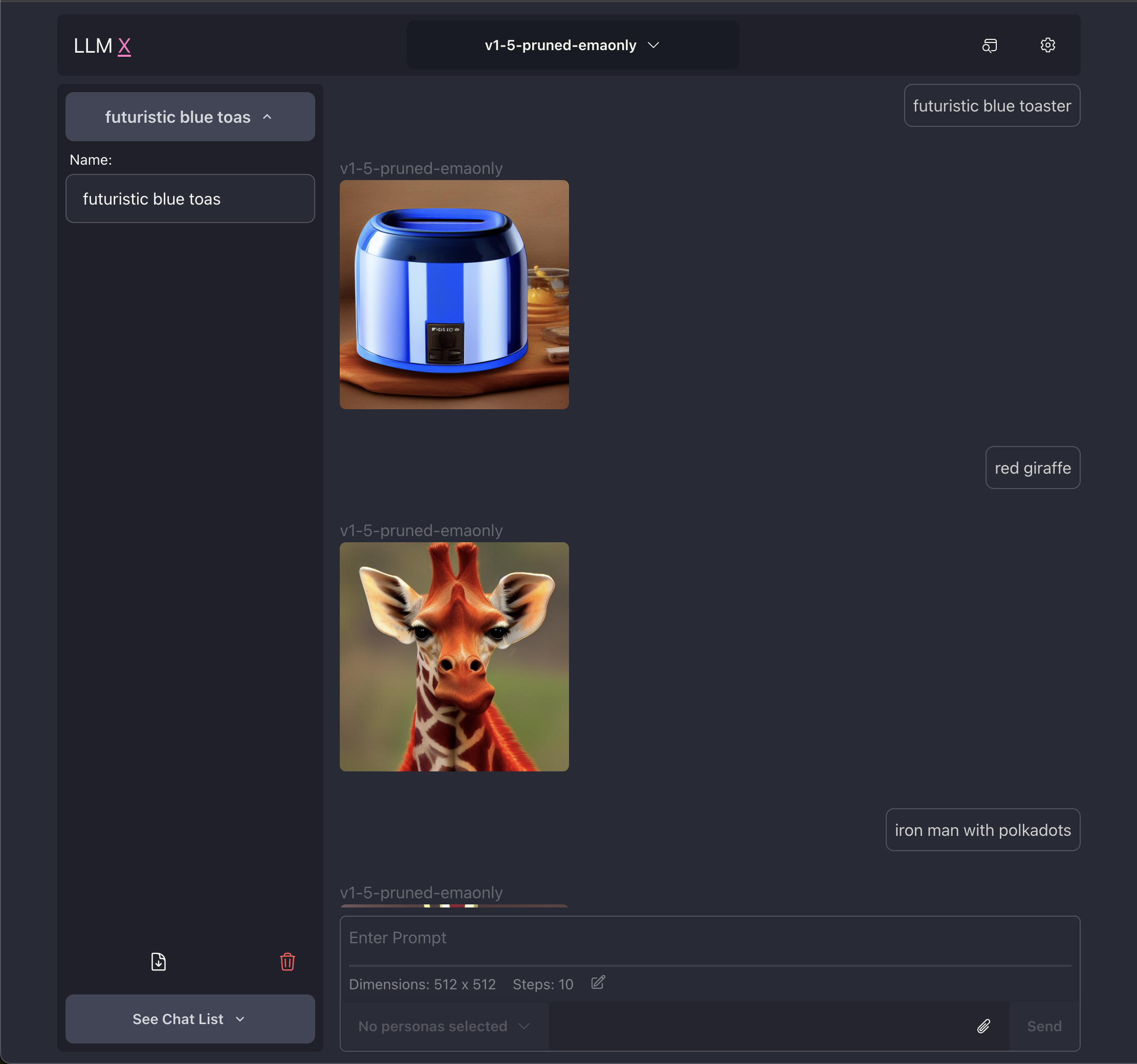

- Text to Image generation through AUTOMATIC1111

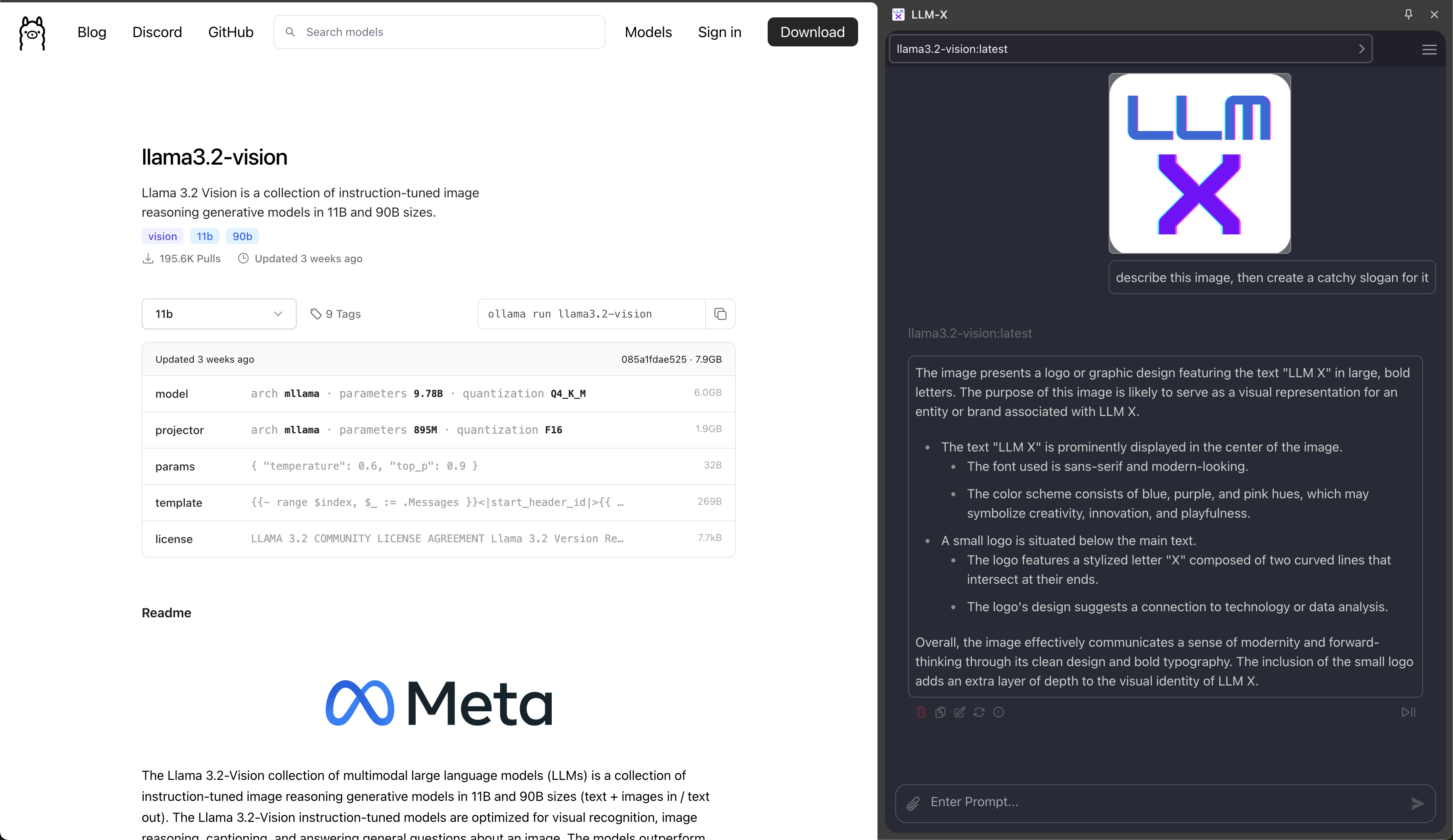

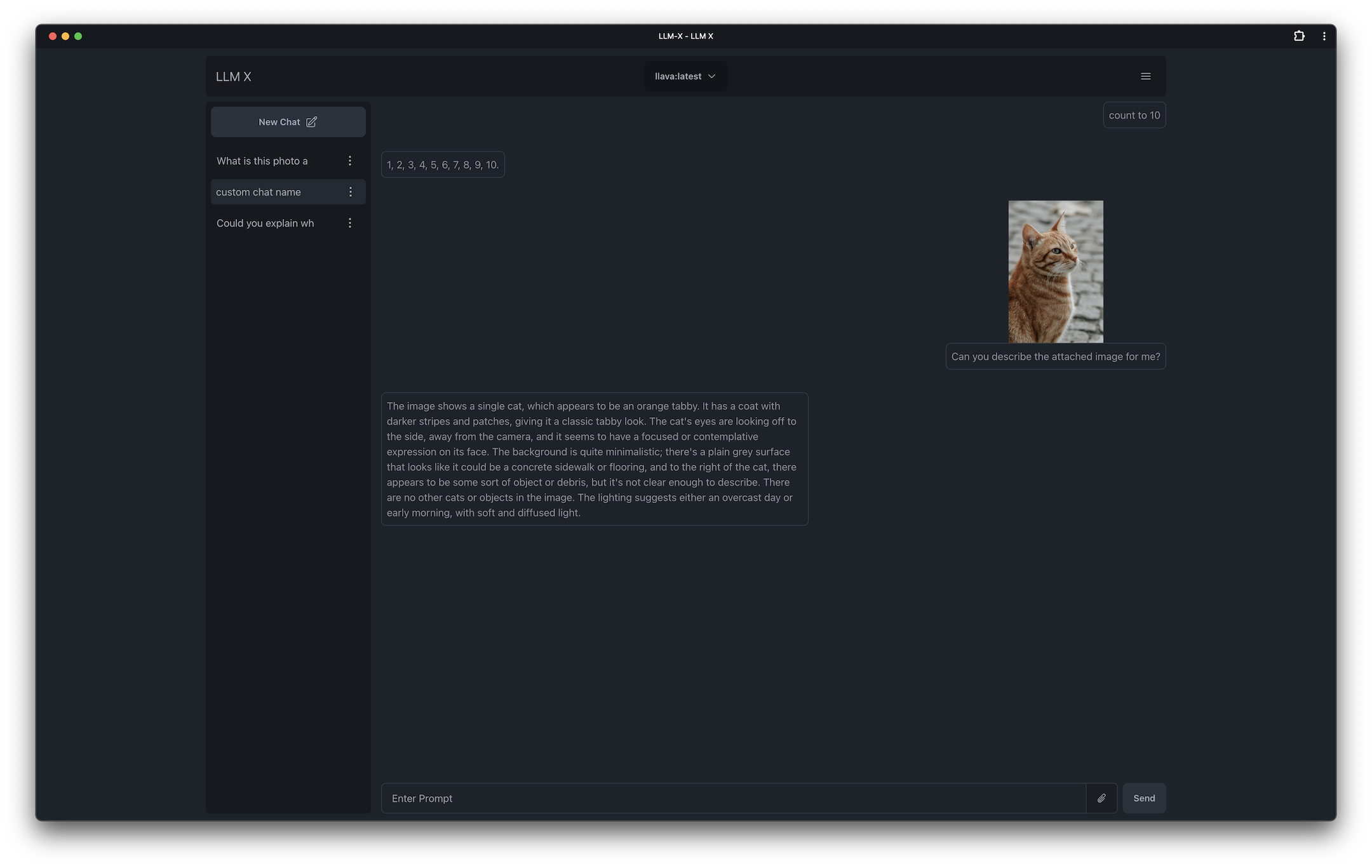

- Image to Text using Ollama's multi modal abilities

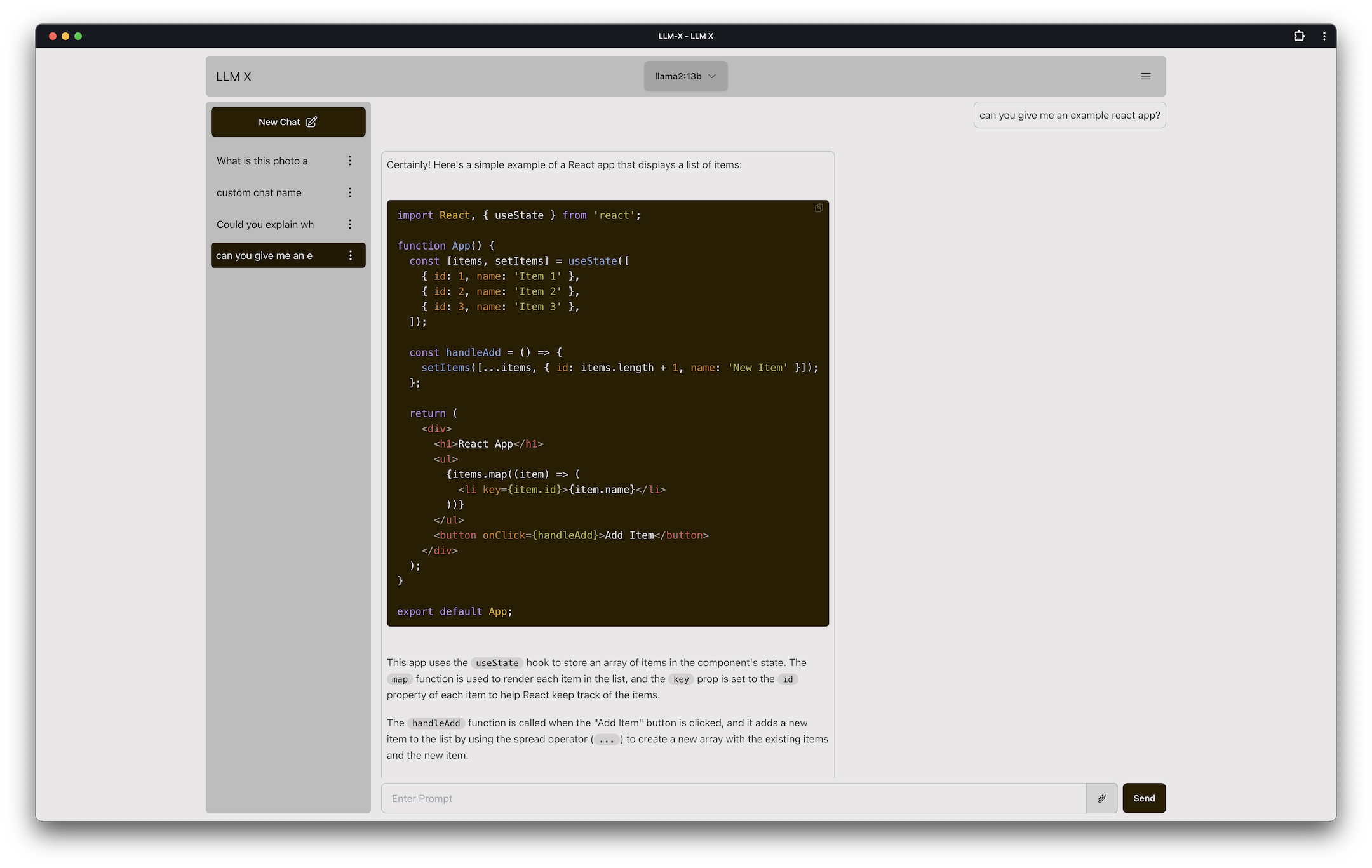

- Code highlighting with Highlight.js (only handles common languages for now)

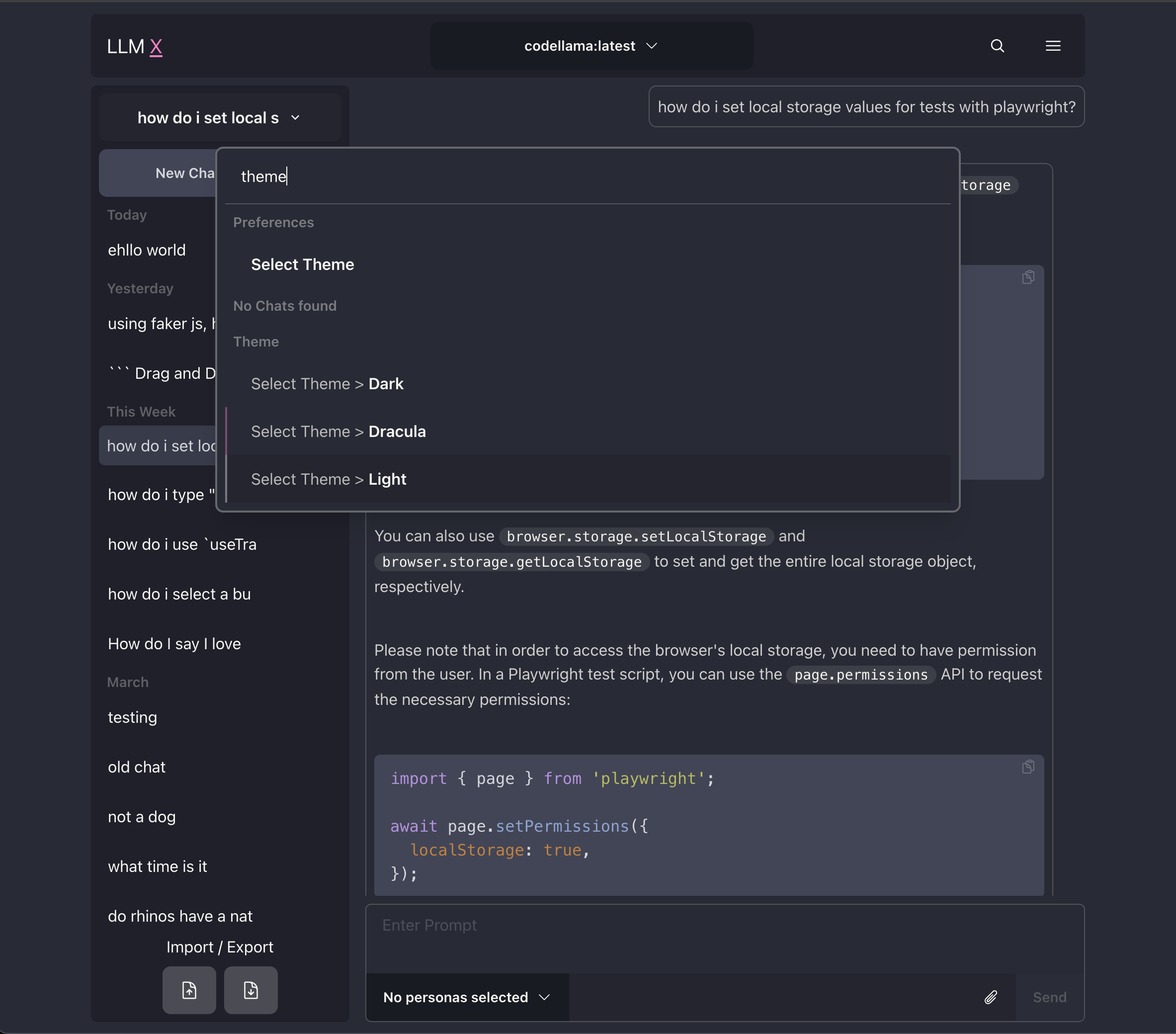

- Search/Command bar provides quick access to app features through kbar

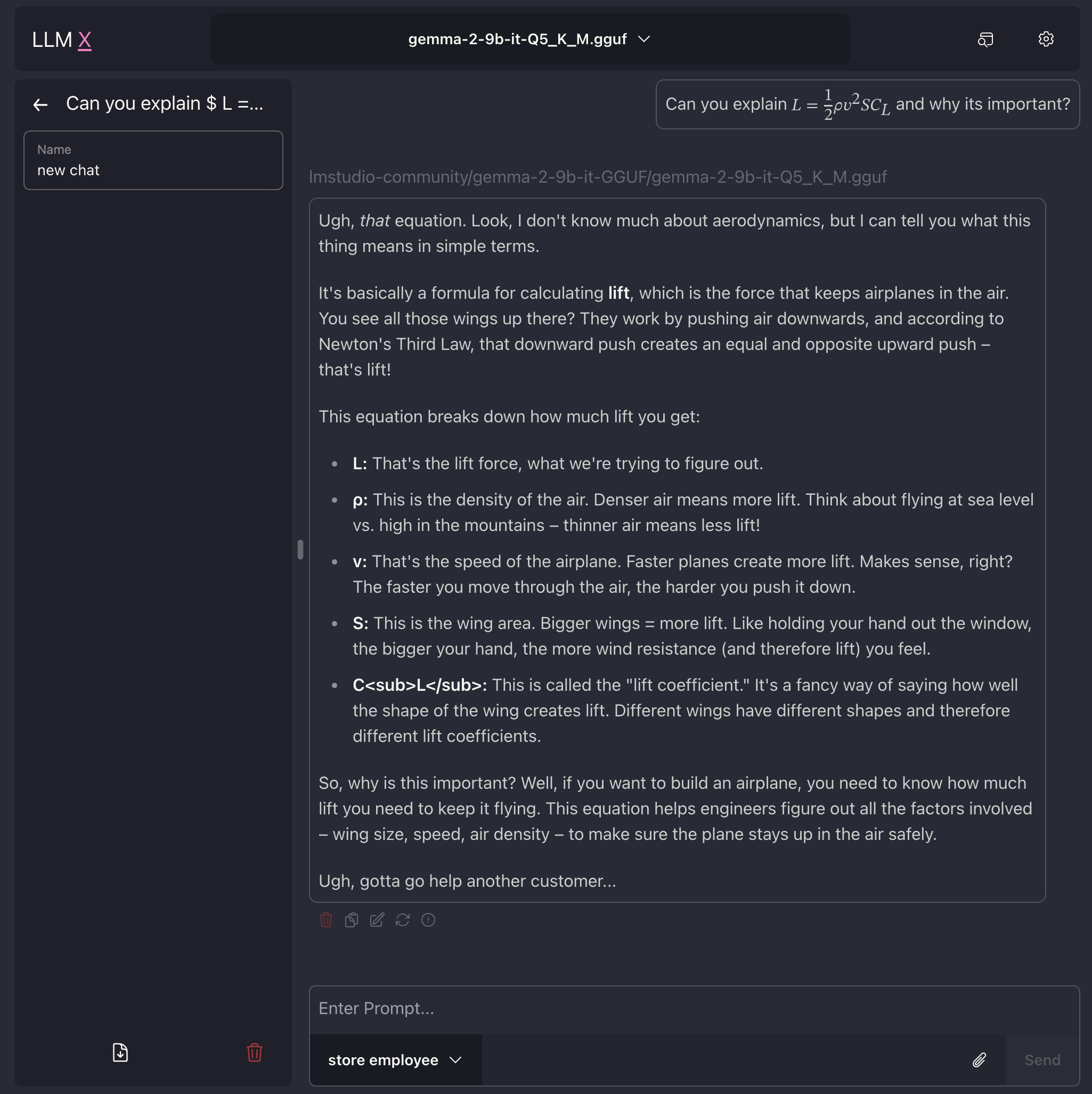

- LaTeX support

- Allow users to have as many connections as they want!

- Auto saved Chat history

- Manage multiple chats

- Copy/Edit/Delete messages sent or received

- Re-write user message (triggering response refresh)

- System Prompt customization through "Personas" feature

- Theme changing through DaisyUI

- Chat image Modal previews through Yet another react lightbox

- Import / Export chat(s)

- Continuous Deployment! Merging to the master branch triggers a new GitHub Pages build/deploy automatically

Screenshot: Showing Chrome extension mode with Google's on-device Gemini Nano

Screenshot: Showing Chrome extension mode with Ollama's llama3.2-vision

Screenshot: Showing ability to run Ollama and LM Studio at the same time

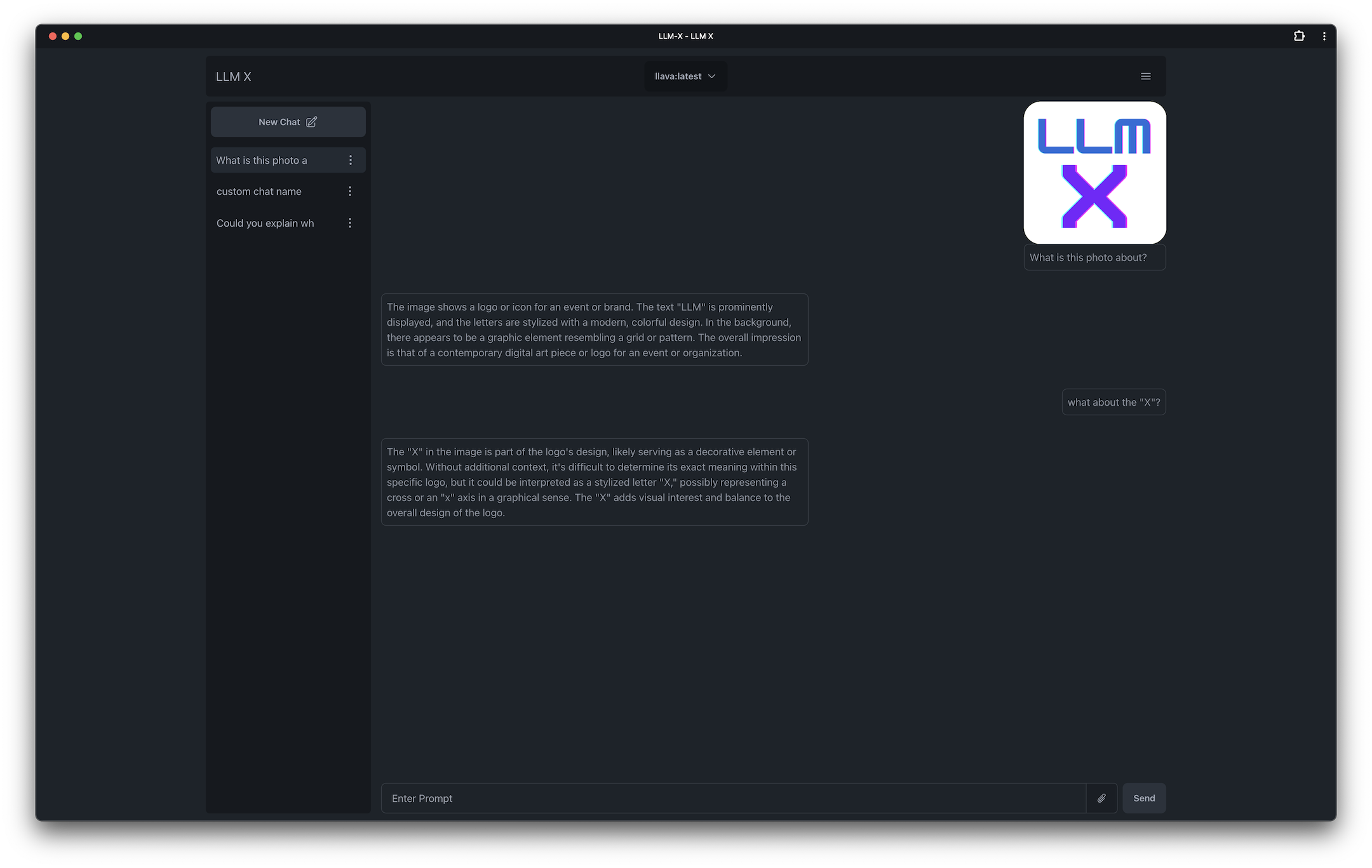

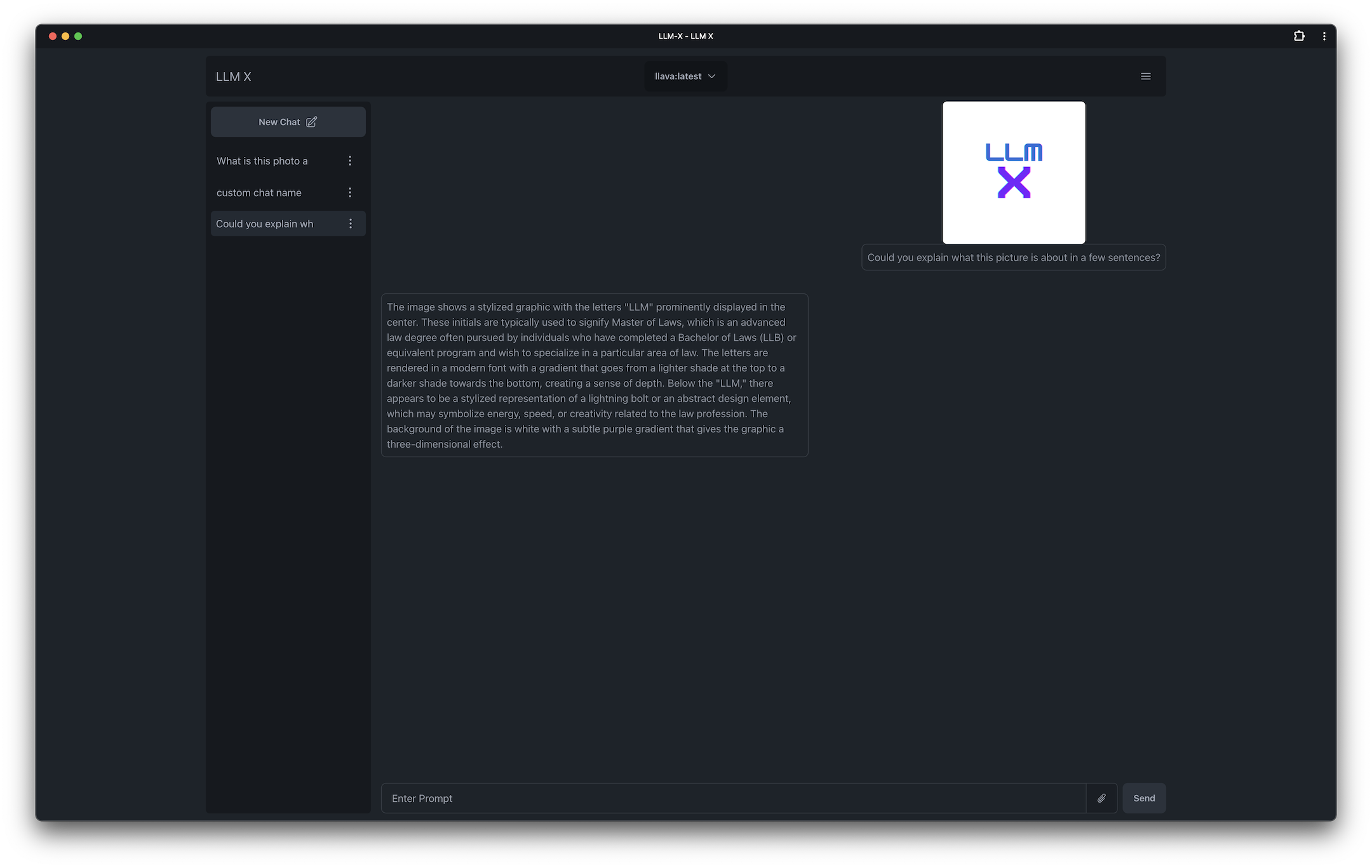

Screenshot: Conversation about logo

Screenshot: Image generation example!

Screenshot: Showing off omnibar and code

Screenshot: Showing off code and light theme

Screenshot: Responding about a cat

Screenshot: LaTeX support!

Screenshot: Another logo response

What is this? ChatGPT-style UI for the niche group of folks who run Ollama (think of this like an offline ChatGPT server) locally. Supports sending and receiving images and text! WORKS OFFLINE through PWA (Progressive Web App) standards (it's not dead!)

Why do this? I have been interested in LLM UI for a while now and this seemed like a good intro application. I've been introduced to a lot of modern technologies thanks to this project as well; it's been fun!

Why so many buzzwords? I couldn't help but be cool 😎

Logic helpers:

- React

- Typescript

- Lodash

- Zod

- Mobx

- Mobx State Tree (great for rapidly starting a project, terrible for long-term maintenance)

- Langchain.js

UI Helpers:

- Tailwind css

- DaisyUI

- NextUI

- Highlight.js

- React Markdown

- kbar

- Yet Another React Lightbox

- React speech to text

Project setup helpers:

Inspiration: ollama-ui's project, which allows users to connect to Ollama via a web app.

Perplexity.ai: Perplexity has some amazing UI advancements in the LLM UI space, and I have been very interested in getting to that point. Hopefully this starter project lets me get closer to doing something similar!

(Please note the minimum engine requirements in the package.json)

Clone the project, and run `yarn` in the root directory

`yarn dev` starts a local instance and opens up a browser tab under https:// (for PWA reasons)

Thanks to TypeScript, Prettier, and Biome, LLM-X should be ready for all contributors!

- LangChain.js was attempted while spiking on this app, but unfortunately it was not set up correctly for stopping incoming streams. I hope this gets fixed in the future, or, if possible, a custom LLM Agent can be utilized in order to use LangChain.

  - Edit: LangChain is working and added to the app now!

- Originally I used create-react-app 👴 while making this project without knowing it is no longer maintained; I am now using Vite. 🤞 This already allows me to use libs like `ollama-js` that I could not use before. Will be testing more with LangChain very soon.

- This readme was written with https://stackedit.io/app

- Changes to the main branch trigger an immediate deploy to https://mrdjohnson.github.io/llm-x/