This repository provides accelerated deployment examples for popular deep-learning computer vision (CV) models, and uses CUDA C to support dynamic-batch image preprocessing, inference, decoding, and NMS. Most models are converted along the path torch -> onnx -> tensorrt.
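The last stage of that pipeline, NMS (non-maximum suppression), can be illustrated with a small CPU reference. The Python sketch below shows greedy, class-aware NMS. It is not the repository's CUDA kernel. The `Box` record, the corner box format (x1, y1, x2, y2) and the 0.45 IoU threshold are hypothetical choices made only for this illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Box:
    """Hypothetical detection record: corner coordinates, confidence, class id."""
    x1: float
    y1: float
    x2: float
    y2: float
    score: float
    label: int


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    ih = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = iw * ih
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], iou_thresh: float = 0.45) -> List[Box]:
    """Greedy, class-aware NMS: visit boxes by descending score and keep a box
    unless it overlaps an already-kept box of the same class above iou_thresh."""
    kept: List[Box] = []
    for cand in sorted(boxes, key=lambda b: b.score, reverse=True):
        if all(k.label != cand.label or iou(k, cand) <= iou_thresh for k in kept):
            kept.append(cand)
    return kept


if __name__ == "__main__":
    dets = [
        Box(10, 10, 110, 110, 0.9, 0),    # kept: highest score
        Box(12, 12, 112, 112, 0.8, 0),    # suppressed: IoU with the first box is about 0.92
        Box(200, 200, 300, 300, 0.7, 0),  # kept: no overlap
        Box(12, 12, 112, 112, 0.6, 1),    # kept: different class
    ]
    for b in nms(dets):
        print(b.label, b.score)
```

A CUDA version can evaluate the pairwise IoU tests in parallel. The keep/suppress rule stays the same.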

There are two ways to obtain ONNX files:

- Download the ONNX files directly from the cloud storage provided by TensorRT-Alpha (Weiyun or Google Drive).

- Follow the instructions provided by TensorRT-Alpha to export ONNX manually from the corresponding Python source-code framework.

Updates:

- 2023.01.01 🔥 Added yolov3, yolov4, yolov5, yolov6

- 2023.01.04 🍅 Added yolov7, yolox, yolor

- 2023.01.05 🎉 Added u2net, libfacedetection

- 2023.01.08 🚀 The first project on the whole internet to support yolov8

- 2023.01.20 Added efficientdet, pphumanseg

The following environments have been tested:

Ubuntu 18.04

- CUDA 11.3

- cuDNN 8.2.0

- GCC 7.5.0

- TensorRT 8.4.2.4

- OpenCV 3.x or 4.x

- CMake 3.10.2

Windows 10

- CUDA 11.3

- cuDNN 8.2.0

- Visual Studio 2017, 2019, or 2022

- TensorRT 8.4.2.4

- OpenCV 3.x or 4.x

Python environment (optional):

Install miniconda first, then run:

conda create -n tensorrt-alpha python==3.8 -y

conda activate tensorrt-alpha

git clone https://github.com/FeiYull/tensorrt-alpha

cd tensorrt-alpha

pip install -r requirements.txt

Installation tutorial:

Set your TensorRT_ROOT path:

git clone https://github.com/FeiYull/tensorrt-alpha

cd tensorrt-alpha/cmake

vim common.cmake

On line 20 of common.cmake, set the variable TensorRT_ROOT to your own TensorRT path, e.g.:

set(TensorRT_ROOT /root/TensorRT-8.4.2.4)

Build the project, for example yolov8:

(Waiting for update.)

More than 30 models have been implemented so far. The ONNX files for some of them are listed below:

| model | Tesla V100 (32G) | Weiyun | Google Drive |
|---|---|---|---|
| yolov3 | | weiyun | Google Drive |
| yolov4 | | weiyun | Google Drive |
| yolov5 | | weiyun | Google Drive |
| yolov6 | | weiyun | Google Drive |
| yolov7 | | weiyun | Google Drive |
| yolov8 | | weiyun | Google Drive |
| yolox | | weiyun | Google Drive |
| yolor | | weiyun | Google Drive |
| u2net | | weiyun | Google Drive |
| libfacedetection | | weiyun | Google Drive |
| facemesh | | weiyun | Google Drive |
| pphumanseg | | weiyun | Google Drive |
| efficientdet | | weiyun | Google Drive |
| more... (🚀 I will be back soon!) | | | |

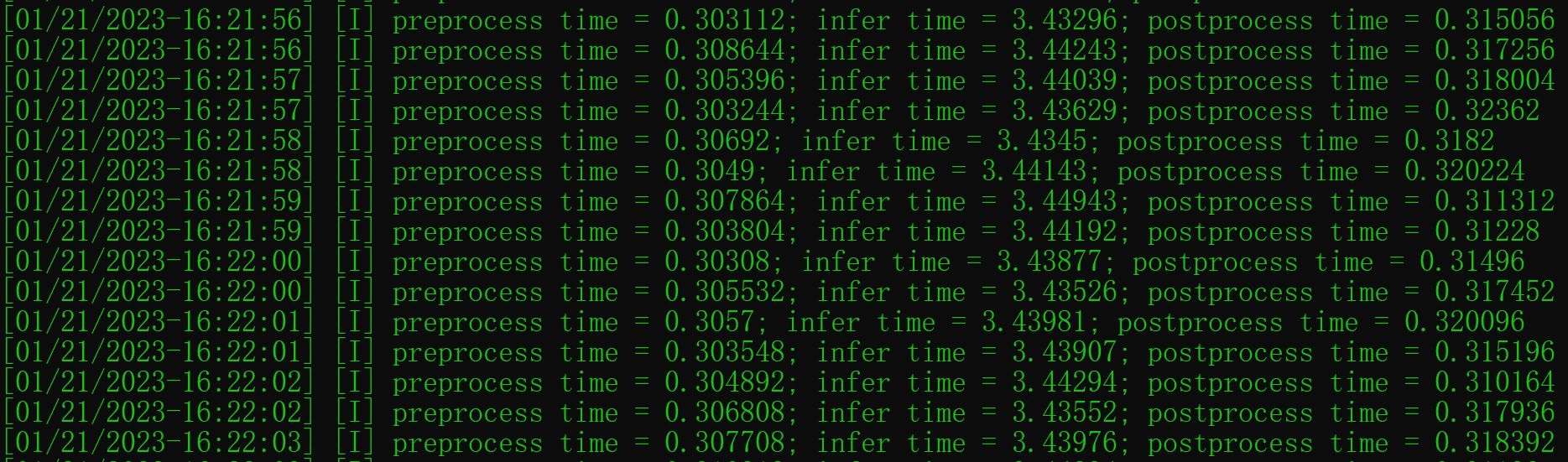

🍉 We will benchmark the inference time of all models on Tesla V100 and A100. For now, here is a preview of yolov8n performance on an RTX2070m (8G):

| model | video resolution | model input size | GPU Memory-Usage | GPU-Util |
|---|---|---|---|---|
| yolov8n | 1920x1080 | 8x3x640x640 | 1093MiB/7982MiB | 14% |
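The preview feeds 1920x1080 frames into an 8x3x640x640 input, i.e. a batch of 8 frames, 3 channels, 640x640 pixels each. The sketch below works through the geometry and the buffer size under two assumptions. First, preprocessing is a letterbox: an aspect-preserving resize with centered padding, which is common for YOLO-style models. The repository's CUDA warp-affine may differ in sub-pixel details. Second, the input is FP32. The function names are hypothetical.

```python
from typing import Tuple


def letterbox_params(src_w: int, src_h: int, dst_w: int, dst_h: int) -> Tuple[float, float, float]:
    """Assumed letterbox: aspect-preserving scale plus centered padding.

    Returns (scale, pad_x, pad_y) such that a source pixel (x, y) lands at
    (x * scale + pad_x, y * scale + pad_y) in the network input.
    """
    scale = min(dst_w / src_w, dst_h / src_h)
    pad_x = (dst_w - src_w * scale) / 2
    pad_y = (dst_h - src_h * scale) / 2
    return scale, pad_x, pad_y


def to_source(x: float, y: float, scale: float, pad_x: float, pad_y: float) -> Tuple[float, float]:
    """Invert the letterbox mapping, e.g. to move decoded boxes back onto the frame."""
    return (x - pad_x) / scale, (y - pad_y) / scale


def input_bytes(batch: int, channels: int, h: int, w: int, bytes_per_elem: int = 4) -> int:
    """Size of an NCHW input tensor; 4 bytes per element assumes FP32 input."""
    return batch * channels * h * w * bytes_per_elem


if __name__ == "__main__":
    # Values from the yolov8n preview: 1920x1080 video, 8x3x640x640 input.
    scale, pad_x, pad_y = letterbox_params(1920, 1080, 640, 640)
    print(f"scale={scale:.4f} pad_x={pad_x:.1f} pad_y={pad_y:.1f}")
    # The center of the network input maps back to the center of the frame.
    print("center ->", to_source(320.0, 320.0, scale, pad_x, pad_y))
    print(f"FP32 input: {input_bytes(8, 3, 640, 640) / 2**20:.1f} MiB")
```

With these numbers, each frame is scaled by 1/3 to 640x360 content and padded with 140 rows above and below. The FP32 input for a batch of 8 is 8 x 3 x 640 x 640 x 4 B = 39,321,600 B, or 37.5 MiB.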

Time cost per frame


yolov8n: official (left) vs. ours (right)


yolov7-tiny: official (left) vs. ours (right)


yolov6s: official (left) vs. ours (right)


yolov5s: official (left) vs. ours (right)


yolov5s: official (left) vs. ours (right)


libfacedetection: official (left) vs. ours (right, topK = 2000)
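The libfacedetection comparison uses topK = 2000. This sketch assumes that setting means only the 2000 highest-scoring candidate boxes are kept before NMS, which is the usual role of a top-K parameter in face-detector post-processing; the exact semantics in this repository are an assumption here. Under that reading, the selection step might look like this. `top_k` and `score_thresh` are hypothetical names.

```python
import heapq
from typing import List, Sequence, Tuple


def top_k(scores: Sequence[float], k: int, score_thresh: float = 0.0) -> List[Tuple[int, float]]:
    """Return (index, score) pairs for the k highest scores above score_thresh,
    sorted by descending score. Candidates outside this set never reach NMS."""
    candidates = ((i, s) for i, s in enumerate(scores) if s > score_thresh)
    return heapq.nlargest(k, candidates, key=lambda item: item[1])


if __name__ == "__main__":
    scores = [0.1, 0.9, 0.5, 0.7, 0.3]
    print(top_k(scores, k=2))  # [(1, 0.9), (3, 0.7)]
```

`heapq.nlargest` keeps a heap of size k instead of sorting every candidate. The cost is O(n log k) rather than O(n log n).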
