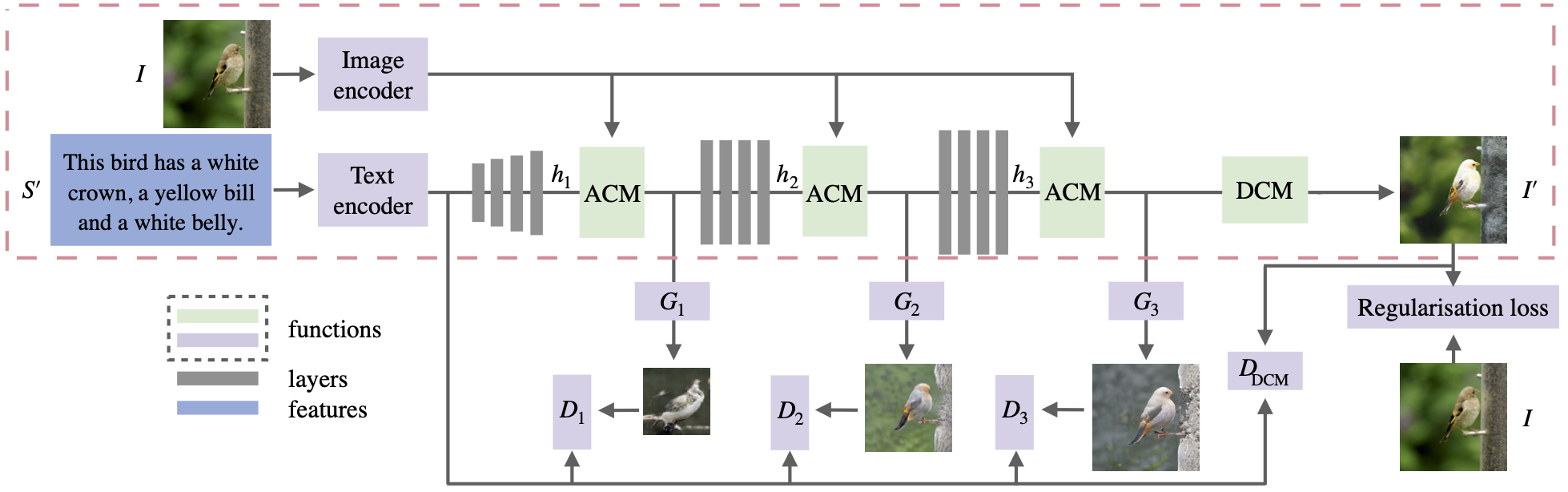

PyTorch implementation of ManiGAN: Text-Guided Image Manipulation. The goal is to semantically edit parts of an image according to the given text while preserving text-irrelevant content.

ManiGAN: Text-Guided Image Manipulation.

Bowen Li, Xiaojuan Qi, Thomas Lukasiewicz, Philip H. S. Torr.

University of Oxford

CVPR 2020

- Download the preprocessed metadata for bird and coco, and save both into data/

- Download bird dataset and extract the images to data/birds/

- Download coco dataset and extract the images to data/coco/
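Before training, it can help to confirm that the directories named above are in place. The following is a minimal sketch, not part of the repository: the helper `missing_data_dirs` is hypothetical, and it only checks that `data/`, `data/birds/`, and `data/coco/` exist under a given root, not that their contents are complete.

```python
from pathlib import Path

# Directory names come from the download steps above.
# The check itself is a hypothetical helper, not part of the ManiGAN code.
EXPECTED_DIRS = ("data", "data/birds", "data/coco")


def missing_data_dirs(root="."):
    """Return the expected data directories that do not exist under root."""
    base = Path(root)
    return [d for d in EXPECTED_DIRS if not (base / d).is_dir()]


if __name__ == "__main__":
    missing = missing_data_dirs()
    if missing:
        print("Missing directories:", ", ".join(missing))
    else:
        print("Data layout looks complete.")
```

Run it from the directory that is expected to contain data/; an empty result means all three directories were found.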

All code was developed and tested on CentOS 7 with Python 3.7 (Anaconda) and PyTorch 1.1.

The DAMSM model includes a text encoder and an image encoder.

- Pre-train DAMSM model for bird dataset:

python pretrain_DAMSM.py --cfg cfg/DAMSM/bird.yml --gpu 0

- Pre-train DAMSM model for coco dataset:

python pretrain_DAMSM.py --cfg cfg/DAMSM/coco.yml --gpu 1

- Train main module for bird dataset:

python main.py --cfg cfg/train_bird.yml --gpu 2

- Train main module for coco dataset:

python main.py --cfg cfg/train_coco.yml --gpu 3

The *.yml files contain the configuration for training and testing.

Save the trained main module to models/

- Train DCM for bird dataset:

python DCM.py --cfg cfg/train_bird.yml --gpu 2

- Train DCM for coco dataset:

python DCM.py --cfg cfg/train_coco.yml --gpu 3
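The training commands above follow one pattern per dataset: pre-train DAMSM, train the main module, then train the DCM. The sketch below only assembles those command lines so they can be reviewed or copied; the helper `training_commands` is hypothetical, the script and config names are taken from the commands above, and the stage order is an assumption based on the order in which this README lists them. It does not handle saving the trained main module to models/ between stages.

```python
# Script and config names are copied from the commands in this README.
# The helper is a hypothetical convenience, not part of the ManiGAN code.
STAGES = (
    ("pretrain_DAMSM.py", "cfg/DAMSM/{dataset}.yml"),  # text and image encoders
    ("main.py", "cfg/train_{dataset}.yml"),            # main module
    ("DCM.py", "cfg/train_{dataset}.yml"),             # DCM
)


def training_commands(dataset, gpu=0):
    """Build the three training command lines for 'bird' or 'coco'."""
    if dataset not in ("bird", "coco"):
        raise ValueError("dataset must be 'bird' or 'coco'")
    return [
        ["python", script, "--cfg", cfg.format(dataset=dataset), "--gpu", str(gpu)]
        for script, cfg in STAGES
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so each stage can be
    # started manually once the previous one has finished.
    for cmd in training_commands("bird", gpu=0):
        print(" ".join(cmd))
```

Printing rather than executing keeps the manual step between the main module and the DCM explicit.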

- DAMSM for bird. Download and save it to DAMSMencoders/

- DAMSM for coco. Download and save it to DAMSMencoders/

- Main module for bird. Download and save it to models/

- Main module for coco. Download and save it to models/

- DCM for bird. Download and save it to models/

- To verify the IS value on the bird dataset, please use this link. Download and save it to models/

- DCM for coco. Download and save it to models/

- Test ManiGAN model for bird dataset:

python main.py --cfg cfg/eval_bird.yml --gpu 4

- Test ManiGAN model for coco dataset:

python main.py --cfg cfg/eval_coco.yml --gpu 5

- To generate images for all captions in the testing dataset, change B_VALIDATION to True in the eval_*.yml.

- Inception Score for bird dataset: StackGAN-inception-model.

- Inception Score for coco dataset: improved-gan/inception_score.
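Switching B_VALIDATION can be done by hand in a text editor. As an illustration only, the sketch below rewrites a flag in the text of a config file using the standard library. The helper `set_flag` is hypothetical, and it assumes the flag appears on its own line in the form `B_VALIDATION: <value>`, which should be checked against the actual eval_*.yml files. Anything after the colon on that line, including a trailing comment, is replaced.

```python
import re


def set_flag(yml_text, key, value):
    """Replace the value of a `key: value` line, keeping its indentation.

    Hypothetical helper: assumes the key sits on its own line.
    Raises KeyError if the key is not found.
    """
    pattern = re.compile(
        rf"^([ \t]*{re.escape(key)}[ \t]*:[ \t]*).*$", re.MULTILINE
    )
    new_text, count = pattern.subn(lambda m: m.group(1) + str(value), yml_text)
    if count == 0:
        raise KeyError(f"{key} not found")
    return new_text


if __name__ == "__main__":
    # Illustrative config text, not copied from the repository.
    sample = "B_VALIDATION: False\n"
    print(set_flag(sample, "B_VALIDATION", True), end="")
```

To apply it to a real file, read the file contents into a string, pass them through `set_flag`, and write the result back.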

- code/main.py: the entry point for training the main module and testing ManiGAN.

- code/DCM.py: the entry point for training the DCM.

- code/trainer.py: creates the main module networks, runs training, and reports its progress.

- code/trainerDCM.py: creates the DCM networks, runs training, and reports its progress.

- code/model.py: defines the architecture of ManiGAN.

- code/attention.py: defines the spatial and channel-wise attentions.

- code/VGGFeatureLoss.py: defines the architecture of the VGG-16.

- code/datasets.py: defines the class for loading images and captions.

- code/pretrain_DAMSM.py: trains the text and image encoders and reports the progress of training.

- code/miscc/losses.py: defines and computes the losses for the main module.

- code/miscc/lossesDCM.py: defines and computes the losses for DCM.

- code/miscc/config.py: creates the option list.

- code/miscc/utils.py: additional functions.

If you find this useful for your research, please cite the following.

@inproceedings{li2020manigan,
  title={Manigan: Text-guided image manipulation},
  author={Li, Bowen and Qi, Xiaojuan and Lukasiewicz, Thomas and Torr, Philip HS},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={7880--7889},
  year={2020}
}

This code borrows heavily from the ControlGAN repository. Many thanks.