This repository contains the dataset and code for the NAACL 2024 paper "MSciNLI: A Diverse Benchmark for Scientific Natural Language Inference." The dataset can be downloaded directly from here. You can also access the dataset through Hugging Face.

If you face any difficulties while downloading the dataset, raise an issue in this repository or contact us at msadat3@uic.edu.

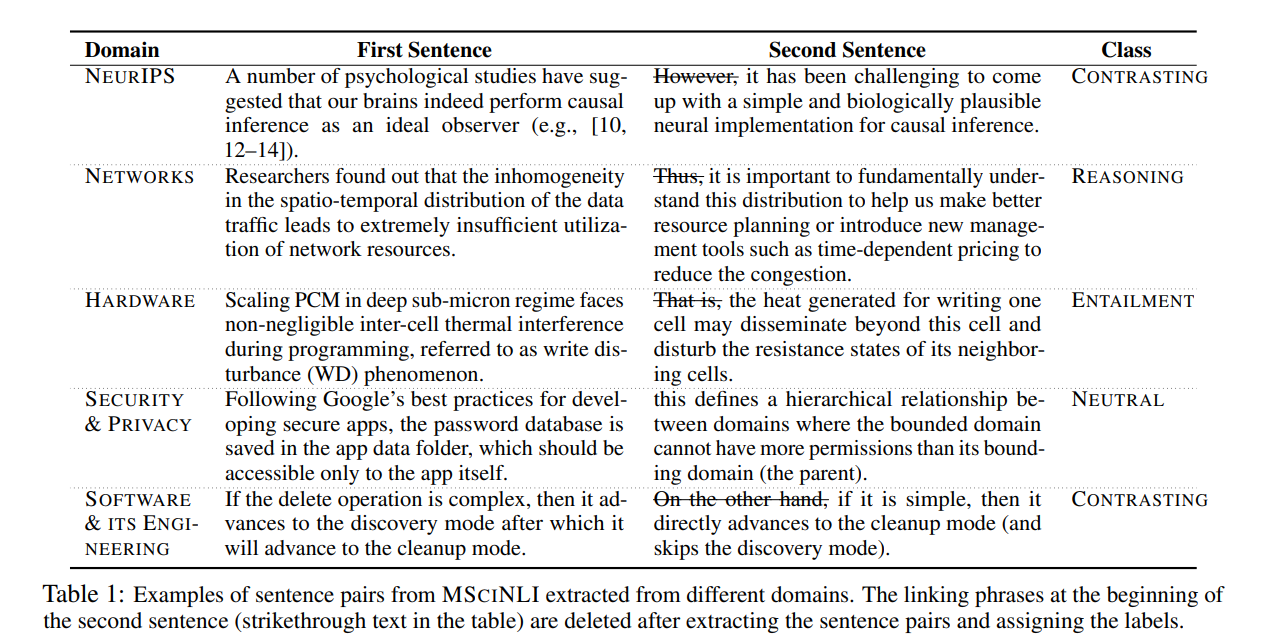

The task of scientific Natural Language Inference (NLI) involves predicting the semantic relation between two sentences extracted from research articles. This task was recently proposed along with a new dataset called SciNLI derived from papers published in the computational linguistics domain. In this paper, we aim to introduce diversity in the scientific NLI task and present MSciNLI, a dataset containing

MSciNLI is derived from the papers published in five different scientific domains: "Hardware", "Networks", "Software & its Engineering", "Security & Privacy", and "NeurIPS." Similar to SciNLI, we use a distant supervision method that exploits the linking phrases between sentences in scientific papers to construct a large training set and directly use these potentially noisy sentence pairs during training. For the test and development sets, we manually annotate
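A minimal Python sketch of the linking-phrase heuristic follows. It shows how two adjacent sentences joined by an explicit linking phrase can become a labeled training pair, with the phrase stripped from the second sentence. The phrase list, the label names (`Contrasting`, `Reasoning`, `Entailment`), and the helper names `Pair` and `distant_pairs` are illustrative assumptions, not the exact phrases, labels, or code used to build MSciNLI; the paper describes the actual procedure. The sketch only covers pairs signaled by a linking phrase and constructs nothing for any other class.

```python
from typing import Iterator, List, NamedTuple, Optional

# Hypothetical phrase-to-label mapping, for illustration only.
LINKING_PHRASES = {
    "however": "Contrasting",
    "on the other hand": "Contrasting",
    "therefore": "Reasoning",
    "thus": "Reasoning",
    "in other words": "Entailment",
}


class Pair(NamedTuple):
    sentence1: str  # premise
    sentence2: str  # hypothesis, with the linking phrase removed
    label: str


def _match_phrase(sentence: str) -> Optional[str]:
    """Return the linking phrase that starts `sentence`, if any."""
    lowered = sentence.lower()
    # Try longer phrases first so multi-word phrases win over shorter ones.
    for phrase in sorted(LINKING_PHRASES, key=len, reverse=True):
        if lowered.startswith(phrase):
            # Require a word boundary, so "Thusly" does not match "thus".
            if len(sentence) == len(phrase) or not sentence[len(phrase)].isalnum():
                return phrase
    return None


def distant_pairs(sentences: List[str]) -> Iterator[Pair]:
    """Yield noisy labeled pairs from consecutive sentences of one paper."""
    for first, second in zip(sentences, sentences[1:]):
        phrase = _match_phrase(second)
        if phrase is None:
            continue
        # Drop the phrase and any following comma/space so the label
        # cannot be read directly off the hypothesis.
        rest = second[len(phrase):].lstrip(" ,")
        if not rest:
            continue
        rest = rest[0].upper() + rest[1:]
        yield Pair(first, rest, LINKING_PHRASES[phrase])
```

For example, `list(distant_pairs(["Model A is fast.", "However, it is inaccurate.", "We use a GPU."]))` yields a single `Contrasting` pair whose hypothesis is `"It is inaccurate."`; the third sentence has no linking phrase and produces nothing.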

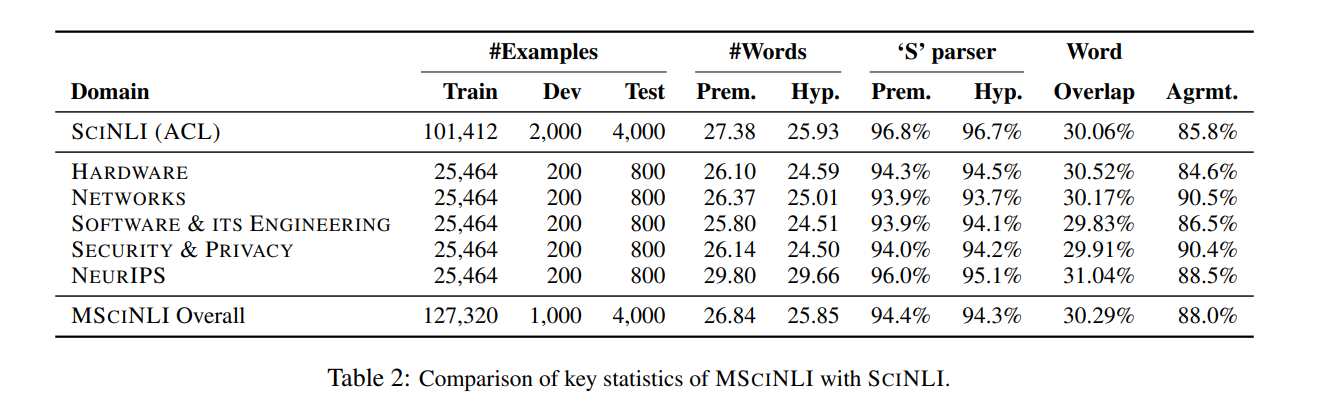

A comparison of key statistics of MSciNLI with those of the previously existing SciNLI can be seen below.

`train.csv`, `test.csv`, and `dev.csv` contain the training, test, and development data, respectively. Each file has five columns:

* `id`: a unique ID for each sample.

* `sentence1`: the premise of each sample.

* `sentence2`: the hypothesis of each sample.

* `label`: the label representing the semantic relation between the premise and the hypothesis.

* `domain`: the scientific domain from which each sample is extracted.
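The files can be read with Python's standard `csv` module, as in the sketch below. It checks that the five columns described above are present and counts labels per domain. It assumes UTF-8 files with a header row naming the columns, located in the current directory; `load_split` and `label_counts_by_domain` are hypothetical helper names, not part of this repository.

```python
import csv
from collections import Counter
from pathlib import Path
from typing import Dict, List

# The five documented columns; any extra columns are ignored.
EXPECTED_COLUMNS = ("id", "sentence1", "sentence2", "label", "domain")


def load_split(path) -> List[Dict[str, str]]:
    """Read one split (e.g. train.csv) into a list of row dicts.

    Assumes a UTF-8 file whose first row is a header naming the columns.
    """
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.DictReader(f)
        missing = set(EXPECTED_COLUMNS) - set(reader.fieldnames or [])
        if missing:
            raise ValueError(f"{path}: missing columns {sorted(missing)}")
        return list(reader)


def label_counts_by_domain(rows) -> Dict[str, Counter]:
    """Count how many samples of each label occur in each domain."""
    counts: Dict[str, Counter] = {}
    for row in rows:
        counts.setdefault(row["domain"], Counter())[row["label"]] += 1
    return counts


if __name__ == "__main__":
    # Assumes the three CSV files sit in the current working directory.
    for split in ("train", "dev", "test"):
        path = Path(f"{split}.csv")
        if path.exists():
            rows = load_split(path)
            print(split, len(rows), label_counts_by_domain(rows))
```

Using `csv.DictReader` keeps the sketch dependency-free; `pandas.read_csv` works equally well if pandas is already installed.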

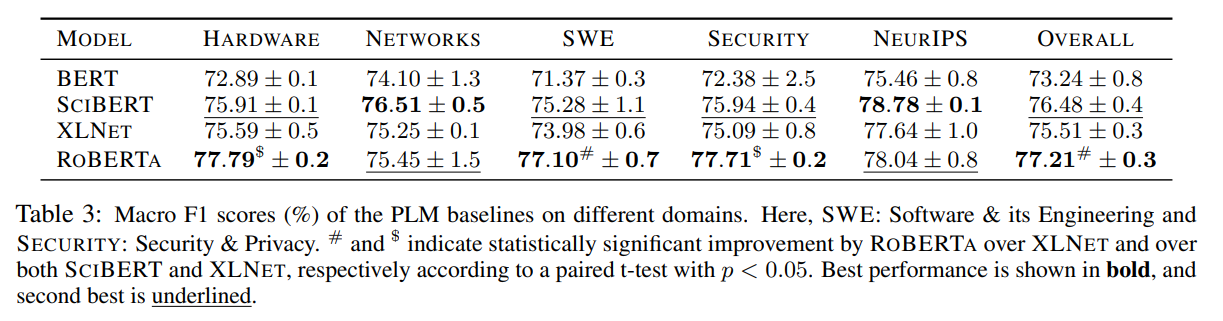

The performance of the Pre-trained Language Model (PLM) baselines is shown in the table below. The experimental details are described in our paper.

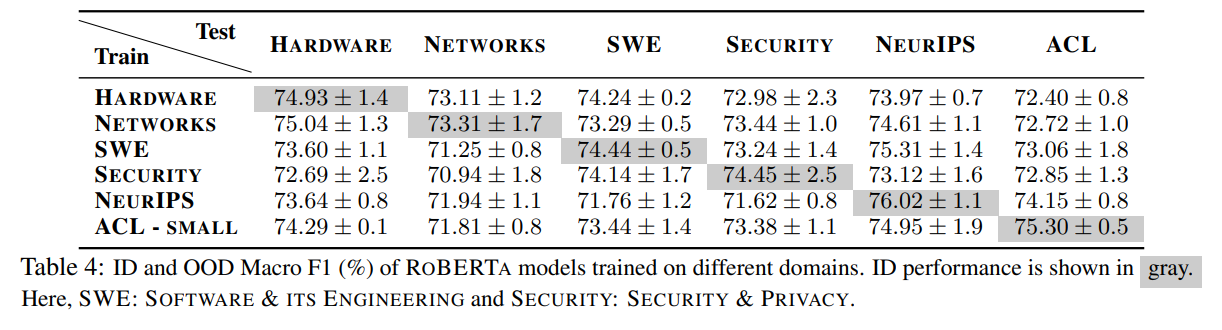

A comparison of the in-domain (ID) and out-of-domain (OOD) performance of the best-performing PLM baseline, RoBERTa, fine-tuned separately on each of the five domains introduced in MSciNLI and on the ACL domain from SciNLI, is shown below.
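The ID/OOD comparison can be viewed as a grid: a model fine-tuned on one domain is evaluated on the test samples of every domain, and the cells where the training and test domains match are the in-domain scores. The sketch below assumes each fine-tuned model is wrapped as a hypothetical callable `predict(premise, hypothesis) -> label` and scores each cell with macro-F1 computed from scratch; the metric choice is an assumption here, and the paper describes the exact evaluation setup. Test rows are dicts with the `sentence1`, `sentence2`, `label`, and `domain` columns of the released CSV files.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: a fine-tuned model mapping (premise, hypothesis) to a label.
Predictor = Callable[[str, str], str]


def macro_f1(gold: List[str], pred: List[str]) -> float:
    """Unweighted mean of per-label F1 over labels seen in gold or pred."""
    labels = sorted(set(gold) | set(pred))
    f1s = []
    for lab in labels:
        tp = sum(g == lab and p == lab for g, p in zip(gold, pred))
        fp = sum(g != lab and p == lab for g, p in zip(gold, pred))
        fn = sum(g == lab and p != lab for g, p in zip(gold, pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1s.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)
    return sum(f1s) / len(f1s) if f1s else 0.0


def id_ood_grid(models: Dict[str, Predictor], test_rows) -> Dict[Tuple[str, str], float]:
    """Score every (training domain, test domain) pair.

    Cells with train_domain == test_domain are in-domain (ID);
    all other cells are out-of-domain (OOD).
    """
    by_domain = defaultdict(list)
    for row in test_rows:
        by_domain[row["domain"]].append(row)
    grid = {}
    for train_domain, predict in models.items():
        for test_domain, rows in by_domain.items():
            gold = [r["label"] for r in rows]
            pred = [predict(r["sentence1"], r["sentence2"]) for r in rows]
            grid[(train_domain, test_domain)] = macro_f1(gold, pred)
    return grid
```

Averaging a row's off-diagonal cells gives a single OOD number for the corresponding training domain, which can then be compared with its diagonal (ID) cell.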

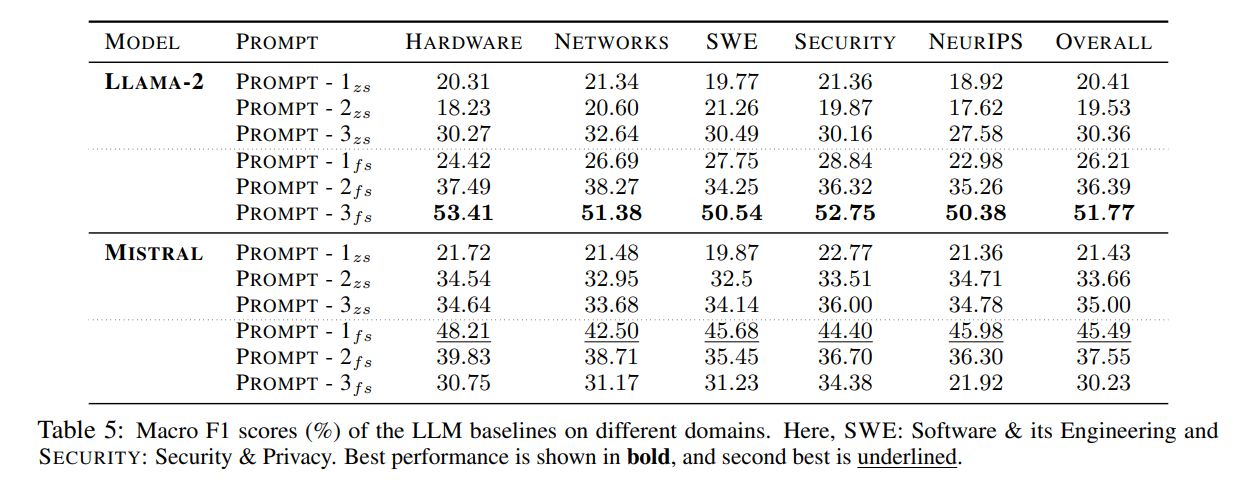

The performance of the Large Language Model (LLM) baselines is shown in the table below. The experimental details and the prompts used for the LLMs are described in our paper.

Coming soon!

If you use this dataset, please cite our paper:

@inproceedings{sadat-caragea-2024-mscinli,
  title = "{MSciNLI}: A Diverse Benchmark for Scientific Natural Language Inference",
  author = "Sadat, Mobashir and Caragea, Cornelia",
  booktitle = "2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics",
  month = jun,
  year = "2024",
  address = "Mexico City, Mexico",
  publisher = "Association for Computational Linguistics",
  url = "",
  pages = "",
}

MSciNLI is licensed under the Creative Commons Attribution-ShareAlike 4.0 International license (CC BY-SA 4.0).

Please contact us at msadat3@uic.edu with any questions.