## Overview

This project creates art using the structures learned by the VGG19 neural network. The algorithm starts from an image of random uniform noise, which is iteratively updated to become the resulting image. To do this, the VGG19 network is given three separate inputs: the random uniform noise, an image to copy style from, and an image to copy content from. The network's activations for the noise image are then compared with its activations for the style image and for the content image, and the loss is the sum of these differences. To minimize the loss, we take the derivative of the loss with respect to the model input (not the network weights). The model input (the noise image) is then updated in the direction that reduces the loss. This process is repeated about 1,500 times for the best results.
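The paragraph above describes gradient descent on the input image rather than on the network. The following is a minimal NumPy sketch of that idea, under stated assumptions: a single linear layer (like a 1x1 convolution) stands in for VGG19, the content term is a squared difference of activations, and the style term compares Gram matrices as in Gatys et al. (listed under Credits). The names `features`, `gram`, `loss_and_grad`, `alpha`, `beta`, and `learning_rate` are hypothetical and are not taken from `transfer.py`.

```python
import numpy as np

# Hypothetical stand-in for VGG19: one linear layer mapping an image of shape
# (input_channels, pixels) to features of shape (feature_channels, pixels).
def features(W, X):
    return W @ X

def gram(F):
    # Channel-by-channel correlations, normalized by the number of positions.
    return F @ F.T / F.shape[1]

def loss_and_grad(X, W, F_content, G_style, alpha=1.0, beta=1.0):
    """Return the weighted total loss and its gradient with respect to X."""
    F = features(W, X)
    P = F.shape[1]
    # Content term: squared difference between activations.
    diff_c = F - F_content
    content_loss = 0.5 * np.sum(diff_c ** 2)
    # Style term: squared difference between Gram matrices.
    diff_s = gram(F) - G_style
    style_loss = 0.25 * np.sum(diff_s ** 2)
    # Gradient with respect to the features, then back through the linear layer.
    dF = alpha * diff_c + beta * (diff_s @ F) / P
    dX = W.T @ dF
    return alpha * content_loss + beta * style_loss, dX

rng = np.random.default_rng(0)
c_in, c_feat, pixels = 3, 4, 16
W = rng.normal(scale=0.5, size=(c_feat, c_in))  # frozen "network" weights
content_img = rng.uniform(size=(c_in, pixels))
style_img = rng.uniform(size=(c_in, pixels))
F_content = features(W, content_img)
G_style = gram(features(W, style_img))

# Start from random uniform noise and update only the image.
X = rng.uniform(size=(c_in, pixels))
initial_loss, _ = loss_and_grad(X, W, F_content, G_style)
learning_rate = 0.02
for step in range(1500):
    _, grad = loss_and_grad(X, W, F_content, G_style)
    X -= learning_rate * grad  # the weights W are never changed
final_loss, _ = loss_and_grad(X, W, F_content, G_style)
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```

With the real network, the activations would come from several VGG19 layers and the gradient would be computed by TensorFlow's automatic differentiation instead of by hand; `transfer.py` may also use a different optimizer and loss weighting.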

### To use

- Download the weights for the trained VGG19 network here, then put the file in the root of the project.

- To run with the default settings: `python3 transfer.py`

- To specify the content and style images: `python3 transfer.py -style_image_path [path to image] -content_image_path [path to image]`

- To list all command-line arguments: `python3 transfer.py -h`

- To duplicate my results exactly:
  - Make sure the shell script is executable: `chmod +x batchRun.sh`
  - Run the script: `./batchRun.sh`
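For readers adapting the script, here is a hypothetical `argparse` sketch that accepts the two flags documented above. It is not the parser in `transfer.py`: the function name `build_parser` and the `None` defaults are assumptions, and the real script may accept more arguments with other defaults.

```python
import argparse

def build_parser():
    # Hypothetical reconstruction: only the two documented flags are included.
    parser = argparse.ArgumentParser(description="Neural style transfer with VGG19")
    # argparse accepts single-dash long options; dest becomes "style_image_path".
    parser.add_argument("-style_image_path", default=None,
                        help="path to the image to copy style from")
    parser.add_argument("-content_image_path", default=None,
                        help="path to the image to copy content from")
    return parser

args = build_parser().parse_args(
    ["-style_image_path", "style.jpg", "-content_image_path", "content.jpg"])
print(args.style_image_path, args.content_image_path)
```

`argparse` also generates the `-h` help output automatically from the `help` strings.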

### Dependencies

1. Python 3
2. TensorFlow
3. Pillow
4. SciPy
5. NumPy
6. (optional) GPU
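A quick way to check that the listed Python packages are installed is sketched below. It assumes the standard import names for each package (Pillow is imported as `PIL`); the names `REQUIRED` and `missing_dependencies` are illustrative only.

```python
import importlib.util

# Display name -> import name (assumed standard import names).
REQUIRED = {"TensorFlow": "tensorflow", "Pillow": "PIL", "SciPy": "scipy", "NumPy": "numpy"}

def missing_dependencies(required=REQUIRED):
    """Return the display names of packages whose modules cannot be found."""
    return [name for name, module in required.items()
            if importlib.util.find_spec(module) is None]

missing = missing_dependencies()
print("Missing:", ", ".join(missing) if missing else "none")
```

For the optional GPU, TensorFlow 2.x reports visible devices through `tf.config.list_physical_devices("GPU")`.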

### Comparison

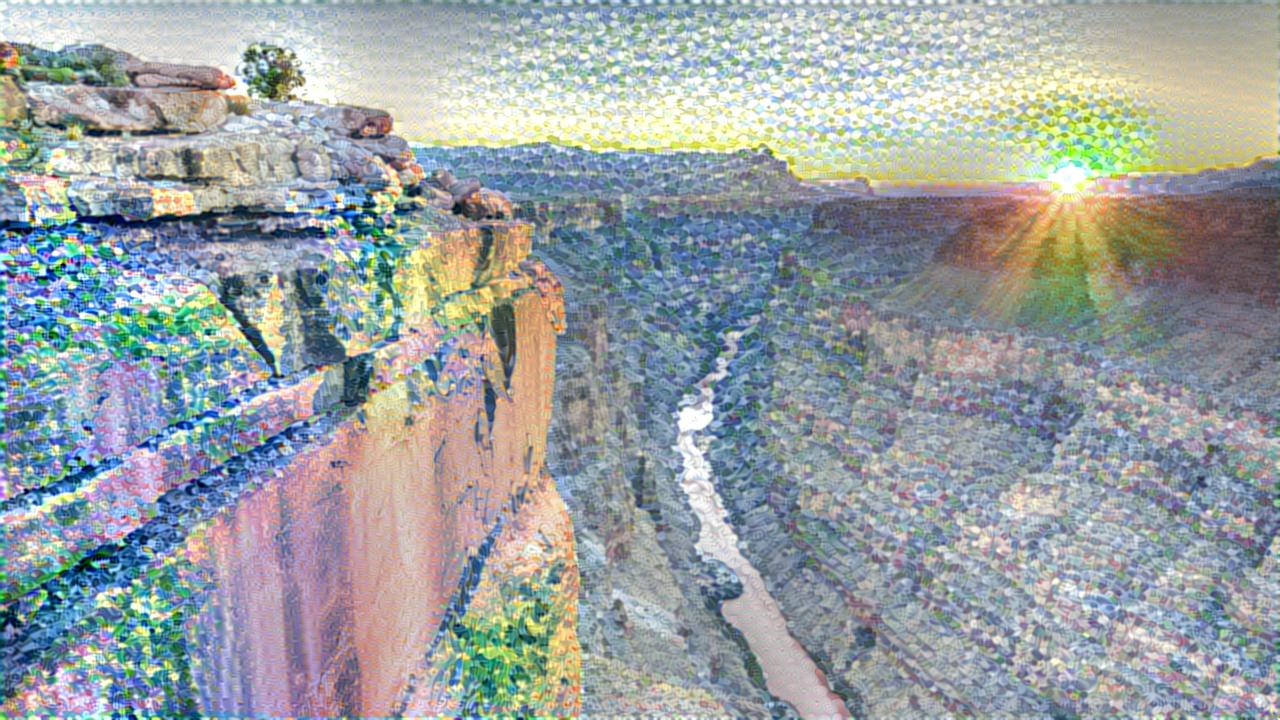

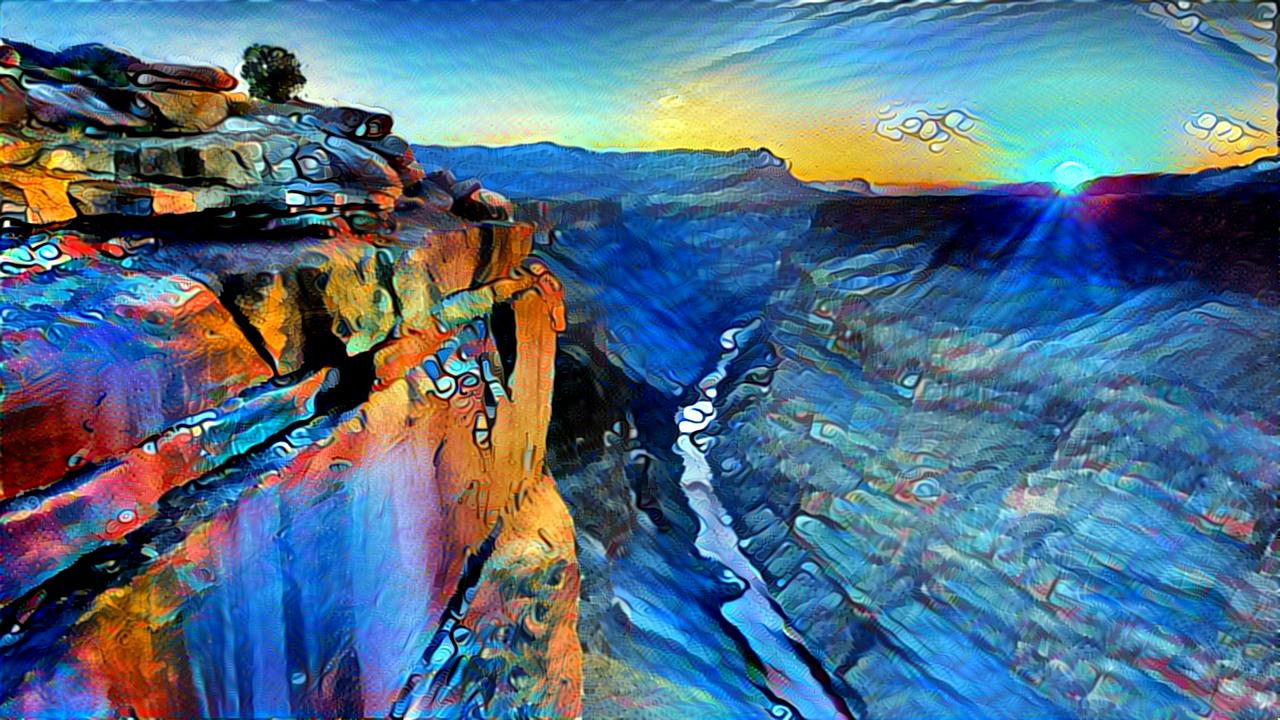

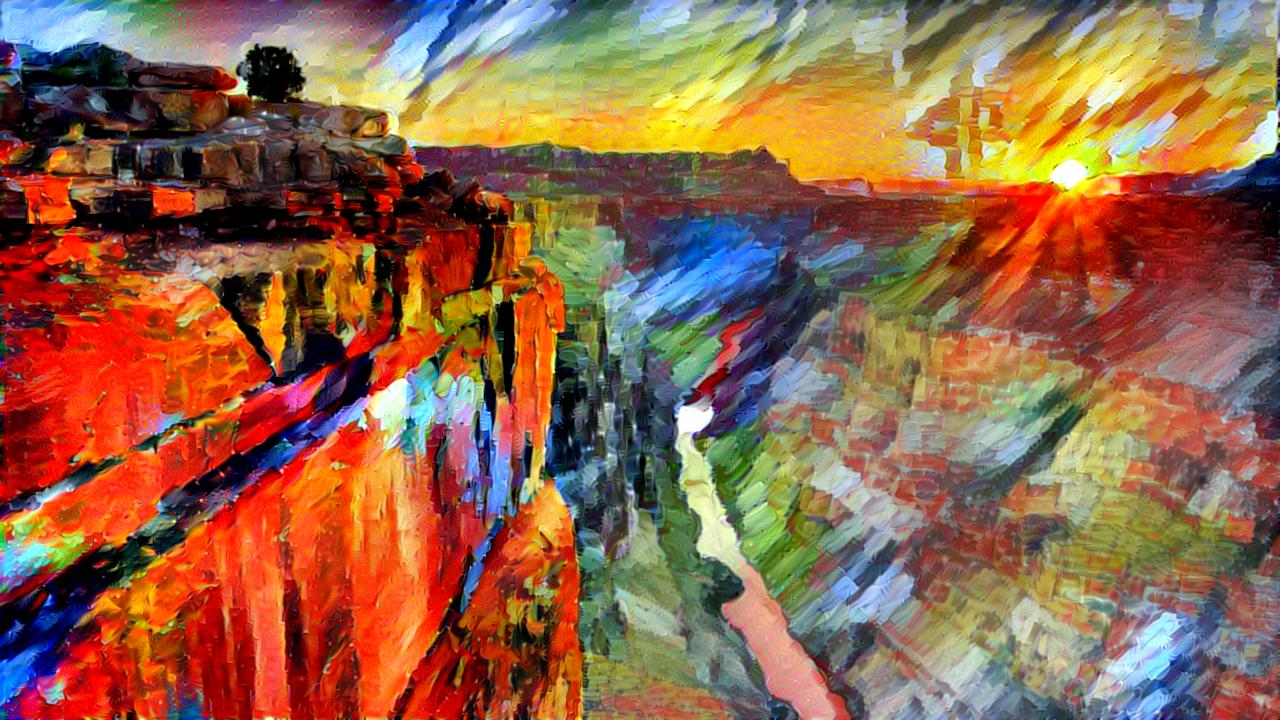

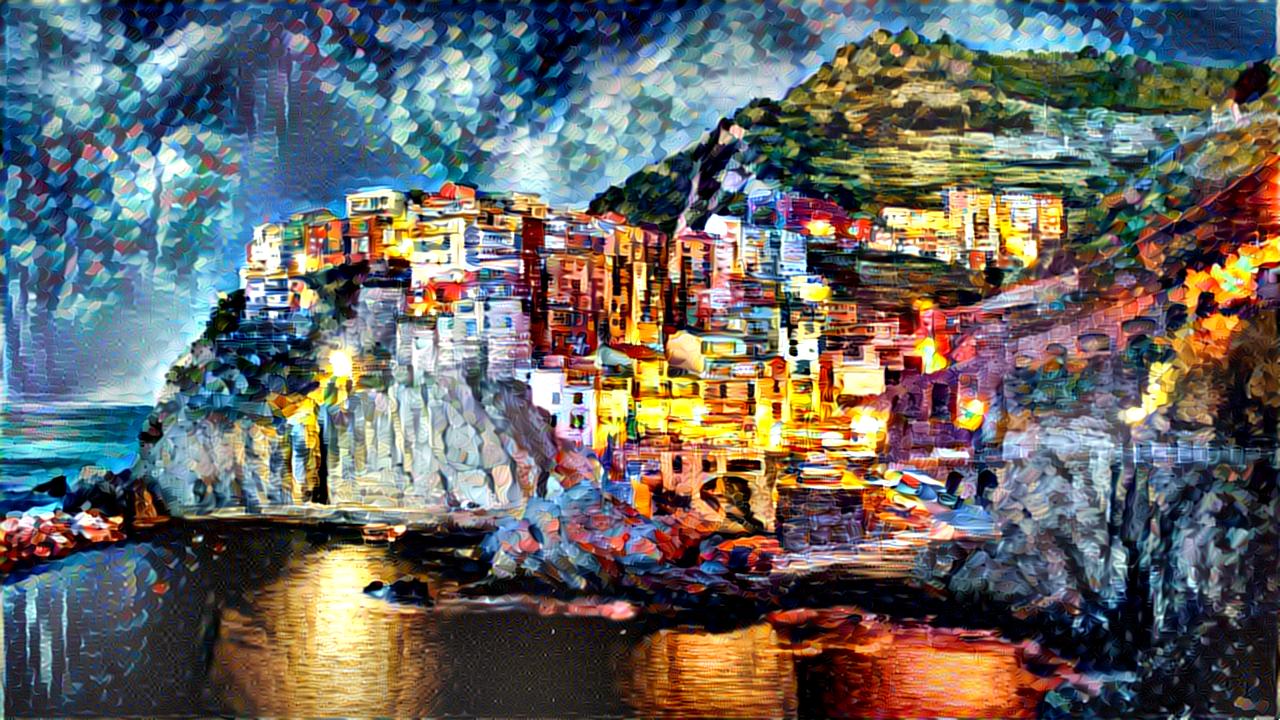

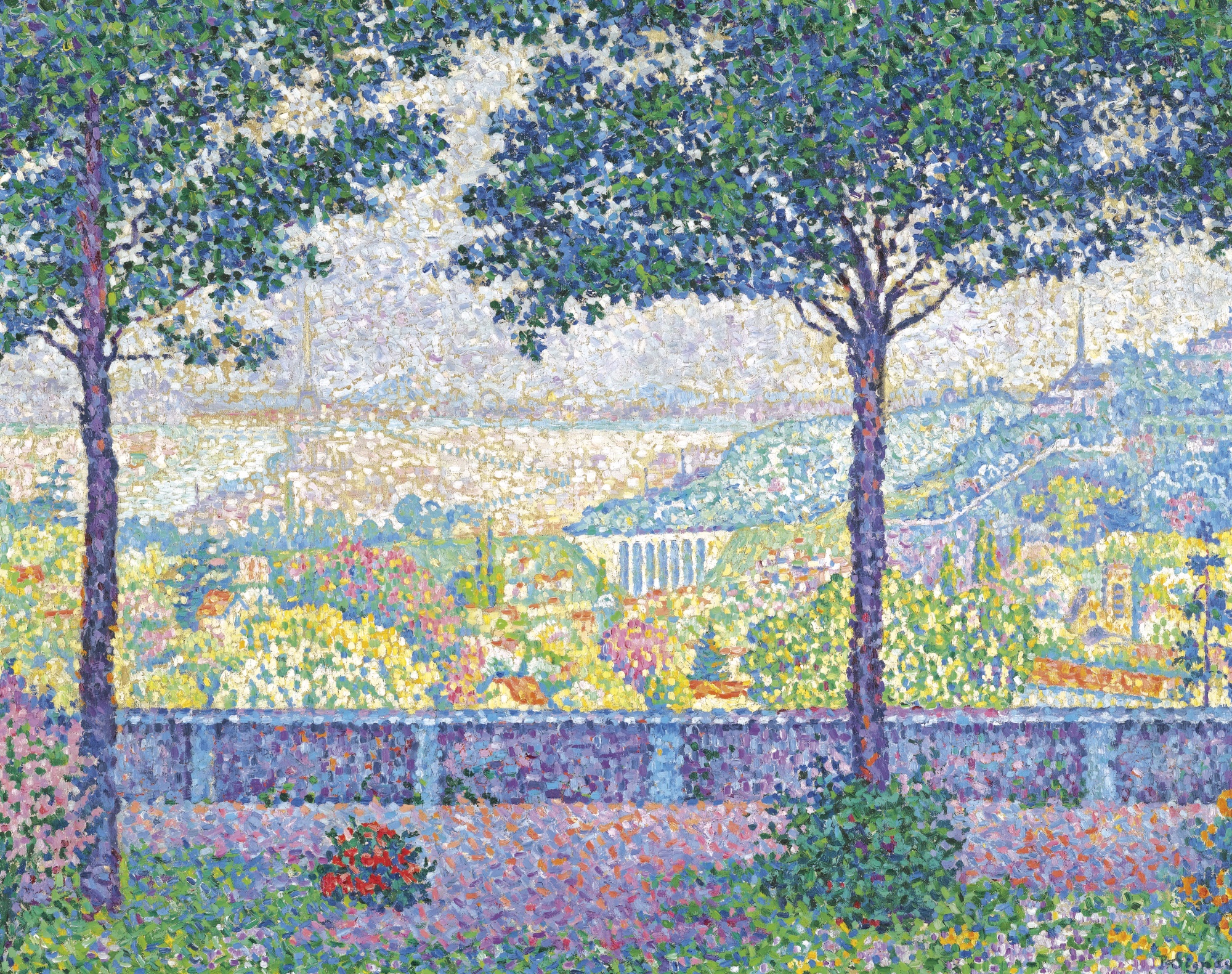

Content image, style images, and results.

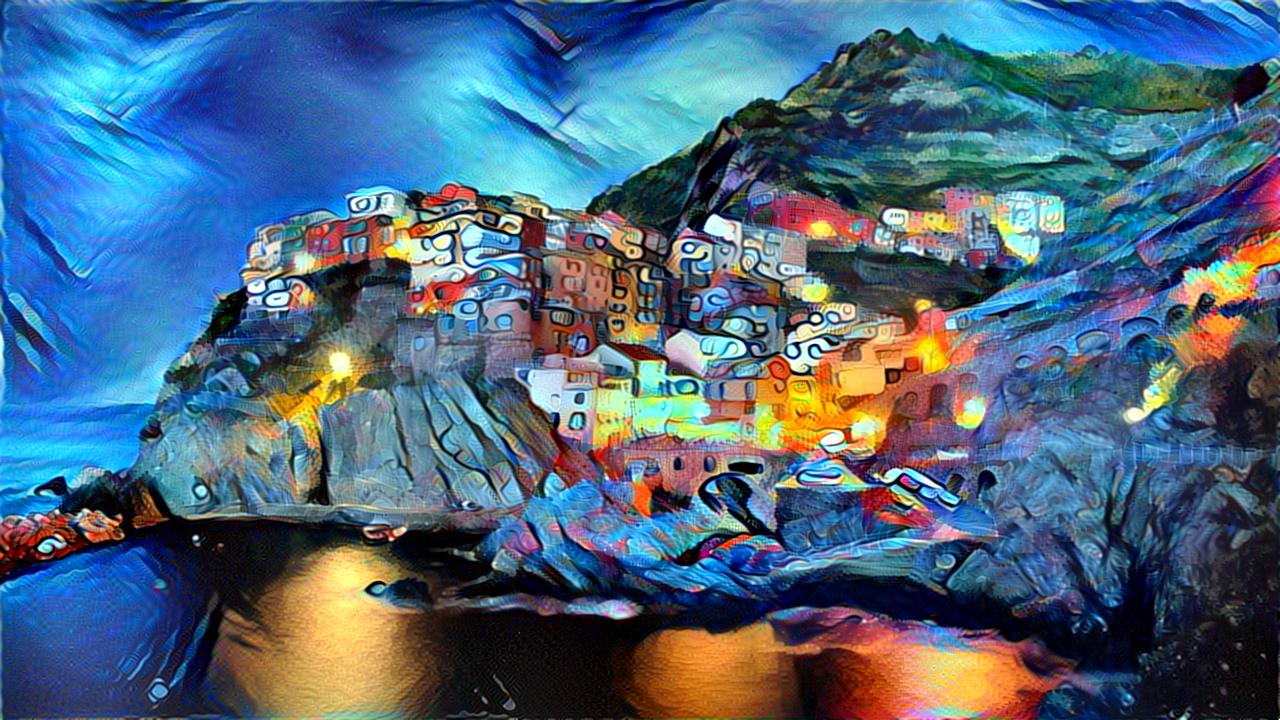

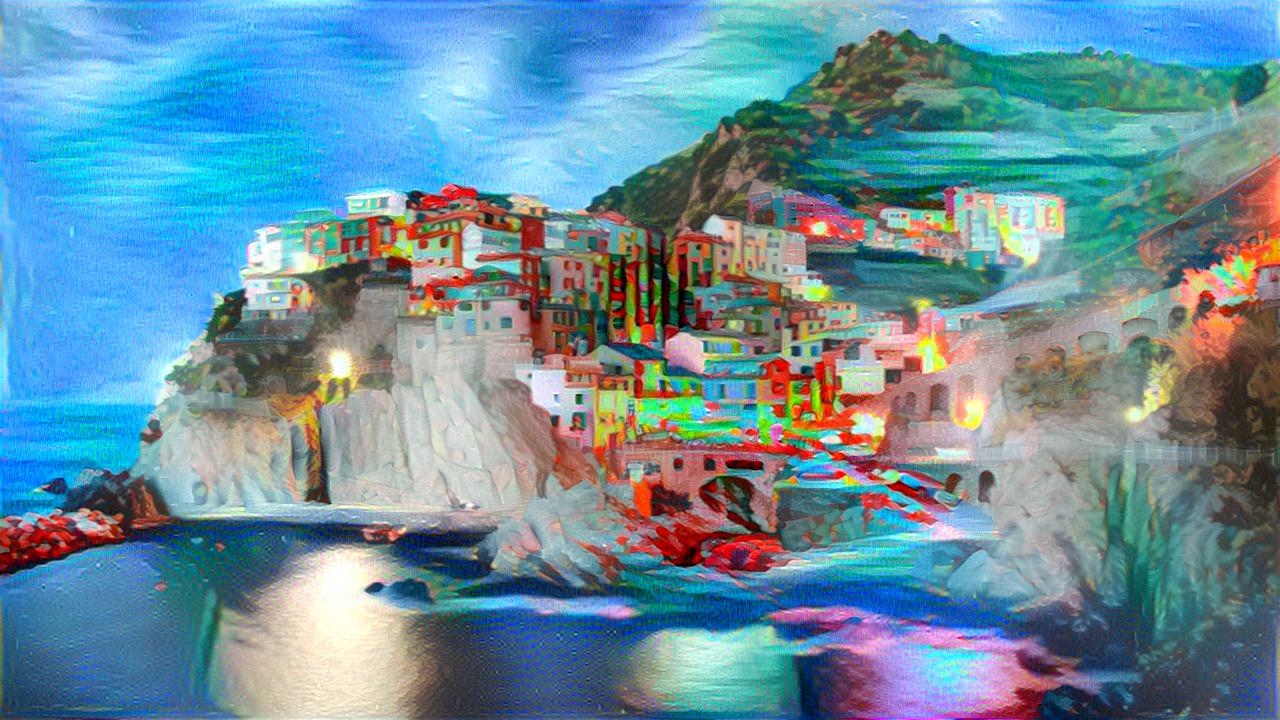

Content image, style images, and results.

### Credits

##### References

- Texture Synthesis Using Convolutional Neural Networks (Gatys et al.)

- Understanding Deep Image Representations by Inverting Them (Mahendran and Vedaldi)

##### VGG network