This reference implementation reviews some design decisions from the baseline, and incorporates new recommended infrastructure options for a multicluster (and multiregion) architecture. This implementation and document are meant to guide the multiple distinct teams introduced in the AKS baseline through the process of expanding from a single cluster to a multicluster solution. The fundamental driver for this reference architecture is Reliability, and it uses the Geode cloud design pattern.

Note: This implementation does not use AKS Fleet Manager capability or any other automated cross-cluster management technologies, but instead represents a manual approach to combining multiple AKS clusters together. Operating fleets containing a large number of clusters is usually best performed with advanced and dedicated tooling. This implementation supports a small scale and introduces some of the core concepts that will be necessary regardless of your scale or tooling choices.

Throughout the reference implementation, you will see references to Contoso Bicycle. They are a fictional, small, and fast-growing startup that provides online web services to its clientele on the east coast of the United States. This narrative provides grounding for some implementation details, naming conventions, and so on. You should adapt it as you see fit.

| 🎓 Foundational Understanding |
|---|
| If you haven't familiarized yourself with the general-purpose AKS baseline cluster architecture, you should start there before continuing here. The architecture rationalized and constructed in that implementation is the direct foundation of this body of work. This reference implementation avoids rearticulating points that are already addressed in the AKS baseline cluster, and we assume you've already read it before reading the guidance in this architecture. |

The Contoso Bicycle app team that owns the a0042 workload app has deployed an AKS cluster strategically located in the East US 2 region, because this is where most of their customer base can be found. They have operated this single AKS cluster following Microsoft's recommended baseline architecture. They followed the guidance that AKS baseline clusters should be deployed across multiple availability zones within the same region.

However, they now realize that availability zone coverage would not be sufficient if the East US 2 region went fully down. Even though the SLAs are acceptable for their business continuity plan, they are starting to reconsider their options, and how their stateless application (Application ID: a0042) could increase its availability in case of a complete regional outage.

They started conversations with the business unit (BU0001) to increase the number of clusters by one. In other words, they are proposing to move to a multicluster infrastructure solution in which multiple instances of the same application can live in different Azure regions.

This architectural decision has multiple implications for the Contoso Bicycle organization. It isn't just about following the baseline twice, or adding another region to get a twin infrastructure. They need to look for ways to efficiently share some of their Azure resources, and to identify the resources that need to be added. They also need to consider how to deploy and operate more than one cluster, and which specific regions to deploy to. There are many other factors that they need to consider while striving for higher availability.

This project has a companion article that describes some of the challenges, design patterns, and best practices for an AKS multi-region solution designed for high availability. You can find this article on the Azure Architecture Center at Azure Kubernetes Service (AKS) baseline for multiregion clusters. If you haven't already reviewed it, we suggest you read it. It gives context to the considerations applied in this implementation. Ultimately, this implementation is the direct implementation of that specific architectural guidance.

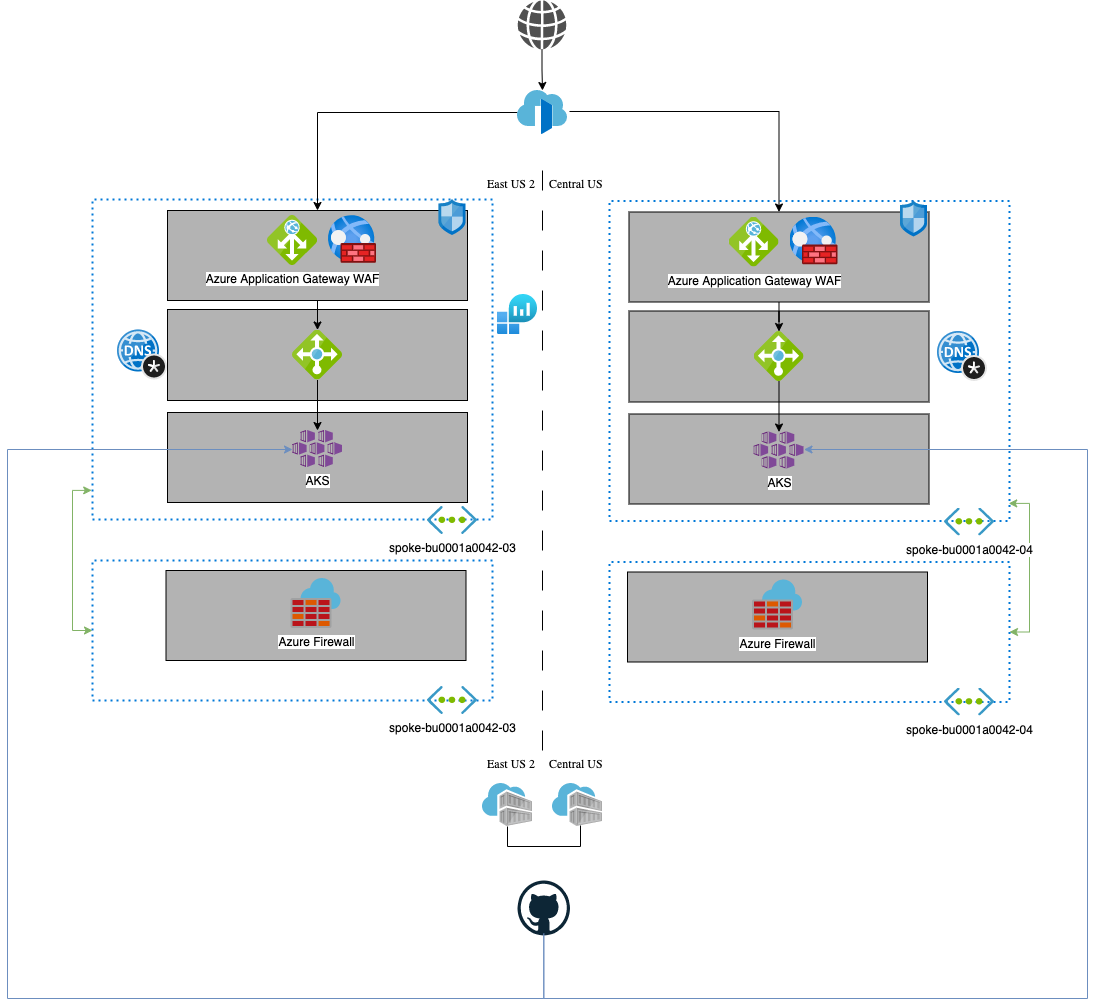

This architecture focuses on infrastructure more than on the workload. It concentrates on two AKS clusters, including concerns like multiregion deployments, the desired state and bootstrapping of the clusters, geo-replication, network topologies, and more.

The implementation presented here, like the baseline, is the minimum recommended starting point (baseline) for a multicluster AKS solution. This implementation integrates with Azure services that deliver geo-replication, a centralized observability approach, a network topology that supports multiregional growth, and traffic balancing.

Finally, this implementation uses the ASP.NET Docker samples as an example workload. This workload is purposefully uninteresting, as it is here exclusively to help you experience the multicluster infrastructure.
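The traffic balancing described above can be pictured with a small sketch: a global entry point sends each request to a healthy regional endpoint, and falls back to the other region when one is down. This is a deliberately simplified illustration and not Azure Front Door's actual routing algorithm. The `RegionalEndpoint` type, the `pick_endpoint` function, the `second-region` name, and the latency figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionalEndpoint:
    """One regional ingress point in front of an AKS cluster (hypothetical model)."""
    name: str
    healthy: bool        # result of the most recent health probe (assumed input)
    latency_ms: float    # measured latency from the client edge (assumed input)


def pick_endpoint(endpoints):
    """Return the healthy endpoint with the lowest latency.

    Raises RuntimeError when every region is unhealthy.
    """
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        raise RuntimeError("no healthy regional endpoint available")
    return min(healthy, key=lambda e: e.latency_ms)


# Normal operation: both regions are healthy, so the closest one wins.
endpoints = [
    RegionalEndpoint("eastus2", healthy=True, latency_ms=18.0),
    RegionalEndpoint("second-region", healthy=True, latency_ms=35.0),
]
primary = pick_endpoint(endpoints)

# Simulated regional outage: East US 2 fails its health probe.
after_outage = [
    RegionalEndpoint("eastus2", healthy=False, latency_ms=18.0),
    RegionalEndpoint("second-region", healthy=True, latency_ms=35.0),
]
failover = pick_endpoint(after_outage)

print(primary.name, failover.name)
```

Because the workload is stateless, either region can serve any request, which is what makes this kind of per-request failover possible.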

- Azure Kubernetes Service (AKS) v1.30

- Azure virtual networks (hub-spoke)

- Azure Front Door (Premium)

- Azure Application Gateway with web application firewall (WAF)

- Azure Container Registry

- Azure Monitor Log Analytics

- Flux v2 GitOps Operator [AKS-managed extension]

- Traefik Ingress Controller

- Azure Workload Identity [AKS-managed add-on]

- Azure Key Vault Secret Store CSI Provider [AKS-managed add-on]

- Begin by ensuring you install and meet the prerequisites

- Plan your Microsoft Entra integration

- Deploy the shared services for your clusters

- Build the hub-spoke network

- Procure client-facing and AKS ingress controller TLS certificates

- Deploy the two AKS clusters and supporting services

- Just like the cluster, there are workload prerequisites to address

- Configure AKS ingress controller with Azure Key Vault integration

- Deploy the workload

- Perform end-to-end deployment validation

Most of the Azure resources deployed in the prior steps will incur ongoing charges unless removed.

The main costs of this reference implementation are (in order):

| Component | Approximate share of cost |
|---|---|
| Azure Firewall dedicated to control outbound traffic | ~40% |
| Node pool virtual machines used inside the cluster | ~31% |
| Azure Front Door (Premium) dedicated to globally load balance traffic between multiple regions | ~14% |
| Application Gateway, which controls the ingress traffic to the workload | ~11% |
| Log Analytics | ~4% |

Azure Firewall can be a shared resource. Your company might already have one in its existing regional hubs that you can reuse.

Azure Front Door (Premium) is a shared global resource to distribute traffic among multiple regional endpoints. Your company might already have one to reuse as well.

The virtual machines are used to host the nodes for the AKS cluster. The cluster can be shared by several applications. You can analyze the size and the number of nodes. The reference implementation has the minimum recommended nodes for production environments. In a multicluster environment, you have at least two clusters and should choose a scale appropriate to your workload. You should perform traffic analysis and consider your failover strategy and autoscaling configuration when planning your virtual machine scaling strategy.
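As a rough illustration of how a failover strategy affects node counts, the sketch below sizes each cluster so that the surviving clusters can absorb the full peak load when one region is lost. It is a back-of-the-envelope calculation under stated assumptions, not sizing guidance from this implementation. The function name, the traffic figures, the per-node throughput, and the `min_nodes` floor are all hypothetical, and you would replace them with numbers from your own traffic analysis.

```python
import math


def nodes_per_cluster(peak_rps, rps_per_node, clusters,
                      tolerated_cluster_failures=1, min_nodes=2):
    """Estimate the node count each cluster needs to survive regional failures.

    peak_rps: total peak requests per second across all regions (assumed input)
    rps_per_node: sustainable requests per second for one node (assumed input)
    clusters: number of regional clusters
    tolerated_cluster_failures: clusters that may be lost at the same time
    min_nodes: hypothetical floor, applied regardless of load
    """
    surviving = clusters - tolerated_cluster_failures
    if surviving < 1:
        raise ValueError("at least one cluster must survive")
    # Each surviving cluster must carry an equal share of the full peak load.
    rps_per_surviving_cluster = peak_rps / surviving
    return max(min_nodes, math.ceil(rps_per_surviving_cluster / rps_per_node))


# Two clusters, sized so that either one can carry all traffic alone.
with_failover = nodes_per_cluster(peak_rps=1200, rps_per_node=150, clusters=2)

# Same load with no failover headroom, for comparison.
without_failover = nodes_per_cluster(peak_rps=1200, rps_per_node=150,
                                     clusters=2, tolerated_cluster_failures=0)

print(with_failover, without_failover)
```

With only two regions, full failover headroom doubles the steady-state node count in each cluster. Autoscaling can reduce that standing cost, at the price of slower recovery while new nodes are provisioned.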

Keep an eye on Log Analytics data growth as time goes by and manage the information that it collects. The main cost is related to data ingestion into the Log Analytics workspace, and you can fine tune the data ingestion to remove low-value data.
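One way to decide where to trim ingestion is to rank tables by their share of daily volume. The sketch below does that for a set of made-up numbers. The volumes are hypothetical placeholders, and in practice you would take them from your own workspace's usage data. The function name is also an assumption for illustration.

```python
def ingestion_shares(daily_gb_by_table):
    """Rank tables by ingestion volume and compute each table's share of the total.

    Returns a list of (table, daily_gb, share) tuples, largest first.
    """
    total = sum(daily_gb_by_table.values())
    if total <= 0:
        raise ValueError("total ingestion must be positive")
    ranked = sorted(daily_gb_by_table.items(), key=lambda kv: kv[1], reverse=True)
    return [(table, gb, gb / total) for table, gb in ranked]


# Hypothetical daily ingestion in GB; replace with figures from your workspace.
sample = {
    "ContainerLogV2": 6.0,
    "Perf": 2.0,
    "KubePodInventory": 1.5,
    "AzureDiagnostics": 0.5,
}

shares = ingestion_shares(sample)
for table, gb, share in shares:
    print(f"{table}: {gb:.1f} GB/day ({share:.0%})")
```

Tables at the top of the ranking are the first candidates to review for low-value data.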

WAF protection is enabled on both Application Gateway and Azure Front Door. The WAF rules on Azure Front Door incur extra cost. You can disable these rules if you decide you don't need them. However, the consequence is that potentially malicious traffic will arrive at Application Gateway to be processed by its WAF. These requests consume Application Gateway resources instead of being eliminated as early as possible.

This reference implementation intentionally does not cover all scenarios. If you are looking for other topics that are not addressed here, visit AKS baseline for the complete list of covered scenarios around AKS.

- Azure Kubernetes Service documentation

- Microsoft Azure Well-Architected Framework

- Microservices architecture on AKS

- Mission-critical baseline architecture on Azure

Please see our contributor guide.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

With ❤️ from Microsoft Patterns & Practices, Azure Architecture Center.