Welcome to the Transformer code: Step-by-step Understanding project! 🌟 In this repository, you'll embark on a visually pleasing journey through the intricacies of the Transformer architecture. Each component, from Embedding to MultiHeadAttention, Encoder, and Decoder, is explained with detailed code and comments. Let's unravel the magic of Transformers together!

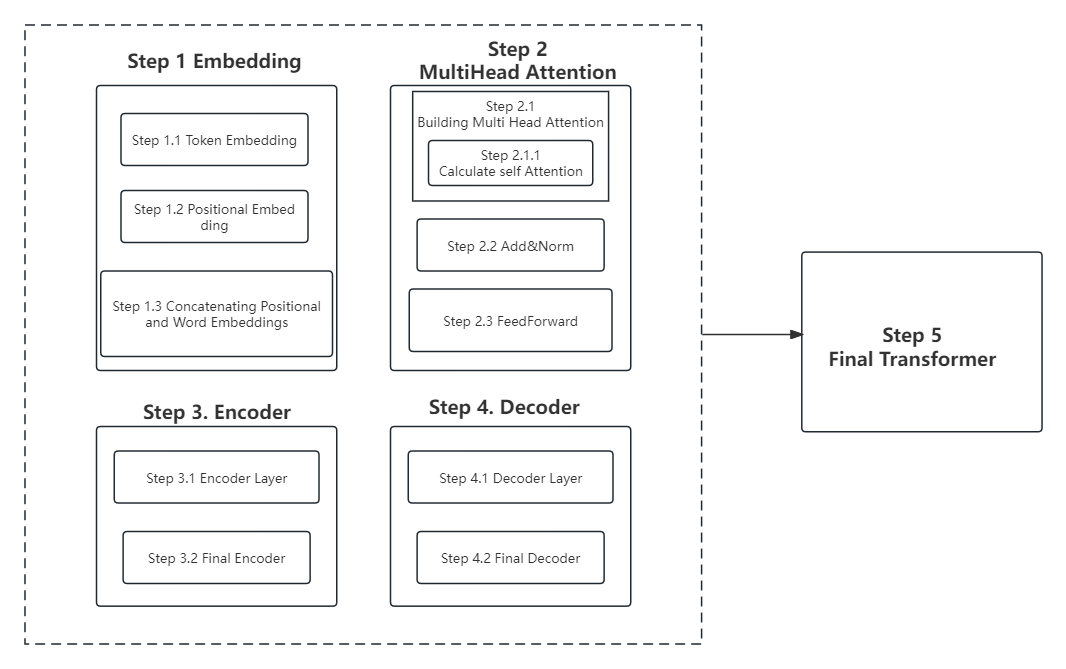

The diagram shows the overall structure of the code, including each step and its sub-modules.

🚀 Although I have tried to explain the Transformer in enough detail for everyone to follow, this article still has many shortcomings. The next steps are to cover the following in more depth:

- Provide a detailed explanation of the masking technique and its application.

- Conduct input-output tests for each step to help readers better understand how it works.

- Explain practical tasks based on the Transformer architecture, such as machine translation and text classification.
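As a small preview of the first two items, here is a minimal sketch of causal (look-ahead) masking with an input-output check. It is written in plain Python so that it does not depend on any particular framework; the helper names `causal_mask` and `masked_softmax` are hypothetical and are not taken from this repository's code.

```python
import math

def causal_mask(size):
    """Return a size x size lower-triangular mask: True where attention is allowed."""
    return [[j <= i for j in range(size)] for i in range(size)]

def masked_softmax(scores, mask):
    """Row-wise softmax over `scores`, giving zero weight to masked-out positions.

    Assumes every row keeps at least one position, which holds for a causal mask.
    """
    weights = []
    for score_row, mask_row in zip(scores, mask):
        # Replace disallowed scores with -inf so that exp() maps them to 0.
        masked = [s if keep else -math.inf for s, keep in zip(score_row, mask_row)]
        peak = max(masked)  # subtract the row maximum for numerical stability
        exps = [math.exp(s - peak) for s in masked]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# Input: uniform attention scores for a sequence of 3 tokens.
scores = [[0.0] * 3 for _ in range(3)]
weights = masked_softmax(scores, causal_mask(3))

# Output: each token attends only to itself and to earlier tokens.
for row in weights:
    print([round(w, 3) for w in row])
# [1.0, 0.0, 0.0]
# [0.5, 0.5, 0.0]
# [0.333, 0.333, 0.333]
```

Setting masked scores to negative infinity before the softmax, rather than zeroing the weights afterwards, keeps each row summing to 1 without a separate renormalization step.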

Stay tuned for upcoming content updates. Feel free to leave questions and suggestions in the comments section; I will do my best to answer them and improve the content.

📜 This project is licensed under the MIT License, fostering an open and collaborative canvas for knowledge sharing.

Feel free to star ⭐️ this repository if you find it visually delightful! Happy coding! 🎨🤖✨