Cartography is a Python tool that consolidates infrastructure assets and the relationships between them in an intuitive graph view powered by a Neo4j database.

Cartography aims to enable a broad set of exploration and automation scenarios. It is particularly good at exposing otherwise hidden dependency relationships between your service's assets so that you may validate assumptions about security risks.

Service owners can generate asset reports, Red Teamers can discover attack paths, and Blue Teamers can identify areas for security improvement. All can benefit from using the graph for manual exploration through a web frontend interface, or in an automated fashion by calling the APIs.

Cartography is not the only security graph tool out there, but it differentiates itself by being fully-featured yet generic and extensible enough to help anyone better understand their risk exposure, regardless of what platforms they use. Rather than being focused on one core scenario or attack vector like the other linked tools, Cartography focuses on flexibility and exploration.

You can learn more about the story behind Cartography in our presentation at BSidesSF 2019.

Time to set up the server that will run Cartography. Cartography should work on both Linux and Windows servers, but bear in mind we've only tested it in Linux so far. Cartography requires Python 3.4 or greater.

1. Get and install the Neo4j graph database on your server.
    1. Go to the Neo4j download page, click "Community Server", and download Neo4j Community Edition 3.2.*.

        ⚠️ At this time we run our automated tests on Neo4j version 3.2.*. Version 3.3.* will work, but it currently fails the [syntax test](https://github.com/lyft/cartography/blob/8f3f4b739e0033a7849c35cfa8edd3b0067be509/tests/integration/cartography/data/jobs/test_syntax.py). ⚠️

    1. Install Neo4j on the server you will run Cartography on.
1. If you're an AWS user, prepare your AWS account(s).
    - If you only have a single AWS account:
        1. Set up an AWS identity (user, group, or role) for Cartography to use. Ensure that this identity has the built-in AWS SecurityAudit policy (`arn:aws:iam::aws:policy/SecurityAudit`) attached. This policy grants access to read security config metadata.
        1. Set up AWS credentials for this identity on your server, using a `config` and `credentials` file. For details, see AWS' official guide.
    - If you want to pull from multiple AWS accounts, see here.
1. If you're a GCP user, prepare your GCP credential(s).
    1. Create an identity, either a User Account or a Service Account, for Cartography to run as.
    1. Ensure that this identity has the `securityReviewer` role attached to it.
    1. Ensure that the machine you are running Cartography on can authenticate to this identity.
        - Method 1: Set your `GOOGLE_APPLICATION_CREDENTIALS` environment variable to point to a JSON file containing your credentials. As per SecurityCommonSense™️, please ensure that only the user account that runs Cartography has read access to this sensitive file.
        - Method 2: If you are running Cartography on a GCE instance or other GCP service, you can make use of the credential management provided by the default service accounts on these services. See the official docs on Application Default Credentials for more details.
1. Get and run Cartography.
    1. Run `pip install cartography` to install our code.
    1. Finally, to sync your data:
        - If you have one AWS account, run `cartography --neo4j-uri <uri for your neo4j instance; usually bolt://localhost:7687>`
        - If you have more than one AWS account, run `AWS_CONFIG_FILE=/path/to/your/aws/config cartography --neo4j-uri <uri for your neo4j instance; usually bolt://localhost:7687> --aws-sync-all-profiles`

The sync will pull data from your configured accounts and ingest it into Neo4j! This process might take a long time if your accounts have a lot of assets.
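If you schedule the sync from a script (a cron job, for example), it can help to build the two command lines above in one place. The following is a minimal sketch, assuming `cartography` is on your `PATH` after `pip install cartography`. The helper names `build_sync_command` and `run_sync` are hypothetical. Only the `--neo4j-uri` and `--aws-sync-all-profiles` flags and the `AWS_CONFIG_FILE` variable come from the steps above.

```python
import os
import subprocess
from typing import Dict, List, Optional, Tuple

DEFAULT_NEO4J_URI = "bolt://localhost:7687"


def build_sync_command(
    neo4j_uri: str = DEFAULT_NEO4J_URI,
    aws_config_file: Optional[str] = None,
) -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, env) for one of the two sync invocations shown above.

    Hypothetical helper: with no config file it builds the single-account
    command; with one it sets AWS_CONFIG_FILE and adds --aws-sync-all-profiles.
    """
    argv = ["cartography", "--neo4j-uri", neo4j_uri]
    env = dict(os.environ)  # inherit the current environment (PATH, HOME, ...)
    if aws_config_file is not None:
        # Multi-account mode: point the AWS SDK at the config file and ask
        # Cartography to sync every profile defined in it.
        env["AWS_CONFIG_FILE"] = aws_config_file
        argv.append("--aws-sync-all-profiles")
    return argv, env


def run_sync(
    neo4j_uri: str = DEFAULT_NEO4J_URI,
    aws_config_file: Optional[str] = None,
) -> int:
    """Run the sync and return Cartography's exit code (0 means success)."""
    argv, env = build_sync_command(neo4j_uri, aws_config_file)
    return subprocess.run(argv, env=env, check=False).returncode
```

For example, `run_sync(aws_config_file="/path/to/your/aws/config")` mirrors the multi-account command. Passing an argument list instead of a shell string avoids quoting problems with the URI.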


Once everything has been installed and synced, you can view the Neo4j web interface at http://localhost:7474. You can view the reference on this here.

ℹ️ Already know how to query Neo4j? You can skip to our reference material!

If you already know Neo4j and just need to know what the nodes, attributes, and graph relationships are in our representation of infrastructure assets, you can skip this handholdy walkthrough and see our quick canned queries. You can also view our reference material.

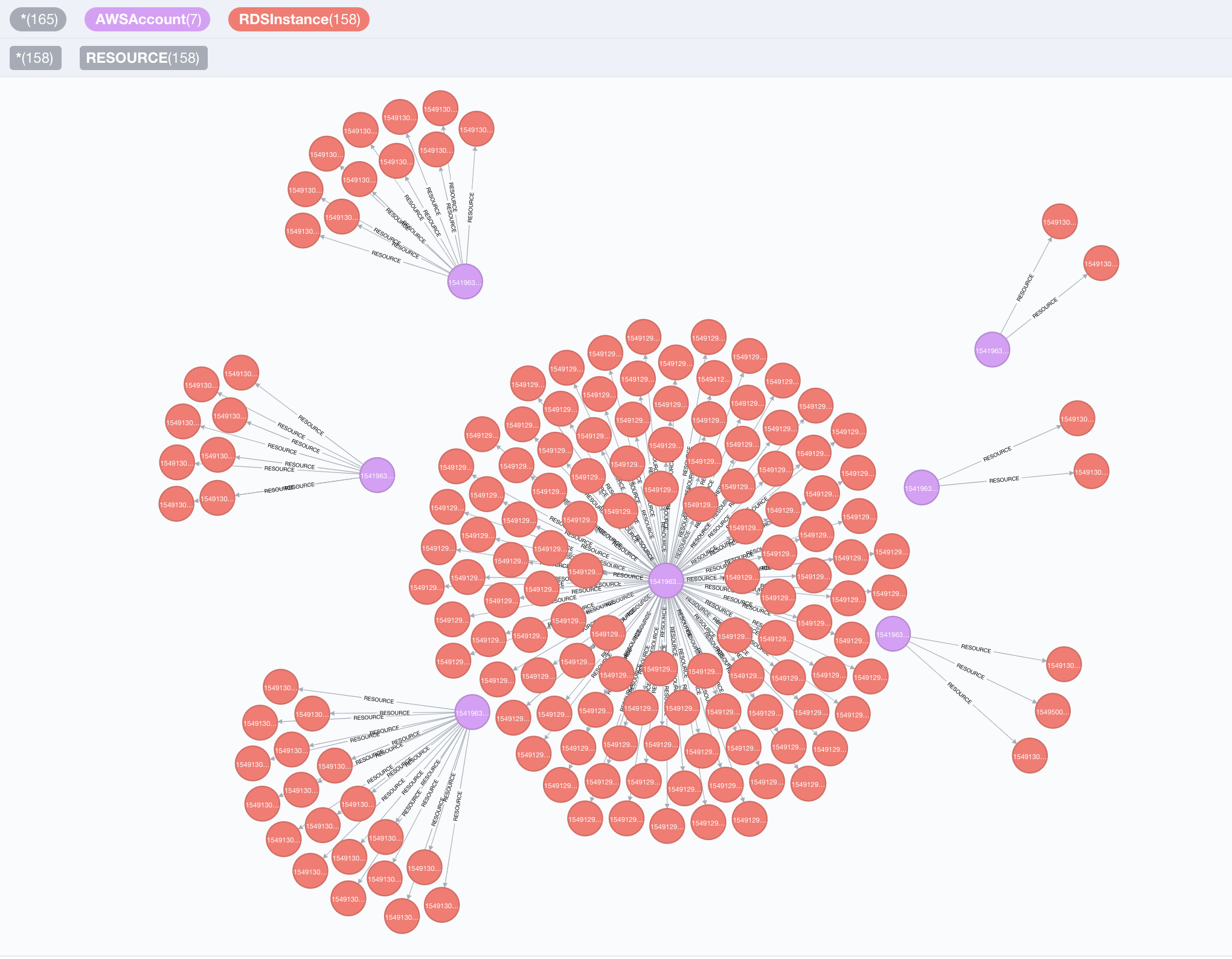

```
MATCH (aws:AWSAccount)-[r:RESOURCE]->(rds:RDSInstance)
RETURN *
```

In this query we asked Neo4j to find all [:RESOURCE] relationships from AWSAccounts to RDSInstances, and return the nodes and the :RESOURCE relationships.

We will do more interesting things with this result next.

You can adjust the node colors, sizes, and captions by clicking on the node type at the top of the query results. For example, to change the color of an AWSAccount node, first click the "AWSAccount" icon at the top of the view to select the node type, and then pick options on the menu that shows up at the bottom of the view, like this:

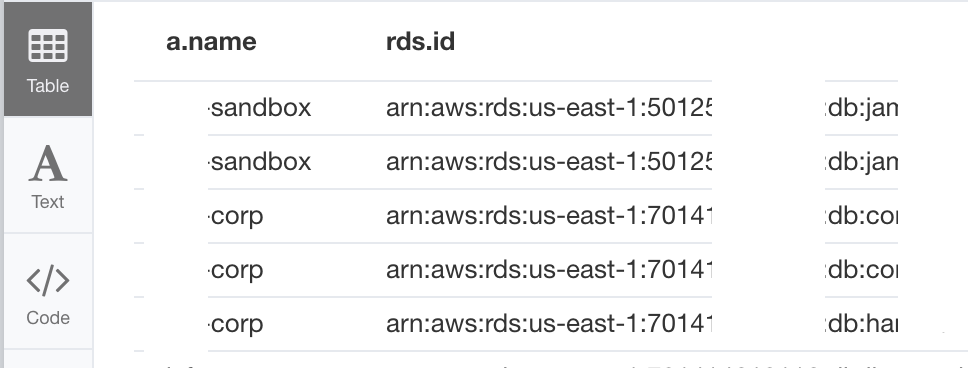

Which RDS instances have encryption turned off?

```
MATCH (a:AWSAccount)-[:RESOURCE]->(rds:RDSInstance{storage_encrypted:false})
RETURN a.name, rds.id
```

The results show up in a table because we specified attributes like a.name and rds.id in our return statement (as opposed to having it return *). We used the "{}" notation to have the query only return RDSInstances where storage_encrypted is set to False.

If you want to go back to viewing the graph instead of a table, make sure your return statement doesn't list any attributes: use `RETURN *` to return every node and relationship bound to a variable in your MATCH statement, or return just the specific nodes and relationships that you want.
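Table-style queries like this one are also what you would run when using the graph in an automated fashion. The following is a minimal sketch that assumes the official `neo4j` Python driver is installed, in a version compatible with your Neo4j server, and that your instance uses username/password authentication. The function names are hypothetical and are not part of Cartography. When `RETURN` lists expressions without `AS`, each result column is named after its expression text, so the row keys are `a.name` and `rds.id`.

```python
from typing import Dict, Iterable, List, Mapping

# The query from the walkthrough above.
UNENCRYPTED_RDS_QUERY = """
MATCH (a:AWSAccount)-[:RESOURCE]->(rds:RDSInstance{storage_encrypted:false})
RETURN a.name, rds.id
"""


def rows_to_report(records: Iterable[Mapping[str, object]]) -> List[Dict[str, object]]:
    """Turn result rows (keyed by expression text) into plain dicts."""
    return [{"account": r["a.name"], "rds_id": r["rds.id"]} for r in records]


def fetch_unencrypted_rds(uri: str, user: str, password: str) -> List[Dict[str, object]]:
    """Run the query against a live Neo4j instance (assumes the neo4j driver)."""
    # Imported lazily so rows_to_report can be used without the driver installed.
    from neo4j import GraphDatabase

    driver = GraphDatabase.driver(uri, auth=(user, password))
    try:
        with driver.session() as session:
            result = session.run(UNENCRYPTED_RDS_QUERY)
            # Materialize the rows while the session is still open.
            return rows_to_report(list(result))
    finally:
        driver.close()
```

A call such as `fetch_unencrypted_rds("bolt://localhost:7687", "neo4j", "<your password>")` returns one dict per unencrypted instance. Keeping the row conversion separate makes it easy to test without a database.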

Let's look at some other AWS assets now.

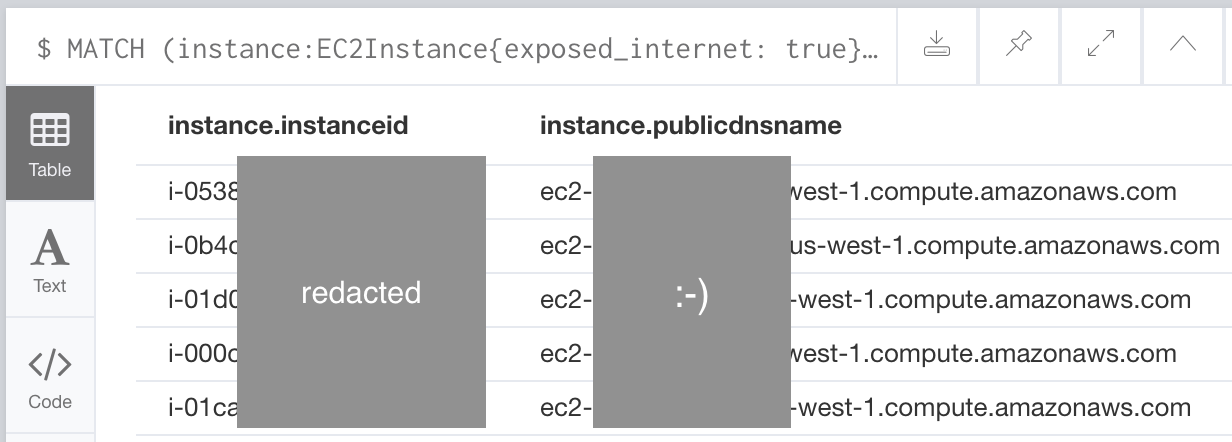

Which EC2 instances are directly exposed to the internet?

```
MATCH (instance:EC2Instance{exposed_internet: true})
RETURN instance.instanceid, instance.publicdnsname
```

These instances are open to the internet either through permissive inbound IP permissions defined on their EC2SecurityGroups or their NetworkInterfaces.

If you know a lot about AWS, you may have noticed that EC2 instances don't actually have an exposed_internet field. We're able to query for this because Cartography performs some data enrichment to add this field to EC2Instance nodes.
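To make that enrichment concrete, here is a plain-Python sketch of the EC2 rule summarized in the custom attributes reference. An instance counts as exposed if a security group attached directly, or through one of its network interfaces, allows `0.0.0.0/0`, or if the instance sits behind an internet-facing load balancer. Cartography implements this as analysis jobs, not with this code. The classes below are hypothetical and deliberately simplified: they ignore ports, protocols, and IPv6 `::/0`.

```python
from dataclasses import dataclass, field
from typing import List

OPEN_TO_WORLD = "0.0.0.0/0"


@dataclass
class SecurityGroup:
    group_id: str
    # Hypothetical flattened view of the group's inbound rules.
    inbound_cidrs: List[str] = field(default_factory=list)


@dataclass
class NetworkInterface:
    security_groups: List[SecurityGroup] = field(default_factory=list)


@dataclass
class LoadBalancer:
    scheme: str  # "internet-facing" or "internal"

    @property
    def exposed_internet(self) -> bool:
        # ELB rule: exposed when the scheme is internet-facing.
        return self.scheme == "internet-facing"


@dataclass
class EC2Instance:
    instance_id: str
    security_groups: List[SecurityGroup] = field(default_factory=list)
    network_interfaces: List[NetworkInterface] = field(default_factory=list)
    load_balancers: List[LoadBalancer] = field(default_factory=list)


def is_exposed_internet(instance: EC2Instance) -> bool:
    """Sketch of the EC2 exposed_internet rule described in this README."""
    # Collect groups attached directly and via network interfaces.
    groups = list(instance.security_groups)
    for nic in instance.network_interfaces:
        groups.extend(nic.security_groups)
    if any(OPEN_TO_WORLD in sg.inbound_cidrs for sg in groups):
        return True
    # Otherwise, inherit exposure from any internet-facing load balancer.
    return any(lb.exposed_internet for lb in instance.load_balancers)
```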

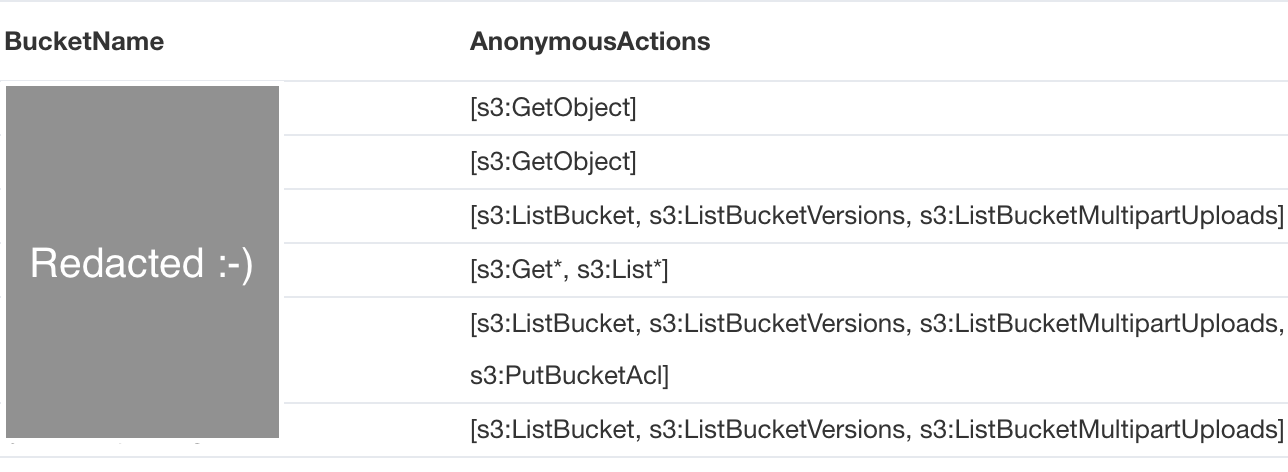

Which S3 buckets have a policy granting any level of anonymous access to the bucket?

```
MATCH (s:S3Bucket)
WHERE s.anonymous_access = true
RETURN s
```

These S3 buckets allow any user to read data from them anonymously. As with the EC2 instance example above, S3 buckets returned by the S3 API don't actually have an anonymous_access field; this field is added by one of Cartography's data augmentation steps.
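Cartography makes this determination with the policyuniverse library. The sketch below is a simplified stand-in, not Cartography's code. It checks whether any grant in a bucket ACL targets the predefined AWS "All Users" or "Authenticated Users" groups. It assumes the ACL dict has the shape of an S3 GetBucketAcl response (for example, what boto3's `get_bucket_acl(Bucket=...)` returns). The function name is hypothetical.

```python
from typing import Any, Dict

# URIs that S3 uses for its predefined grantee groups.
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTHENTICATED_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"
PUBLIC_GROUP_URIS = {ALL_USERS, AUTHENTICATED_USERS}


def acl_allows_anonymous(acl: Dict[str, Any]) -> bool:
    """Return True if any ACL grant targets All Users or Authenticated Users.

    `acl` is shaped like a GetBucketAcl response:
    {"Owner": {...}, "Grants": [{"Grantee": {...}, "Permission": "READ"}, ...]}
    """
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        # Group grantees are identified by URI; users by canonical ID or email.
        if grantee.get("Type") == "Group" and grantee.get("URI") in PUBLIC_GROUP_URIS:
            return True
    return False
```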

Also notice that instead of using the "{}" notation to filter for anonymous buckets, this query uses a SQL-style WHERE clause.

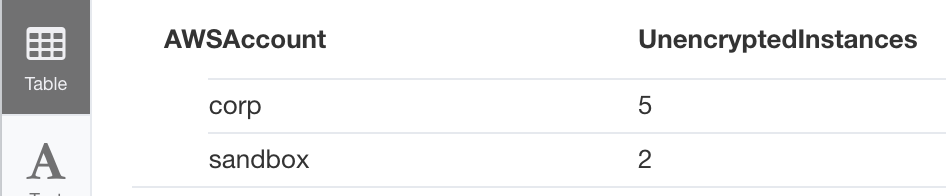

Let's go back to analyzing RDS instances. In an earlier example we queried for RDS instances that have encryption turned off. We can aggregate this data by AWSAccount with a small change. This query also uses the SQL-style AS operator to relabel the output column headers:

```
MATCH (a:AWSAccount)-[:RESOURCE]->(rds:RDSInstance)
WHERE rds.storage_encrypted = false
RETURN a.name AS AWSAccount, count(rds) AS UnencryptedInstances
```
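In Cypher, every non-aggregated expression in `RETURN` (here `a.name`) acts as an implicit grouping key for `count()`. Accounts with no unencrypted instances produce no row at all, because `MATCH` finds no paths for them. The plain-Python sketch below mimics that grouping over hypothetical `(account name, storage_encrypted)` pairs, one per matched path.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def unencrypted_per_account(pairs: Iterable[Tuple[str, bool]]) -> Dict[str, int]:
    """Mimic: WHERE rds.storage_encrypted = false
    RETURN a.name AS AWSAccount, count(rds) AS UnencryptedInstances
    """
    # `is False` mirrors Cypher: a missing (null) property does not equal false.
    counts = Counter(account for account, encrypted in pairs if encrypted is False)
    return dict(counts)
```

For example, `[("prod", False), ("prod", True), ("prod", False), ("dev", False), ("staging", True)]` yields `{"prod": 2, "dev": 1}`; `staging` is absent, just as it would be from the Cypher query.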

If you want to learn more in depth about Neo4j and Cypher queries you can look at this tutorial and see this reference card.

You can add your own custom attributes and relationships without writing Python code! Here's how.

This project is governed by Lyft's code of conduct. All contributors and participants agree to abide by its terms.

See these docs.

We require a CLA for code contributions, so before we can accept a pull request we need to have a signed CLA. Please visit our CLA service and follow the instructions to sign the CLA.

In general, all enhancements or bugs should be tracked via GitHub issues before PRs are submitted. We don't require them, but it'll help us plan and track.

When submitting bugs through issues, please try to be as descriptive as possible. It'll make it easier and quicker for everyone if the developers can easily reproduce your bug.

Our only method of accepting code changes is through GitHub pull requests.

Detailed view of our schema and all data types 😁.

```
MATCH (aws:AWSAccount)-[r:RESOURCE]->(rds:RDSInstance)
RETURN *
```

Which RDS instances have encryption turned off?

```
MATCH (a:AWSAccount)-[:RESOURCE]->(rds:RDSInstance{storage_encrypted:false})
RETURN a.name, rds.id
```

Which EC2 instances are directly exposed to the internet?

```
MATCH (instance:EC2Instance{exposed_internet: true})
RETURN instance.instanceid, instance.publicdnsname
```

Which S3 buckets have a policy granting any level of anonymous access to the bucket?

```
MATCH (s:S3Bucket)
WHERE s.anonymous_access = true
RETURN s
```

```
MATCH (a:AWSAccount)-[:RESOURCE]->(rds:RDSInstance)
WHERE rds.storage_encrypted = false
RETURN a.name AS AWSAccount, count(rds) AS UnencryptedInstances
```

Cartography adds custom attributes to nodes and relationships to point out security-related items of interest. Unless mentioned otherwise these data augmentation jobs are stored in cartography/data/jobs/analysis. Here is a summary of all of Cartography's custom attributes.

- `exposed_internet` indicates whether the asset is accessible to the public internet.
    - Elastic Load Balancers: The `exposed_internet` flag is set to `True` when the load balancer's `scheme` field is set to `internet-facing`. This indicates that the load balancer has a public DNS name that resolves to a public IP address.
    - EC2 instances: The `exposed_internet` flag on an EC2 instance is set to `True` when any of the following apply:
        - The instance is part of an EC2 security group, or is connected to a network interface attached to an EC2 security group, that allows connectivity from the 0.0.0.0/0 subnet.
        - The instance is connected to an Elastic Load Balancer that has its own `exposed_internet` flag set to `True`.
    - ElasticSearch domains: `exposed_internet` is set to `True` if the ElasticSearch domain has a policy applied to it that makes it internet-accessible. This policy determination is made by using the policyuniverse library. The code for this augmentation is implemented at `cartography.intel.aws.elasticsearch._process_access_policy()`.
- `anonymous_access` indicates whether the asset allows access without needing to specify an identity.
    - S3 buckets: `anonymous_access` is set to `True` on an S3 bucket if this bucket has an S3Acl with a policy applied to it that allows the predefined AWS "Authenticated Users" or "All Users" groups to access it. These determinations are made by using the policyuniverse library.

There are many ways to allow Cartography to pull from more than one AWS account. We can't cover all of them, but we can show you the way we have things set up at Lyft. In this scenario we will assume that you are going to run Cartography on an EC2 instance.

1. Pick one of your AWS accounts to be the "Hub" account. This Hub account will pull data from all of your other accounts; we'll call those "Spoke" accounts.
1. Set up the IAM roles: Create an IAM role named `cartography-read-only` on all of your accounts. Configure the role on all accounts as follows:
    1. Attach the built-in AWS SecurityAudit IAM policy (`arn:aws:iam::aws:policy/SecurityAudit`) to the role. This grants access to read security config metadata.
    1. Set up a trust relationship so that the Spoke accounts will allow the Hub account to assume the `cartography-read-only` role. The resulting trust relationship should look something like this:

        ```
        {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Effect": "Allow",
              "Principal": {
                "AWS": "arn:aws:iam::<Hub's account number>:root"
              },
              "Action": "sts:AssumeRole"
            }
          ]
        }
        ```
1. Allow a role in the Hub account to assume the `cartography-read-only` role on your Spoke account(s).
    1. On the Hub account, create a role called `cartography-service`.
    1. On this new `cartography-service` role, add an inline policy with the following JSON:

        ```
        {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Effect": "Allow",
              "Resource": "arn:aws:iam::*:role/cartography-read-only",
              "Action": "sts:AssumeRole"
            }
          ]
        }
        ```

        This allows the Hub role to assume the `cartography-read-only` role on your Spoke accounts.
    1. When prompted to name the policy, you can name it anything you want, perhaps `CartographyAssumeRolePolicy`.
1. Set up your EC2 instance to correctly access these AWS identities.
    1. Attach the `cartography-service` role to the EC2 instance that you will run Cartography on. You can do this by following these official AWS steps.
    1. Ensure that the `[default]` profile in your `AWS_CONFIG_FILE` file (default `~/.aws/config` in Linux, and `%UserProfile%\.aws\config` in Windows) looks like this:

        ```
        [default]
        region=<the region of your Hub account, e.g. us-east-1>
        output=json
        ```
    1. Add a profile for each AWS account you want Cartography to sync with to your `AWS_CONFIG_FILE`. It will look something like this:

        ```
        [profile accountname1]
        role_arn = arn:aws:iam::<AccountId#1>:role/cartography-read-only
        region=us-east-1
        output=json
        credential_source = Ec2InstanceMetadata

        [profile accountname2]
        role_arn = arn:aws:iam::<AccountId#2>:role/cartography-read-only
        region=us-west-1
        output=json
        credential_source = Ec2InstanceMetadata

        ... etc ...
        ```
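If you manage many Spoke accounts, you may prefer to generate the two IAM policy documents above rather than hand-edit them. The following is a minimal sketch that reproduces exactly the JSON shown in the steps. The function names are hypothetical, and the account ID in the example is a placeholder. The check for a 12-digit account ID reflects the standard AWS account number format.

```python
import json
from typing import Any, Dict

READ_ONLY_ROLE = "cartography-read-only"


def spoke_trust_policy(hub_account_id: str) -> Dict[str, Any]:
    """Trust policy for cartography-read-only in each Spoke account."""
    if not (len(hub_account_id) == 12 and hub_account_id.isdigit()):
        raise ValueError(f"expected a 12-digit AWS account ID, got {hub_account_id!r}")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{hub_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def hub_assume_role_policy(role_name: str = READ_ONLY_ROLE) -> Dict[str, Any]:
    """Inline policy for cartography-service in the Hub account."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The wildcard account lets the Hub assume the role in every Spoke.
                "Resource": f"arn:aws:iam::*:role/{role_name}",
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder Hub account number; substitute your own.
    print(json.dumps(spoke_trust_policy("123456789012"), indent=2))
    print(json.dumps(hub_assume_role_policy(), indent=2))
```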

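Likewise, the `AWS_CONFIG_FILE` profiles can be generated from a list of accounts. The sketch below uses Python's standard `configparser` to write a `[default]` section plus one `[profile ...]` section per Spoke account, with the same keys as the example config above. The function name and the input mapping of profile name to `(account ID, region)` are hypothetical. `configparser` writes `key = value` with spaces around `=`, which the AWS config format accepts.

```python
import configparser
import io
from typing import Dict, Tuple


def build_aws_config(
    hub_region: str,
    accounts: Dict[str, Tuple[str, str]],
    role_name: str = "cartography-read-only",
) -> str:
    """Render an AWS config file; `accounts` maps profile name -> (account ID, region)."""
    config = configparser.ConfigParser()
    # Lowercase "default" is a normal section here (configparser reserves "DEFAULT").
    config["default"] = {"region": hub_region, "output": "json"}
    for profile, (account_id, region) in accounts.items():
        config[f"profile {profile}"] = {
            "role_arn": f"arn:aws:iam::{account_id}:role/{role_name}",
            "region": region,
            "output": "json",
            # Use the EC2 instance's attached role (cartography-service) as the source.
            "credential_source": "Ec2InstanceMetadata",
        }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()
```

You could then write the result to the path you pass as `AWS_CONFIG_FILE` when running `cartography --aws-sync-all-profiles`.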