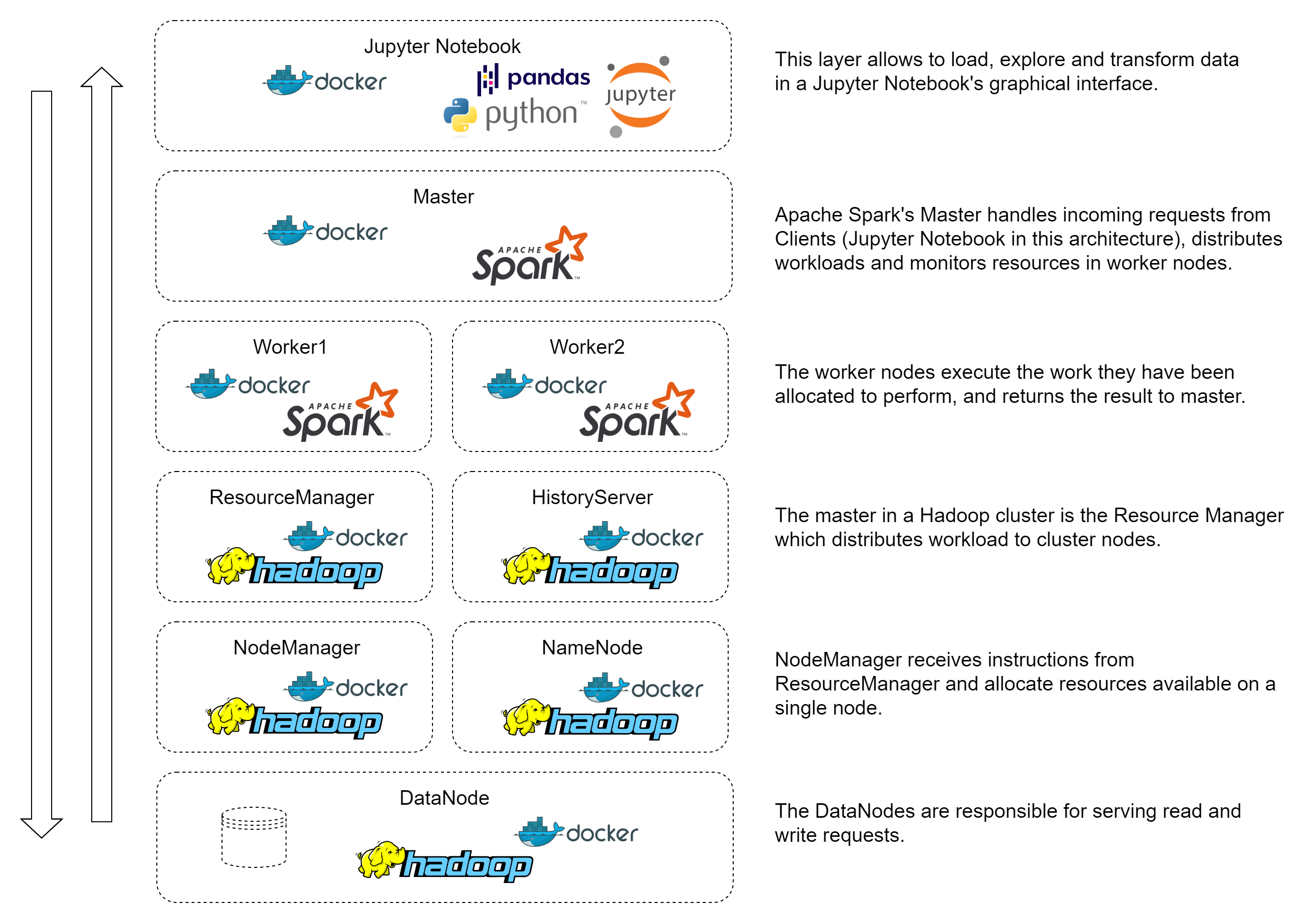

Scale your data management by distributing workload and storage across Hadoop and Spark clusters, then explore and transform your data in Jupyter Notebook.

The purpose of this tutorial is to show how to get started with Hadoop, Spark, and Jupyter for your big data solution, deployed as Docker containers.

- Only confirmed working on Linux/Windows (Apple Silicon might have issues).

- Ensure Docker is installed.

Execute `bash master-build.sh` to build and start the containers.

Access the Hadoop UI at http://localhost:9870

Access the Spark Master UI at http://localhost:8080

Access the Jupyter UI at http://localhost:8888
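Once the containers are up, a quick way to confirm that the three UIs are listening is a small port check. The following is a minimal sketch and not part of the project: the `SERVICES` mapping and the `port_is_open` helper are names made up for this example, and the ports are the ones listed above. It only verifies that a TCP connection can be opened on each port, not that the page itself renders correctly.

```python
import socket

# Ports as listed in this README; the labels are chosen for this sketch only.
SERVICES = {
    "Hadoop UI": 9870,
    "Spark Master UI": 8080,
    "Jupyter UI": 8888,
}


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to (host, port) can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    for name, port in SERVICES.items():
        status = "up" if port_is_open("localhost", port) else "not reachable"
        print(f"{name} (port {port}): {status}")
```

If a service shows as not reachable right after the build, it may simply still be starting, so retrying after a short wait is reasonable before investigating further.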

- Fork the Project

- Create your Feature Branch (`git checkout -b feature/featureName`)

- Commit your Changes (`git commit -m 'Add some featureName'`)

- Push to the Branch (`git push origin feature/featureName`)

- Open a Pull Request

LinkedIn : martin-karlsson

Twitter : @HelloKarlsson

Email : hello@martinkarlsson.io

Webpage : www.martinkarlsson.io

Project Link: github.com/martinkarlssonio/big-data-solution