K-FAC in TensorFlow is an implementation of K-FAC, an approximate second-order optimization method, in TensorFlow. When applied to feedforward and convolutional neural networks, K-FAC can converge >3.5x faster in >14x fewer iterations than SGD with Momentum.

kfac is compatible with Python 2 and 3 and can be installed directly via pip:

```shell
# Assumes tensorflow or tensorflow-gpu installed
$ pip install kfac

# Installs with tensorflow-gpu requirement
$ pip install 'kfac[tensorflow_gpu]'

# Installs with tensorflow (cpu) requirement
$ pip install 'kfac[tensorflow]'
```

K-FAC, short for "Kronecker-factored Approximate Curvature", is an approximation to the Natural Gradient algorithm designed specifically for neural networks. It maintains a block-diagonal approximation to the Fisher Information matrix, whose inverse preconditions the gradient.
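To make the "Kronecker-factored" part concrete, here is a minimal NumPy sketch for a single fully-connected layer. It is an illustration under stated assumptions, not the kfac library's implementation: the layer's Fisher block is approximated as the Kronecker product of an input covariance `A` and a pre-activation-gradient covariance `G`, and the names `a`, `g`, `damping`, and the random data are hypothetical. Adding `damping` to each factor separately is a simplification chosen to keep the factors invertible.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, n_in, n_out = 64, 5, 3

# Hypothetical per-example quantities for one fully-connected layer.
a = rng.normal(size=(batch, n_in))   # layer inputs
g = rng.normal(size=(batch, n_out))  # loss gradients w.r.t. pre-activations
grad_w = a.T @ g / batch             # ordinary gradient for W, shape (n_in, n_out)

# Kronecker factors: second moments of inputs and of pre-activation gradients.
damping = 1e-2  # assumed value, keeps both factors well-conditioned
A = a.T @ a / batch + damping * np.eye(n_in)
G = g.T @ g / batch + damping * np.eye(n_out)

# Cheap route: (A kron G)^-1 vec(grad_w) == vec(A^-1 grad_w G^-1),
# so only the two small factors are ever solved against.
left = np.linalg.solve(A, grad_w)       # A^-1 grad_w
precond = np.linalg.solve(G, left.T).T  # ... times G^-1 (G is symmetric)

# Dense reference: build the full (n_in*n_out) x (n_in*n_out) block explicitly.
fisher_block = np.kron(A, G)
dense = np.linalg.solve(fisher_block, grad_w.ravel()).reshape(n_in, n_out)

assert np.allclose(precond, dense)
```

The dense route solves a system of size `n_in * n_out`, while the factored route only touches matrices of size `n_in` and `n_out`, which is what makes this kind of preconditioning affordable for wide layers.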

K-FAC can be used in place of SGD, Adam, and other Optimizer implementations.

Experimentally, K-FAC converges >3.5x faster than well-tuned SGD.

Unlike most optimizers, K-FAC exploits structure in the model itself (e.g. "What are the weights for layer i?"). As such, you must add some additional code while constructing your model to use K-FAC.

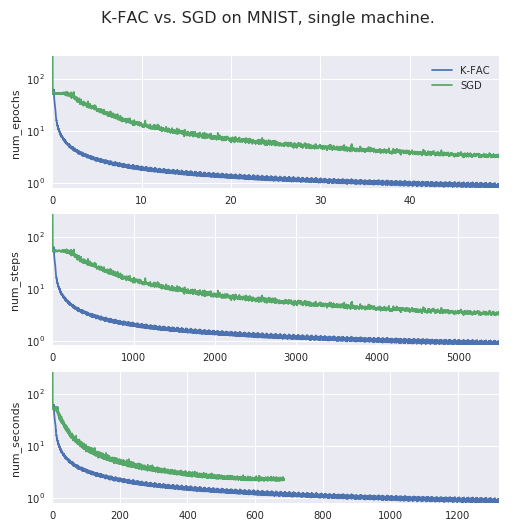

K-FAC can take advantage of the curvature of the optimization problem, resulting in faster training. For an 8-layer Autoencoder, K-FAC converges to the same loss as SGD with Momentum in 3.8x fewer seconds and 14.7x fewer updates. See how training loss changes as a function of number of epochs, steps, and seconds:

If you have a feedforward or convolutional model for classification that is converging too slowly, K-FAC is for you. K-FAC can be used in your model if:

- Your model defines a posterior distribution.

- Your model uses only fully-connected or convolutional layers (residual connections OK).

- You are training on CPU or GPU.

- You can modify model code to register layers with K-FAC.

Using K-FAC requires three steps:

- Registering layer inputs, weights, and pre-activations with a `LayerCollection`.

- Minimizing the loss with a `KfacOptimizer`.

- Keeping K-FAC's preconditioner updated.

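```python
import tensorflow as tf
# Assumed import path for the two classes used below; adjust to your kfac version.
from kfac import KfacOptimizer, LayerCollection

# Build model. `x` (inputs) and `y` (labels) are assumed to be defined elsewhere.
w = tf.get_variable("w", ...)
b = tf.get_variable("b", ...)
logits = tf.matmul(x, w) + b
loss = tf.reduce_mean(
    tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=logits))

# Register layers: parameters, layer input, and pre-activations (here, the logits).
layer_collection = LayerCollection()
layer_collection.register_fully_connected((w, b), x, logits)
layer_collection.register_categorical_predictive_distribution(logits)

# Construct training ops.
optimizer = KfacOptimizer(..., layer_collection=layer_collection)
_, cov_update_op, _, inv_update_op, _, _ = optimizer.make_ops_and_vars()
train_op = optimizer.minimize(loss)

# Minimize loss, running the preconditioner update ops alongside each training step.
with tf.Session() as sess:
  ...
  sess.run([train_op, cov_update_op, inv_update_op])
```

See examples/ for runnable, end-to-end illustrations.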
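The third step, keeping the preconditioner updated, is why the snippet runs `cov_update_op` and `inv_update_op` alongside `train_op`. The NumPy sketch below shows one plausible shape for that bookkeeping: a running covariance estimate refreshed on every step, and a damped inverse recomputed only occasionally. It is not the library's code. The helper names `update_cov` and `damped_inverse` and the values of `decay`, `damping`, and `inv_every` are hypothetical, and inverting less often than every step is an assumed cost-saving choice, whereas the snippet above runs both ops on each step.

```python
import numpy as np


def update_cov(running_cov, batch_values, decay=0.95):
    """Blend the current batch's second-moment matrix into a running average."""
    batch_cov = batch_values.T @ batch_values / batch_values.shape[0]
    return decay * running_cov + (1.0 - decay) * batch_cov


def damped_inverse(cov, damping=1e-3):
    """Invert a covariance estimate after adding damping to its diagonal."""
    return np.linalg.inv(cov + damping * np.eye(cov.shape[0]))


rng = np.random.default_rng(0)
n_in = 4
inv_every = 10  # hypothetical: refresh the inverse every 10 steps

running_cov = np.eye(n_in)  # start from the identity
cov_inv = damped_inverse(running_cov)
num_inversions = 0

for step in range(1, 51):
    a = rng.normal(size=(32, n_in))           # stand-in for a batch of layer inputs
    running_cov = update_cov(running_cov, a)  # cheap, done on every step
    if step % inv_every == 0:                 # costly, done only occasionally
        cov_inv = damped_inverse(running_cov)
        num_inversions += 1
```

Between refreshes the optimizer would keep using a slightly stale `cov_inv`, trading a little accuracy in the preconditioner for fewer matrix inversions.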

- Alok Aggarwal

- Daniel Duckworth

- James Martens

- Matthew Johnson

- Olga Wichrowska

- Roger Grosse