Fast artistic style transfer by using feed forward network.

checkout resize-conv branch which provides better result.

- input image size: 1024x768

- process time(CPU): 17.78sec (Core i7-5930K)

- process time(GPU): 0.994sec (GPU TitanX)

$ pip install chainer

Download VGG16 model and convert it into smaller file so that we use only the convolutional layers which are 10% of the entire model.

sh setup_model.sh

Need to train one image transformation network model per one style target. According to the paper, the models are trained on the Microsoft COCO dataset.

python train.py -s <style_image_path> -d <training_dataset_path> -g <use_gpu ? gpu_id : -1>

python generate.py <input_image_path> -m <model_path> -o <output_image_path> -g <use_gpu ? gpu_id : -1>

This repo has pretrained models as an example.

- example:

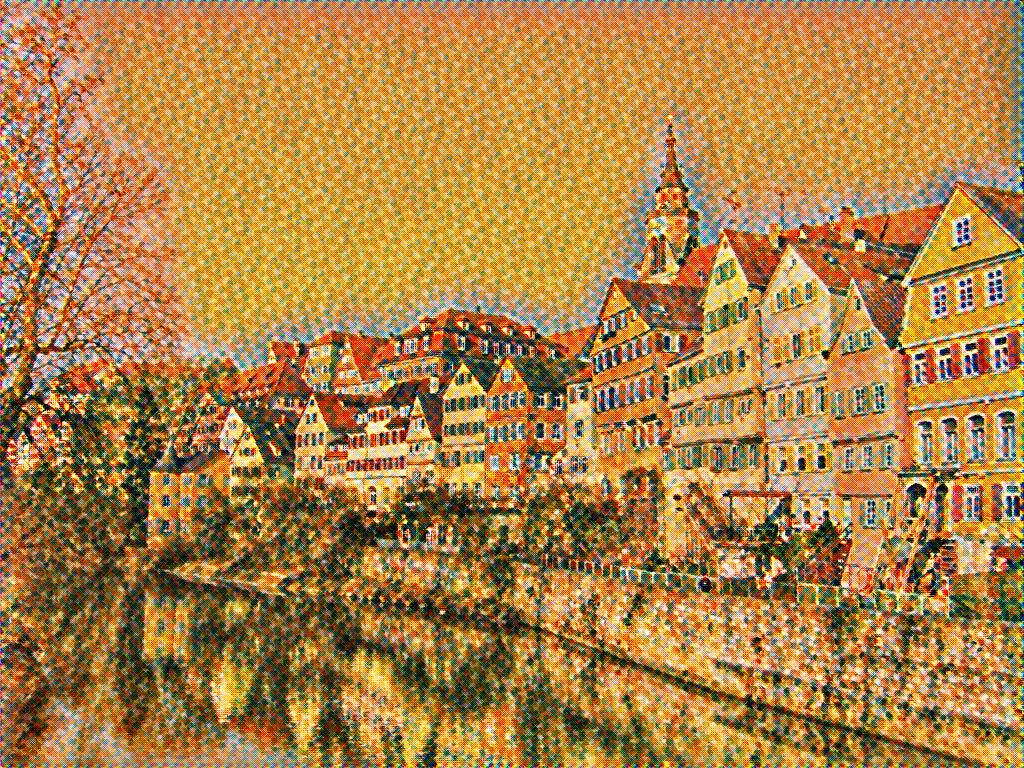

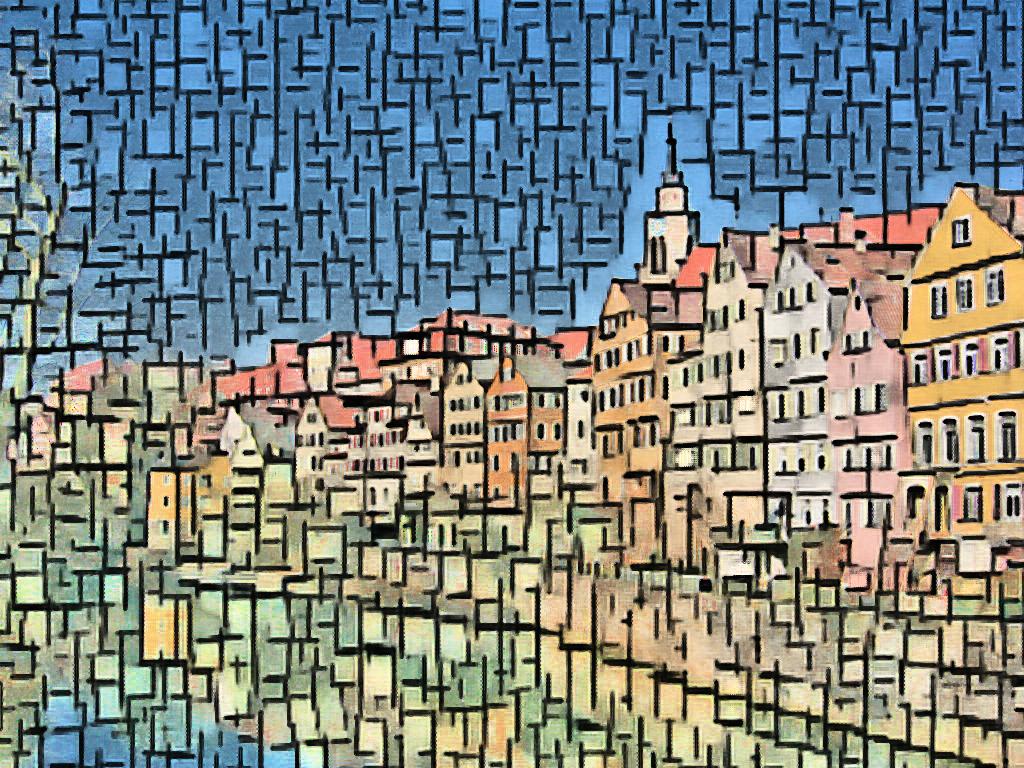

python generate.py sample_images/tubingen.jpg -m models/composition.model -o sample_images/output.jpg

or

python generate.py sample_images/tubingen.jpg -m models/seurat.model -o sample_images/output.jpg

python generate.py <input_image_path> -m <model_path> -o <output_image_path> -g <use_gpu ? gpu_id : -1> --keep_colors

Fashizzle Dizzle created pre-trained models collection repository, chainer-fast-neuralstyle-models. You can find a variety of models.

- Convolution kernel size 4 instead of 3.

- Training with batchsize(n>=2) causes unstable result.

This version is not compatible with the previous versions. You can't use models trained by the previous implementation. Sorry for the inconvenience!

MIT

Codes written in this repository based on following nice works, thanks to the author.

- chainer-gogh Chainer implementation of neural-style. I heavily referenced it.

- chainer-cifar10 Residual block implementation is referred.