This repository contains the source code for DM-Codec.

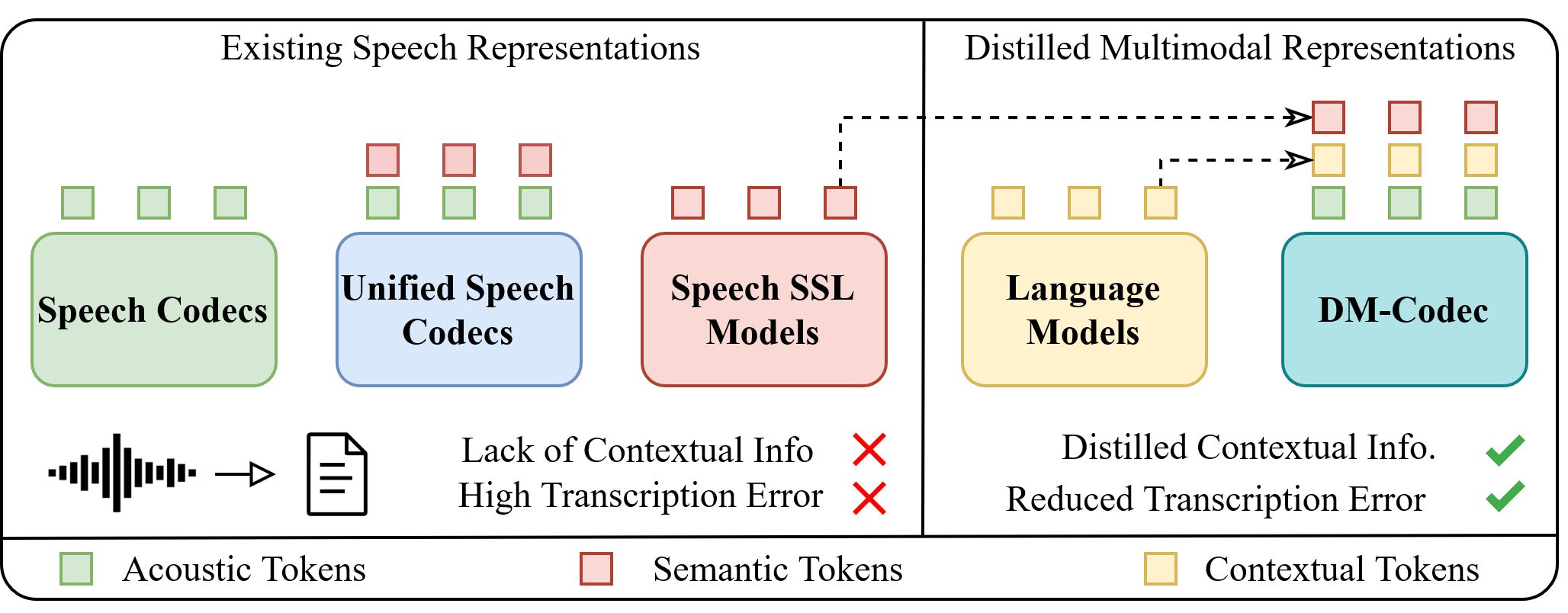

As illustrated in Figure 1, DM-Codec introduces speech tokenization approaches that use discrete acoustic, semantic, and contextual tokens. By integrating these multimodal representations, it learns comprehensive speech representations for robust speech tokenization.

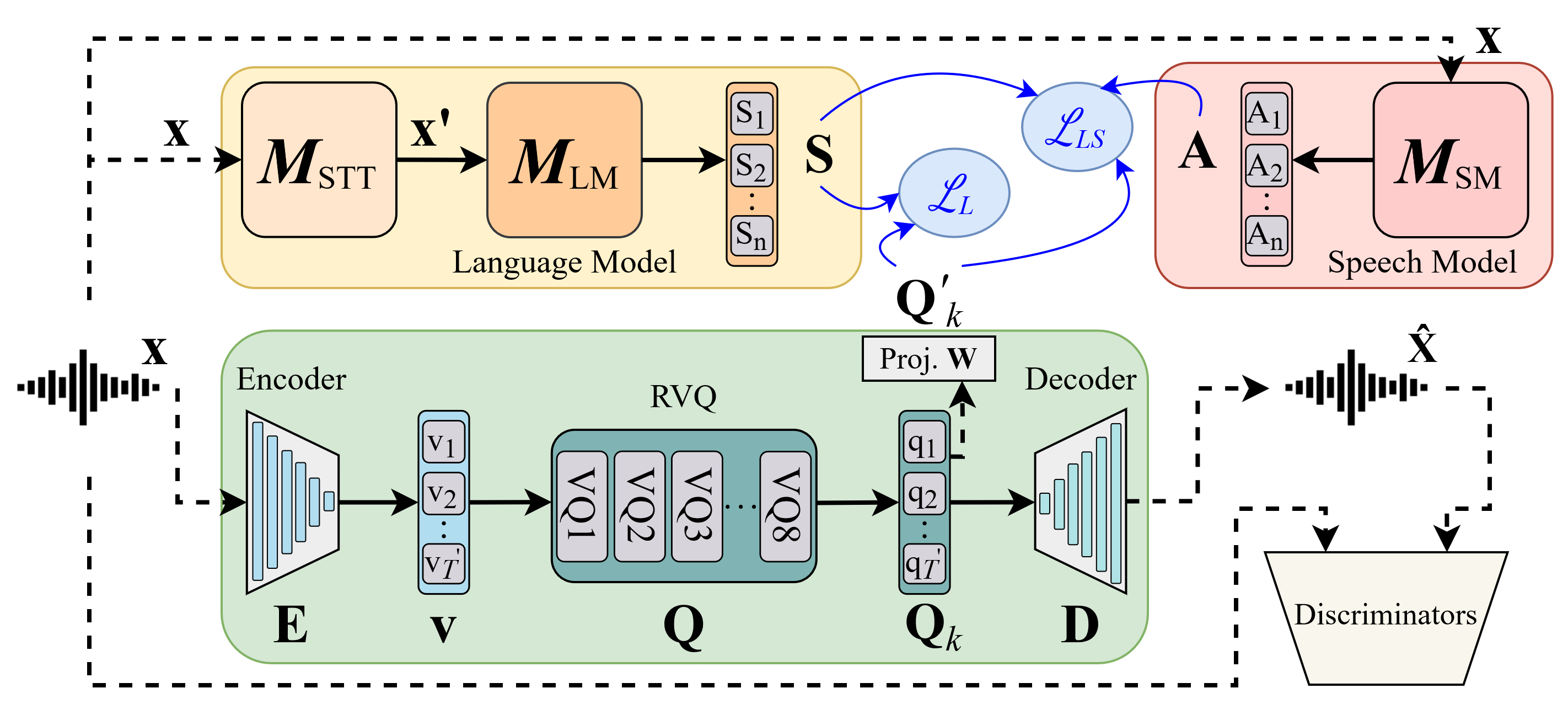

The DM-Codec framework is further detailed in Figure 2. The framework consists of an encoder that extracts latent representations from the input speech signal. These latent vectors are then quantized using a Residual Vector Quantizer (RVQ). We designed two distinct distillation approaches: (i) distillation from a language model (LM), and (ii) combined distillation from both an LM and a speech model (SM). These approaches integrate acoustic, semantic, and contextual representations into the quantized vectors to improve speech representation for downstream tasks.
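To make the quantization step concrete, here is a minimal NumPy sketch of residual vector quantization in general. It is not the DM-Codec implementation: the function name `residual_vector_quantize`, the random codebooks, and all sizes (50 frames, 8 dimensions, 4 codebooks of 16 entries) are assumptions chosen for illustration. Each stage picks the nearest codebook entry for the current residual, adds it to the running reconstruction, and passes what is left to the next stage.

```python
import numpy as np


def residual_vector_quantize(latents, codebooks):
    """Quantize latents of shape (T, D) with a list of (K, D) codebooks.

    Returns the token indices, shape (num_codebooks, T), and the quantized
    vectors, shape (T, D). Illustrative only; names and shapes are assumed.
    """
    residual = latents.astype(np.float64).copy()
    quantized = np.zeros_like(residual)
    indices = []
    for codebook in codebooks:
        # Squared Euclidean distance from every residual frame to every code: (T, K).
        dists = ((residual[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
        idx = dists.argmin(axis=1)
        chosen = codebook[idx]
        quantized += chosen   # accumulate the reconstruction
        residual -= chosen    # the next stage quantizes what is left over
        indices.append(idx)
    return np.stack(indices, axis=0), quantized


# Hypothetical data: random latents and random (untrained) codebooks.
rng = np.random.default_rng(0)
latents = rng.normal(size=(50, 8))
codebooks = [rng.normal(size=(16, 8)) * (0.5 ** i) for i in range(4)]
tokens, quantized = residual_vector_quantize(latents, codebooks)
```

Here `tokens` holds one row of discrete indices per quantizer stage, and `quantized` is the sum of the selected codebook entries. In a trained codec the codebooks are learned rather than random.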

- We have released code and trained model checkpoints.

More instructions and details will be provided soon.

| Model | Description |
|---|---|
| DM-Codec_checkpoint_LM_SM | Uses the LM- and SM-guided representation distillation approach, uniting acoustic, semantic, and contextual representations in DM-Codec. |
| DM-Codec_checkpoint_LM | Uses the LM-guided representation distillation approach, incorporating acoustic and contextual representations in DM-Codec. |
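
The difference between the two checkpoints can be pictured with a small sketch of a distillation objective. Everything here is an assumption made for illustration and should not be read as the DM-Codec training loss: the cosine-distance form, the equal weighting of the two teachers, the function names, and the premise that teacher representations are already aligned to the same `(T, D)` shape as the quantized vectors.

```python
import numpy as np


def cosine_distillation_loss(student, teacher):
    """Mean cosine distance between two (T, D) arrays (assumed loss form)."""
    s = student / (np.linalg.norm(student, axis=-1, keepdims=True) + 1e-8)
    t = teacher / (np.linalg.norm(teacher, axis=-1, keepdims=True) + 1e-8)
    return float(np.mean(1.0 - np.sum(s * t, axis=-1)))


def combined_distillation_loss(quantized, lm_repr, sm_repr=None):
    """LM-only guidance when sm_repr is None, otherwise LM and SM guidance.

    The equal 0.5 weighting is a hypothetical choice for this sketch.
    """
    loss = cosine_distillation_loss(quantized, lm_repr)
    if sm_repr is not None:
        loss = 0.5 * (loss + cosine_distillation_loss(quantized, sm_repr))
    return loss


# Hypothetical tensors standing in for quantized vectors and teacher outputs.
rng = np.random.default_rng(0)
q = rng.normal(size=(20, 8))
lm = rng.normal(size=(20, 8))
sm = rng.normal(size=(20, 8))
lm_only_loss = combined_distillation_loss(q, lm)
lm_sm_loss = combined_distillation_loss(q, lm, sm)
```

Passing only `lm_repr` mirrors the LM-guided variant, while passing both teachers mirrors the LM- and SM-guided variant.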

Below, we provide reconstructed speech samples from DM-Codec and compare them with the reconstructed speech from EnCodec, SpeechTokenizer, and FACodec. Download the audio files to listen.

| Codec | Reconstructed Sample 1 | Reconstructed Sample 2 |
|---|---|---|
| Original | Download Sample 1 | Download Sample 2 |
| DM-Codec | Download Sample 1 | Download Sample 2 |
| EnCodec | Download Sample 1 | Download Sample 2 |
| SpeechTokenizer | Download Sample 1 | Download Sample 2 |
| FACodec | Download Sample 1 | Download Sample 2 |