This repository contains the code for the paper "SINA: Sharp Implicit Neural Atlases by Joint Optimisation of Representation and Deformation" by Christoph Großbröhmer, Ziad Al-Haj Hemidi, Fenja Falta and Mattias P. Heinrich. The paper has been accepted to the WBIR workshop 2024 held in conjunction with MICCAI 2024 in Marrakech, Morocco.

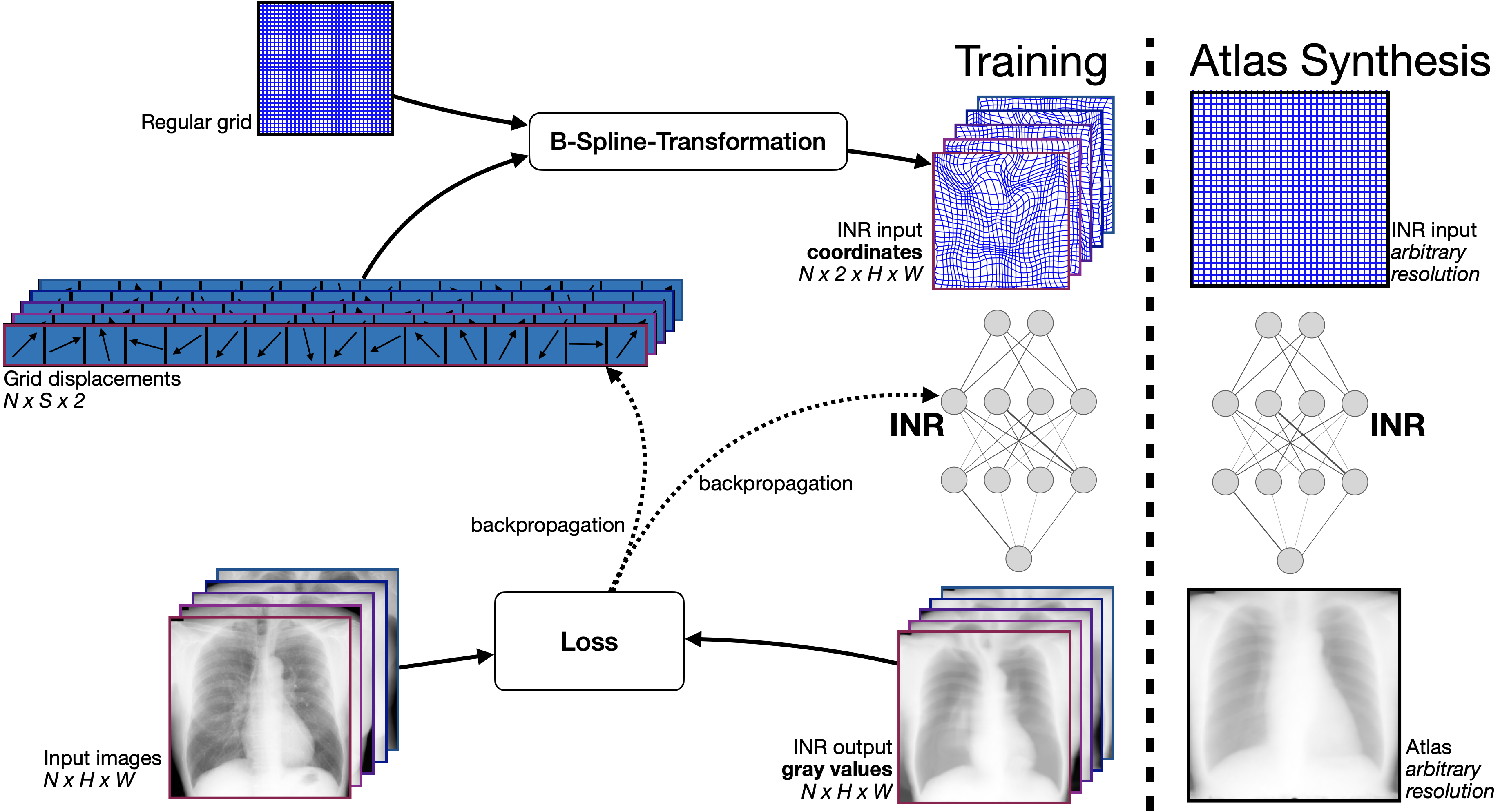

We propose SINA (Sharp Implicit Neural Atlases), a novel framework for medical image atlas synthesis, leveraging the joint optimisation of data representation and registration. By iteratively refining sample-to-atlas registrations and modelling the atlas as a continuous function in an Implicit Neural Representation (INR), we demonstrate the possibility of achieving atlas sharpness while maintaining data fidelity.
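The idea of jointly optimising an implicit atlas and per-sample deformations can be illustrated with a minimal PyTorch sketch. This is not the implementation from this repository: the tiny ReLU network, the dense per-point displacement parameters, the warp direction, the plain MSE loss and the names `AtlasINR` and `joint_step` are all assumptions chosen for brevity, and no regularisation is applied to the deformations.

```python
import torch
import torch.nn as nn


class AtlasINR(nn.Module):
    """Hypothetical coordinate MLP: maps 2D positions in [-1, 1]^2 to an intensity."""

    def __init__(self, hidden=64):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(2, hidden), nn.ReLU(),
            nn.Linear(hidden, hidden), nn.ReLU(),
            nn.Linear(hidden, 1),
        )

    def forward(self, coords):
        # coords: (..., 2) -> intensities: (...)
        return self.net(coords).squeeze(-1)


def joint_step(atlas, displacements, images, coords, optimizer):
    """One gradient step that updates atlas weights and displacements together.

    images:        (N, P) intensities of N samples at P coordinates
    displacements: (N, P, 2) learnable offsets, one field per sample
    coords:        (P, 2) shared sampling grid
    """
    optimizer.zero_grad()
    warped = coords.unsqueeze(0) + displacements  # (N, P, 2)
    prediction = atlas(warped)                    # (N, P)
    loss = torch.mean((prediction - images) ** 2)
    loss.backward()
    optimizer.step()
    return loss.item()


# Toy data: three random 8x8 "images" flattened to 64 points each.
torch.manual_seed(0)
side, n_samples = 8, 3
axis = torch.linspace(-1, 1, side)
coords = torch.stack(torch.meshgrid(axis, axis, indexing="ij"), dim=-1).reshape(-1, 2)
images = torch.rand(n_samples, side * side)

atlas = AtlasINR()
displacements = nn.Parameter(torch.zeros(n_samples, side * side, 2))
optimizer = torch.optim.Adam(list(atlas.parameters()) + [displacements], lr=1e-2)

losses = [joint_step(atlas, displacements, images, coords, optimizer) for _ in range(20)]

# After training, the atlas is obtained by querying the network on the undeformed grid.
atlas_image = atlas(coords).detach().reshape(side, side)
```

Because the atlas is a continuous function of the coordinates, it can be queried on a grid of any resolution once training has finished.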

We provide visualisations for the JSRT and OASIS datasets. The videos, available in the src folder, show how each atlas evolves from the first training step to the final sharp atlas.

This code is implemented in Python 3.10 and depends on the following packages:

- torch

- numpy

Please see the requirements.txt file for the full list of dependencies.
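A quick way to confirm that an environment matches these requirements is a small standard-library check such as the sketch below. The function name `check_environment` is hypothetical, treating Python 3.10 as a minimum version is an assumption, and only the two packages named above are checked; requirements.txt remains the authoritative list.

```python
import importlib.util
import sys

# Only the two packages named in this README; see requirements.txt for the rest.
REQUIRED_PACKAGES = ("torch", "numpy")


def check_environment(packages=REQUIRED_PACKAGES, min_python=(3, 10)):
    """Return a dict mapping each check name to True (satisfied) or False."""
    report = {"python": sys.version_info[:2] >= min_python}
    for name in packages:
        # find_spec returns None when a top-level package cannot be located.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'MISSING'}")
```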

First, create and activate a conda environment by running the following commands:

conda create -n sina python=3.10

conda activate sina

Then, install the required packages using the following command:

pip install -r requirements.txt

The datasets used in this publication can be downloaded from their original sources (JSRT, OASIS, AbdomenCTCT).

For OASIS, we provide an example script that downloads the dataset and converts it into a format that our training code can use. To run it, use the following command:

python prepare_OASIS.py -o .

Upon acceptance of the paper, we will provide the preprocessed JSRT and AbdomenCTCT datasets.

To train SINA, run a command like the following (shown here for the 2D OASIS dataset):

python train_sina2D.py --dataset OASIS_imgs.pth

Note: All other flags are already set to the values used for the results in the paper. We provide pre-trained models for all datasets in the pretrained_models directory.
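Before training or synthesis, it can help to look inside a `.pth` file such as `OASIS_imgs.pth` or one of the pre-trained models. The sketch below makes no claim about what these files actually contain: it only assumes that they hold tensors, possibly nested in dicts, lists or tuples, and the helper names `describe` and `describe_file` are hypothetical. On recent PyTorch versions, `torch.load` may refuse files that contain arbitrary pickled objects unless `weights_only=False` is passed explicitly.

```python
import torch


def describe(obj, name="root", depth=0, max_depth=2):
    """Return summary lines for tensors nested inside dicts, lists or tuples."""
    indent = "  " * depth
    if torch.is_tensor(obj):
        return [f"{indent}{name}: tensor shape={tuple(obj.shape)} dtype={obj.dtype}"]
    lines = [f"{indent}{name}: {type(obj).__name__}"]
    if depth < max_depth:
        if isinstance(obj, dict):
            for key, value in obj.items():
                lines += describe(value, str(key), depth + 1, max_depth)
        elif isinstance(obj, (list, tuple)):
            for index, value in enumerate(obj):
                lines += describe(value, f"[{index}]", depth + 1, max_depth)
    return lines


def describe_file(path):
    """Load a .pth file onto the CPU and summarise its contents."""
    obj = torch.load(path, map_location="cpu")
    return describe(obj)
```

Calling `print("\n".join(describe_file("OASIS_imgs.pth")))` then lists the container types and tensor shapes found in the file.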

To synthesize an atlas, run a command like the following (the same script works for all datasets):

python synthesize.py --trained_model pretrained_models/OASIS.pth

Note that the pretrained 3D model is stored as a Git LFS file and can be downloaded as follows:

git lfs install

git lfs fetch --all

git lfs pull

If you find this work useful, please consider citing our paper:

@InProceedings{10.1007/978-3-031-73480-9_13,

author="Gro{\ss}br{\"o}hmer, Christoph and Hemidi, Ziad Al-Haj and Falta, Fenja and Heinrich, Mattias P.",

title="SINA: Sharp Implicit Neural Atlases by Joint Optimisation of Representation and Deformation",

booktitle="Biomedical Image Registration",

year="2024",

}