Experiments on CLIP's classification behaviour when changing prompts and contextual information

This repo explores how CLIP's classification accuracy changes when the context of the image and the input prompt are varied.
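The sketch below shows roughly how zero-shot classification with CLIP depends on the prompt. Each class label is inserted into a prompt template, the prompts are embedded, and the image is assigned to the class whose prompt embedding has the highest cosine similarity. It assumes a `logit_scale` of 100.0, which is close to the learned temperature of the released CLIP models. `toy_encode_text` is a hypothetical stand-in for CLIP's text encoder that keeps the example self-contained. In practice you would replace it with the real encoder, for example `model.encode_text` from OpenAI's `clip` package or `CLIPModel.get_text_features` from Hugging Face `transformers`. This is an illustration only and does not reproduce the notebook's code.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def build_prompts(template: str, labels: Sequence[str]) -> List[str]:
    """Fill a template such as 'a photo of a {}.' with each class label."""
    return [template.format(label) for label in labels]


def _normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit length so that dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_probs(image_emb: Sequence[float], text_embs: Sequence[Sequence[float]],
                    logit_scale: float = 100.0) -> Vector:
    """Softmax over scaled cosine similarities between one image and each prompt."""
    img = _normalize(image_emb)
    logits = [logit_scale * sum(a * b for a, b in zip(img, _normalize(t))) for t in text_embs]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(image_emb: Sequence[float], labels: Sequence[str], template: str,
            encode_text: Callable[[str], Vector]) -> str:
    """Return the label whose filled-in prompt best matches the image embedding."""
    text_embs = [encode_text(p) for p in build_prompts(template, labels)]
    probs = zero_shot_probs(image_emb, text_embs)
    return labels[max(range(len(labels)), key=probs.__getitem__)]


def accuracy(image_embs: Sequence[Sequence[float]], targets: Sequence[str],
             labels: Sequence[str], template: str,
             encode_text: Callable[[str], Vector]) -> float:
    """Fraction of images classified correctly under one prompt template."""
    correct = sum(predict(e, labels, template, encode_text) == y
                  for e, y in zip(image_embs, targets))
    return correct / len(targets)


def toy_encode_text(prompt: str) -> Vector:
    # Hypothetical stand-in for CLIP's text encoder: a 2-D "embedding" keyed on the label word.
    return [1.0, 0.2] if "cat" in prompt else [0.2, 1.0]


if __name__ == "__main__":
    labels = ["cat", "dog"]
    images = [[0.9, 0.1], [0.1, 0.9]]  # hypothetical image embeddings
    targets = ["cat", "dog"]
    # Compare accuracy across prompt templates, as the experiments in this repo do.
    for template in ["a photo of a {}.", "a blurry drawing of a {}.", "{}"]:
        print(template, accuracy(images, targets, labels, template, toy_encode_text))
```

With the real CLIP encoders, swapping the template or changing the scene around the object can shift the similarities enough to change the predicted label. Measuring that shift is the kind of effect these experiments look at.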

To get started, simply run the cells of the notebook in order.

The notebook can also be used to produce visualizations.

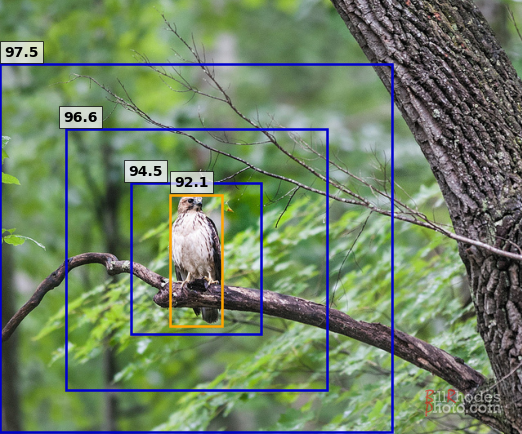

Sample output:

This repo is a modified version of an open-source Colab notebook.