Semantic Segmentation of LiDAR Point Clouds in Tensorflow 2.6

This repository contains implementations of SqueezeSegV2 [1], Darknet52 [2] and Darknet21 [2] for semantic point cloud segmentation in Keras/Tensorflow 2.6. It includes the model architectures as well as training, evaluation and visualisation scripts.

Usage

Installation

All required libraries are listed in the requirements.txt file. You may install them within a virtual environment with:

pip install -r requirements.txt

For visualizations using matplotlib, you will need to install tkinter:

sudo apt-get install python3-tk

Data Format

This repository relies on the data format used in [1]. A dataset has the following file structure:

.
├── ImageSet
    ├── train.txt
    ├── val.txt
    ├── test.txt
├── train
├── val
├── test

The data samples are located in the directories train, val and test. The *.txt files within the directory ImageSet contain the filenames of the corresponding samples in the data directories.
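As an illustration of how such a list file could be produced for your own data, here is a minimal sketch. The helper `write_image_set` is hypothetical and not part of this repository, and it assumes that names are stored one per line without the `.npy` extension; check the files in the sample dataset for the exact convention before relying on it.

```python
import os


def write_image_set(data_dir, image_set_file):
    """Write the names of all *.npy samples in data_dir to image_set_file."""
    names = sorted(
        os.path.splitext(f)[0]  # assumption: names are stored without ".npy"
        for f in os.listdir(data_dir)
        if f.endswith(".npy")
    )
    # Create the target directory (e.g. ImageSet) if it does not exist yet.
    os.makedirs(os.path.dirname(image_set_file) or ".", exist_ok=True)
    with open(image_set_file, "w") as fh:
        fh.write("\n".join(names) + "\n")
    return names
```

For example, `write_image_set("my_dataset/train", "my_dataset/ImageSet/train.txt")` would list every sample of a hypothetical `my_dataset/train` directory.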

A data sample is stored as a numpy *.npy file. Each file contains a tensor of size height x width x 6. The 6 channels correspond to

- X-Coordinate in [m]

- Y-Coordinate in [m]

- Z-Coordinate in [m]

- Intensity (with range [0-255])

- Depth in [m]

- Label ID

For points in the point cloud that are not present (e.g. due to no reflection), the depth will be zero.

A sample dataset can be found in the directory data.
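The channel layout above can be sketched in a few lines of numpy. The function `split_sample` and the 32 x 240 image size are illustrative assumptions, not part of the repository; a real sample would be loaded with `np.load` instead of being built by hand.

```python
import numpy as np


def split_sample(sample):
    """Split a height x width x 6 sample into its named channels."""
    if sample.ndim != 3 or sample.shape[2] != 6:
        raise ValueError("expected a tensor of size height x width x 6")
    xyz = sample[:, :, 0:3]                   # X, Y, Z coordinates in [m]
    intensity = sample[:, :, 3]               # intensity in [0-255]
    depth = sample[:, :, 4]                   # depth in [m]
    label = sample[:, :, 5].astype(np.int32)  # label ID
    mask = depth > 0                          # False where no point is present
    return xyz, intensity, depth, label, mask


# Synthetic sample with a single valid point (image size is hypothetical).
sample = np.zeros((32, 240, 6), dtype=np.float32)
sample[0, 0] = [1.0, 2.0, 2.0, 128.0, 3.0, 1.0]
xyz, intensity, depth, label, mask = split_sample(sample)
```

The `mask` array marks the pixels that carry a real measurement, which is useful when missing points should be excluded from statistics or losses.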

Sample Dataset

This repository provides a sample dataset which can be used as a template for your own dataset. The directory sample_dataset contains a small train and val split with 32 samples and 3 samples, respectively. The samples in the train dataset were automatically annotated by cross-modal label transfer, while the validation set was manually annotated.

Data Normalization

For a proper data normalization it is necessary to iterate over the training set and determine the mean and std values for each of the input fields. The script preprocessing/inspect_training_data.py provides such a computation.

# pclsegmentation/pcl_segmentation
$ python3 preprocessing/inspect_training_data.py \
    --input_dir="../sample_dataset/train/" \
    --output_dir="../sample_dataset/ImageSet"

The glob pattern *.npy is applied to the input_dir path. The script computes and prints the mean and std values for the five input fields. These values should be set in the configuration files in pcl_segmentation/configs as the arrays mc.INPUT_MEAN and mc.INPUT_STD.
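To make the computation concrete, the following is a minimal sketch of how per-channel statistics could be gathered and applied. It is not the code of inspect_training_data.py: the functions `compute_input_stats` and `normalize` are hypothetical, and skipping points with zero depth is an assumption that the actual script may or may not share.

```python
import glob
import os

import numpy as np


def compute_input_stats(input_dir, num_inputs=5):
    """Return per-channel mean and std over all *.npy samples in input_dir."""
    total = np.zeros(num_inputs, dtype=np.float64)
    total_sq = np.zeros(num_inputs, dtype=np.float64)
    count = 0
    for path in sorted(glob.glob(os.path.join(input_dir, "*.npy"))):
        sample = np.load(path).astype(np.float64)  # height x width x 6
        valid = sample[:, :, 4] > 0  # assumption: ignore points without a return
        points = sample[valid][:, :num_inputs]  # x, y, z, intensity, depth
        total += points.sum(axis=0)
        total_sq += (points ** 2).sum(axis=0)
        count += points.shape[0]
    if count == 0:
        raise ValueError("no valid points found in " + input_dir)
    mean = total / count
    # Var[x] = E[x^2] - E[x]^2, clipped at zero against rounding errors.
    std = np.sqrt(np.maximum(total_sq / count - mean ** 2, 0.0))
    return mean, std


def normalize(sample, mean, std):
    """Standardize the five input channels of one sample."""
    return (sample[:, :, :5] - mean) / std
```

The two returned arrays have five entries each, in the channel order of the data format, and correspond to the values that go into mc.INPUT_MEAN and mc.INPUT_STD.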

Training

The training of the segmentation networks can be started with the train.py script. It is possible to choose between three different network architectures: squeezesegv2 [1], darknet21 [2] and darknet52 [2].

The training script uses the dataset splits train and val. The metrics for both splits are continuously computed during training. The Tensorboard callback also uses the val split to visualise the current model prediction.

# pclsegmentation/pcl_segmentation
$ python3 train.py \
    --data_path="../sample_dataset" \
    --train_dir="../output" \
    --epochs=5 \
    --model=squeezesegv2

Evaluation

The script eval.py can be used for the evaluation. Note that the flag --image_set can be set to val or test, depending on which splits are present at the data_path.

# pclsegmentation/pcl_segmentation
$ python3 eval.py \
    --data_path="../sample_dataset" \
    --image_set="val" \
    --eval_dir="../eval" \
    --path_to_model="../output/model" \
    --model=squeezesegv2

Inference

Inference can be performed by loading some data samples and the trained model. The script inference.py includes visualisation methods for the segmented images. The results can be stored by providing --output_dir to the script.

# pclsegmentation/pcl_segmentation
$ python3 inference.py \
    --input_path="../sample_dataset/train/*.npy" \
    --output_dir="../output/prediction" \
    --path_to_model="../output/model" \
    --model=squeezesegv2

Docker

We also provide a Docker environment for training, evaluation and inference. All scripts can be found in the directory docker.

First, build the environment with

# docker
./docker_build.sh

Then you can execute the sample training with

# docker
./docker_train.sh

and evaluate the trained model with

# docker
./docker_eval.sh

For inference on the sample dataset execute:

# docker
./docker_inference.sh

Tensorboard

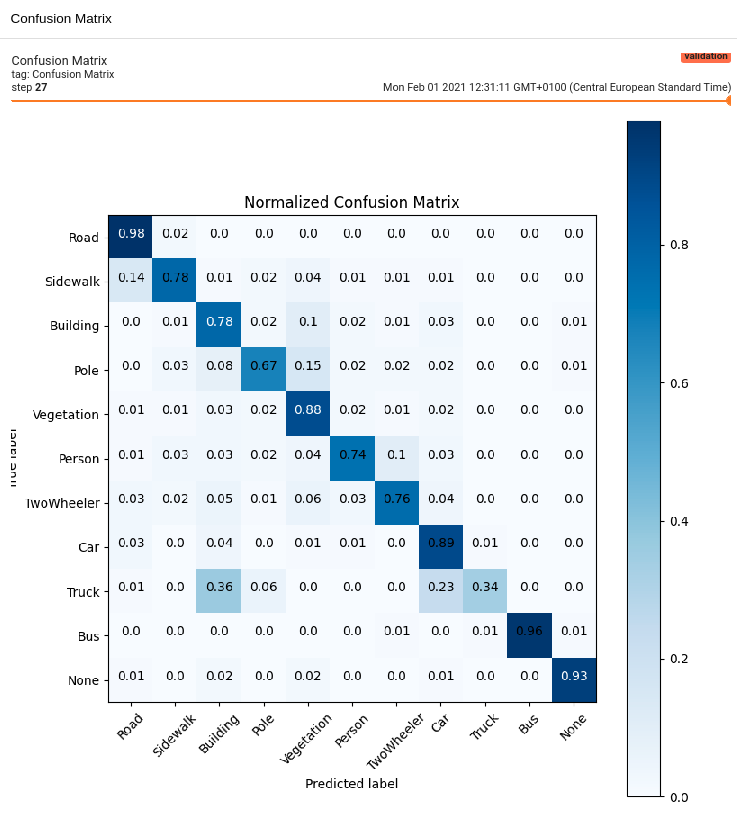

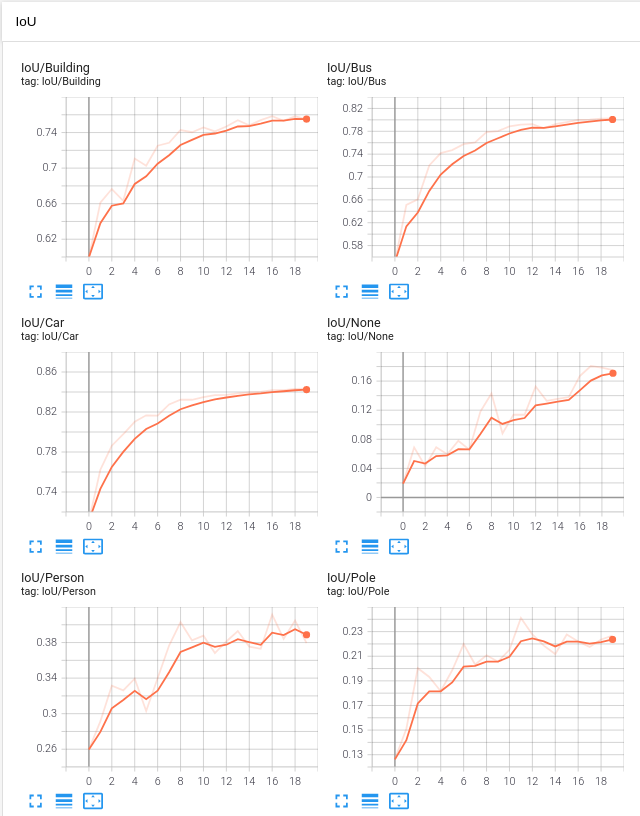

The implementation also contains a Tensorboard callback which visualizes the most important metrics, such as the confusion matrix, IoUs, MIoU, recalls, precisions, learning rates, the different losses and the current model prediction on a data sample. The callbacks are invoked by Keras' model.fit() function.
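For readers unfamiliar with these metrics, the sketch below shows how IoU, precision, recall and MIoU can be derived from a confusion matrix. It is an illustration only, not the callback's code: `metrics_from_confusion` is a hypothetical helper, and it assumes that rows hold the ground truth classes and columns the predicted classes.

```python
import numpy as np


def metrics_from_confusion(conf):
    """Per-class IoU, precision and recall plus mean IoU from a confusion matrix."""
    conf = np.asarray(conf, dtype=np.float64)
    tp = np.diag(conf)            # correctly classified points per class
    fp = conf.sum(axis=0) - tp    # predicted as the class, but labeled otherwise
    fn = conf.sum(axis=1) - tp    # labeled as the class, but predicted otherwise
    with np.errstate(divide="ignore", invalid="ignore"):
        iou = tp / (tp + fp + fn)
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)
    miou = np.nanmean(iou)        # classes that never occur are ignored
    return iou, precision, recall, miou


# Two-class example: rows are ground truth, columns are predictions.
iou, precision, recall, miou = metrics_from_confusion([[5, 1], [2, 4]])
```

In this example class 0 has 5 true positives, 2 false positives and 1 false negative, which gives an IoU of 5 / 8.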

# pclsegmentation

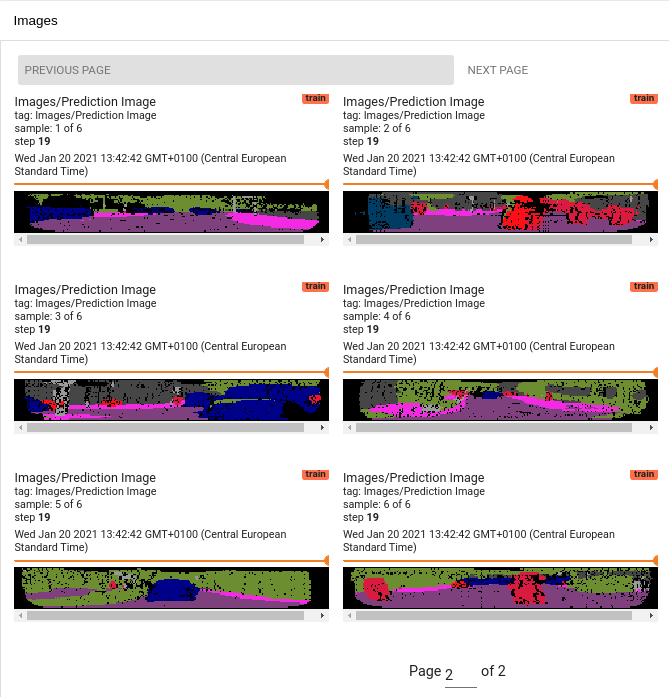

$ tensorboard --logdir ../outputMore Inference Examples

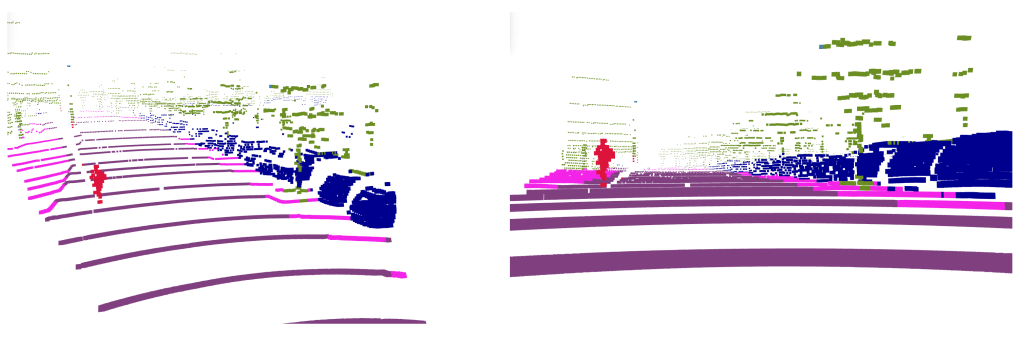

Left image: Prediction - Right Image: Ground Truth

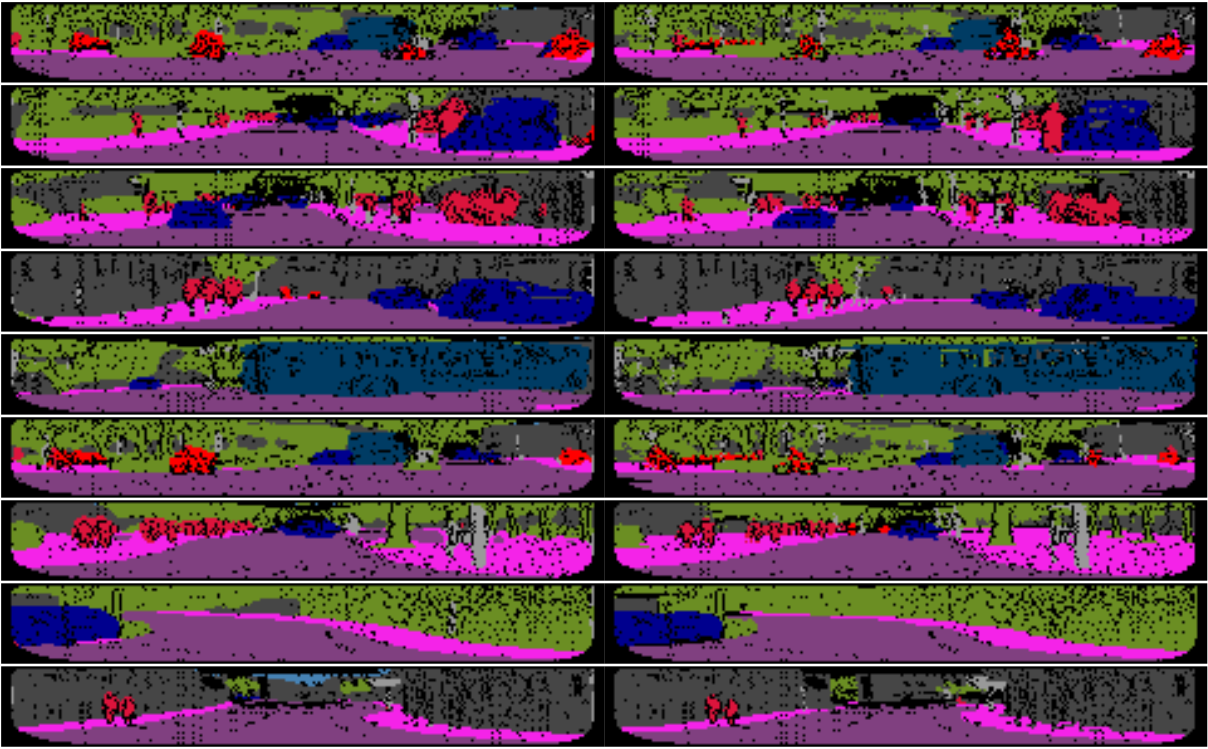

Left image: Prediction - Right Image: Ground Truth

References

The network architectures are based on

- [1] SqueezeSegV2: Improved Model Structure and Unsupervised Domain Adaptation for Road-Object Segmentation from a LiDAR Point Cloud

- [2] RangeNet++: Fast and Accurate LiDAR Semantic Segmentation

TODO

- Faster input pipeline using TFRecords preprocessing

- Docker support

- Implement CRF Postprocessing for SqueezeSegV2

- Implement a Dataloader for the Semantic Kitti dataset

Author of this Repository

Mail: till.beemelmanns (at) ika.rwth-aachen.de

Citation

We hope the provided code can help in your research. If this is the case, please cite:

@misc{Beemelmanns2021,
  author = {Till Beemelmanns},
  title = {Semantic Segmentation of LiDAR Point Clouds in Tensorflow 2.6},
  year = 2021,
  url = {https://github.com/ika-rwth-aachen/PCLSegmentation},
  doi = {10.5281/zenodo.4665751}
}