Synthetic Boost: Leveraging Synthetic Data for Enhanced Vision-Language Segmentation in Echocardiography

by Rabin Adhikari*, Manish Dhakal*, Safal Thapaliya*, Kanchan Poudel, Prasiddha Bhandari, Bishesh Khanal

*Equal contribution

This repository contains the data and source code used to produce the results presented in our paper at the 4th International Workshop on Advances in Simplifying Medical UltraSound (ASMUS).

Paper link: [arXiv] [Springer]

Accurate segmentation is essential for echocardiography-based assessment of cardiovascular diseases. However, the variability among sonographers and the inherent challenges of ultrasound images hinder precise segmentation. By leveraging the joint representation of image and text modalities, Vision-Language Segmentation Models (VLSMs) can incorporate rich contextual information, potentially aiding in accurate and explainable segmentation. However, the lack of readily available data in echocardiography impedes the training of VLSMs. In this study, we explore using synthetic datasets from Semantic Diffusion Models (SDMs) to enhance VLSMs for echocardiography segmentation. We evaluate results for two popular VLSMs (CLIPSeg and CRIS) using seven different kinds of language prompts derived from several attributes, automatically extracted from echocardiography images, segmentation masks, and their metadata. Our results show improved metrics and faster convergence when pretraining VLSMs on SDM-generated synthetic images before finetuning on real images. The code, configs, and prompts are available in this repository.

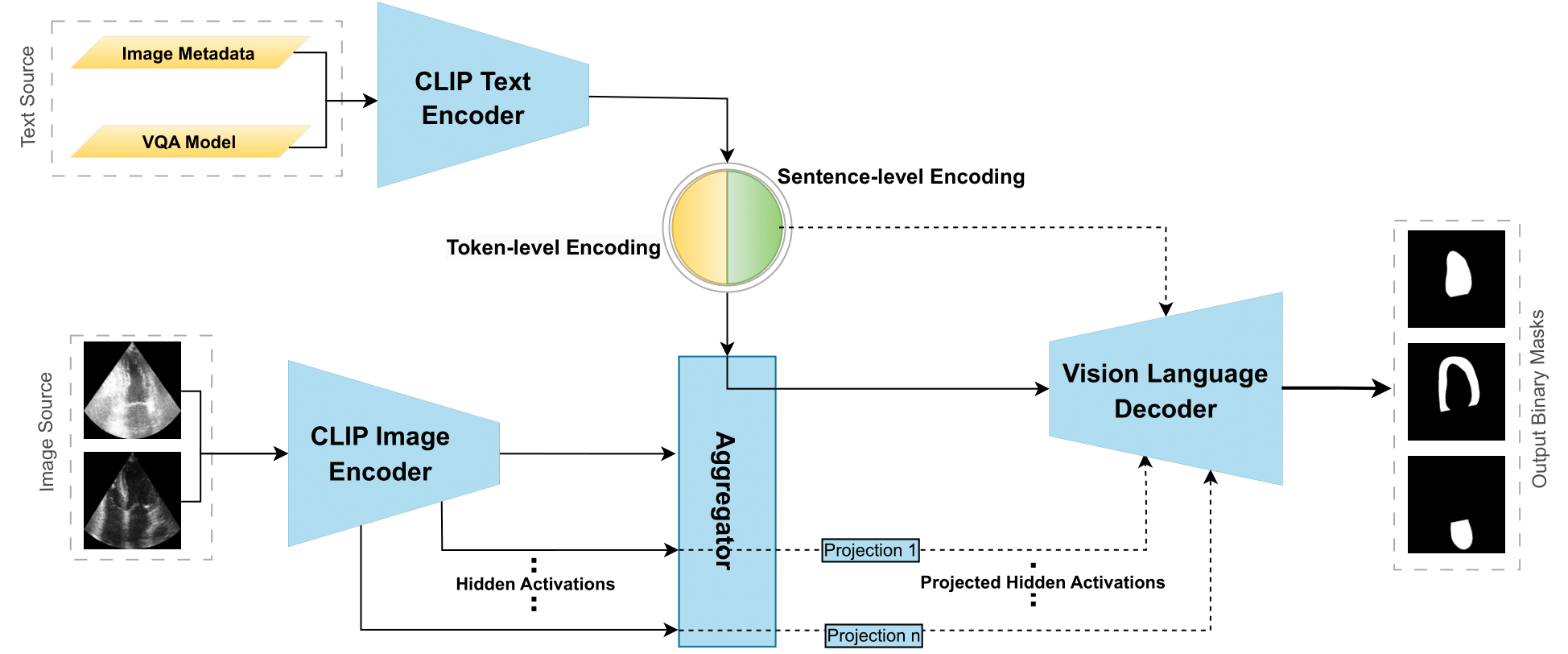

The key components of the architecture are a Text Encoder, an Image Encoder, a Vision-Language Decoder (VLD), and an Aggregator. The images and their corresponding prompts are passed to the CLIP image and text encoders, respectively. The Aggregator combines image-level, sentence-level, or word-level representations into intermediate representations that are fed to the VLD, which outputs a binary mask for each image-text pair.
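To make the data flow concrete, here is a toy, framework-free sketch of one image-text pair passing through the four components. Everything in it is an assumption for illustration: the bag-of-words `text_encoder` stands in for the CLIP text encoder, `pixel_features` stands in for the image encoder's output (with each feature dimension deliberately aligned to one vocabulary word, which real CLIP features are not), and the element-wise-product `aggregate` and sigmoid-threshold `decode` are simple placeholders, not the aggregation or decoding actually used by CLIPSeg or CRIS. Only a sentence-level text representation is used.

```python
import math
from typing import List, Sequence

Vector = List[float]
FeatureMap = List[List[Vector]]  # H x W grid of D-dimensional pixel features


def text_encoder(prompt: str, vocab: Sequence[str]) -> Vector:
    """Hypothetical stand-in for the CLIP text encoder: a sentence-level,
    L2-normalized bag-of-words vector over a fixed vocabulary."""
    tokens = prompt.lower().split()
    counts = [float(tokens.count(word)) for word in vocab]
    norm = math.sqrt(sum(c * c for c in counts)) or 1.0
    return [c / norm for c in counts]


def aggregate(pixel_features: FeatureMap, sentence: Vector) -> FeatureMap:
    """Hypothetical aggregator: condition every pixel feature on the sentence
    embedding by element-wise multiplication."""
    return [[[f * s for f, s in zip(feat, sentence)] for feat in row]
            for row in pixel_features]


def decode(conditioned: FeatureMap, threshold: float = 0.5) -> List[List[int]]:
    """Hypothetical decoder: sum each conditioned feature into a logit, apply a
    sigmoid, and threshold the probability into a binary mask."""
    return [[int(1.0 / (1.0 + math.exp(-sum(feat))) > threshold) for feat in row]
            for row in conditioned]


def segment(pixel_features: FeatureMap, prompt: str,
            vocab: Sequence[str]) -> List[List[int]]:
    """Image features + prompt -> binary mask for one image-text pair."""
    return decode(aggregate(pixel_features, text_encoder(prompt, vocab)))


# pixel_features stands in for the image encoder's output (made-up values).
vocab = ["ventricle", "atrium"]
pixel_features = [[[2.0, -1.0], [-2.0, 1.0]],
                  [[1.5, 0.0], [-0.5, 3.0]]]
print(segment(pixel_features, "left ventricle", vocab))  # [[1, 0], [1, 0]]
print(segment(pixel_features, "left atrium", vocab))     # [[0, 1], [0, 1]]
```

Changing only the prompt changes which pixels are selected from the same image features, which is the basic idea behind conditioning segmentation on language.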

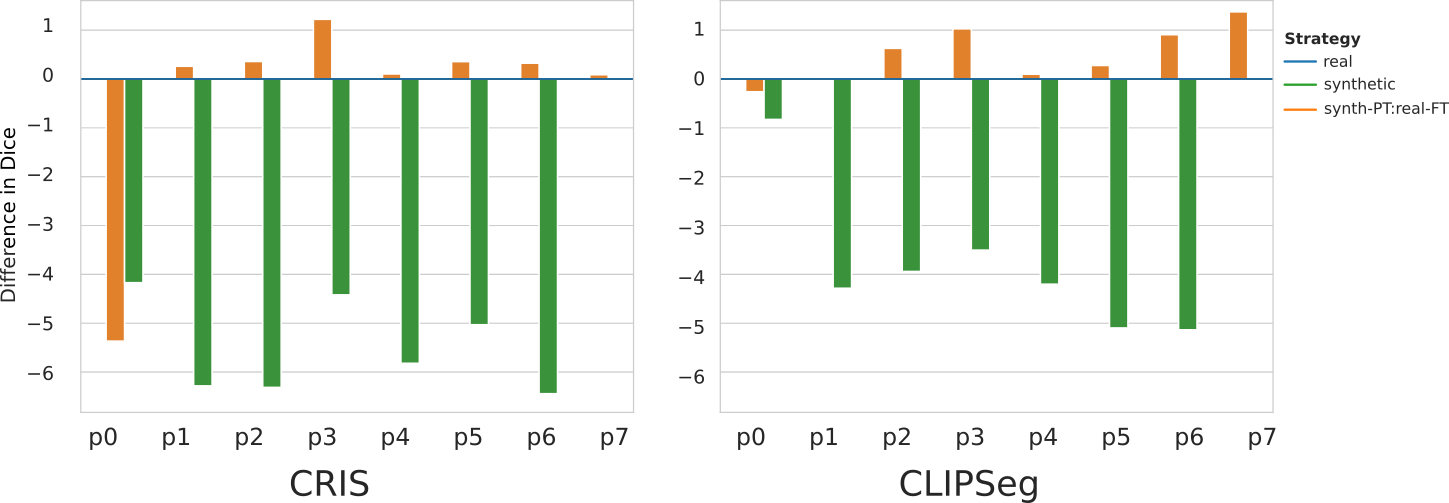

The figure above shows the difference in mean Dice score between training strategies (e.g., training only on real images versus pretraining on synthetic images before finetuning on real ones) for CLIPSeg and CRIS, for each prompt type.
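For reference, the Dice coefficient of a predicted mask P and a ground-truth mask T is 2|P ∩ T| / (|P| + |T|). The sketch below computes per-image Dice, averages it over a set, and takes the difference between two strategies. It is a minimal illustration under assumptions: the small `eps` smoothing term, per-image averaging, and the toy masks are illustrative choices, and the repository's own metric implementation may handle empty masks or averaging differently.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # binary mask: 1 = foreground, 0 = background


def dice_score(pred: Mask, target: Mask, eps: float = 1e-7) -> float:
    """Dice = 2|P ∩ T| / (|P| + |T|); eps keeps two empty masks at a score of 1."""
    intersection = sum(p * t for pr, tr in zip(pred, target) for p, t in zip(pr, tr))
    total = sum(map(sum, pred)) + sum(map(sum, target))
    return (2.0 * intersection + eps) / (total + eps)


def mean_dice(preds: Sequence[Mask], targets: Sequence[Mask]) -> float:
    """Average per-image Dice over an evaluation set."""
    scores = [dice_score(p, t) for p, t in zip(preds, targets)]
    return sum(scores) / len(scores)


# Toy masks (made up); preds_a and preds_b play the role of two training strategies.
targets = [[[1, 1], [0, 0]], [[0, 1], [0, 1]]]
preds_a = [[[1, 1], [0, 0]], [[0, 1], [0, 0]]]
preds_b = [[[1, 0], [0, 0]], [[0, 1], [1, 1]]]

gap = mean_dice(preds_a, targets) - mean_dice(preds_b, targets)
print(f"{gap:.3f}")  # 0.100
```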

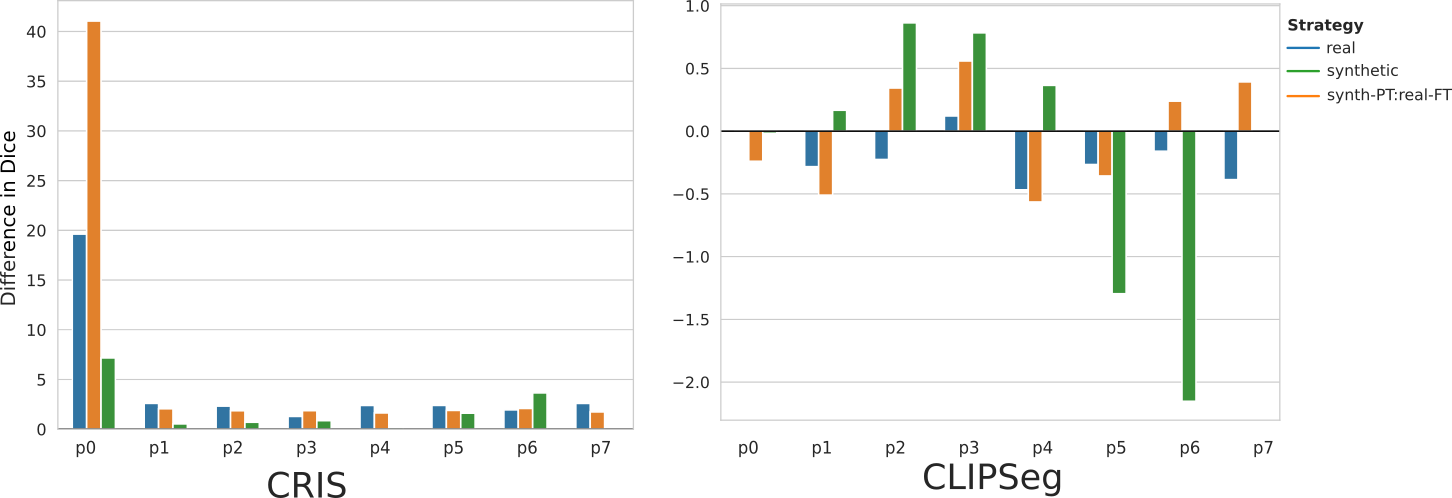

The figure above shows, for each prompt type, the difference in mean Dice score between training with frozen encoders and training the encoders jointly with the decoder. CRIS performs better when its encoders are trained along with the decoder, whereas CLIPSeg performs worse.
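The frozen-versus-trained comparison comes down to which parameters the optimizer updates. Below is a minimal, framework-free sketch under stated assumptions: the parameter groups `image_encoder`, `text_encoder`, and `decoder` are hypothetical names, and a plain SGD update stands in for whatever optimizer the repository actually uses. A frozen group still takes part in the forward pass but is never updated. In PyTorch the same effect is commonly achieved by calling `requires_grad_(False)` on the encoder modules; this sketch does not claim that is how this repository implements it.

```python
from typing import AbstractSet, Dict, List

Params = Dict[str, List[float]]  # parameter group name -> flat list of weights


def sgd_step(params: Params, grads: Params, lr: float,
             frozen: AbstractSet[str] = frozenset()) -> Params:
    """Return updated parameters after one plain SGD step.

    Groups named in `frozen` are copied unchanged, which is what freezing an
    encoder amounts to: it is used in the forward pass but never updated.
    """
    updated: Params = {}
    for name, values in params.items():
        if name in frozen:
            updated[name] = list(values)
        else:
            updated[name] = [v - lr * g for v, g in zip(values, grads[name])]
    return updated


# Hypothetical parameter groups; names and values are illustrative only.
params = {"image_encoder": [1.0], "text_encoder": [1.0], "decoder": [1.0]}
grads = {name: [1.0] for name in params}

frozen_run = sgd_step(params, grads, lr=0.5, frozen={"image_encoder", "text_encoder"})
print(frozen_run)   # {'image_encoder': [1.0], 'text_encoder': [1.0], 'decoder': [0.5]}

trained_run = sgd_step(params, grads, lr=0.5)
print(trained_run)  # {'image_encoder': [0.5], 'text_encoder': [0.5], 'decoder': [0.5]}
```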

For reproducibility, please refer to REPRODUCIBILITY.md.

All Python source code (including .py and .ipynb files) is made available under the MIT license.

You can freely use and modify the code, without warranty, so long as you provide attribution to the authors.

See LICENSE for the full license text.

The manuscript text (including all LaTeX files), figures, and data/models produced as part of this research are available under the Creative Commons Attribution 4.0 License (CC-BY). See LICENSE for the full license text.

Please cite this work as follows:

Adhikari, R., Dhakal, M., Thapaliya, S., Poudel, K., Bhandari, P., & Khanal, B. (2023, October). Synthetic Boost: Leveraging Synthetic Data for Enhanced Vision-Language Segmentation in Echocardiography. In International Workshop on Advances in Simplifying Medical Ultrasound (pp. 89-99). Cham: Springer Nature Switzerland.

@inproceedings{adhikari2023synthetic,
  title={Synthetic Boost: Leveraging Synthetic Data for Enhanced Vision-Language Segmentation in Echocardiography},
  author={Adhikari, Rabin and Dhakal, Manish and Thapaliya, Safal and Poudel, Kanchan and Bhandari, Prasiddha and Khanal, Bishesh},
  booktitle={International Workshop on Advances in Simplifying Medical Ultrasound},
  pages={89--99},
  year={2023},
  organization={Springer}
}