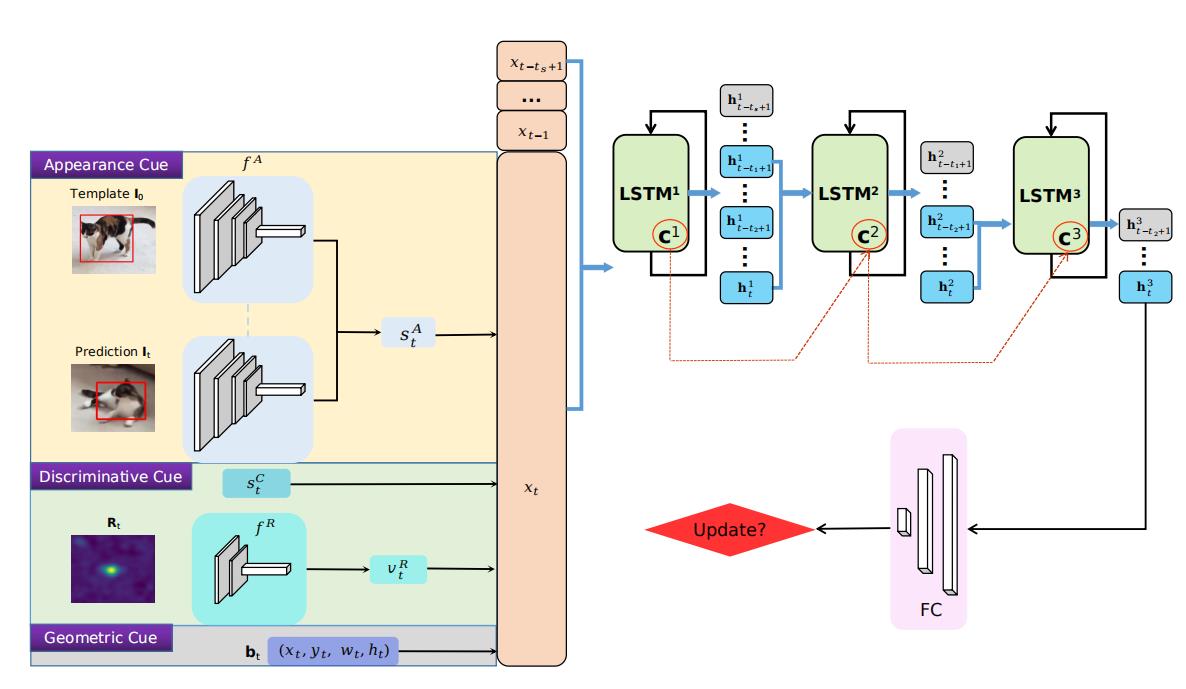

- High-Performance Long-Term Tracking with Meta-Updater (CVPR 2020 Oral & Best Paper Nomination).

Our Meta-Updater can be easily embedded into other online-update algorithms (DiMP, ATOM, ECO, RT-MDNet, ...) to make their online updates more accurate in long-term tracking. More info.
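To make the idea of "embedding" concrete, here is a minimal Python sketch of where an update gate sits in an online-update tracking loop. Everything in it is hypothetical: `ToyBaseTracker`, `run_with_meta_updater`, and `threshold_gate` are illustrative names, not this repository's API. The real Meta-Updater is a trained network, and the fixed score threshold below is only a placeholder for it.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

BBox = Tuple[float, float, float, float]  # (x, y, w, h)


@dataclass
class FrameResult:
    bbox: BBox
    score: float  # base tracker's confidence for this frame


class ToyBaseTracker:
    """Hypothetical stand-in for an online-update tracker such as DiMP or ATOM."""

    def __init__(self) -> None:
        self.num_updates = 0

    def track(self, frame_idx: int) -> FrameResult:
        # A real tracker would run its model on the image; here every third
        # frame (0, 3, 6, ...) is faked as an unreliable, low-score result.
        score = 0.2 if frame_idx % 3 == 0 else 0.9
        return FrameResult(bbox=(10.0, 10.0, 50.0, 50.0), score=score)

    def update(self, result: FrameResult) -> None:
        # A real tracker would refresh its appearance model here.
        self.num_updates += 1


def run_with_meta_updater(
    tracker: ToyBaseTracker,
    num_frames: int,
    should_update: Callable[[List[FrameResult]], bool],
) -> List[FrameResult]:
    """Track every frame, but let `should_update` gate each online update."""
    history: List[FrameResult] = []
    for t in range(num_frames):
        result = tracker.track(t)
        history.append(result)
        # The gate sees the whole result history, so a learned updater could
        # use temporal context rather than a single frame.
        if should_update(history):
            tracker.update(result)
    return history


def threshold_gate(history: List[FrameResult]) -> bool:
    # Placeholder rule: a fixed score threshold, NOT the trained Meta-Updater.
    return history[-1].score > 0.5


tracker = ToyBaseTracker()
history = run_with_meta_updater(tracker, num_frames=10, should_update=threshold_gate)
print(len(history), tracker.num_updates)  # prints "10 6"
```

The base tracker itself is untouched. Only the decision of *when* to call `update` is delegated, which is why such a gate can, in principle, be wrapped around different trackers.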

+MU = Base tracker + Meta-Updater

+LTMU = Base tracker + Meta-Updater + Global Detection + Verifier + Bbox Refine
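The +LTMU line lists five components but not how they interact. The Python sketch below shows one plausible per-frame control flow. Several things in it are assumptions rather than this repository's implementation: the order of the steps, the `verify_threshold` value, and all function names (`ltmu_step`, `local_track`, `verify`, `global_detect`, `refine`, `meta_update_ok`, `update`).

```python
from typing import Callable, List, Optional, Tuple

BBox = Tuple[float, float, float, float]  # (x, y, w, h)


def ltmu_step(
    local_track: Callable[[], BBox],
    verify: Callable[[BBox], float],
    global_detect: Callable[[], Optional[BBox]],
    refine: Callable[[BBox], BBox],
    meta_update_ok: Callable[[BBox, float], bool],
    update: Callable[[BBox], None],
    verify_threshold: float = 0.5,
) -> Tuple[BBox, float]:
    """One frame of a hypothetical LTMU-style loop (component order is assumed)."""
    bbox = local_track()              # base tracker searches near the last position
    conf = verify(bbox)               # verifier scores the local result
    if conf < verify_threshold:       # local result looks unreliable
        candidate = global_detect()   # search the whole frame instead
        if candidate is not None:
            cand_conf = verify(candidate)
            if cand_conf > conf:      # keep whichever box the verifier prefers
                bbox, conf = candidate, cand_conf
    bbox = refine(bbox)               # tighten the chosen box
    if meta_update_ok(bbox, conf):    # meta-updater gates the online update
        update(bbox)
    return bbox, conf


# Demo with stub components: the local box fails verification, so the
# globally re-detected box is adopted and then used for the update.
local_box = (0.0, 0.0, 10.0, 10.0)
global_box = (100.0, 100.0, 12.0, 12.0)
verifier_scores = {local_box: 0.1, global_box: 0.8}
updated: List[BBox] = []

bbox, conf = ltmu_step(
    local_track=lambda: local_box,
    verify=lambda b: verifier_scores.get(b, 0.0),
    global_detect=lambda: global_box,
    refine=lambda b: b,                  # identity refine for the demo
    meta_update_ok=lambda b, c: c > 0.7,
    update=updated.append,
)
print(bbox, conf, len(updated))
```

Passing each component as a callable keeps the sketch independent of any particular detector or verifier model.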

| Tracker | LaSOT (AUC) | VOT2020-LT (F-score) | VOT2018-LT (F-score) | TLP (AUC) | Link |
|---|---|---|---|---|---|
| RT-MDNet | 0.335 | 0.338 | 0.367 | 0.276 | Paper/Code/Results |
| RT-MDNet+MU | 0.354 | 0.396 | 0.407 | 0.337 | Paper/Code/Results |
| ATOM | 0.511 | 0.497 | 0.510 | 0.399 | Paper/Code/Results |
| ATOM+MU | 0.541 | 0.620 | 0.628 | 0.473 | Paper/Code/Results |
| DiMP | 0.568 | 0.573 | 0.587 | 0.514 | Paper/Code/Results |
| DiMP+MU | 0.594 | 0.641 | 0.649 | 0.564 | Paper/Code/Results |
| DiMP+LTMU | 0.602 | 0.691 | - | 0.572 | Paper/Code/Results |
| PrDiMP | 0.612 | 0.632 | 0.631 | 0.535 | Paper/Code/Results |
| PrDiMP+MU | 0.615 | 0.661 | 0.675 | 0.582 | Paper/Code/Results |
| SuperDiMP | 0.646 | 0.647 | 0.667 | 0.552 | Paper/Code/Results |
| SuperDiMP+MU | 0.658 | 0.704 | 0.707 | 0.595 | Paper/Code/Results |
| D3S | 0.494 | 0.437 | 0.445 | 0.390 | Paper/Code/Results |
| D3S+MU | 0.520 | 0.518 | 0.534 | 0.413 | Paper/Code/Results |
| ECO | - | - | - | - | Paper/Code/Results |
| ECO+MU | - | - | - | - | Paper/Code/Results |
| MDNet | - | - | - | - | Paper/Code/Results |
| MDNet+MU | - | - | - | - | Paper/Code/Results |
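To read the table as per-tracker gains, the short Python script below subtracts each base tracker's score from its +MU score. The numbers are copied from the table. Some rows are left out: ECO and MDNet have no results yet, and DiMP+LTMU adds more components than the Meta-Updater alone. The names `RESULTS` and `mu_gains` are illustrative only.

```python
BENCHMARKS = ("LaSOT", "VOT2020 LT", "VOT2018 LT", "TLP")

# (base tracker scores, +MU scores) per benchmark, copied from the table above.
RESULTS = {
    "RT-MDNet": ((0.335, 0.338, 0.367, 0.276), (0.354, 0.396, 0.407, 0.337)),
    "ATOM": ((0.511, 0.497, 0.510, 0.399), (0.541, 0.620, 0.628, 0.473)),
    "DiMP": ((0.568, 0.573, 0.587, 0.514), (0.594, 0.641, 0.649, 0.564)),
    "PrDiMP": ((0.612, 0.632, 0.631, 0.535), (0.615, 0.661, 0.675, 0.582)),
    "SuperDiMP": ((0.646, 0.647, 0.667, 0.552), (0.658, 0.704, 0.707, 0.595)),
    "D3S": ((0.494, 0.437, 0.445, 0.390), (0.520, 0.518, 0.534, 0.413)),
}


def mu_gains(results):
    """Absolute gain (+MU minus base) per tracker and benchmark, rounded to 3 decimals."""
    gains = {}
    for tracker, (base, with_mu) in results.items():
        gains[tracker] = {
            bench: round(mu - b, 3)
            for bench, b, mu in zip(BENCHMARKS, base, with_mu)
        }
    return gains


gains = mu_gains(RESULTS)
for tracker, row in gains.items():
    print(tracker, row)
```

Computed this way, every listed tracker improves on all four benchmarks. The largest single gain is ATOM on VOT2020-LT (+0.123 F-score).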

- Google Drive

- Baidu Yun (extraction code: kexg)

Please cite the above publication if you use the code or compare with the LTMU tracker in your work. BibTeX entry:

@inproceedings{Dai_2020_CVPR,
author = {Kenan Dai and Yunhua Zhang and Dong Wang and Jianhua Li and Huchuan Lu and Xiaoyun Yang},
title = {{High-Performance Long-Term Tracking with Meta-Updater}},
booktitle = {CVPR},
year = {2020}
}