This repository contains the data, code, and model checkpoints for our paper "Probing Simile Knowledge from Pre-trained Language Models".

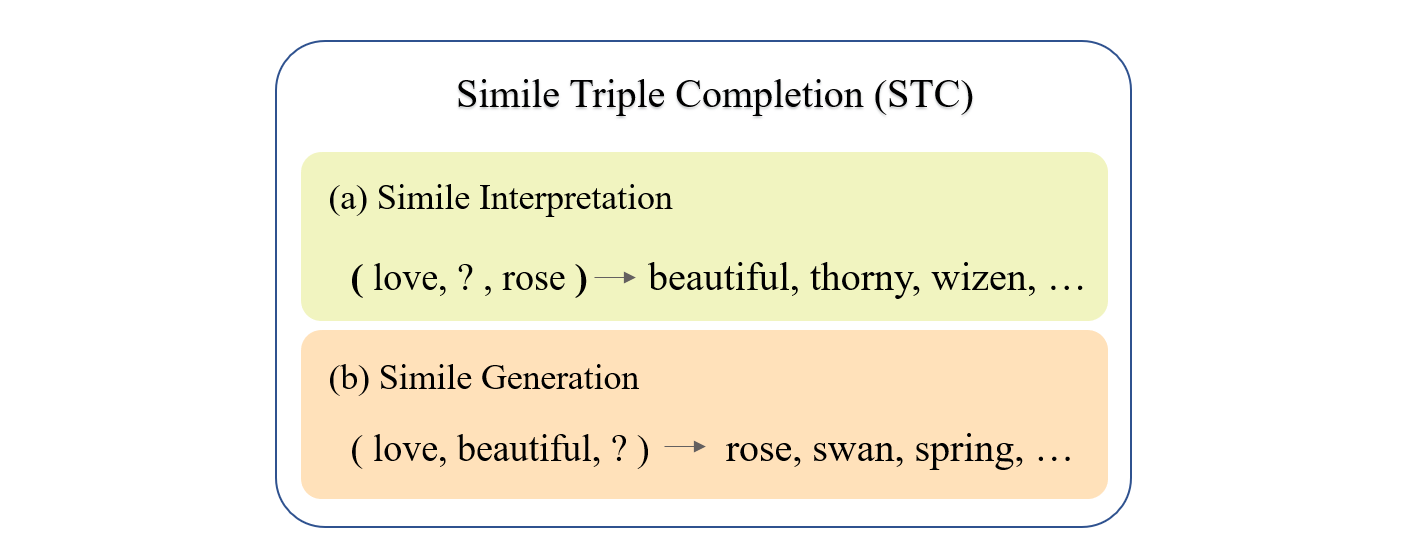

The simile interpretation (SI) task and the simile generation (SG) task can be unified into the form of Simile Triple Completion (STC) as shown in the following figure.

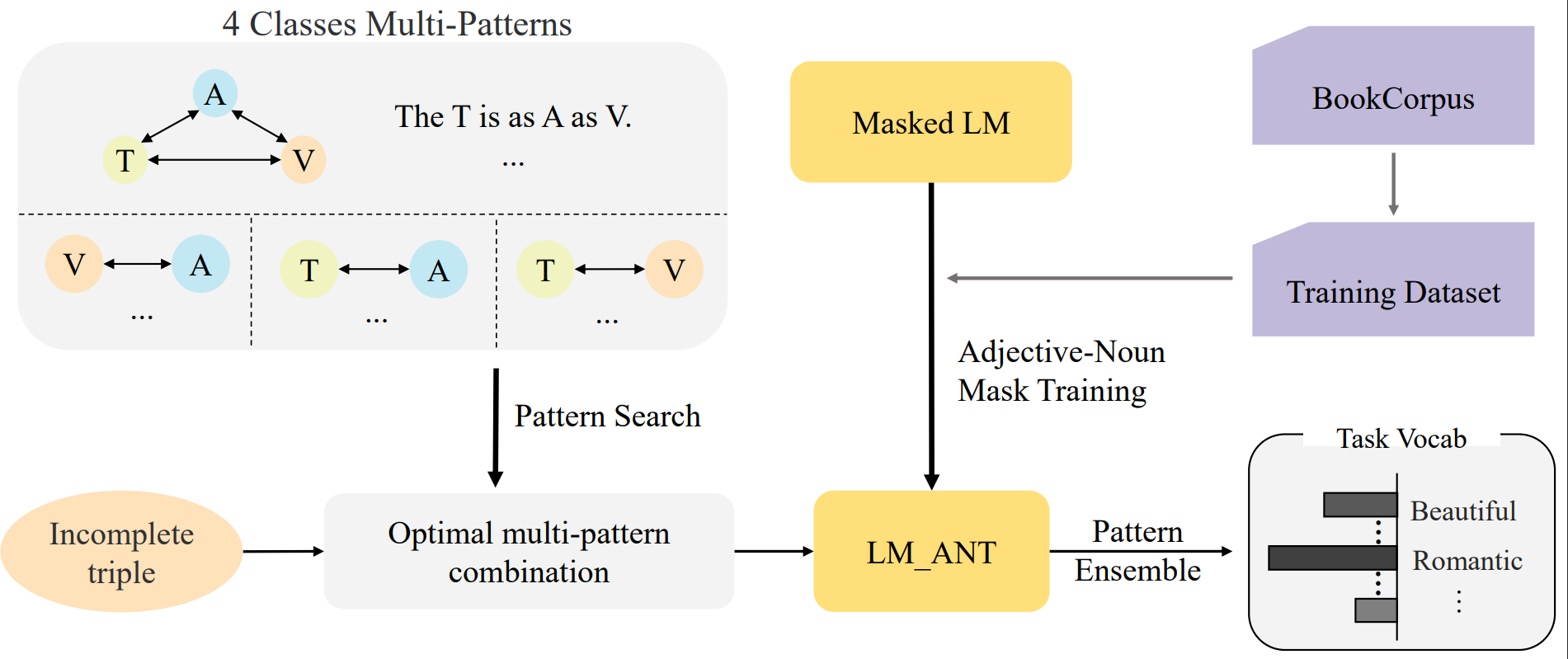
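As a rough illustration of the unified form, the sketch below represents a simile as a triple with one missing slot and turns it into a masked prompt. The field names (`topic`, `prop`, `vehicle`), the helper names, and the "as ... as" template are assumptions made for this example, not the repository's actual code or prompt patterns.

```python
from dataclasses import dataclass
from typing import Optional

MASK = "[MASK]"  # BERT-style mask token


@dataclass
class SimileTriple:
    """Hypothetical container: exactly one of prop / vehicle is left empty."""
    topic: str
    prop: Optional[str]     # None -> the property is to be predicted (SI)
    vehicle: Optional[str]  # None -> the vehicle is to be predicted (SG)


def task_of(triple: SimileTriple) -> str:
    """Return "SI" or "SG" depending on which slot is missing."""
    if triple.prop is None and triple.vehicle is not None:
        return "SI"
    if triple.vehicle is None and triple.prop is not None:
        return "SG"
    raise ValueError("exactly one of prop / vehicle must be None")


def to_prompt(triple: SimileTriple, mask: str = MASK) -> str:
    """Fill an illustrative template, putting the mask token in the empty slot."""
    task_of(triple)  # validates that exactly one slot is missing
    prop = triple.prop if triple.prop is not None else mask
    vehicle = triple.vehicle if triple.vehicle is not None else mask
    return f"The {triple.topic} is as {prop} as {vehicle}."
```

With this representation, both tasks reduce to predicting the masked slot of the same triple, which is what allows a single masked language model to serve SI and SG.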

In this paper, we probe simile knowledge from pre-trained language models (PLMs) to solve the simile interpretation (SI) and simile generation (SG) tasks in the unified framework of simile triple completion for the first time. The framework of our method is shown in the following figure.

We apply a secondary pre-training stage (the adjective-noun mask training) to improve the diversity of predicted words.

First, we use trankit to construct the training set by selecting sentences from BookCorpus that contain amod (adjectival modifier) dependencies. The extracted sentences are uploaded to Google Drive.
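The selection step could look roughly like the sketch below. It assumes the parsed output follows trankit's dictionary layout (a `sentences` list whose `tokens` carry `id`, `text`, `head`, and `deprel`); the helper names are hypothetical, and this is not the script actually used to build the released data.

```python
from typing import Dict, List, Tuple


def amod_pairs(sentence: Dict) -> List[Tuple[str, str]]:
    """Return (modifier, head) word pairs linked by an amod dependency."""
    tokens = sentence["tokens"]
    by_id = {tok["id"]: tok for tok in tokens}
    pairs = []
    for tok in tokens:
        if tok.get("deprel") == "amod":
            head = by_id.get(tok.get("head"))  # head id 0 (root) has no token
            if head is not None:
                pairs.append((tok["text"], head["text"]))
    return pairs


def sentences_with_amod(parsed: Dict) -> List[str]:
    """Keep only the sentences that contain at least one amod dependency."""
    return [s["text"] for s in parsed["sentences"] if amod_pairs(s)]


def parse_with_trankit(text: str) -> Dict:
    """Parse raw text with trankit (downloads the English models on first use)."""
    from trankit import Pipeline  # imported lazily; requires `pip install trankit`
    nlp = Pipeline("english")
    return nlp(text)
```

For example, `sentences_with_amod(parse_with_trankit("She wore a red dress. He ran."))` would be expected to keep only the first sentence, provided the parser labels "red" as an amod of "dress".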

Second, instead of masking tokens at random, we mask a word at the end of an amod relation, and each word is masked no more than 5 times. The constructed dataset is uploaded to Google Drive.
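A minimal sketch of the capped masking step is given below. It assumes each candidate is already a tokenized sentence paired with the index of the amod word to mask, and that the cap is counted on lowercased word forms; the function name and input format are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Sequence, Tuple


def build_masked_examples(
    candidates: Iterable[Tuple[Sequence[str], int]],
    max_per_word: int = 5,
    mask: str = "[MASK]",
) -> List[Tuple[str, str]]:
    """Mask the target word of each candidate, at most `max_per_word` times per word.

    Each candidate is (tokens, target_index). Returns (masked_sentence, label) pairs.
    """
    counts: Counter = Counter()
    examples = []
    for tokens, idx in candidates:
        word = tokens[idx].lower()  # assumption: the cap is case-insensitive
        if counts[word] >= max_per_word:
            continue  # this word has already been masked often enough
        counts[word] += 1
        masked = list(tokens)
        masked[idx] = mask
        examples.append((" ".join(masked), tokens[idx]))
    return examples
```

Capping the number of times each word is masked keeps very frequent adjectives and nouns from dominating the training set, which is in line with the stated goal of improving the diversity of predicted words.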

Finally, the PLM is fine-tuned on the constructed dataset with the masked language modeling (MLM) loss. You can load our fine-tuned model nairoj/Bert_ANT with HuggingFace's Transformers.
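Loading the checkpoint might look like the sketch below, which uses the Transformers `fill-mask` pipeline. It assumes the `nairoj/Bert_ANT` repository on the Hub ships both model weights and tokenizer files; the helper names and the example prompt are illustrative only.

```python
from typing import Callable, List, Tuple

MODEL_NAME = "nairoj/Bert_ANT"  # model id given in this README


def load_fill_mask(model_name: str = MODEL_NAME):
    """Create a fill-mask pipeline (downloads the checkpoint on first use)."""
    from transformers import pipeline  # requires `pip install transformers`
    return pipeline("fill-mask", model=model_name)


def top_predictions(fill_mask: Callable, prompt: str, k: int = 5) -> List[Tuple[str, float]]:
    """Return the k most likely fillers for the single mask token in `prompt`."""
    return [(p["token_str"].strip(), p["score"]) for p in fill_mask(prompt, top_k=k)]
```

A typical call would be `top_predictions(load_fill_mask(), "The room is as [MASK] as an oven.")`, which returns candidate words with their probabilities.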

Our methods for SI and SG are shown in this Colab Script.