Atchuth Naveen Chilaparasetti

docker-compose up

Setup MiniKube

minikube addons enable metrics-server

minikube start --extra-config kubelet.EnableCustomMetrics=true

Deploy Kubernetes containers

kubectl apply -f k8s

To start the service:

minikube service web-load-balancer-service

For Frontend Test,

Server Url : http://127.0.0.1:8080/home

Test Url : https://tensorflow.org/images/blogs/serving/cat.jpg

Set up Locust for Stress Testing

From the directory containing locustfile.py, run

locust

Testing UI on http://127.0.0.1:8089/

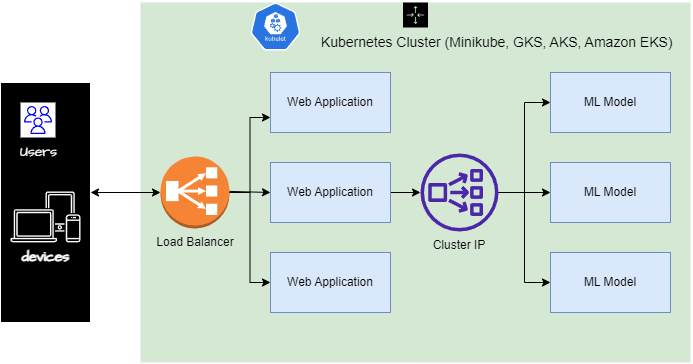

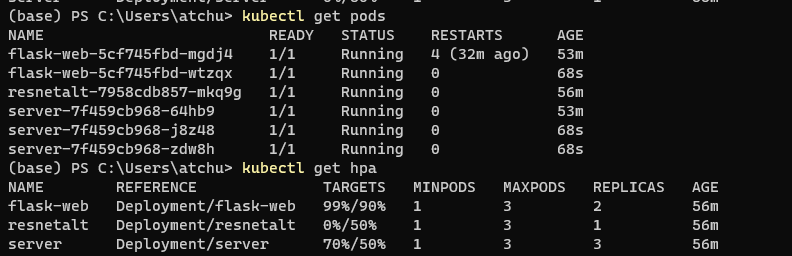

The tests used two model servers that perform the image computation and a common web server that routes requests from the front end. Requests were directed to only one of the model servers, whose maximum CPU utilization was limited to 50%, whereas the web server container was allowed a maximum CPU utilization of 90%. As a result, under increasing load Kubernetes prefers to scale out more instances of the model server.
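To illustrate why the lower limit makes the model server scale first, here is a minimal sketch of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler. It assumes the 50% and 90% figures above are configured as autoscaler CPU utilization targets; the function name `desired_replicas`, the replica bounds, and the sample load values are hypothetical and not taken from this project's manifests.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Approximate the Horizontal Pod Autoscaler replica calculation.

    desired = ceil(current_replicas * current_utilization / target_utilization),
    skipped when the ratio is within the tolerance band around 1.0.
    """
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    ratio = current_utilization / target_utilization
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (hypothetical defaults here).
    return max(min_replicas, min(max_replicas, desired))


# Same observed CPU utilization (80%), different assumed targets.
model_server = desired_replicas(1, 80, 50)  # 50% target: scales out
web_server = desired_replicas(1, 80, 90)    # 90% target: stays at one replica
idle_model = desired_replicas(3, 5, 50)     # nearly idle: shrinks to the minimum
print(model_server, web_server, idle_model)
```

With these sample values the model server is scaled to two replicas while the web server stays at one, and an almost idle deployment falls back to the minimum. The sketch leaves out the autoscaler's stabilization delay before scaling down, so real downscaling happens later than this calculation alone suggests.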

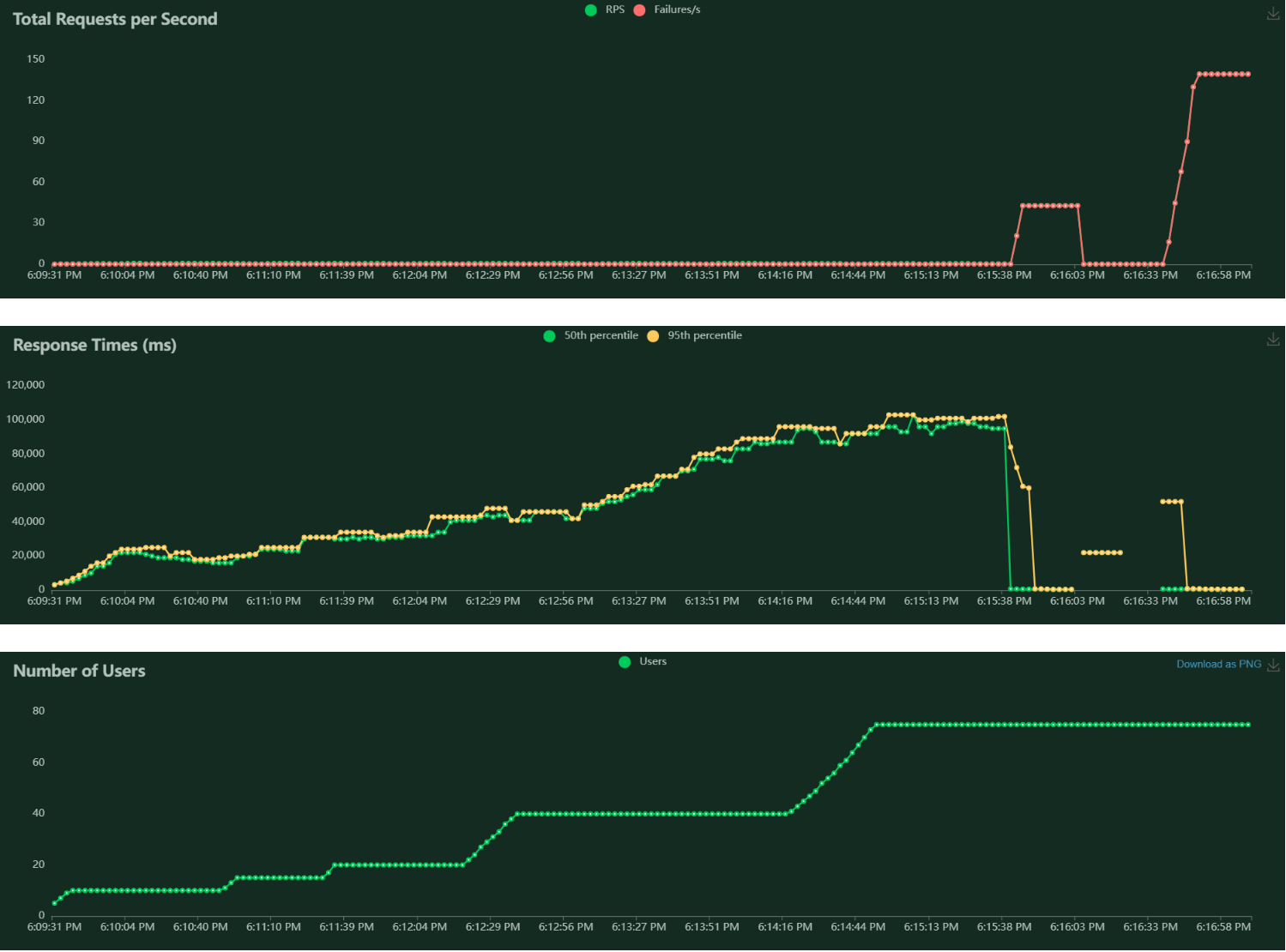

- Response rate - The response rate increases steadily until, at 75 users, the system can no longer support the load.

- Resource Usage - At 75 users, the system crashes repeatedly and the web server goes down, but Kubernetes restarts the container as soon as it detects a failure.

- Response rate

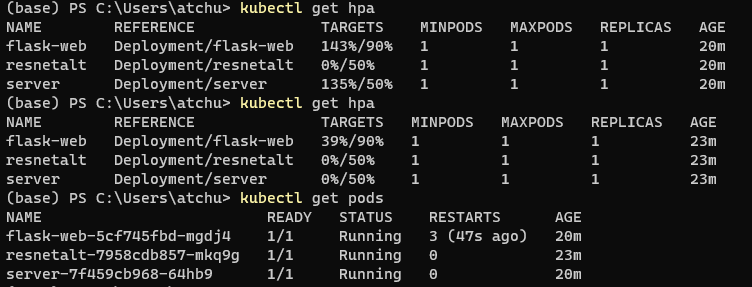

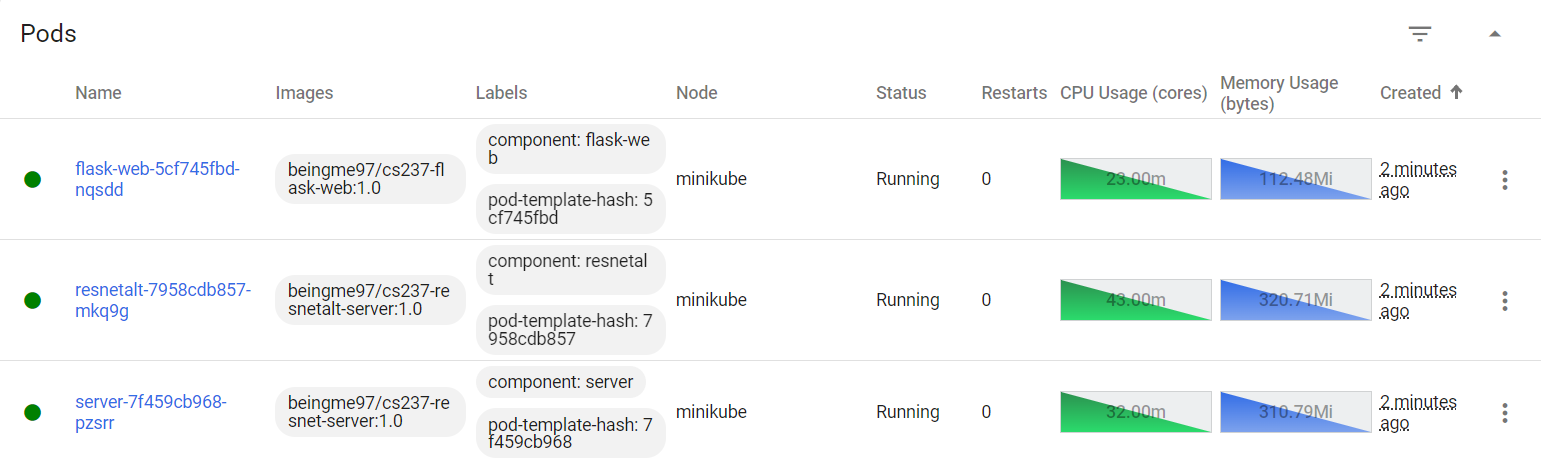

- Resource Usage at the start

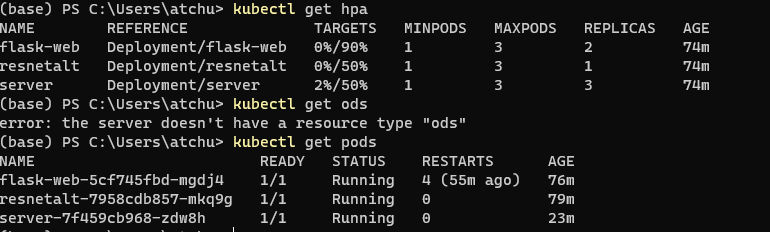

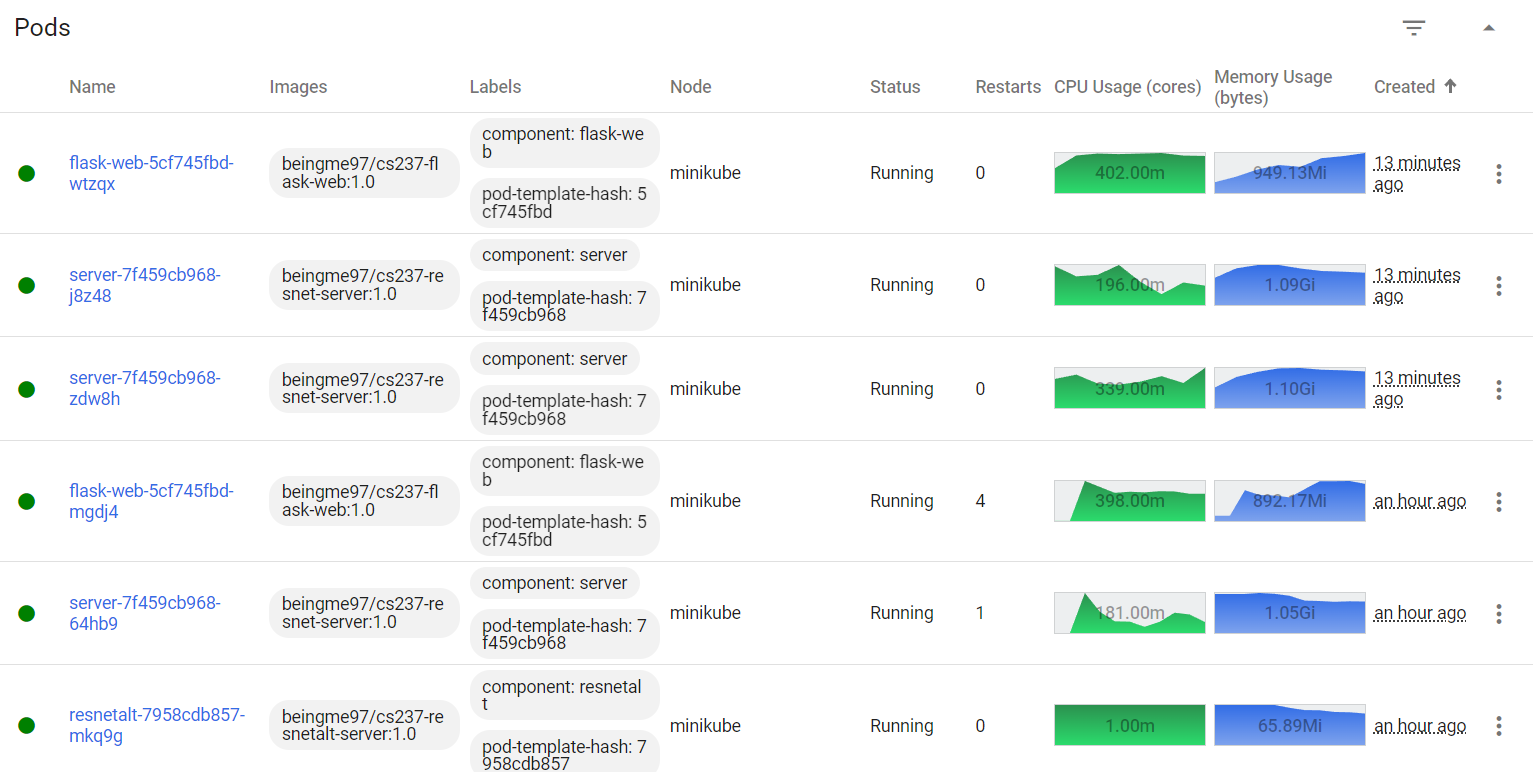

- Resource Usage at the time of scaling - Kubernetes automatically spawns two more instances of model server 1, which is in use, whereas the other model server stays idle. Because the web server is allowed resource usage of up to 90%, Kubernetes balanced the resources between the model and web servers accordingly.

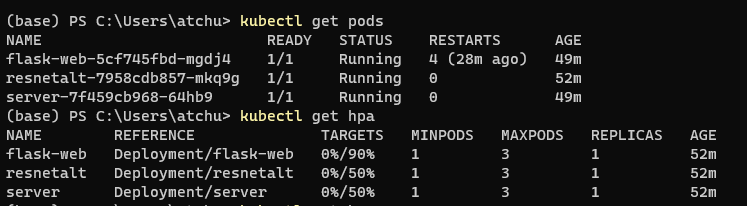

- Resource Usage during downscaling - When no requests are being sent, Kubernetes automatically scales the deployment back down.

- Resource Usage from minikube dashboard

minikube dashboard

- Resource usage at full user load

Because all the tests were performed locally, resource constraints prevented testing at full scale. We therefore migrated the code to Google Cloud and performed similar tests, whose results are also presented. For the commands to set up gcloud Kubernetes Engine and for the test results, refer to commands, report.