
This is a simplified project that deploys the YOLOv8 model for object detection on Windows using TensorRT.

Multi-batch inference is supported!

- Install CUDA following the CUDA official website.

  🚀 RECOMMENDED: CUDA >= 11.3

- Install TensorRT following the TensorRT official website.

  🚀 RECOMMENDED: TensorRT >= 8.2

- Install the Python requirements: `pip install -r requirement.txt`

- Install the ultralytics package for ONNX export or TensorRT API building: `pip install ultralytics`

- Prepare your own PyTorch weights, such as yolov8n.pt.

1. Prepare a trained model (*.pt) or pull one from ultralytics directly.

You can export your ONNX model with the ultralytics API and, at the same time, add postprocessing such as the bbox decoder and NMS to the ONNX model.

    python3 export-det.py \
    --weights yolov8n.pt \
    --iou-thres 0.65 \
    --conf-thres 0.25 \
    --topk 100 \
    --opset 11 \
    --sim \
    --input-shape 4 3 640 640 \
    --device cuda:0

- `--weights`: The PyTorch model you trained.
- `--iou-thres`: IoU threshold for the NMS plugin.
- `--conf-thres`: Confidence threshold for the NMS plugin.
- `--topk`: Maximum number of detection bboxes.
- `--opset`: ONNX opset version; the default is 11.
- `--sim`: Whether to simplify your ONNX model.
- `--input-shape`: Input shape for your model; it must have 4 dimensions.
- `--device`: The CUDA device used for the export.
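The `--iou-thres`, `--conf-thres`, and `--topk` flags configure the NMS step that the export adds to the ONNX model. As a rough illustration of what these three parameters mean, here is a minimal, class-agnostic NMS sketch in plain Python. It is not the plugin that export-det.py uses; the names `iou` and `nms` and the `(x1, y1, x2, y2)` box layout are assumptions made for this example only.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(
    boxes: Sequence[Box],
    scores: Sequence[float],
    iou_thres: float = 0.65,   # mirrors --iou-thres
    conf_thres: float = 0.25,  # mirrors --conf-thres
    topk: int = 100,           # mirrors --topk
) -> List[int]:
    """Return the indices of the boxes that survive NMS (hypothetical helper)."""
    # 1) Drop boxes below the confidence threshold, then sort by score, best first.
    order = sorted(
        (i for i, s in enumerate(scores) if s >= conf_thres),
        key=lambda i: scores[i],
        reverse=True,
    )
    keep: List[int] = []
    for i in order:
        # 3) Stop once the maximum number of detections is reached.
        if len(keep) >= topk:
            break
        # 2) Keep a box only if it does not overlap a kept box too strongly.
        if all(iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60), (0, 0, 10, 10)]
scores = [0.9, 0.8, 0.7, 0.1]
print(nms(boxes, scores))
```

In this sketch, raising `iou_thres` keeps more overlapping boxes, raising `conf_thres` discards more low-score boxes, and `topk` caps the number of detections returned per image.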

Build the TensorRT engine from the exported ONNX model with trtexec:

    trtexec --onnx=yolov8n.onnx --saveEngine=yolov8n.bin --workspace=3000 --verbose --fp16

You can run inference on images with the engine using infer-det.py.

Usage:

    python3 infer-det.py \
    --engine yolov8n.bin \
    --imgs data \
    --show \
    --out-dir outputs \
    --device cuda:0

- `--engine`: The engine you exported.
- `--imgs`: The path to the images you want to run detection on.
- `--show`: Whether to show the detection results.
- `--out-dir`: Where to save the detection result images. It has no effect when the `--show` flag is used.
- `--device`: The CUDA device to use.
- `--profile`: Profile the TensorRT engine.

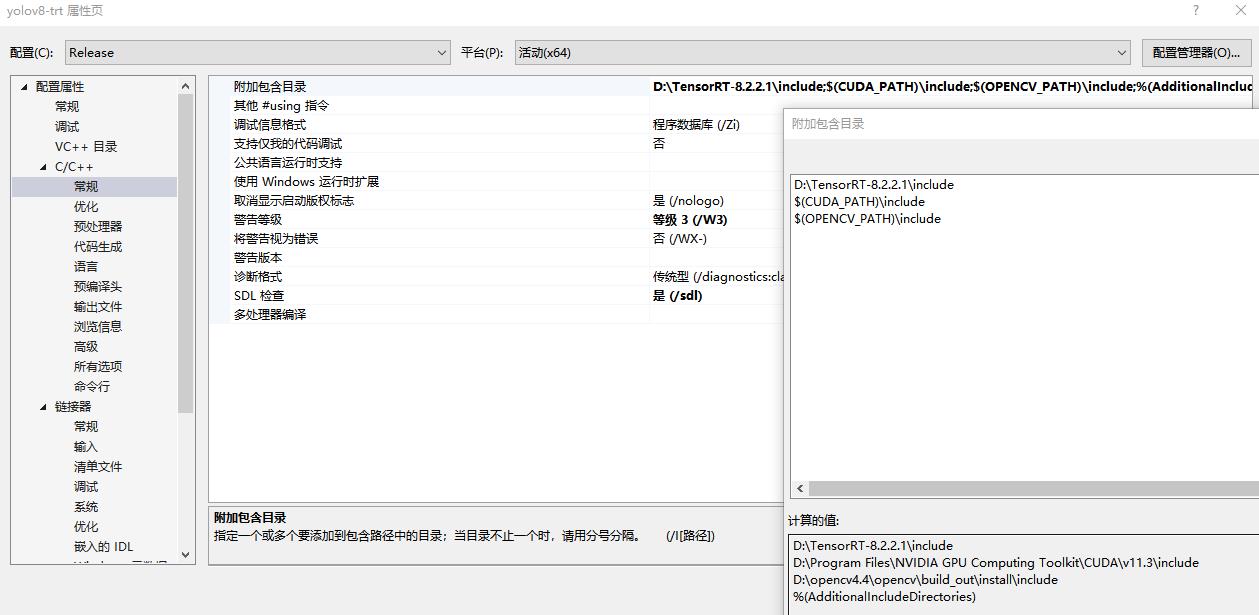

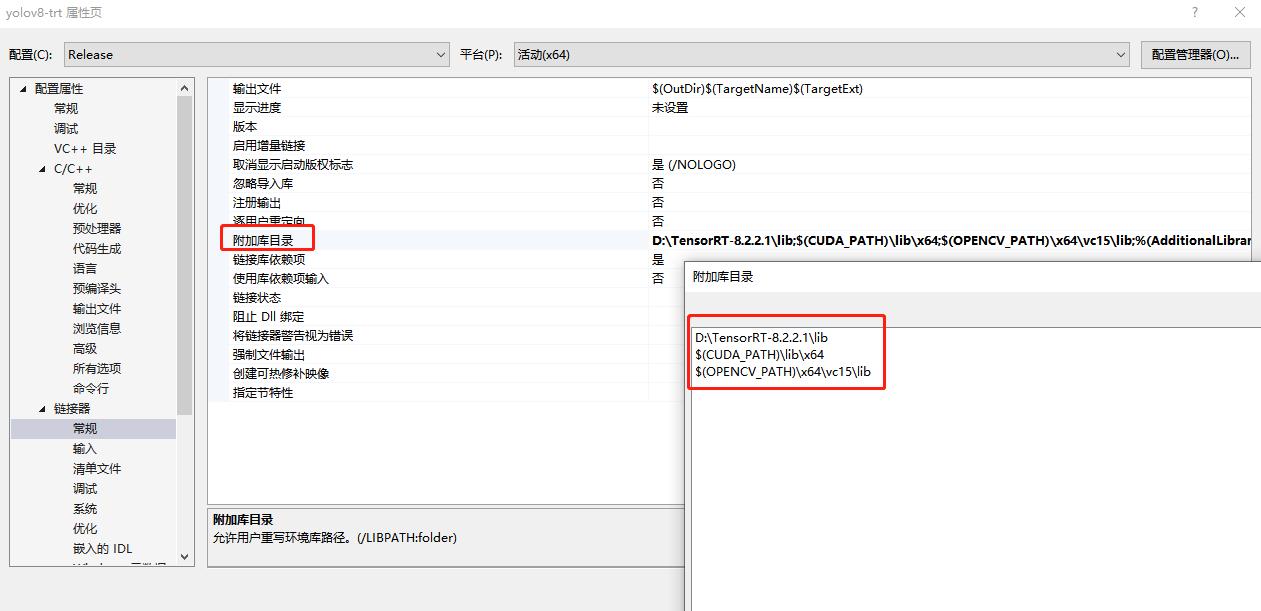

You can run inference with C++ in inference/yolov8-trt.

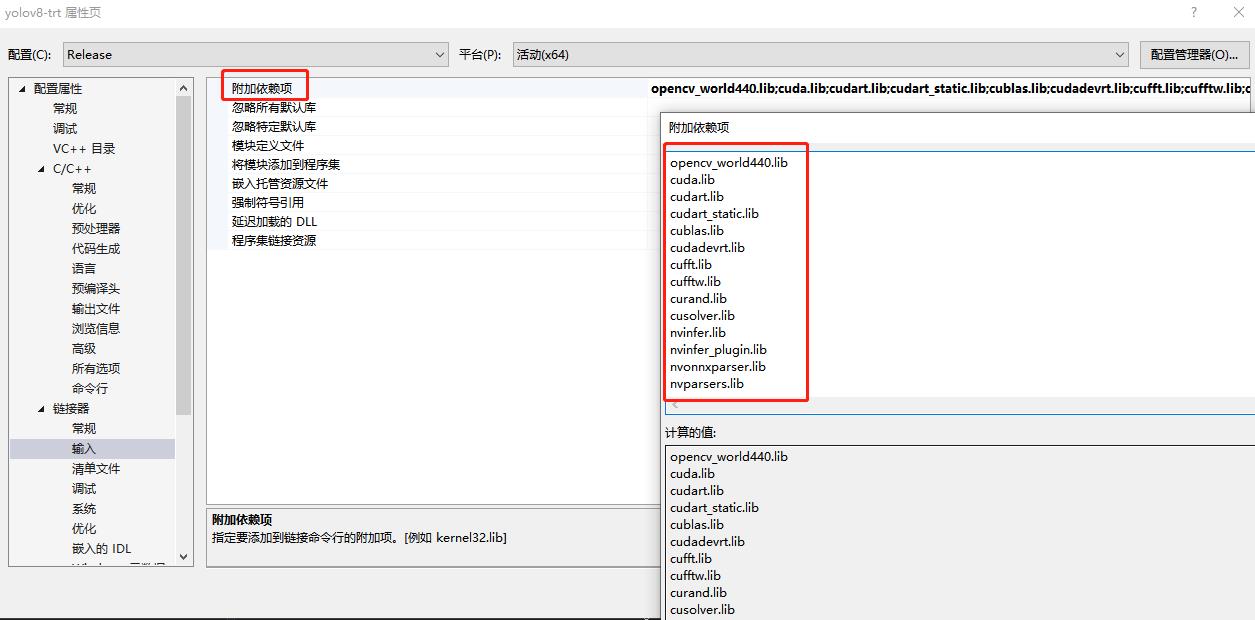

    opencv_world440.lib
    cuda.lib
    cudart.lib
    cudart_static.lib
    cublas.lib
    cudadevrt.lib
    cufft.lib
    cufftw.lib
    curand.lib
    cusolver.lib
    nvinfer.lib
    nvinfer_plugin.lib
    nvonnxparser.lib
    nvparsers.lib

Usage:

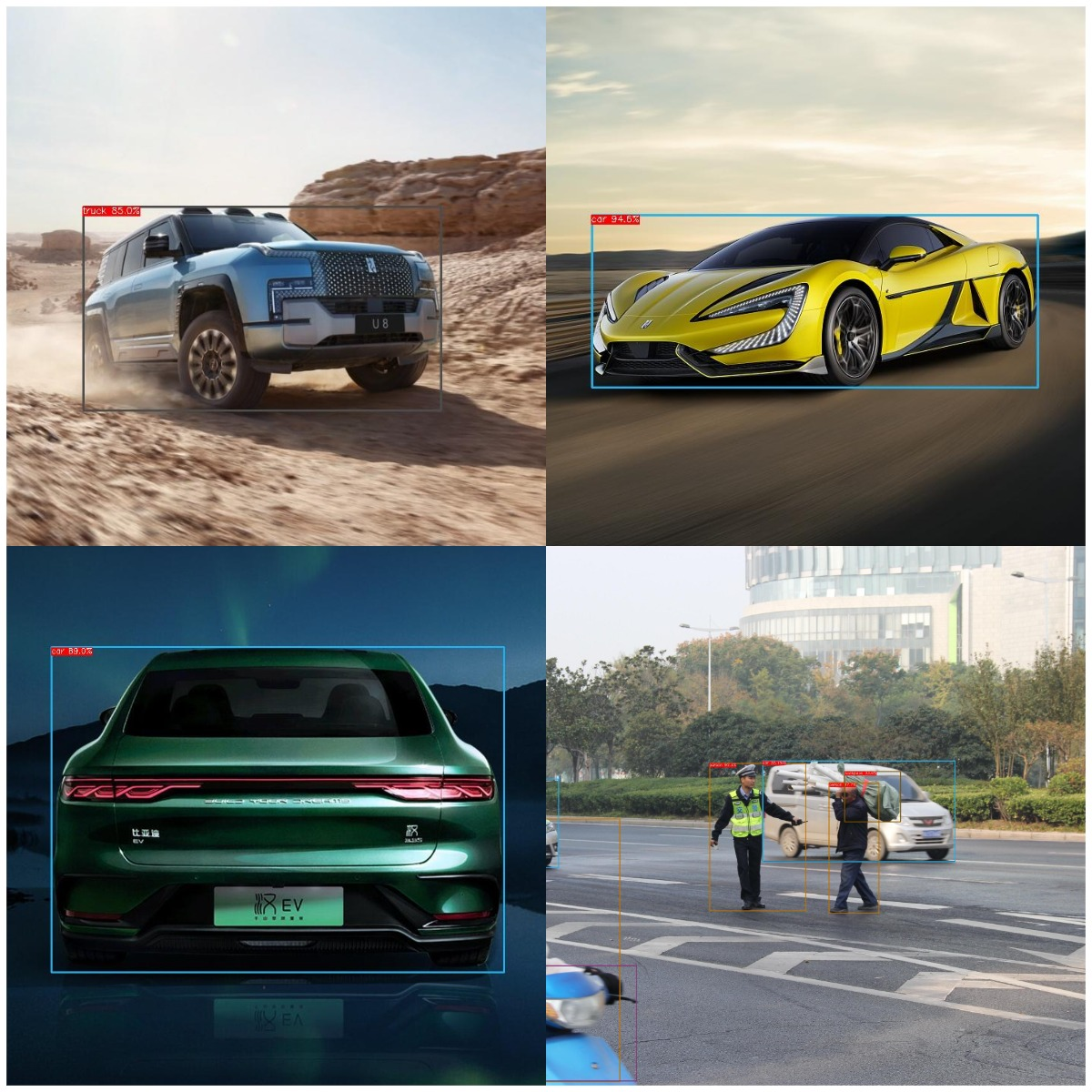

Unzip the inference\yolov8-trt\yolov8-trt\models\yolov8n_b4.zip file to the current directory to obtain the compiled TRT engine yolov8n_b4.bin.

Note:

A TensorRT engine is tied to the GPU it was built on, so rebuild the engine when you switch to a different GPU model.

Modify this part:

    cudaSetDevice(0); // GPU ID

    // Input size and batch size; these should match the shape the engine was built with.
    int img_h = 640;
    int img_w = 640;
    int batch_size = 4;

    const std::string engine_file_path = "models\\yolov8n_b4.bin"; // path to the TRT engine
    std::string out_path = "results\\"; // path to save results

    // One image path per batch slot.
    std::vector<std::string> img_path_list;
    img_path_list.push_back("images\\1.jpg"); // image path
    img_path_list.push_back("images\\2.jpg");
    img_path_list.push_back("images\\3.jpg");
    img_path_list.push_back("images\\4.jpg");

Compile and run main.cpp.
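The configuration above supplies exactly four image paths for `batch_size = 4`. When the number of images is not a multiple of the batch size, one common approach is to pad the last batch. The Python sketch below illustrates that idea only; `make_batches` is a hypothetical helper and not part of main.cpp, and padding by repeating the last image is an assumption rather than the project's documented behavior.

```python
from typing import List


def make_batches(paths: List[str], batch_size: int) -> List[List[str]]:
    """Split paths into batches of exactly batch_size items.

    The last batch is padded by repeating its final path, so every batch
    fills all slots of a fixed-batch engine (assumed padding strategy).
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    batches: List[List[str]] = []
    for start in range(0, len(paths), batch_size):
        batch = paths[start:start + batch_size]
        # Pad an incomplete final batch with copies of its last image.
        batch = batch + [batch[-1]] * (batch_size - len(batch))
        batches.append(batch)
    return batches


image_paths = [f"images\\{i}.jpg" for i in range(1, 6)]  # five images
for batch in make_batches(image_paths, batch_size=4):
    print(batch)
```

With this strategy, the detections produced for the padded slots would have to be discarded after inference.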

| model name | input size | batch size | precision | language | GPU | ms/img |
|---|---|---|---|---|---|---|
| yolov8n | 640x640x3 | 1 | FP32 | C++ | GTX 1060 | 5.3 |
| yolov8n | 640x640x3 | 4 | FP32 | C++ | GTX 1060 | 4.35 |
| yolov8l | 640x640x3 | 1 | FP32 | C++ | GTX 1060 | 41 |
| yolov8l | 640x640x3 | 4 | FP32 | C++ | GTX 1060 | 38.25 |
| yolov8m | 1024x1024x3 | 4 | FP32 | C++ | GTX 1060 | 52.46 |
| yolov8m | 1024x1024x3 | 4 | FP16 | C++ | GTX 1060 | 51.05 |
| yolov8m | 1024x1024x3 | 4 | INT8 | C++ | GTX 1060 | 23.23 |
| yolov8m | 1024x1024x3 | 4 | FP32 | C++ | RTX 3070 | 19.625 |
| yolov8m | 1024x1024x3 | 4 | FP16 | C++ | RTX 3070 | 6.095 |
| yolov8m | 1024x1024x3 | 4 | INT8 | C++ | RTX 3070 | 3.1 |
| yolov8m | 1024x1024x3 | 4 | FP32 | C++ | RTX 4070 | 12.27 |
| yolov8m | 1024x1024x3 | 4 | FP16 | C++ | RTX 4070 | 4.49 |
| yolov8m | 1024x1024x3 | 4 | INT8 | C++ | RTX 4070 | 2.74 |

NOTICE:

The GTX 1060 is an older GPU with limited inference speed. It does not fully support FP16 precision and has no FP16 Tensor Cores, which is why FP16 brings almost no speedup over FP32 on that card in the table above. 30-series or 40-series GPUs perform significantly better.
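To make the benchmark numbers easier to compare, the ms/img values for the yolov8m rows (1024x1024, batch size 4) can be converted into throughput and precision speedups. The sketch below does this arithmetic; it assumes ms/img means the batch latency divided by the batch size, and the helper names `images_per_second` and `speedup` are made up for this example.

```python
def images_per_second(ms_per_img: float) -> float:
    """Convert a per-image latency in milliseconds into images per second."""
    return 1000.0 / ms_per_img


def speedup(baseline_ms: float, new_ms: float) -> float:
    """How many times faster new_ms is compared with baseline_ms."""
    return baseline_ms / new_ms


# ms/img for yolov8m, 1024x1024x3, batch size 4, copied from the table.
# Keys are the GPU model number and the precision.
latency_ms = {
    "1060": {"FP32": 52.46, "FP16": 51.05, "INT8": 23.23},
    "3070": {"FP32": 19.625, "FP16": 6.095, "INT8": 3.1},
    "4070": {"FP32": 12.27, "FP16": 4.49, "INT8": 2.74},
}

for gpu, by_precision in latency_ms.items():
    fp32 = by_precision["FP32"]
    for precision, ms in by_precision.items():
        print(
            f"{gpu} {precision}: {images_per_second(ms):7.1f} img/s, "
            f"{speedup(fp32, ms):.2f}x vs FP32"
        )
```

By this arithmetic, FP16 is roughly 3.2 times faster than FP32 on the 3070 but only about 1.03 times faster on the 1060, which matches the notice above.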