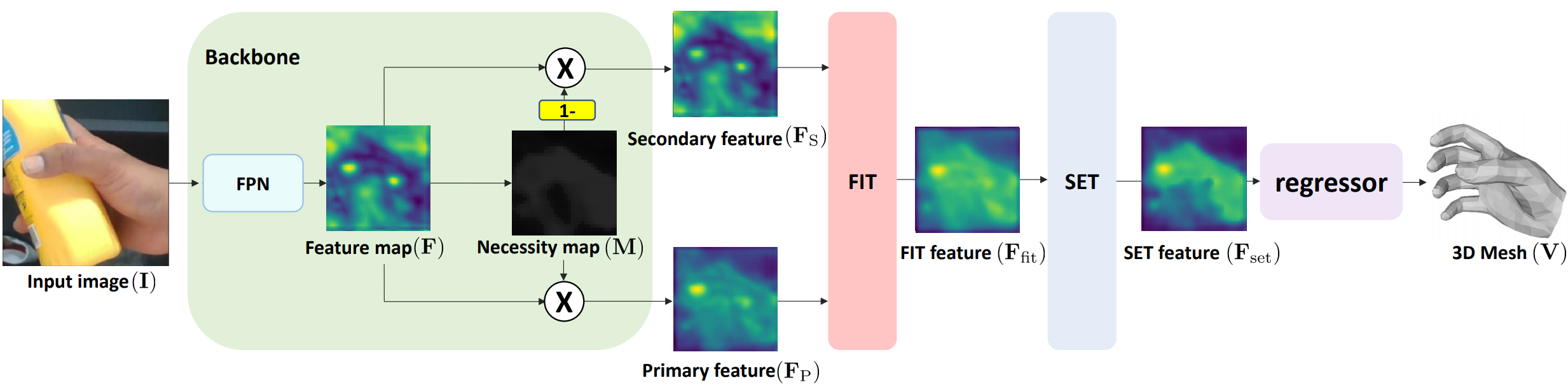

This repository is the official PyTorch implementation of HandOccNet: Occlusion-Robust 3D Hand Mesh Estimation Network (CVPR 2022). Below is the overall pipeline of HandOccNet.

- Install PyTorch and Python >= 3.7.4, then run `sh requirements.sh`.
- Download `snapshot_demo.pth.tar` from here and place it in the `demo` folder.
- Prepare `input.jpg` in the `demo` folder.
- Download `MANO_RIGHT.pkl` from here and place it in `common/utils/manopth/mano/models`.
- Go to the `demo` folder and edit `bbox` in here.
- Run `python demo.py --gpu 0` if you want to run on GPU 0.
- The demo writes `hand_bbox.png`, `hand_image.png`, and `output.obj`.
- Run `python demo_fitting.py --gpu 0 --depth 0.5` if you want to get the hand mesh's translation from the camera. The `--depth` argument is the initial value for the optimization.
- The fitting demo writes `fitting_input_3d_mesh.json`, which contains the translation and MANO parameters, along with `fitting_input_3dmesh.obj`, `fitting_input_2d_prediction.png`, and `fitting_input_projection.png`.
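Before running the demo, it can help to confirm that the files named in the steps above are in place. The following is a minimal sketch and not part of the repository: the helper name `missing_demo_files` is hypothetical, and the paths are assumed to be relative to the repository root.

```python
from pathlib import Path

# Files the demo steps ask for, relative to the repository root.
DEMO_PREREQUISITES = (
    "demo/snapshot_demo.pth.tar",
    "demo/input.jpg",
    "common/utils/manopth/mano/models/MANO_RIGHT.pkl",
)


def missing_demo_files(root):
    """Return the prerequisite paths that are not regular files under `root`.

    Hypothetical helper for illustration; the repository does not ship it.
    """
    root = Path(root)
    return [rel for rel in DEMO_PREREQUISITES if not (root / rel).is_file()]


if __name__ == "__main__":
    # Assumes the script is started from the repository root.
    missing = missing_demo_files(".")
    if missing:
        print("Missing before running demo.py:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All demo prerequisites found.")
```

An empty list means `demo.py` should at least find its inputs; the check says nothing about whether the downloaded files are valid.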

The `${ROOT}` directory is organized as below.

    ${ROOT}
    |-- data
    |-- demo
    |-- common
    |-- main
    |-- output

- `data` contains data loading code and soft links to the image and annotation directories.
- `demo` contains demo code.
- `common` contains the core code for HandOccNet.
- `main` contains high-level code for training or testing the network.
- `output` contains logs, trained models, visualized outputs, and test results.

You need to follow the directory structure of the data as below.

    ${ROOT}
    |-- data
    |   |-- HO3D
    |   |   |-- data
    |   |   |   |-- train
    |   |   |   |   |-- ABF10
    |   |   |   |   |-- ......
    |   |   |   |-- evaluation
    |   |   |   |-- annotations
    |   |   |   |   |-- HO3D_train_data.json
    |   |   |   |   |-- HO3D_evaluation_data.json
    |   |-- DEX_YCB
    |   |   |-- data
    |   |   |   |-- 20200709-subject-01
    |   |   |   |-- ......
    |   |   |   |-- annotations
    |   |   |   |   |-- DEX_YCB_s0_train_data.json
    |   |   |   |   |-- DEX_YCB_s0_test_data.json

- Download HO3D (version 2) data and annotation files [data][annotation files]
- Download DexYCB data and annotation files [data][annotation files]
- For the MANO layer, I used manopth. The repo is already included in `common/utils/manopth`.
- Download `MANO_RIGHT.pkl` from here and place it in `common/utils/manopth/mano/models`.

You need to follow the directory structure of the output folder as below.

    ${ROOT}
    |-- output
    |   |-- log
    |   |-- model_dump
    |   |-- result
    |   |-- vis

- Creating the `output` folder as a soft link rather than a regular folder is recommended, because it can take up a large amount of storage.
- The `log` folder contains the training log file.
- The `model_dump` folder contains saved checkpoints for each epoch.
- The `result` folder contains the final estimation files generated in the testing stage.
- The `vis` folder contains visualized results.
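One way to set up the output layout above as a soft link is sketched below. This is an illustration under assumptions, not a script from the repository: the function name `link_output_dir` and the storage location are hypothetical, and creating symlinks may need extra privileges on Windows.

```python
import os
from pathlib import Path

# Subfolders listed in the output directory tree.
OUTPUT_SUBFOLDERS = ("log", "model_dump", "result", "vis")


def link_output_dir(root, storage_dir):
    """Create the subfolders in `storage_dir` and link `<root>/output` to it.

    Hypothetical helper: `storage_dir` is any location with enough free space.
    """
    storage = Path(storage_dir).resolve()
    for name in OUTPUT_SUBFOLDERS:
        (storage / name).mkdir(parents=True, exist_ok=True)

    link = Path(root) / "output"
    # Refuse to overwrite an existing folder or link.
    if link.is_symlink() or link.exists():
        raise FileExistsError(f"{link} already exists; remove or rename it first")
    os.symlink(storage, link, target_is_directory=True)
    return link
```

For example, `link_output_dir(".", "/path/to/large/disk/handoccnet_output")` run from the repository root would leave `output` pointing at the larger disk; the path here is a placeholder.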

- Install PyTorch and Python >= 3.7.4, then run `sh requirements.sh`.
- In `main/config.py`, you can change the model settings, including the dataset to use and the input size.

In the `main` folder, set `trainset` in `config.py` (as 'HO3D' or 'DEX_YCB') and run `python train.py --gpu 0-3` to train HandOccNet on GPUs 0, 1, 2, and 3. `--gpu 0,1,2,3` can be used instead of `--gpu 0-3`.
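The equivalence of the two `--gpu` spellings can be illustrated with a short sketch. The function name `expand_gpu_ids` is hypothetical, and this only assumes that a range such as `0-3` is inclusive at both ends; it is not taken from the repository's argument parser.

```python
def expand_gpu_ids(spec):
    """Expand an inclusive range like '0-3' into '0,1,2,3'.

    Comma-separated lists and single ids are returned unchanged.
    Hypothetical helper for illustration only.
    """
    if "-" in spec:
        first, last = (int(part) for part in spec.split("-"))
        return ",".join(str(i) for i in range(first, last + 1))
    return spec


if __name__ == "__main__":
    for spec in ("0-3", "0,1,2,3", "0"):
        print(spec, "->", expand_gpu_ids(spec))
```

A script could then pass the expanded string to the `CUDA_VISIBLE_DEVICES` environment variable, which accepts a comma-separated list of device ids.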

Place the trained model in `output/model_dump/`.

In the `main` folder, set `testset` in `config.py` (as 'HO3D' or 'DEX_YCB') and run `python test.py --gpu 0-3 --test_epoch {test epoch}` to test HandOccNet on GPUs 0, 1, 2, and 3 with the model saved at epoch `{test epoch}`. `--gpu 0,1,2,3` can be used instead of `--gpu 0-3`.

- For the HO3D dataset, `pred{test epoch}.zip` will be generated in the `output/result` folder. You can upload it to the codalab challenge and see the results.
- Our trained model can be downloaded from here

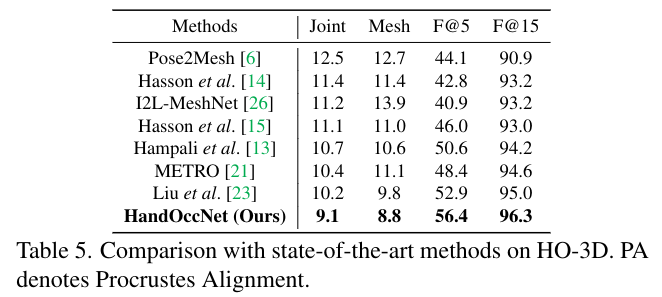

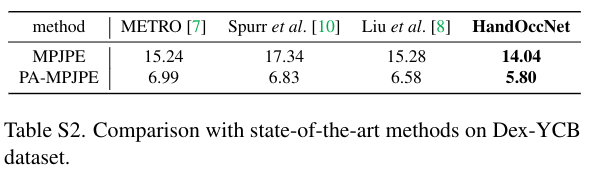

Here I report the performance of the HandOccNet.

    @InProceedings{Park_2022_CVPR_HandOccNet,
        author = {Park, JoonKyu and Oh, Yeonguk and Moon, Gyeongsik and Choi, Hongsuk and Lee, Kyoung Mu},
        title = {HandOccNet: Occlusion-Robust 3D Hand Mesh Estimation Network},
        booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},
        year = {2022}
    }

For this project, we relied on research codes from: