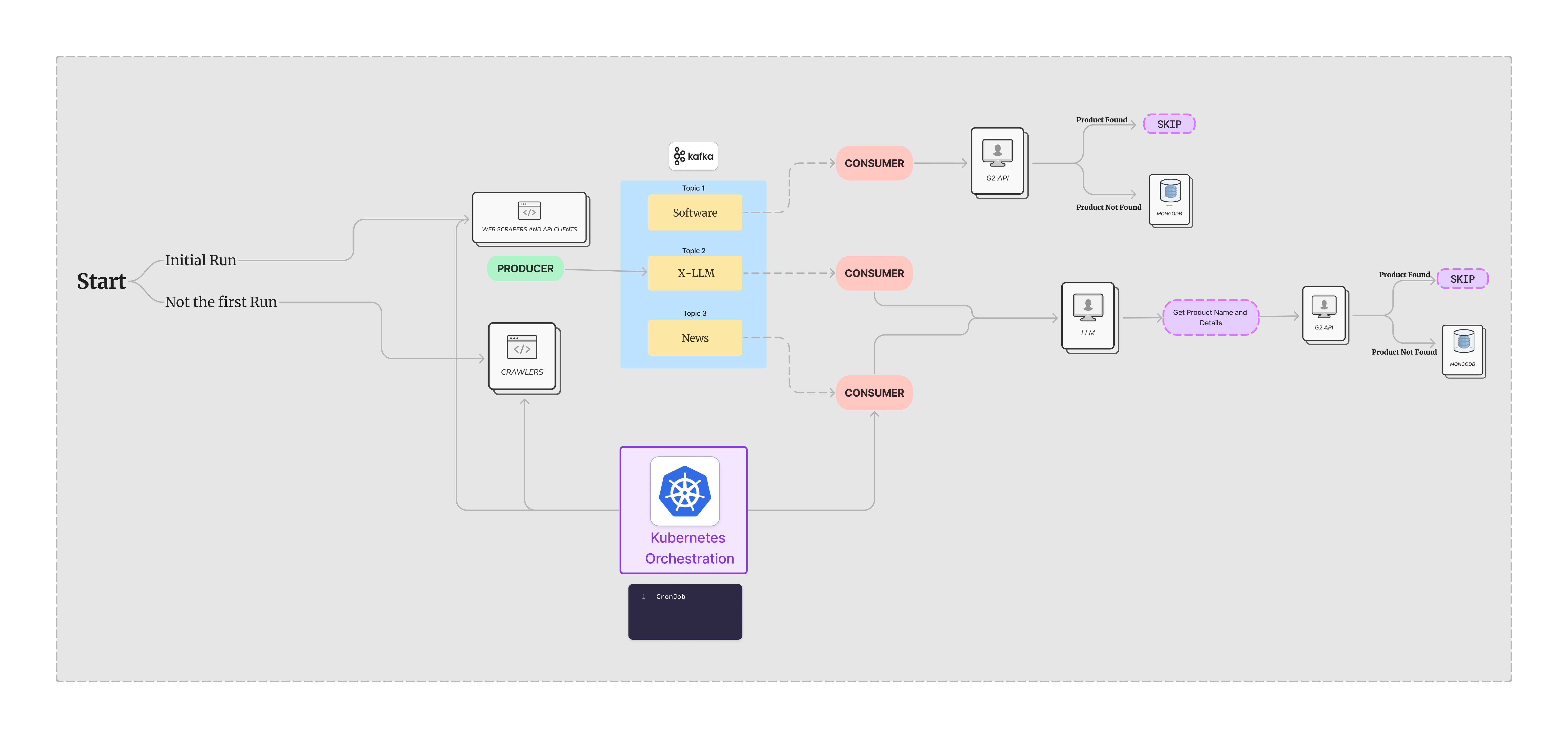

This project automates the listing of B2B software products on G2, ensuring that new software is promptly and efficiently added to the G2 marketplace. By leveraging advanced web scraping techniques, real-time data streaming, and automated workflows, this system maximizes the visibility and accessibility of new software products, particularly in regions where G2 has low penetration.

- Fast and Efficient Listings: Automate the detection and listing of new software products to ensure real-time updates.

- Global Reach: Capture and list software launches worldwide, especially from underrepresented regions.

- Technological Innovation: Utilize modern technologies including web scraping, real-time data streams, and cloud-native services to maintain an efficient workflow.

- Description: These are the primary sources where detailed and technical data about software products can be found. Key sources include software directories, official product pages, and industry-specific news portals.

- Scraping Techniques: Utilize BeautifulSoup for parsing HTML content from static pages and Selenium for interacting with JavaScript-driven dynamic web pages to extract critical data about software releases and updates.

- Websites: Product Hunt, Slashdot, BetaList, and many other tech news sites regularly post about new software products.

- Purpose: To capture press releases, news articles, and social media posts that announce or discuss the launch of new B2B software products.

- Approach: Deploy custom crawlers on tech news portals such as TechCrunch and Wired, and on social media platforms such as Twitter and LinkedIn. These crawlers use Selenium to mimic human browsing patterns, which helps collect data promptly and accurately.

- Twitter/X: monitored so that new products announced on the platform can also be captured for listing.

- TechAfrica: monitored so that products from low-visibility countries can be tracked.

- Web Scraping: BeautifulSoup, Selenium

- Data Streaming: Apache Kafka

- Data Storage and Management: MongoDB, Docker, Kubernetes

- APIs and Advanced Processing: G2 API, Large Language Models (LLMs)

Comprehensive scrapers are deployed initially to perform a deep crawl of targeted sources to populate the database with existing software products. BeautifulSoup is used for static content and Selenium for dynamic content.
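As a rough illustration of the static-page case, the sketch below pulls product names and links out of plain HTML. It uses Python's standard `html.parser` as a dependency-free stand-in for BeautifulSoup, which is what the project itself uses. The `product` CSS class and the sample markup are hypothetical; real sources will need their own selectors.

```python
from html.parser import HTMLParser


class ProductLinkParser(HTMLParser):
    """Collects name/url pairs from <a class="product"> elements.

    The "product" class is a hypothetical marker, not taken from any real site.
    """

    def __init__(self):
        super().__init__()
        self.products = []   # collected {"name": ..., "url": ...} records
        self._href = None    # href of the product link currently being read
        self._text = []      # text fragments seen inside that link

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        classes = (attr_map.get("class") or "").split()
        if tag == "a" and "product" in classes:
            self._href = attr_map.get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            name = "".join(self._text).strip()
            if name:
                self.products.append({"name": name, "url": self._href})
            self._href = None


def extract_products(html):
    """Return every product link found in a static HTML string."""
    parser = ProductLinkParser()
    parser.feed(html)
    parser.close()
    return parser.products


sample = """
<ul>
  <li><a class="product" href="/p/acme-crm">Acme CRM</a></li>
  <li><a href="/about">About us</a></li>
  <li><a class="product featured" href="/p/ledgerly">Ledgerly</a></li>
</ul>
"""
print(extract_products(sample))
```

Pages that render their content with JavaScript will not expose these elements in the raw HTML, which is where Selenium comes in.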

Scheduled daily crawls update the database with new additions and changes, managed via cron jobs or Kubernetes CronJobs.
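One way a daily crawl could separate new additions from changes is sketched below. The `diff_crawl` function, the `name` key, and the record fields are assumptions made for illustration; the project's actual matching logic may differ.

```python
def diff_crawl(existing, crawled, key="name"):
    """Split a fresh crawl into records that are new and records that changed.

    Records are matched on a case-insensitive key (hypothetically the
    product name); unchanged records are dropped.
    """
    known = {record[key].strip().lower(): record for record in existing}
    added, changed = [], []
    for record in crawled:
        previous = known.get(record[key].strip().lower())
        if previous is None:
            added.append(record)
        elif previous != record:
            changed.append(record)
    return added, changed


existing = [
    {"name": "Acme CRM", "version": "1.0"},
    {"name": "Ledgerly", "version": "2.1"},
]
crawled = [
    {"name": "Acme CRM", "version": "1.1"},   # changed
    {"name": "Ledgerly", "version": "2.1"},   # unchanged
    {"name": "Shipyard", "version": "0.9"},   # new
]
added, changed = diff_crawl(existing, crawled)
print("added:", added)
print("changed:", changed)
```

Only the `added` and `changed` lists would then need to be written back, which keeps the daily run cheap compared with the initial deep crawl.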

Extracted data is streamed in real time into Kafka topics designed to segment the data efficiently:

- software for direct product data

- x-llm for processed textual data needing further extraction

- news for updates from news sources about software products
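The routing into these three topics might look roughly like the sketch below. The `source` field and its values are hypothetical, and the producer is passed in as any object with a `send(topic, value)` method (the shape of kafka-python's `KafkaProducer.send`), so the example runs without a broker.

```python
import json

# Hypothetical mapping from a record's "source" field to the topics above.
TOPIC_BY_SOURCE = {
    "directory": "software",
    "twitter": "x-llm",
    "news": "news",
}


def route(record):
    """Return the (topic, payload bytes) pair for one scraped record."""
    source = record.get("source")
    if source not in TOPIC_BY_SOURCE:
        raise ValueError(f"unknown source: {source!r}")
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return TOPIC_BY_SOURCE[source], payload


def publish(producer, record):
    """Send one record to its topic using the supplied producer."""
    topic, payload = route(record)
    producer.send(topic, payload)


class RecordingProducer:
    """Test double that remembers what was sent instead of talking to Kafka."""

    def __init__(self):
        self.sent = []

    def send(self, topic, value):
        self.sent.append((topic, value))


producer = RecordingProducer()
publish(producer, {"source": "directory", "name": "Acme CRM"})
publish(producer, {"source": "twitter", "text": "We just launched Shipyard!"})
print([topic for topic, _ in producer.sent])
```

Keeping the routing decision in a pure function makes it easy to test separately from the Kafka connection itself.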

Kafka consumers process data on the fly. Each product is checked against the G2 API, and products that are not yet listed on G2 are added to MongoDB.
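A minimal sketch of that consumer step follows, assuming the G2 lookup can answer whether a product is already listed. The `is_listed_on_g2` callable is a hypothetical wrapper around the G2 API, and `InMemoryCollection` stands in for a MongoDB collection so the example runs on its own; with pymongo, the same `update_one(..., upsert=True)` call would apply to a real collection.

```python
class InMemoryCollection:
    """Tiny stand-in exposing the one pymongo-style method used below."""

    def __init__(self):
        self.docs = {}

    def update_one(self, query, update, upsert=False):
        key = query["name"]
        if key in self.docs or upsert:
            self.docs.setdefault(key, {}).update(update["$set"])


def handle_product(product, is_listed_on_g2, collection):
    """Store the product only if G2 does not already list it.

    Returns True when the product was stored, False when it was skipped.
    """
    if is_listed_on_g2(product["name"]):
        return False
    # Upsert keyed on name so a re-delivered Kafka message does not duplicate it.
    collection.update_one(
        {"name": product["name"]},
        {"$set": product},
        upsert=True,
    )
    return True


already_listed = {"Acme CRM"}          # hypothetical G2 lookup result
store = InMemoryCollection()
check = lambda name: name in already_listed

print(handle_product({"name": "Acme CRM"}, check, store))
print(handle_product({"name": "Shipyard", "url": "https://example.com/shipyard"}, check, store))
print(sorted(store.docs))
```

Upserting rather than inserting is a design choice here: Kafka consumers can see the same message more than once, and an upsert keeps the write idempotent.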

LLMs analyze textual data from news and social media to extract and verify new product details.
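The extraction step could be sketched as below. The prompt wording, the JSON reply shape, and the `complete` callable (a hypothetical function that sends a prompt to whichever LLM is in use and returns its text reply) are all assumptions; the point is only that the model's reply is validated before anything downstream trusts it.

```python
import json

# Hypothetical prompt; the doubled braces are literal braces for str.format.
PROMPT_TEMPLATE = (
    "Extract any newly launched B2B software product from the text below. "
    'Reply with JSON only: {{"name": string or null, "description": string or null}}.'
    "\n\nText: {text}"
)


def extract_product(text, complete):
    """Ask the model for product details and validate its reply.

    Returns a dict with "name" and "description", or None when the reply
    is not valid JSON or names no product.
    """
    reply = complete(PROMPT_TEMPLATE.format(text=text))
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    name = data.get("name")
    if not isinstance(name, str) or not name.strip():
        return None
    description = data.get("description")
    if not isinstance(description, str):
        description = ""
    return {"name": name.strip(), "description": description.strip()}


def fake_complete(prompt):
    """Canned reply standing in for a real model call."""
    return '{"name": " Shipyard ", "description": "Deployment tracking for teams"}'


print(extract_product("Shipyard launched today for B2B teams.", fake_complete))
print(extract_product("Nothing new here.", lambda prompt: "Sorry, I cannot help."))
```

Replies that fail validation are simply dropped in this sketch; a real pipeline might retry or log them instead.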

Kubernetes ensures scalable and resilient deployment of all system components.

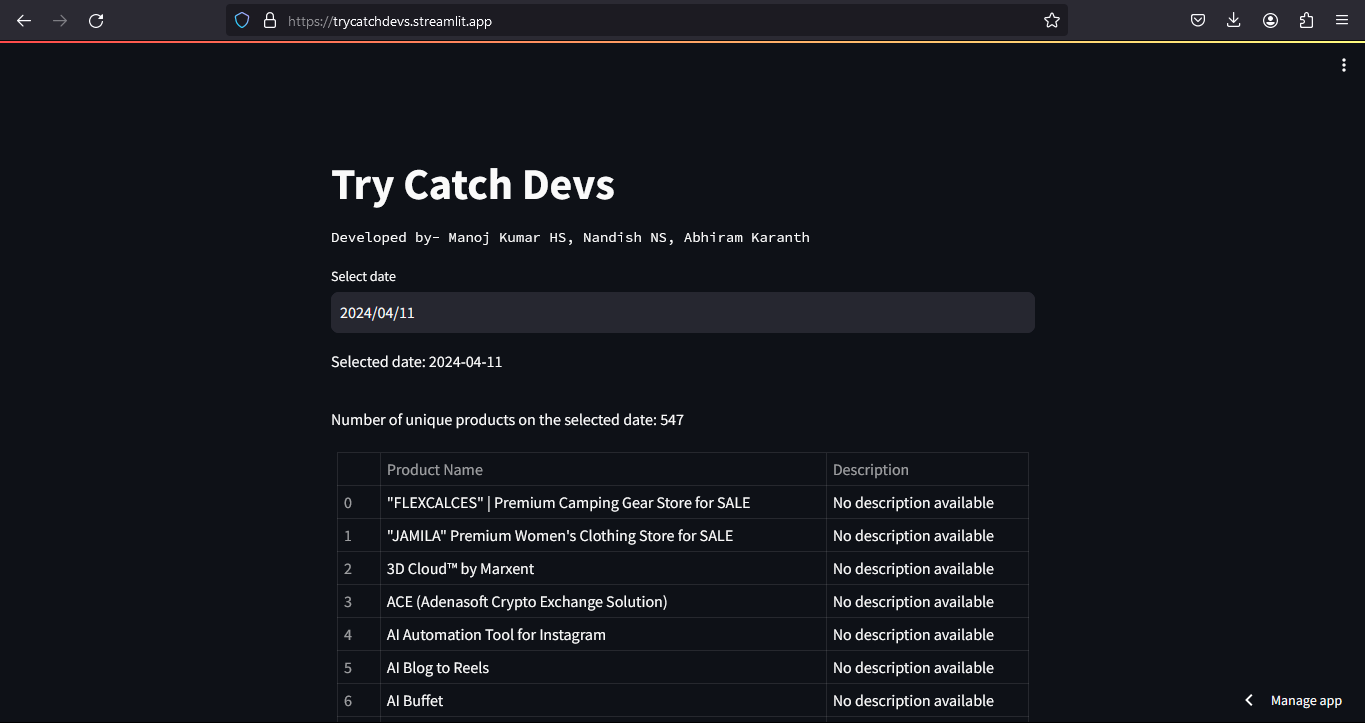

https://trycatchdevs.streamlit.app/

Run this command in the root directory of the project:

# start zookeeper and kafka

docker-compose up -d

Shut down Kafka and ZooKeeper:

# stop zookeeper and kafka

docker-compose down

# Build the image

docker build -t scrape-products .

# run the image

docker run --network="host" scrape-products

# Build the product consumer

# Go to the respective directory

cd KafkaConsumer\Software

docker build -t software-consumer .

# run the image

docker run --network="host" software-consumer

# Build the Twitter consumer

# Go to the respective directory

cd KafkaConsumer\TwitterLLM

docker build -t twitter-consumer .

# run the image

docker run --network="host" twitter-consumer

# Build the news consumer

# Go to the respective directory

cd KafkaConsumer\NewsLLM

docker build -t news-consumer .

# run the image

docker run --network="host" news-consumer

kubectl apply -f kafka-zoopkeeper-deployment.yaml

kubectl apply -f scrape-products-cronjob.yaml

kubectl apply -f product-cronjob.yaml

kubectl apply -f twitter-cronjob.yaml

kubectl apply -f news-cronjob.yaml

kubectl get cronjobs

kubectl get pods

kubectl get deployments