This codebase is built upon the Model Soups repository.

- (Aug. 2024) Model Stock was selected as an oral presentation at ECCV 2024! 🎉

- We will release the full code soon. Stay tuned!

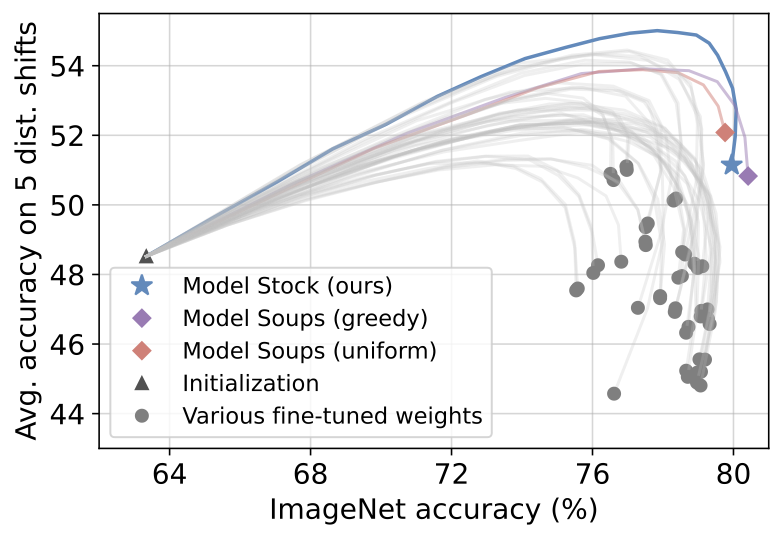

This paper introduces an efficient fine-tuning method for large pre-trained models that offers strong in-distribution (ID) and out-of-distribution (OOD) performance. Breaking away from traditional practices that require a multitude of fine-tuned models for averaging, our approach uses significantly fewer models to obtain the final weights, yet yields superior accuracy. Drawing on key insights into the weight space of fine-tuned models, we uncover a strong link between performance and proximity to the center of the weight space. Based on this, we introduce a method that approximates a center-close weight using only two fine-tuned models and can be applied during or after training. Our layer-wise weight averaging technique surpasses state-of-the-art methods such as Model Soup while using only two fine-tuned models. We call this strategy Model Stock, highlighting its reliance on selecting a minimal number of models to obtain a better-optimized averaged model. We demonstrate the efficacy of Model Stock with fine-tuned models based on pre-trained CLIP architectures, achieving remarkable performance on both ID and OOD tasks on standard benchmarks, all while adding barely any computational overhead.

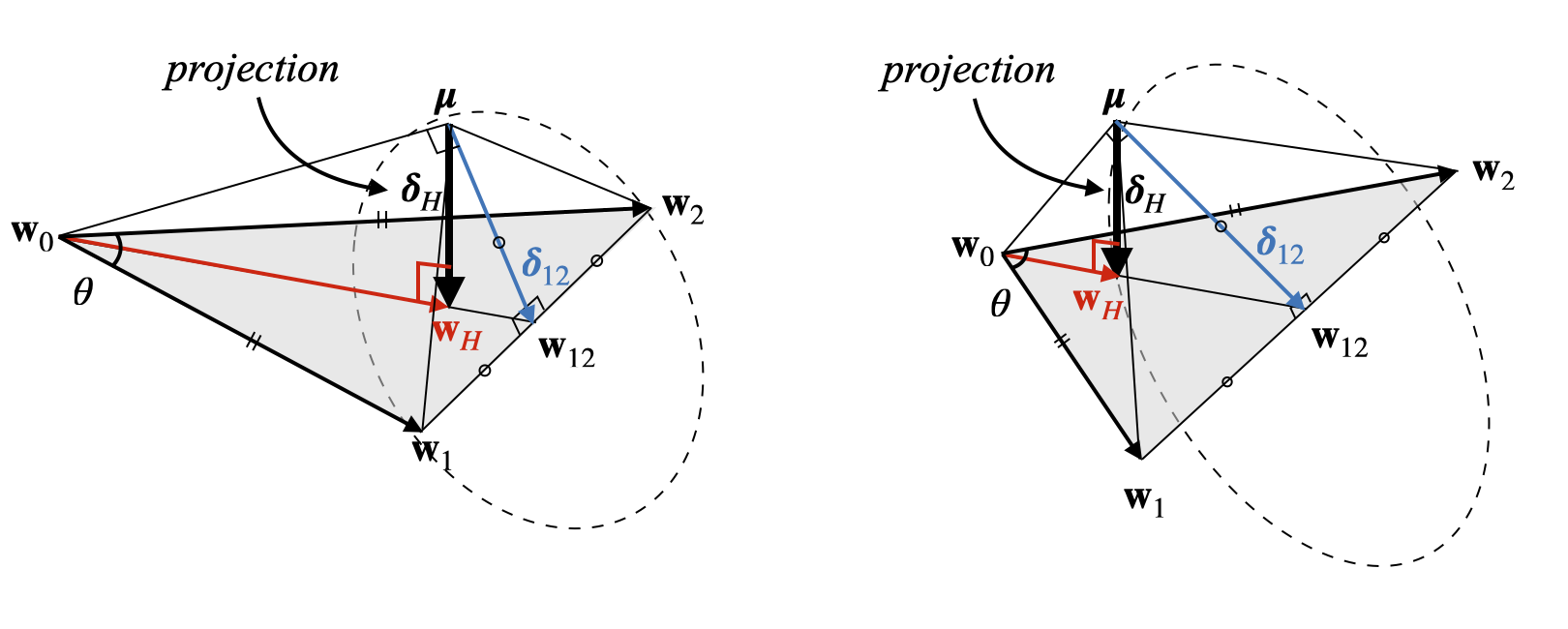

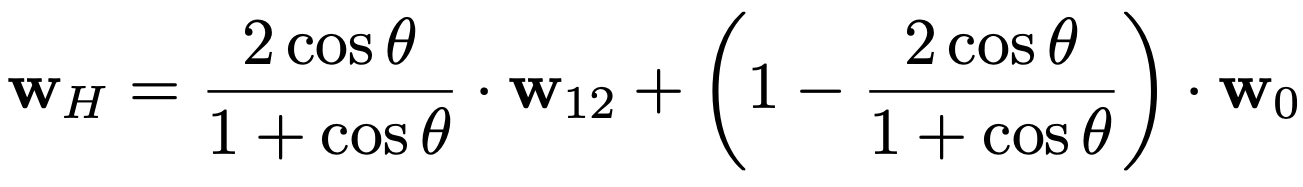

We utilize the geometric properties of fine-tuned weights. For each layer, we find the optimal merging ratio by minimizing the distance between the merged weight and the center of the fine-tuned weights. The following figure shows an overview of Model Stock.

We present two scenarios: a small angle (left) and a large angle (right) between two fine-tuned weights (w_1, w_2) relative to a pre-trained weight (w_0). The gray triangle spanned by these weights represents our search space. The point on this triangle closest to the ideal center (μ) is the perpendicular foot (w_H), whose position is determined by the angle between the fine-tuned models. When the angle (θ) is large (right), w_H relies more on w_0. For details, please refer to our paper.
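For reference, the position of w_H can be summarized in closed form for a single layer. This restates the two-model result from the paper under its assumptions (see the paper for the derivation): w_H interpolates between the average of the fine-tuned weights and w_0 with a ratio t that depends only on θ, the angle between w_1 − w_0 and w_2 − w_0.

$$
\cos\theta = \frac{\langle w_1 - w_0,\; w_2 - w_0 \rangle}{\lVert w_1 - w_0 \rVert \, \lVert w_2 - w_0 \rVert},
\qquad
t = \frac{2\cos\theta}{1 + \cos\theta},
$$

$$
w_H = t \cdot \frac{w_1 + w_2}{2} + (1 - t)\, w_0 .
$$

At θ = 0 the ratio is t = 1 and w_H is the plain average of the fine-tuned weights; as θ approaches 90°, t goes to 0 and w_H falls back to w_0, matching the large-angle case in the figure. For k fine-tuned models the ratio generalizes to t = k cos θ / (1 + (k − 1) cos θ).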

- Install the Model Soups repository. We will use its `datasets/` and `utils.py`.

- This tutorial notebook will help you understand how Model Stock works. Note that it runs a simplified version of Model Stock without periodic merging.

- This evaluation notebook shows the performance of the pre-uploaded Model Stock weights on ImageNet and five distribution shift benchmarks.

- End-to-end training and evaluation code will be released soon.

- An implementation is also available in merge-kit.
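The notebooks above are the reference. As a rough, framework-free illustration of what a post-training, two-model merge computes, the sketch below applies the interpolation ratio t = 2 cos θ / (1 + cos θ) independently to each layer. Assumptions: each layer is a flat Python list of floats (real checkpoints would be PyTorch state dicts); the names `merge_model_stock`, `merge_layer`, `task_vector_cosine`, and `model_stock_ratio` are hypothetical; and clamping cos θ to [0, 1] plus falling back to w_0 for unchanged layers are safeguards added for this sketch that may not match the official code. Periodic merging during training is not covered.

```python
import math
from typing import Dict, List

# Hypothetical representation: each layer is a flat list of floats.
# Real checkpoints would be PyTorch state dicts of tensors.
StateDict = Dict[str, List[float]]


def model_stock_ratio(cos_theta: float, k: int = 2) -> float:
    """Interpolation ratio t = k*cos(theta) / (1 + (k-1)*cos(theta))."""
    return k * cos_theta / (1 + (k - 1) * cos_theta)


def task_vector_cosine(w0: List[float], w1: List[float], w2: List[float]) -> float:
    """Cosine of the angle between (w1 - w0) and (w2 - w0)."""
    d1 = [a - p for a, p in zip(w1, w0)]
    d2 = [b - p for b, p in zip(w2, w0)]
    dot = sum(x * y for x, y in zip(d1, d2))
    norms_sq = sum(x * x for x in d1) * sum(y * y for y in d2)
    if norms_sq == 0.0:
        # Assumption of this sketch: a layer left unchanged by fine-tuning
        # gets cos = 0, i.e. t = 0, so it falls back to w0.
        return 0.0
    return dot / math.sqrt(norms_sq)


def merge_layer(w0: List[float], w1: List[float], w2: List[float]) -> List[float]:
    # Assumption of this sketch: clamp cos(theta) to [0, 1] so t stays in [0, 1].
    cos_theta = min(max(task_vector_cosine(w0, w1, w2), 0.0), 1.0)
    t = model_stock_ratio(cos_theta, k=2)
    # w_H = t * (w1 + w2) / 2 + (1 - t) * w0, element-wise
    return [t * (a + b) / 2 + (1 - t) * p for p, a, b in zip(w0, w1, w2)]


def merge_model_stock(sd0: StateDict, sd1: StateDict, sd2: StateDict) -> StateDict:
    """Apply the two-model merge independently to every layer (layer-wise ratio)."""
    return {name: merge_layer(sd0[name], sd1[name], sd2[name]) for name in sd0}


if __name__ == "__main__":
    pretrained = {"fc": [0.0, 0.0], "head": [0.0, 0.0]}
    finetuned_a = {"fc": [1.0, 0.0], "head": [1.0, 1.0]}
    finetuned_b = {"fc": [0.0, 1.0], "head": [1.0, 1.0]}
    merged = merge_model_stock(pretrained, finetuned_a, finetuned_b)
    print(merged)  # prints {'fc': [0.0, 0.0], 'head': [1.0, 1.0]}
```

In the toy example, the `fc` layer's fine-tuned updates are orthogonal (θ = 90°), so t = 0 and the merge keeps the pre-trained weights, while the `head` layer's updates coincide (θ = 0), so t = 1 and the merge equals their average. Computing t per layer rather than once per model is what makes the averaging layer-wise.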

@inproceedings{,
  title={Model Stock: All we need is just a few fine-tuned models},
  author={Jang, Dong-Hwan and Yun, Sangdoo and Han, Dongyoon},
  booktitle={Proceedings of the European Conference on Computer Vision},
  year={2024},
}