This work has been done as part of a project for the COMS 6998: Conversational AI course by Prof. Zhou Yu at Columbia University in the Fall 2022 semester.

Empathetic response generation is a crucial aspect of many chatbot conversations and tasks. Previous research has shown promising results in this area by leveraging various annotated datasets and approaches, producing a wide range of literature and tools for building empathetic conversational agents. We propose a modified version of the MoEL model (Lin et al., 2019) that takes into account not only emotion but also the speaker’s persona, in order to capture the nuances of how different personalities express empathy. We attempt to incorporate methods from different empathetic dialogue paradigms into a single model, with the aim of achieving richer results. Specifically, we adapt our model to the PEC dataset (Zhong et al., 2020) and leverage its rich persona information about speakers. We also explore whether additional auxiliary signals can improve empathetic natural language generation by experimenting with meaningful variations of MoEL.

This project consists of four major components:

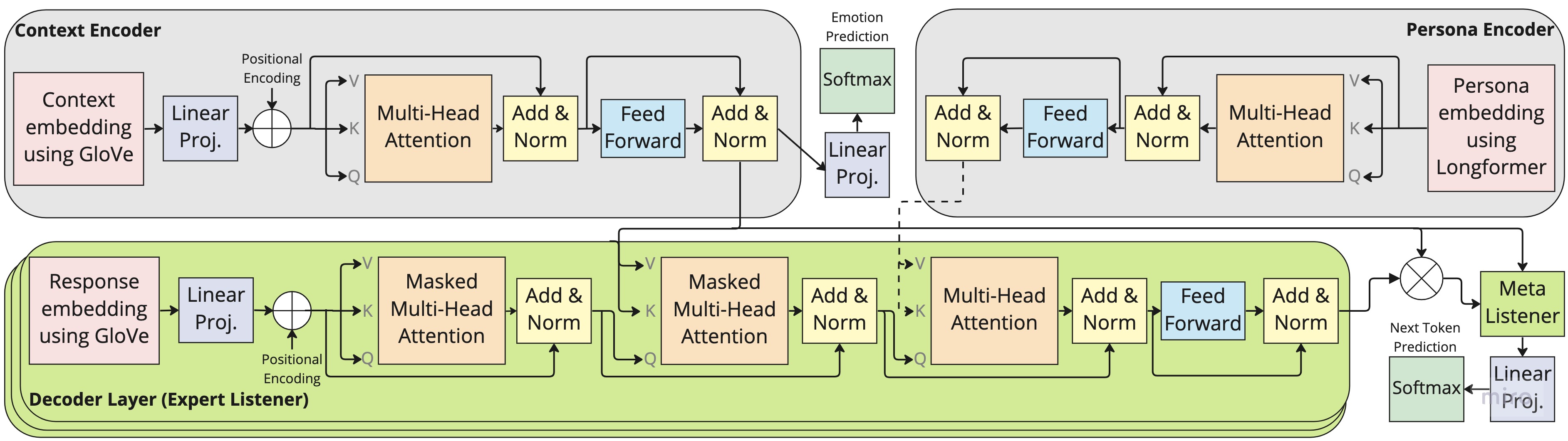

- Processing the PEC dataset to create embeddings from persona sentences. To capture the entire persona, we used Longformer, which accepts inputs of up to 4,096 tokens, to create 3,072-dimensional persona embeddings.

- Training a BERT-based emotion classifier on the Empathetic Dialogues dataset (Rashkin et al., 2018) and using it to annotate the context utterances in PEC with the emotion labels that MoEL requires.

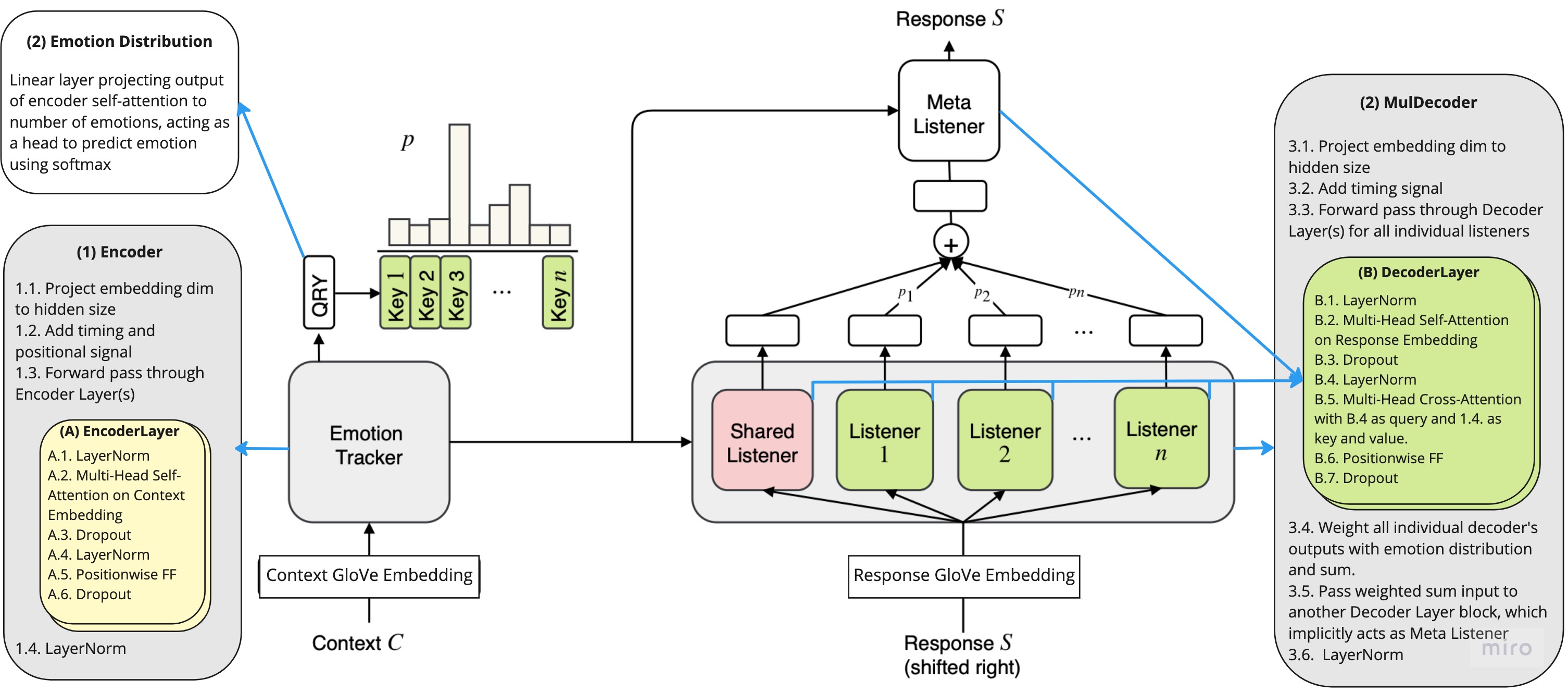

- Understanding MoEL. MoEL is trained on the Empathetic Dialogues dataset, which covers 32 distinct emotions. To reduce the complexity of the task, we grouped similar emotions together and worked with 16 emotion groups instead.

- Modifying MoEL's architecture to condition emotional response generation on persona. We extended MoEL's transformer architecture with a cross-attention layer between the emotional NLG output and the persona embedding. Specifically, an additional decoder block was added on top of each of MoEL's individual decoders. In this new block, the existing decoder's output serves as the query, while the output of a self-attentive persona encoder block serves as the key and value. This is illustrated in the image below:

Strong claims would require extensive human evaluation, but initial indicators suggest that this method increases the expression of sentiment in MoEL's generated text.
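The component list above says Longformer was used to produce 3,072-dimensional persona embeddings but does not say how token vectors were pooled. One common recipe that yields this size is to mean-pool the token vectors of the last few hidden layers and concatenate them: with longformer-base (hidden size 768) four layers give 3,072 dimensions, while with longformer-large (hidden size 1,024) three layers would. The sketch below assumes that recipe; it is an illustration, not the project's confirmed method, and `mean_pool`/`persona_embedding` are hypothetical helpers. They operate on plain nested lists, for example one example's slice of the per-layer hidden states returned by a Hugging Face model called with `output_hidden_states=True`.

```python
from typing import List

Matrix = List[List[float]]  # T tokens x H hidden units


def mean_pool(layer: Matrix, mask: List[int]) -> List[float]:
    """Average the token vectors of one layer, skipping padding (mask == 0)."""
    n_tokens = sum(mask)
    if n_tokens == 0:
        raise ValueError("mask selects no tokens")
    hidden = len(layer[0])
    return [
        sum(tok[h] for tok, m in zip(layer, mask) if m) / n_tokens
        for h in range(hidden)
    ]


def persona_embedding(hidden_states: List[Matrix], mask: List[int], last_k: int = 4) -> List[float]:
    """Concatenate the mean-pooled vectors of the last `last_k` layers.

    ASSUMPTION: illustrative pooling recipe; with H = 768 and last_k = 4
    the result has 4 * 768 = 3,072 dimensions.
    """
    embedding: List[float] = []
    for layer in hidden_states[-last_k:]:
        embedding.extend(mean_pool(layer, mask))
    return embedding
```

Masking matters because persona texts of different lengths are padded to a common length in a batch; averaging over padding positions would blur the embedding.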
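The regrouping of 32 emotions into 16 groups described in the component list above amounts to a lookup from each Empathetic Dialogues label to a group index, applied to every dialogue's emotion label before training. The grouping below is a hypothetical example chosen only to show the mechanics; the project's actual 16 groups are not listed here, and `EMOTION_GROUPS`, `LABEL_TO_GROUP`, and `regroup` are illustrative names.

```python
# HYPOTHETICAL grouping of the 32 Empathetic Dialogues emotions into 16 groups.
# Illustrative only: this is not the grouping used in the project.
EMOTION_GROUPS = {
    "fear": ["afraid", "terrified", "anxious", "apprehensive"],
    "anger": ["angry", "furious"],
    "annoyance": ["annoyed", "disgusted"],
    "sadness": ["sad", "devastated", "lonely"],
    "disappointment": ["disappointed"],
    "shame": ["ashamed", "embarrassed", "guilty"],
    "jealousy": ["jealous"],
    "joy": ["joyful", "excited", "content"],
    "surprise": ["surprised", "impressed"],
    "pride": ["proud", "confident"],
    "gratitude": ["grateful"],
    "hope": ["hopeful"],
    "anticipation": ["anticipating", "prepared"],
    "nostalgia": ["nostalgic", "sentimental"],
    "caring": ["caring"],
    "trust": ["trusting", "faithful"],
}

# Invert the table: emotion label -> group index (0..15, in dict order).
LABEL_TO_GROUP = {
    label: group_idx
    for group_idx, labels in enumerate(EMOTION_GROUPS.values())
    for label in labels
}


def regroup(labels):
    """Map a sequence of 32-way emotion labels to 16-way group indices."""
    return [LABEL_TO_GROUP[label] for label in labels]
```

Because each label maps to exactly one group, the model then needs one output class (and, in MoEL, one decoder) per group rather than per original emotion.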
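The persona block described in the component list above is, at its core, cross-attention: the existing decoder's hidden states act as queries, and the self-attentive persona encoder's outputs act as keys and values. The dependency-free sketch below shows single-head scaled dot-product cross-attention followed by a residual connection. It deliberately omits the learned query/key/value projections, multiple heads, layer normalization, and feed-forward sublayer that a full Transformer decoder block would include, and the function names are hypothetical rather than taken from the MoEL code.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Single-head scaled dot-product attention.

    queries: T x d decoder states; keys, values: P x d persona encoder outputs.
    Each output row is a softmax-weighted average of the persona value vectors.
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


def persona_block(decoder_states: Matrix, persona_states: Matrix) -> Matrix:
    """Hypothetical persona block: decoder states attend over persona states,
    and the attended vectors are added back to the decoder states (residual)."""
    attended = cross_attention(decoder_states, persona_states, persona_states)
    return [
        [h + a for h, a in zip(h_row, a_row)]
        for h_row, a_row in zip(decoder_states, attended)
    ]
```

The residual connection keeps the original emotional decoder output intact and lets the persona signal act as an additive adjustment, which is the usual design for stacking a block on top of an existing decoder.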

To train the model, run:

```bash
python3 main.py --model experts --label_smoothing --noam --emb_dim 300 --hidden_dim 300 \
  --hop 1 --heads 2 --topk 5 --cuda --pretrain_emb --softmax --basic_learner --schedule 10000
```

Optional arguments:

- `--wandb_project moel`: log the training run's parameters to wandb.
- `--ed_16`: train MoEL on the Empathetic Dialogues dataset with 16 emotion groups. Requires the numpy arrays MoEL expects to be present in the `ed_16` folder.
- `--pec_2`: train MoEL on the PEC dataset with 2 emotions. Requires the numpy arrays MoEL expects to be present in the `pec_2` folder.
- `--pec_32`: train MoEL on the PEC dataset with 32 emotions. Requires the numpy arrays MoEL expects to be present in the `pec_32` folder.
- `--use_persona`: train MoEL with persona embeddings on the PEC dataset with 32 emotions. Requires the corresponding numpy arrays to be present.
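The dataset flags above each point MoEL at a different folder of pre-built numpy arrays. As a hypothetical sketch (the actual `main.py` argument parsing may differ), the flags could be declared with Python's `argparse` and used to resolve which folder to load. Treating the three dataset variants as mutually exclusive is an assumption, as are the helper names `build_parser` and `data_folder`.

```python
import argparse
from typing import Optional


def build_parser() -> argparse.ArgumentParser:
    """Declare the optional flags documented above (hypothetical sketch)."""
    parser = argparse.ArgumentParser(description="Train MoEL (sketch)")
    parser.add_argument("--wandb_project", default=None,
                        help="wandb project to log the run's parameters to")
    # ASSUMPTION: only one dataset variant is selected per run.
    variant = parser.add_mutually_exclusive_group()
    variant.add_argument("--ed_16", action="store_true",
                         help="Empathetic Dialogues, 16 emotion groups")
    variant.add_argument("--pec_2", action="store_true", help="PEC, 2 emotions")
    variant.add_argument("--pec_32", action="store_true", help="PEC, 32 emotions")
    parser.add_argument("--use_persona", action="store_true",
                        help="condition generation on persona embeddings")
    return parser


def data_folder(args: argparse.Namespace) -> Optional[str]:
    """Return the folder whose numpy arrays should be loaded.

    None means no variant flag was given, so the default data location
    (not documented here) would be used.
    """
    for name in ("ed_16", "pec_2", "pec_32"):
        if getattr(args, name):
            return name
    return None
```

For example, `data_folder(build_parser().parse_args(["--pec_32", "--use_persona"]))` resolves to `"pec_32"`. In a full script, these arguments would be added to the same parser that handles the flags in the training command.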