This repository gathers the data and code for the paper *Can discrete information extraction prompts generalize across language models?* (ICLR 2023).

We share all the generated prompts in this repository. To evaluate the prompts on language models, or to generate your own, please refer to the corresponding fork:

- Evaluation: LAMA

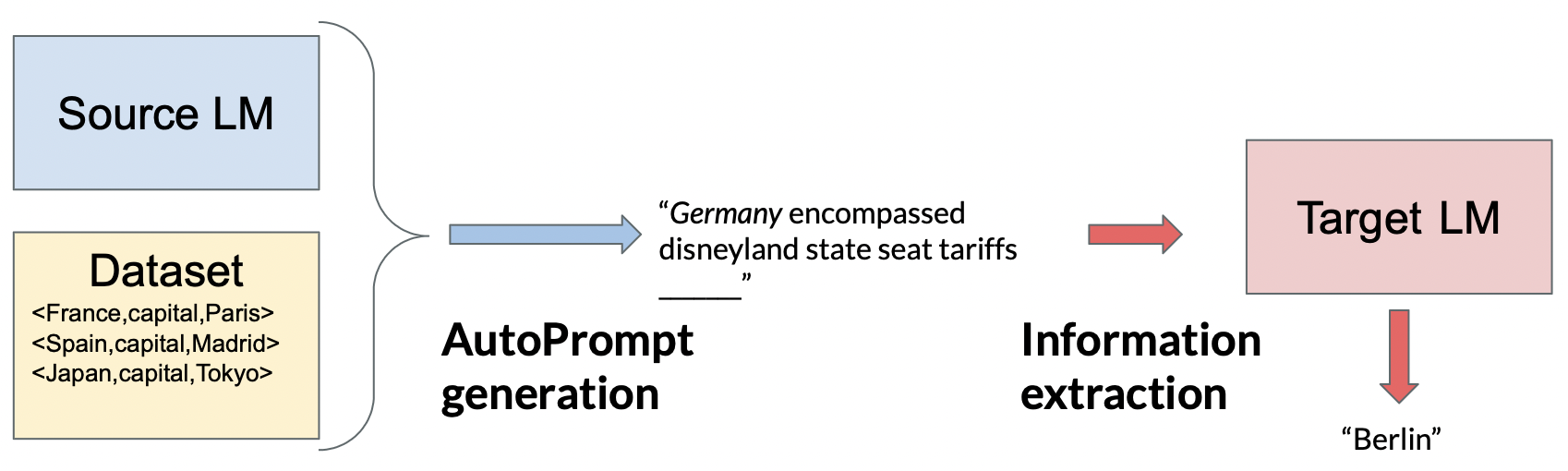

- Single-model training: AutoPrompt

- Mixed-training: Mixed-training AutoPrompt
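As a rough illustration of what evaluating a prompt involves, the sketch below assumes LAMA-style cloze templates, in which `[X]` marks the subject slot and `[Y]` the object slot that the model has to fill. It scores a template by precision@1, the fraction of facts for which the model's top-1 prediction matches the gold object. This is not code from the LAMA fork: `fill_template`, `precision_at_1` and the `predict_top1` callable (a stand-in for querying a masked language model) are hypothetical names chosen for illustration.

```python
from typing import Callable, Iterable

# A (subject, gold object) pair for one relation; hypothetical format.
Fact = tuple[str, str]


def fill_template(template: str, subject: str, mask_token: str) -> str:
    """Put the subject into the [X] slot and the mask token into the [Y] slot."""
    return template.replace("[X]", subject).replace("[Y]", mask_token)


def precision_at_1(
    template: str,
    facts: Iterable[Fact],
    predict_top1: Callable[[str], str],
    mask_token: str = "[MASK]",
) -> float:
    """Fraction of facts whose gold object equals the top-1 prediction.

    `predict_top1` is assumed to take a filled prompt and return the single
    most likely token for the masked slot (e.g. a wrapper around a masked LM).
    """
    facts = list(facts)
    if not facts:
        return 0.0
    hits = sum(
        predict_top1(fill_template(template, subject, mask_token)) == gold
        for subject, gold in facts
    )
    return hits / len(facts)


if __name__ == "__main__":
    # Toy "model": a lookup table instead of a real language model.
    toy_answers = {
        "Dante was born in [MASK] .": "Florence",
        "Mozart was born in [MASK] .": "Vienna",
    }
    facts = [("Dante", "Florence"), ("Mozart", "Salzburg")]
    score = precision_at_1(
        "[X] was born in [Y] .", facts, lambda p: toy_answers.get(p, "")
    )
    print(score)  # 0.5: one of the two predictions matches the gold object
```

Passing the predictor in as a callable keeps the scoring logic independent of any particular model or library, so the same function could be reused to compare one prompt across several language models.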

```bibtex
@inproceedings{rakotonirina2023,
  author    = {Nathana{\"{e}}l Carraz Rakotonirina and
               Roberto Dess{\`{\i}} and
               Fabio Petroni and
               Sebastian Riedel and
               Marco Baroni},
  title     = {Can discrete information extraction prompts generalize across language models?},
  booktitle = {The Eleventh International Conference on Learning Representations,
               {ICLR} 2023, Kigali, Rwanda, May 1-5, 2023},
  publisher = {OpenReview.net},
  year      = {2023},
  url       = {https://openreview.net/pdf?id=sbWVtxq8-zE},
}
```