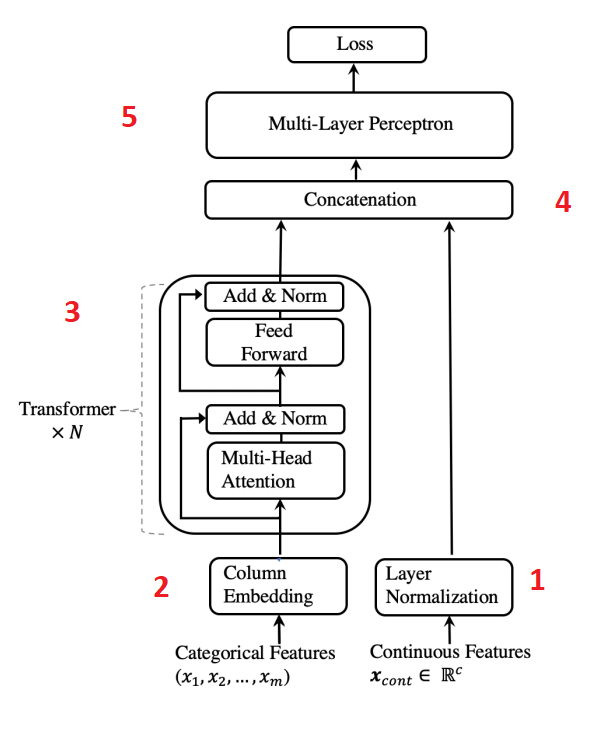

Paper: TabTransformer-2020

- Numerical features are normalised and passed forward

- Categorical features are embedded

- Embeddings are passed through a stack of N Transformer blocks to get contextual embeddings

- Contextual categorical embeddings are concatenated with numerical features

- The concatenated vector is passed through an MLP to produce the prediction
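The steps above can be sketched in PyTorch roughly as follows. This is a minimal illustration, not the paper's reference implementation: the class name `TabTransformerSketch`, the layer sizes, and the use of `nn.TransformerEncoder` as the stack of blocks are all assumptions made for the example.

```python
import torch
import torch.nn as nn


class TabTransformerSketch(nn.Module):
    """Illustrative TabTransformer-style model (hypothetical names and sizes)."""

    def __init__(self, cardinalities, n_numerical, d_model=32, n_heads=4,
                 n_blocks=2, n_outputs=1):
        super().__init__()
        # One embedding table per categorical column.
        self.embeddings = nn.ModuleList(
            [nn.Embedding(c, d_model) for c in cardinalities]
        )
        block = nn.TransformerEncoderLayer(
            d_model=d_model, nhead=n_heads, dim_feedforward=4 * d_model,
            batch_first=True,
        )
        # N stacked Transformer blocks turn embeddings into contextual embeddings.
        self.transformer = nn.TransformerEncoder(block, num_layers=n_blocks)
        # Numerical features are only normalised, not embedded.
        self.num_norm = nn.LayerNorm(n_numerical)
        mlp_in = len(cardinalities) * d_model + n_numerical
        self.mlp = nn.Sequential(
            nn.Linear(mlp_in, 64), nn.ReLU(), nn.Linear(64, n_outputs)
        )

    def forward(self, x_cat, x_num):
        # x_cat: (batch, n_categorical) integer codes
        # x_num: (batch, n_numerical) floats
        tokens = torch.stack(
            [emb(x_cat[:, i]) for i, emb in enumerate(self.embeddings)], dim=1
        )  # (batch, n_categorical, d_model)
        contextual = self.transformer(tokens)
        flat = contextual.flatten(start_dim=1)
        # Concatenate contextual categorical embeddings with numerical features.
        combined = torch.cat([flat, self.num_norm(x_num)], dim=1)
        return self.mlp(combined)


cardinalities = [5, 3, 10]  # made-up category counts per column
model = TabTransformerSketch(cardinalities=cardinalities, n_numerical=4)
x_cat = torch.stack([torch.randint(0, c, (8,)) for c in cardinalities], dim=1)
x_num = torch.randn(8, 4)
logits = model(x_cat, x_num)  # one output per row
```

Note that only the categorical tokens go through the Transformer here; the numerical features bypass it and join at the MLP input.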

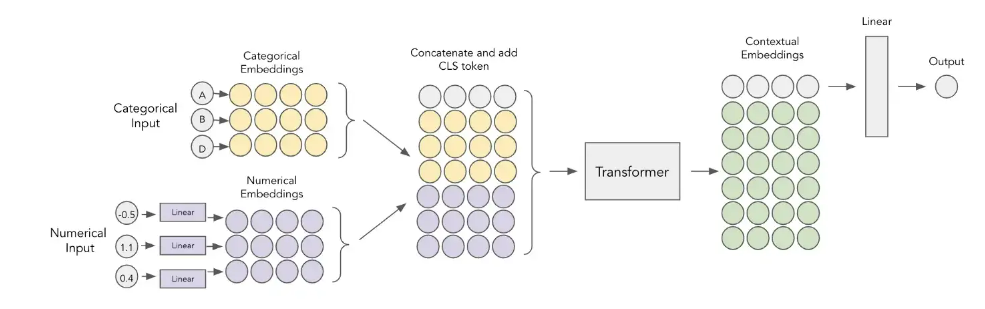

Paper: FT-Transformer-2021

The difference from the TabTransformer model is an added embedding layer for continuous (numerical) features, so they are tokenised alongside the categorical ones.
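A minimal sketch of such a linear embedding for continuous features is shown below, assuming each feature gets its own learned weight and bias vector. The class name `LinearNumericalEmbedding` and the initialisation scale are assumptions for illustration, not taken from the paper's code.

```python
import torch
import torch.nn as nn


class LinearNumericalEmbedding(nn.Module):
    """Maps each scalar feature x_j to the vector x_j * W_j + b_j (hypothetical sketch)."""

    def __init__(self, n_features, d_model):
        super().__init__()
        # One weight vector and one bias vector per numerical feature.
        self.weight = nn.Parameter(torch.randn(n_features, d_model) * 0.02)
        self.bias = nn.Parameter(torch.zeros(n_features, d_model))

    def forward(self, x_num):
        # (batch, n_features, 1) * (n_features, d_model)
        # broadcasts to (batch, n_features, d_model)
        return x_num.unsqueeze(-1) * self.weight + self.bias


embed = LinearNumericalEmbedding(n_features=4, d_model=32)
tokens = embed(torch.randn(8, 4))  # one d_model-sized token per feature
```

The resulting tokens have the same shape as categorical embeddings, so both kinds can be stacked and fed to the same Transformer blocks.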

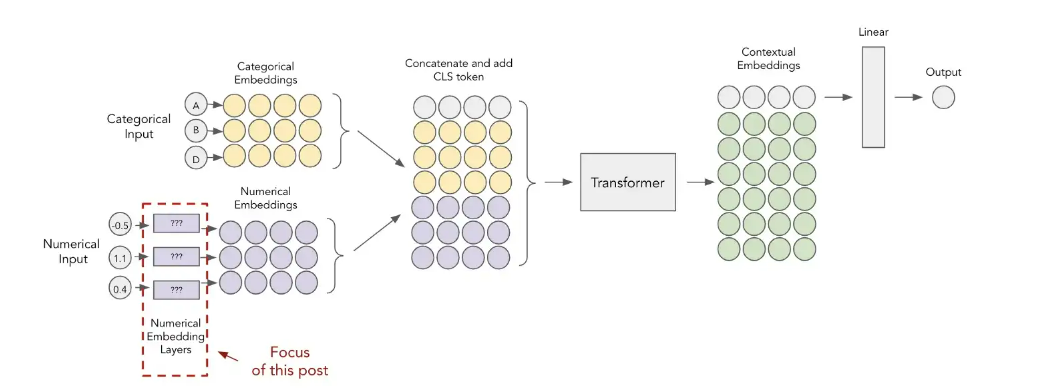

Paper: On Embeddings for Numerical Features in Tabular Deep Learning-2022

- Periodic Embeddings (concatenating sin(v) and cos(v))

- Piecewise Linear Encoding (Quantile Binning)

- Target Binning Approach
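The first two encodings can be illustrated with a small NumPy sketch. Several details are assumptions: the frequencies are fixed random numbers here (in the paper they are trainable), `v` is taken as `2 * pi * frequency * x`, and the piecewise linear encoding clips every bin to [0, 1], which simplifies how values outside the outermost bin edges are handled. All function names are hypothetical.

```python
import numpy as np


def periodic_embedding(x, frequencies):
    """Return concat[sin(v), cos(v)] with v = 2 * pi * frequency * x."""
    v = 2.0 * np.pi * x[:, None] * frequencies[None, :]
    return np.concatenate([np.sin(v), np.cos(v)], axis=1)


def quantile_bin_edges(x, n_bins):
    """Bin edges placed at evenly spaced quantiles of x."""
    edges = np.quantile(x, np.linspace(0.0, 1.0, n_bins + 1))
    return np.unique(edges)  # drop duplicate edges caused by ties


def piecewise_linear_encoding(x, edges):
    """Per bin: 0 below the bin, 1 above it, linear fraction inside it."""
    left, right = edges[:-1], edges[1:]
    ratio = (x[:, None] - left[None, :]) / (right - left)[None, :]
    return np.clip(ratio, 0.0, 1.0)


rng = np.random.default_rng(0)
x = rng.normal(size=1000)               # one made-up numerical feature
frequencies = rng.normal(size=8)        # assumed fixed for this sketch
periodic = periodic_embedding(x, frequencies)   # shape (1000, 16)
edges = quantile_bin_edges(x, n_bins=4)
ple = piecewise_linear_encoding(x, edges)       # shape (1000, 4)
```

With edges `[0, 1, 2, 3]`, a value of 2.5 encodes as `[1, 1, 0.5]`: the first two bins are fully passed and the third is half filled.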

Target binning uses a decision tree to construct the bins. The quantile approach splits a feature into bins that hold roughly equal numbers of samples, which can be suboptimal in some cases. A decision tree fitted on the feature instead places the splits where they best separate the target. For example, if the target varies more at larger values of the feature, most of the bins would end up on the right.
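One way to obtain such bins is to fit a shallow scikit-learn decision tree on a single feature and read the split thresholds back as bin edges. The sketch below assumes a binary target; the function name `target_bin_edges`, the `min_samples_leaf` value, and the synthetic data are all made up for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def target_bin_edges(x, y, max_bins):
    """Bin edges for one feature, taken from the splits of a small decision tree."""
    tree = DecisionTreeClassifier(
        max_leaf_nodes=max_bins, min_samples_leaf=20, random_state=0
    )
    tree.fit(x.reshape(-1, 1), y)
    # Internal nodes have a feature index >= 0; leaves are marked with -2.
    thresholds = tree.tree_.threshold[tree.tree_.feature >= 0]
    return np.concatenate([[x.min()], np.sort(thresholds), [x.max()]])


rng = np.random.default_rng(0)
x = rng.uniform(0.0, 10.0, size=2000)
# Synthetic target: flat positive rate below 7, rising quickly above it.
p = np.where(x < 7.0, 0.1, 0.1 + 0.8 * (x - 7.0) / 3.0)
y = (rng.random(2000) < p).astype(int)

max_bins = 6
edges = target_bin_edges(x, y, max_bins=max_bins)
```

The returned edges can then be used in place of quantile edges when building a piecewise linear encoding.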

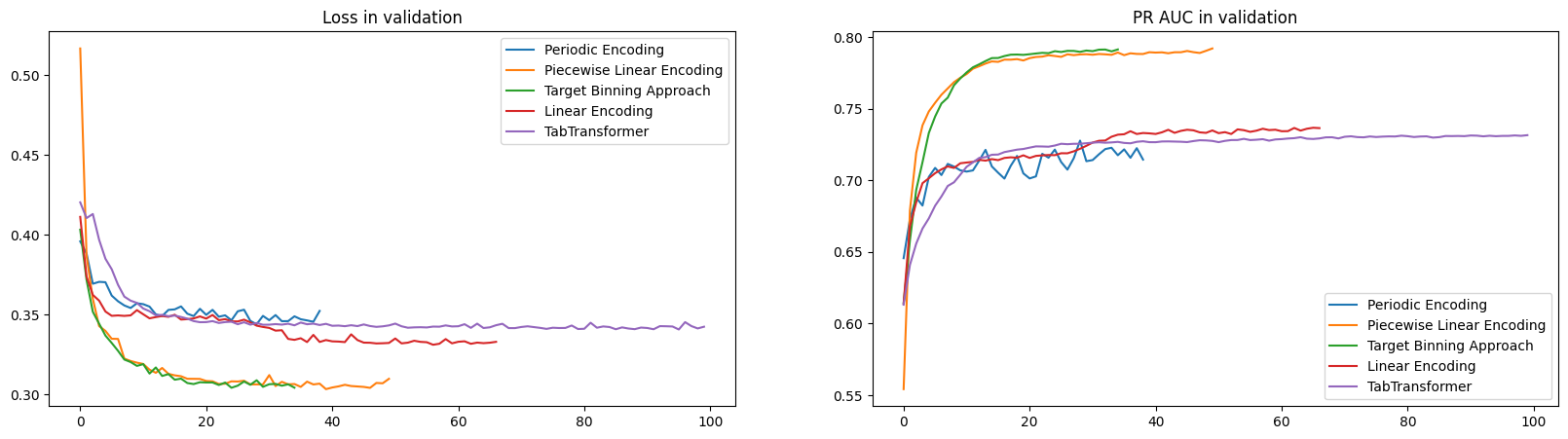

Model evaluation on the test dataset

| Model | Explainable | ROC AUC | PR AUC | Accuracy | F1-score | F1-Positive |
|---|---|---|---|---|---|---|
| TabTransformer (No numerical Embedding) | No | 0.8962 | 0.7361 | 0.8479 | 0.7653 | 0.63 |
| Linear numerical Embedding | Yes | 0.9001 | 0.7393 | 0.8485 | 0.7796 | 0.66 |
| Periodic numerical Embedding | Yes | 0.8925 | 0.7275 | 0.8443 | 0.765 | 0.77 |
| Piecewise Linear Encoding (Quantile Binning) | Yes | 0.9117 | 0.7879 | 0.86 | 0.7942 | 0.79 |
| Target Binning Approach | Yes | 0.9122 | 0.7864 | 0.8592 | 0.7905 | 0.79 |
| CatBoost (Tuned) | Yes | 0.81 | 0.71 | | | |
| ExtraTrees (Tuned) | Yes | 0.77 | 0.65 | | | |
| Bagging (Tuned) | Yes | 0.78 | 0.66 | | | |
| AdaBoost (Tuned) | Yes | 0.79 | 0.67 | | | |
| Gradient (Tuned) | Yes | 0.81 | 0.71 | | | |
| Linear Discriminant Analysis (Bayes’ rule) | No | 0.72 | 0.54 | | | |
| Quadratic Discriminant Analysis (Bayes’ rule) | No | 0.69 | 0.50 | | | |