Honglu Zhou*, Asim Kadav, Farley Lai, Alexandru Niculescu-Mizil, Martin Renqiang Min, Mubbasir Kapadia, Hans Peter Graf

(*Contact: honglu.zhou@rutgers.edu)

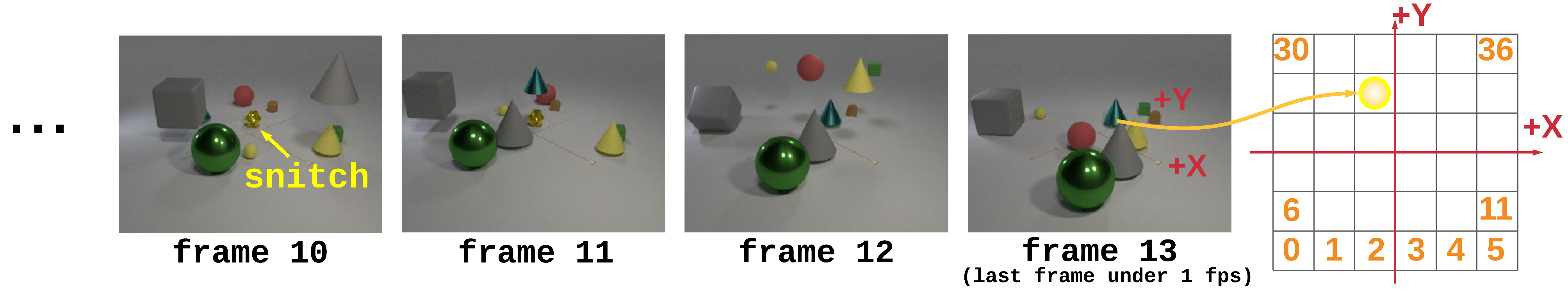

CATER-h is a dataset proposed for the video reasoning task, specifically the problem of object permanence, investigated in "Hopper: Multi-hop Transformer for Spatiotemporal Reasoning" (accepted to ICLR 2021). Please refer to the full paper for detailed analysis and evaluations.

This repository provides the CATER-h dataset used in the paper "Hopper: Multi-hop Transformer for Spatiotemporal Reasoning", as well as instructions/code to create the CATER-h dataset.

If you find the dataset or the code helpful, please cite:

Honglu Zhou, Asim Kadav, Farley Lai, Alexandru Niculescu-Mizil, Martin Renqiang Min, Mubbasir Kapadia, Hans Peter Graf. Hopper: Multi-hop Transformer for Spatiotemporal Reasoning. In International Conference on Learning Representations (ICLR), 2021.

    @inproceedings{zhou2021caterh,
      title = {{Hopper: Multi-hop Transformer for Spatiotemporal Reasoning}},
      author = {Zhou, Honglu and Kadav, Asim and Lai, Farley and Niculescu-Mizil, Alexandru and Min, Martin Renqiang and Kapadia, Mubbasir and Graf, Hans Peter},
      booktitle = {ICLR},
      year = 2021
    }

A pre-generated sample of the dataset used in the paper is provided here. If you'd like to generate your own version of the dataset, please follow the instructions below.

- All CLEVR requirements (e.g., Blender: the code was used with v2.79b).

- This code was used on Linux machines.

- GPU: This code was tested with multiple types of GPUs and should be compatible with most GPUs. By default it will use all the GPUs on the machine.

- All DETR requirements. You can check the site-packages of the conda environment we used (Python 3.7.6).

(We modify code provided by CATER.)

- `cd generate/`

- `echo $PWD >> blender-2.79b-linux-glibc219-x86_64/2.79/python/lib/python3.5/site-packages/clevr.pth` (You can download our blender-2.79b-linux-glibc219-x86_64.)

- Run `time python launch.py` to start generating. Please read through the script to change any settings, paths, etc. The command-line options should also be easy to follow from the script (e.g., `--num_images` specifies the number of videos to generate).

- Run `time python gen_train_test.py` to generate labels for the dataset for each of the tasks. Change the parameters at the top of the file before running it.
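The `echo $PWD >> ...clevr.pth` step relies on a standard Python mechanism: every directory listed in a `.pth` file inside `site-packages` is added to `sys.path` at startup, which lets Blender's bundled Python import the generation code. The following is a minimal sketch of the same step in Python. The helper name `register_path` is hypothetical and not part of this repository; unlike the `echo` command, it skips the write when the directory is already listed.

```python
import os
from pathlib import Path


def register_path(pth_file, directory=None):
    """Append `directory` (default: current working directory) to a .pth file.

    Hypothetical helper, roughly equivalent to `echo $PWD >> <pth_file>`,
    but idempotent: an already-listed directory is not added twice.
    """
    directory = os.path.abspath(directory or os.getcwd())
    pth_file = Path(pth_file)
    text = pth_file.read_text() if pth_file.exists() else ""
    if directory in text.splitlines():
        return directory
    with pth_file.open("a") as f:
        # Make sure the new entry starts on its own line.
        if text and not text.endswith("\n"):
            f.write("\n")
        f.write(directory + "\n")
    return directory
```

Run from inside `generate/`, a call such as `register_path("blender-2.79b-linux-glibc219-x86_64/2.79/python/lib/python3.5/site-packages/clevr.pth")` would mirror the shell command above, assuming Blender is unpacked at that relative path.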

You can find our extracted frame and object features here. The CNN backbone we utilized to obtain the frame features is a pre-trained ResNeXt-101 model. We use DETR trained on the LA-CATER dataset to obtain object features.

- `cd extract/`

- Download our pretrained object detector from here. Create a folder `checkpoints`. Put the pretrained object detector into the folder `checkpoints`.

- Change paths etc. in `extract/configs/CATER-h.yml`

- `time ./run.sh`

This will generate an output folder with pickle files that save the frame index of the last visible snitch and the detector's confidence.
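To inspect what `run.sh` produced, the pickle files can be loaded with Python's standard `pickle` module. The sketch below is only illustrative: the `.pkl` extension, the helper name `load_pickles`, and the idea of keying results by file name are assumptions, and the structure of each loaded object is not documented here, so print it before relying on any field.

```python
import pickle
from pathlib import Path


def load_pickles(output_dir, pattern="*.pkl"):
    """Load every pickle file matching `pattern` in `output_dir`.

    Returns a dict mapping file stem -> unpickled object. The file
    extension is an assumption; adjust `pattern` to match the output.
    """
    results = {}
    for path in sorted(Path(output_dir).glob(pattern)):
        with path.open("rb") as f:
            results[path.stem] = pickle.load(f)
    return results
```

Only unpickle files you generated yourself, since `pickle.load` can execute arbitrary code from an untrusted file.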

- Run

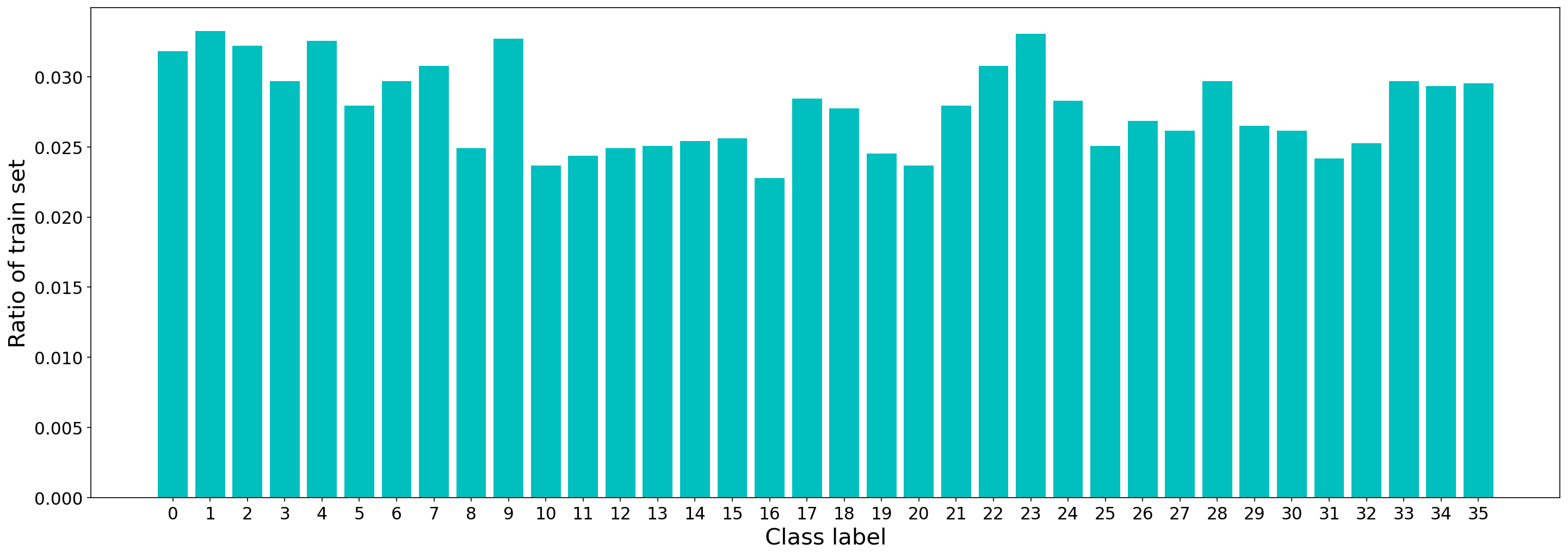

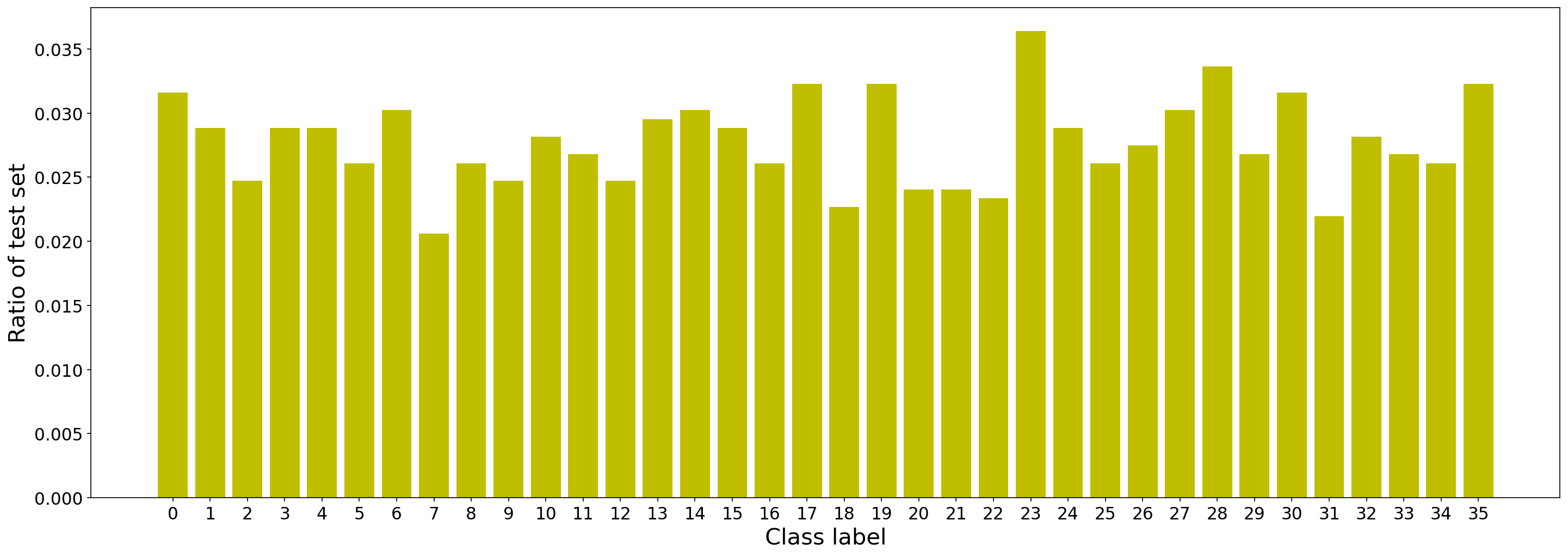

resample.ipynbwhich will resample the data to have balanced train/val set in terms of the class label and the frame index of the last visible snitch.
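As a rough illustration of what balancing on both the class label and the last-visible-snitch frame index can mean, the sketch below groups samples by label and a coarse frame bin, then down-samples every group to the size of the smallest one. This is not the procedure in `resample.ipynb`; the tuple layout, the `bin_width` parameter, and the function name `balanced_subsample` are all assumptions made for the example.

```python
import random
from collections import defaultdict


def balanced_subsample(samples, bin_width=50, seed=0):
    """Down-sample so every (label, frame-bin) group has the same size.

    `samples` is a list of (video_id, class_label, last_visible_frame)
    tuples; this layout and `bin_width` are illustrative assumptions.
    """
    groups = defaultdict(list)
    for sample in samples:
        _, label, frame = sample
        groups[(label, frame // bin_width)].append(sample)
    if not groups:
        return []
    # Every group is reduced to the size of the smallest group.
    target = min(len(group) for group in groups.values())
    rng = random.Random(seed)
    balanced = []
    for key in sorted(groups):
        balanced.extend(rng.sample(groups[key], target))
    return balanced
```

Down-sampling to the smallest group discards data, so a real pipeline may prefer a softer criterion, for example capping only the largest groups.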

The code in this repository is heavily based on the following publicly available implementations: