A PyTorch implementation of Single Shot MultiBox Detector from the 2016 paper by Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, and Alexander C. Berg. The official and original Caffe code is in weiliu89's Caffe repository (`ssd` branch).

- Note: While I would love it if this were my full-time job, this is currently only a hobby of mine, so I cannot guarantee that I will be able to dedicate all my time to updating this repo. That being said, thank you all for your help and feedback; it is really appreciated, and I will try to address everything as soon as I can.

- Install PyTorch by selecting your environment on the PyTorch website and running the appropriate command.

- Clone this repository.

- Note: We currently only support Python 3+.

- Then download the dataset by following the instructions below.

- We now support Visdom for real-time loss visualization during training!

- To use Visdom in the browser:

```Shell
# First install Python server and client
pip install visdom
# Start the server (probably in a screen or tmux)
python -m visdom.server
```

- Then (during training) navigate to http://localhost:8097/ (see the Train section below for training details).

- Note: For training, we currently only support VOC, but are adding COCO and hopefully ImageNet soon.

- UPDATE: We have switched from PIL Image support to cv2. The plan is to create a branch that uses PIL as well.

To make things easy, we provide a simple VOC dataset loader that inherits torch.utils.data.Dataset making it fully compatible with the torchvision.datasets API.
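Much of a VOC loader's work is turning each XML annotation into box targets. The sketch below assumes a target format of `[xmin, ymin, xmax, ymax, label]` rows with coordinates normalized to [0, 1]; `parse_voc_annotation`, `SAMPLE_XML`, the default of skipping objects marked `difficult`, and the 1-based-to-0-based shift are illustrative assumptions, not this repo's actual loader code.

```python
import xml.etree.ElementTree as ET

# The 20 PASCAL VOC classes, in the same order as the per-class AP listing in this README.
VOC_CLASSES = (
    "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat",
    "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
)
CLASS_TO_IDX = {name: idx for idx, name in enumerate(VOC_CLASSES)}


def parse_voc_annotation(xml_text, keep_difficult=False):
    """Hypothetical helper: convert one VOC XML annotation into
    [xmin, ymin, xmax, ymax, label_idx] rows, with coordinates scaled to [0, 1]."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    width = float(size.find("width").text)
    height = float(size.find("height").text)
    rows = []
    for obj in root.iter("object"):
        difficult = obj.find("difficult")
        is_difficult = difficult is not None and int(difficult.text) == 1
        if is_difficult and not keep_difficult:
            continue  # assumption: objects flagged "difficult" are skipped by default
        name = obj.find("name").text.strip().lower()
        bbox = obj.find("bndbox")
        # VOC pixel coordinates start at 1: shift to 0-based, then normalize by image size.
        xmin, xmax = ((float(bbox.find(k).text) - 1) / width for k in ("xmin", "xmax"))
        ymin, ymax = ((float(bbox.find(k).text) - 1) / height for k in ("ymin", "ymax"))
        rows.append([xmin, ymin, xmax, ymax, CLASS_TO_IDX[name]])
    return rows


# A tiny hand-written annotation (not from the dataset) to exercise the parser.
SAMPLE_XML = """<annotation>
  <size><width>500</width><height>400</height><depth>3</depth></size>
  <object><name>dog</name><difficult>0</difficult>
    <bndbox><xmin>51</xmin><ymin>41</ymin><xmax>301</xmax><ymax>201</ymax></bndbox></object>
  <object><name>person</name><difficult>1</difficult>
    <bndbox><xmin>1</xmin><ymin>1</ymin><xmax>11</xmax><ymax>11</ymax></bndbox></object>
</annotation>"""

print(parse_voc_annotation(SAMPLE_XML))  # [[0.1, 0.1, 0.6, 0.5, 11]]
```

Inside a `torch.utils.data.Dataset` subclass, a function like this would typically be called from `__getitem__` alongside image loading, so the returned rows line up with whatever augmentation step runs next.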

```Shell
# specify a directory for dataset to be downloaded into, else default is ~/data/
sh data/scripts/VOC2007.sh # <directory>

# specify a directory for dataset to be downloaded into, else default is ~/data/
sh data/scripts/VOC2012.sh # <directory>
```

- First download the fc-reduced VGG-16 PyTorch base network weights at: https://s3.amazonaws.com/amdegroot-models/vgg16_reducedfc.pth

- By default, we assume you have downloaded the file in the `ssd.pytorch/weights` dir:

```Shell
mkdir weights
cd weights
wget https://s3.amazonaws.com/amdegroot-models/vgg16_reducedfc.pth
```

- To train SSD using the train script, simply specify the parameters listed in `train.py` as flags or manually change them.

```Shell
python train.py
```

- Note:
  - For training, an NVIDIA GPU is strongly recommended for speed.
  - Currently we only support training on v2 (the newest version).
  - For instructions on Visdom usage/installation, see the Installation section.
  - You can pick up training from a checkpoint by specifying the path as one of the training parameters (again, see `train.py` for options).
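The "set it as a flag or change it in the file" pattern is the usual `argparse` setup: defaults live in the script, and command-line flags override them for one run. A minimal sketch, assuming `argparse`; every flag name and default below (`--batch_size`, `--lr`, `--resume`, `--visdom`, `--save_folder`) and the helper `describe_run` are hypothetical, so check `train.py` for the real options.

```python
import argparse


def str2bool(value):
    # argparse's type=bool would treat any non-empty string (even "False") as True.
    return str(value).lower() in ("yes", "true", "t", "1")


def build_parser():
    # Hypothetical flag names and defaults, for illustration only; see train.py for the real ones.
    parser = argparse.ArgumentParser(description="SSD training (illustrative sketch)")
    # Editing a default below is the "manually change them" route;
    # passing the flag on the command line overrides it for a single run.
    parser.add_argument("--batch_size", type=int, default=32, help="images per batch")
    parser.add_argument("--lr", type=float, default=1e-3, help="initial learning rate")
    parser.add_argument("--resume", default=None, help="checkpoint (state_dict) path to resume from")
    parser.add_argument("--visdom", type=str2bool, default=False, help="plot losses in Visdom")
    parser.add_argument("--save_folder", default="weights/", help="directory for saved checkpoints")
    return parser


def describe_run(argv=None):
    """Parse argv (sys.argv[1:] when None) and summarize what a training run would do."""
    args = build_parser().parse_args(argv)
    start = f"resume from {args.resume}" if args.resume else "start from the VGG-16 base weights"
    return args, f"batch_size={args.batch_size}, lr={args.lr}: {start}"
```

For example, `describe_run(["--batch_size", "16", "--resume", "weights/ckpt.pth"])` reports a resumed run with batch size 16 (the checkpoint path is a placeholder). In a real script, calling `describe_run()` with no arguments makes it read the actual command line.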

To evaluate a trained network:

```Shell
python eval.py
```

You can specify the parameters listed in the `eval.py` file by flagging them or manually changing them.

mAP:

| Original | Converted weiliu89 weights | From scratch w/o data aug | From scratch w/ data aug |
|:-:|:-:|:-:|:-:|
| 77.2% | 77.26% | 58.12% | 77.43% |

Per-class AP for the model trained from scratch with data augmentation (its mean AP of 0.7743 matches the 77.43% in the table above):

```
VOC07 metric? Yes
AP for aeroplane = 0.8172
AP for bicycle = 0.8544
AP for bird = 0.7571
AP for boat = 0.6958
AP for bottle = 0.4990
AP for bus = 0.8488
AP for car = 0.8577
AP for cat = 0.8737
AP for chair = 0.6147
AP for cow = 0.8233
AP for diningtable = 0.7917
AP for dog = 0.8559
AP for horse = 0.8709
AP for motorbike = 0.8474
AP for person = 0.7889
AP for pottedplant = 0.4996
AP for sheep = 0.7742
AP for sofa = 0.7913
AP for train = 0.8616
AP for tvmonitor = 0.7631
Mean AP = 0.7743
```
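"VOC07 metric? Yes" means each class's AP uses the PASCAL VOC2007 11-point interpolation, and the mean AP is the plain average over the 20 classes. The sketch below illustrates both steps; the helper names are hypothetical, and it assumes detections have already been matched to ground truth (IoU above 0.5, each ground-truth box claimed at most once), which is the harder part of a real evaluator.

```python
def precision_recall(tp_flags, num_gt):
    """Cumulative precision/recall for one class.

    tp_flags: detections already sorted by descending confidence, 1 if the
    detection matched an unclaimed ground-truth box, else 0.
    num_gt: number of (non-difficult) ground-truth boxes for the class.
    """
    tp = fp = 0
    recall, precision = [], []
    for flag in tp_flags:
        if flag:
            tp += 1
        else:
            fp += 1
        recall.append(tp / num_gt)
        precision.append(tp / (tp + fp))
    return recall, precision


def voc07_ap(recall, precision):
    """11-point interpolated AP: average, over recall thresholds 0.0, 0.1, ..., 1.0,
    of the highest precision reached at a recall >= that threshold (0 if none)."""
    ap = 0.0
    for i in range(11):
        threshold = i / 10
        p_max = max((p for r, p in zip(recall, precision) if r >= threshold), default=0.0)
        ap += p_max / 11
    return ap


# Per-class APs copied from the evaluation output above.
PER_CLASS_AP = {
    "aeroplane": 0.8172, "bicycle": 0.8544, "bird": 0.7571, "boat": 0.6958,
    "bottle": 0.4990, "bus": 0.8488, "car": 0.8577, "cat": 0.8737,
    "chair": 0.6147, "cow": 0.8233, "diningtable": 0.7917, "dog": 0.8559,
    "horse": 0.8709, "motorbike": 0.8474, "person": 0.7889, "pottedplant": 0.4996,
    "sheep": 0.7742, "sofa": 0.7913, "train": 0.8616, "tvmonitor": 0.7631,
}

mean_ap = sum(PER_CLASS_AP.values()) / len(PER_CLASS_AP)
print(f"Mean AP = {mean_ap:.4f}")  # prints "Mean AP = 0.7743"
```

As a small worked case, three detections flagged `[1, 0, 1]` against 2 ground-truth boxes give recall `[0.5, 0.5, 1.0]` and precision `[1.0, 0.5, 0.667]`; the six thresholds up to 0.5 each contribute 1.0 and the five above contribute 2/3, so the AP is 28/33, about 0.8485.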

Inference speed on a GTX 1060: ~45.45 FPS
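For reference, ~45.45 FPS corresponds to roughly 1000 / 45.45 ≈ 22 ms per frame. A generic way to measure such a figure is sketched below; `measure_fps` and its warm-up count are illustrative assumptions, not the script behind the number above.

```python
import time


def measure_fps(run_once, num_frames=100, warmup=10):
    """Average frames per second of `run_once`, a zero-argument callable that processes one frame.

    Warm-up calls are excluded because the first iterations often include one-off costs.
    On a GPU, run_once should block until the result is ready (e.g. by calling
    torch.cuda.synchronize()); otherwise asynchronous kernel launches inflate the number.
    """
    for _ in range(warmup):
        run_once()
    start = time.perf_counter()
    for _ in range(num_frames):
        run_once()
    elapsed = time.perf_counter() - start
    return num_frames / elapsed


# Example with a stand-in workload that takes about 10 ms per "frame" (so at most ~100 FPS).
print(f"{measure_fps(lambda: time.sleep(0.01), num_frames=20, warmup=2):.1f} FPS")
```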

- We are trying to provide PyTorch `state_dicts` (dicts of weight tensors) of the latest SSD model definitions trained on different datasets.
- Currently, we provide the following PyTorch models:
  - SSD300 v2 trained on VOC0712 (newest PyTorch version)
  - SSD300 v2 trained on VOC0712 (original Caffe version)
  - SSD300 v1 (original/old pool6 version) trained on VOC07

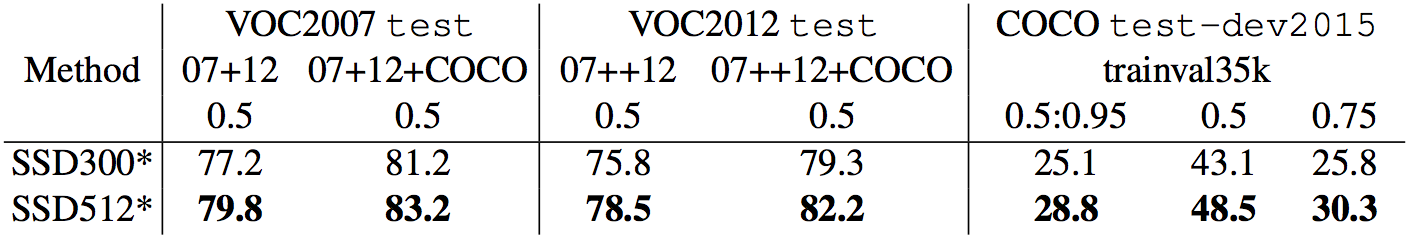

- Our goal is to reproduce this table from the original paper

- Make sure you have jupyter notebook installed.
- To install jupyter notebook with pip:

```Shell
# make sure pip is upgraded
pip3 install --upgrade pip
# install jupyter notebook
pip install jupyter
# Run this inside ssd.pytorch
jupyter notebook
```

- Now navigate to `demo/demo.ipynb` at http://localhost:8888 (by default) and have at it!

- Works on CPU (you may have to tweak `cv2.waitKey` for optimal FPS) or on an NVIDIA GPU.
- This demo currently requires OpenCV 2+ with Python bindings and an onboard webcam.
  - You can change the default webcam in `demo/live.py`.


- Install the imutils package to leverage multi-threading on CPU:

```Shell
pip install imutils
```

- Running `python -m demo.live` opens the webcam and begins detecting!
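Multi-threading helps on CPU because camera reads can run on a background thread while the main loop runs detection, so the detector never waits on I/O and always gets the newest frame. A stdlib-only sketch of that idea follows; `LatestFrameReader` is a hypothetical name, not imutils' API, and `read_frame` is an assumed zero-argument callable (with OpenCV it could wrap `cv2.VideoCapture(0).read()`, returning the frame on success and `None` otherwise).

```python
import queue
import threading


class LatestFrameReader:
    """Hypothetical helper: read frames on a background thread, keeping only the newest.

    read_frame: zero-argument callable returning a frame, or None when the source is exhausted.
    """

    def __init__(self, read_frame):
        self._read_frame = read_frame
        self._frames = queue.Queue(maxsize=1)  # holds at most the single newest frame
        self._stopped = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()
        return self

    def _run(self):
        while not self._stopped.is_set():
            frame = self._read_frame()
            if frame is None:  # source exhausted (or camera read failed)
                self._stopped.set()
                break
            # Drop the stale frame, if any, so the consumer always sees the newest one.
            try:
                self._frames.get_nowait()
            except queue.Empty:
                pass
            self._frames.put(frame)

    def read(self, timeout=1.0):
        """Return the newest frame, or None if none arrives within `timeout` seconds."""
        try:
            return self._frames.get(timeout=timeout)
        except queue.Empty:
            return None

    def stop(self):
        self._stopped.set()
        self._thread.join()


# Stand-in source producing frames 0..4, then None.
frames = iter(range(5))
reader = LatestFrameReader(lambda: next(frames, None)).start()
```

The single-slot queue is the design choice that matters here: older frames are discarded rather than buffered, so detection latency stays bounded even when the detector is slower than the camera.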

We have accumulated the following to-do list, which you can expect to be done in the very near future:

- Still to come:

- Train SSD300 with batch norm

- Add support for SSD512 training and testing

- Add support for COCO dataset

- Create a functional model definition for Sergey Zagoruyko's functional-zoo

- Wei Liu, et al. "SSD: Single Shot MultiBox Detector." ECCV 2016.

- Original Implementation (Caffe)

- A huge thank you to Alex Koltun and his team at Webyclip for their help in finishing the data augmentation portion.

- A list of other great SSD ports that were sources of inspiration (especially the Chainer repo):