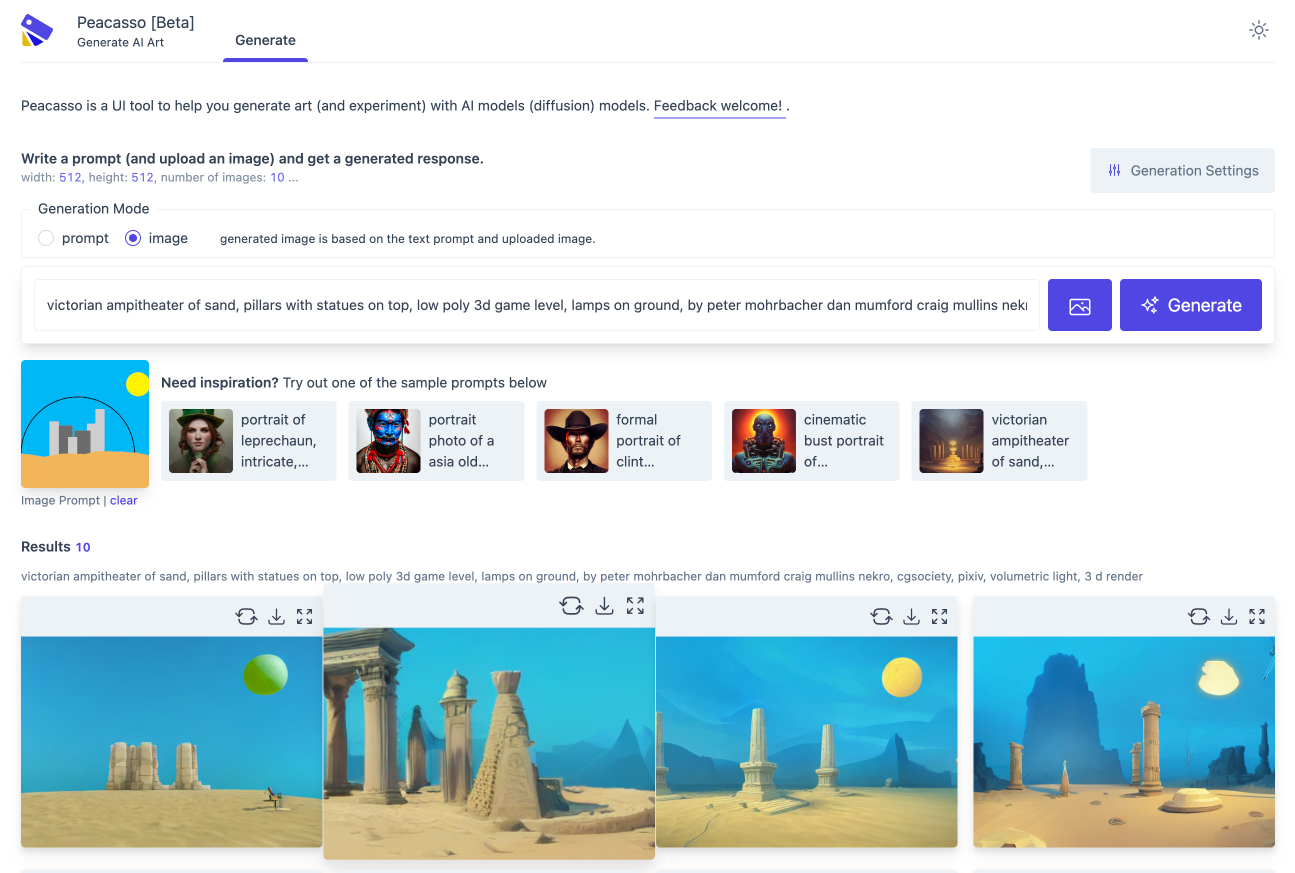

Peacasso neonsecret's edition [Beta] is a UI tool to help you generate art (and experiment) with multimodal (text, image) AI models (Stable Diffusion). This project is still in development (see roadmap below).

Because you deserve a nice UI and great workflow that makes exploring Stable Diffusion models fun, while using insanely high-resolution images! But seriously, here are a few things that make Peacasso interesting:

- Easy installation. Instead of cobbling together command line scripts, Peacasso provides a `pip install` flow and a UI that supports a set of curated default operations.

- UI with good defaults. The current implementation of Peacasso provides a UI for basic operations: text and image based prompting, remixing generated images as prompts, model parameter selection. Also covers the little things, like light and dark mode.

- Python API. While the UI is the focus here, there is an underlying Python API which will bake in experimentation features (e.g. saving intermediate images in the sampling loop, exploring model explanations, etc.; see roadmap below).

Clearly, Peacasso (UI) might not be for those interested in low level code.

- Step 1: Access to Weights via HuggingFace

  Log in to your account here and, after that, download this. Rename it to `model.ckpt`.

- Step 2: Verify Environment - Python 3.7+ and CUDA

  Set up and verify that your Python environment is `python 3.7` or higher (preferably, use Conda). Also verify that you have CUDA installed correctly (`torch.cuda.is_available()` is true) and that your GPU has at least 2GB of VRAM.

- Step 3: Run this command:

      pip install -e git+https://github.com/CompVis/taming-transformers.git@master#egg=taming-transformers

Once requirements are met, run the following command to install the library:

    pip install git+https://github.com/neonsecret/neonpeacasso.git

Don't have a GPU? You can still use the Python API and UI in a Colab notebook. See this Colab notebook for more details.
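The environment checks from Step 2 can also be run as a short script. This is a minimal sketch and not part of neonpeacasso: `check_environment` is a hypothetical helper name, the Python threshold comes from Step 2, and the CUDA checks are simply skipped when PyTorch is not installed.

```python
import sys

MIN_PYTHON = (3, 7)  # Step 2: Python 3.7 or higher


def check_environment():
    """Return a dict summarising the Step 2 checks (hypothetical helper)."""
    report = {
        "python_ok": sys.version_info >= MIN_PYTHON,
        "cuda_ok": False,
        "vram_gb": 0.0,
    }
    try:
        import torch  # optional here; without it the CUDA fields keep their defaults
    except ImportError:
        return report

    if torch.cuda.is_available():
        report["cuda_ok"] = True
        # total_memory is reported in bytes; device 0 is the first visible GPU
        total_bytes = torch.cuda.get_device_properties(0).total_memory
        report["vram_gb"] = total_bytes / 1024 ** 3
    return report


if __name__ == "__main__":
    for name, value in check_environment().items():
        print(f"{name}: {value}")
```

Compare the reported `vram_gb` against the 2GB minimum yourself; the value reported by the driver for a nominal 2GB card may come out slightly below 2.0.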

You can use the library from the UI by running the following command:

    neonpeacasso ui --port=8080

Then navigate to http://localhost:8080/ in your browser.
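If you start the UI from a script or a notebook, it can help to wait until the server answers before opening the browser. The following is a rough sketch using only the standard library; `wait_for_ui` is a hypothetical helper, and the default URL simply mirrors the port used in the command above.

```python
import time
import urllib.error
import urllib.request


def wait_for_ui(url="http://localhost:8080/", timeout=30.0, interval=1.0):
    """Poll `url` until the server answers or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # The server answered, even if with an error status code
            return True
        except OSError:
            # Connection refused or timed out: the server is not up yet
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    print("UI reachable:", wait_for_ui())
```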

You can also use the Python API by running the following code:

    import os

    from dotenv import load_dotenv
    from neonpeacasso.generator import ImageGenerator
    from neonpeacasso.datamodel import GeneratorConfig

    # Load variables such as HF_API_TOKEN from a local .env file, if present
    load_dotenv()
    token = os.environ.get("HF_API_TOKEN")
    gen = ImageGenerator(token=token)

    prompt = "A sea lion wandering the streets of post apocalyptic London"
    prompt_config = GeneratorConfig(
        prompt=prompt,
        num_images=3,
        width=512,
        height=512,
        guidance_scale=7.5,
        num_inference_steps=50,
        mode="prompt",  # prompt, image
        return_intermediates=True,  # return intermediate images in the generate dict response
    )

    result = gen.generate(prompt_config)

    # Save each generated image to the current directory
    for i, image in enumerate(result["images"]):
        image.save(f"image_{i}.png")

Roadmap:

- Command line interface

- UI Features. Query models with multiple parameters
  - Text prompting (text2img)
  - Image based prompting (img2img)
  - Editor (for inpainting and outpainting)
  - Latent space exploration
- Experimentation tools
  - Save intermediate images in the sampling loop
  - Prompt recommendation tools
  - Model explanations
  - Curation/sharing experiment results
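Simple parameter exploration can already be scripted on top of the Python API example shown earlier. The sketch below only builds the keyword arguments for a sweep over `guidance_scale` and `num_inference_steps`; `sweep_configs` is a hypothetical helper, and the field names are assumed to match the ones used in the `GeneratorConfig` example.

```python
from itertools import product


def sweep_configs(prompt, guidance_scales, step_counts, **fixed):
    """Build one kwargs dict per (guidance_scale, num_inference_steps) pair."""
    base = {
        "prompt": prompt,
        "num_images": 1,
        "width": 512,
        "height": 512,
        "mode": "prompt",
    }
    base.update(fixed)  # caller overrides, e.g. num_images=3
    return [
        {**base, "guidance_scale": scale, "num_inference_steps": steps}
        for scale, steps in product(guidance_scales, step_counts)
    ]


configs = sweep_configs(
    "A sea lion wandering the streets of post apocalyptic London",
    guidance_scales=[5.0, 7.5, 10.0],
    step_counts=[25, 50],
)
print(len(configs))  # 6 combinations (3 scales x 2 step counts)
```

Each dict could then be passed on as `GeneratorConfig(**cfg)` and handed to `gen.generate(...)`, assuming `GeneratorConfig` accepts exactly these keyword arguments.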

This work builds on the Stable Diffusion model, and the code is adapted from the HuggingFace implementation. Please note the CreativeML Open RAIL-M license associated with the Stable Diffusion model.