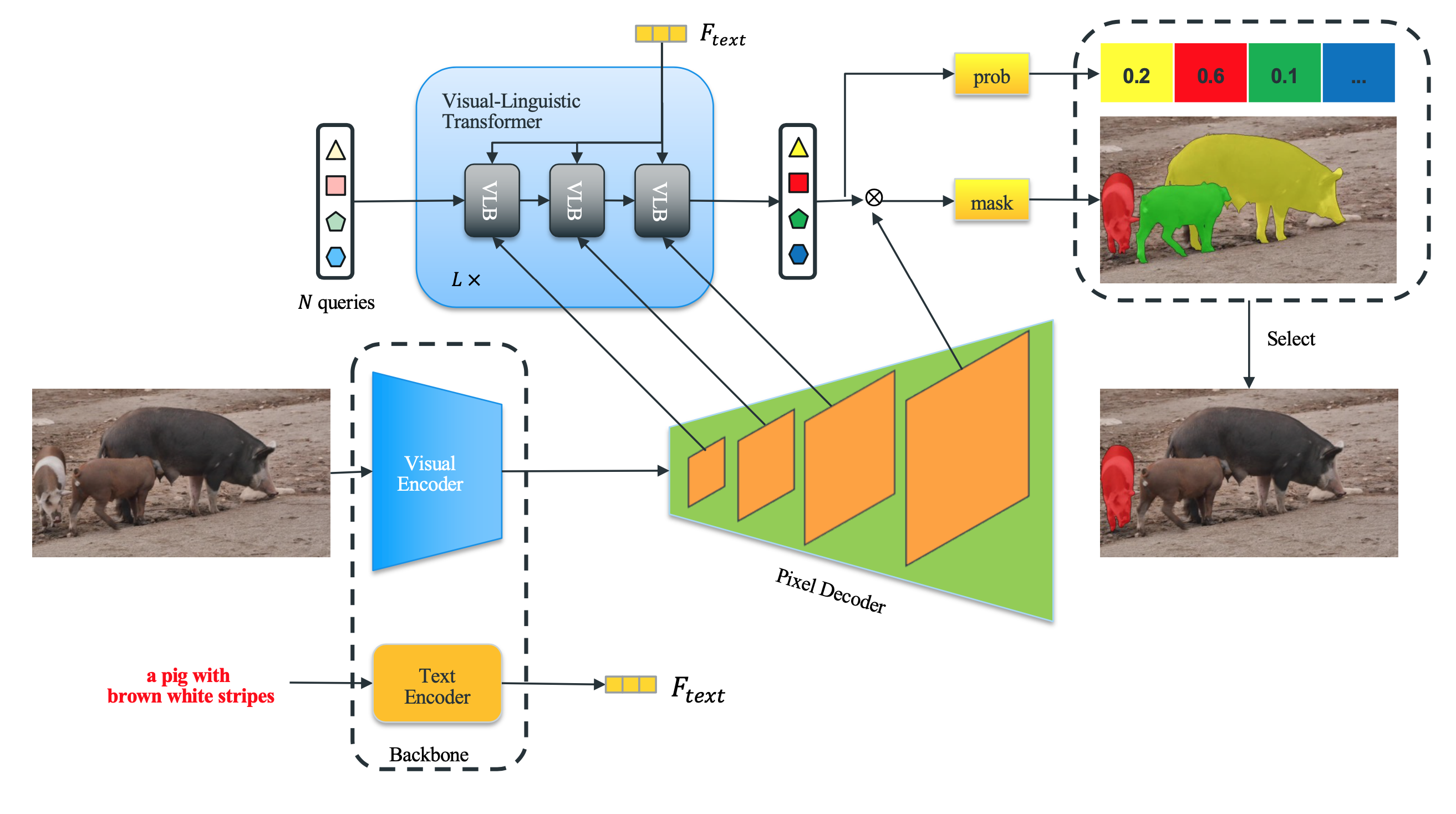

The official implementation of the paper:

Under review

Main results on RefCOCO

| Backbone | val | test A | test B |
|---|---|---|---|
| ResNet50 | 73.92 | 76.03 | 70.86 |
| ResNet101 | 74.67 | 76.80 | 70.42 |

Main results on RefCOCO+

| Backbone | val | test A | test B |
|---|---|---|---|
| ResNet50 | 64.02 | 69.74 | 55.04 |
| ResNet101 | 64.80 | 70.33 | 56.33 |

Main results on G-Ref

| Backbone | val | test |
|---|---|---|
| ResNet50 | 65.69 | 65.90 |
| ResNet101 | 66.77 | 66.52 |


We tested our code in the following environment; other versions may also be compatible:

- CUDA 11.1

- Python 3.8

- PyTorch 1.9.0
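To check an installed environment against the tested versions listed above, a small script like the following may help. It is a sketch, not part of the repository: `compare_env` and `major_minor` are hypothetical helper names, and it compares only major.minor versions, on the assumption that patch releases (e.g. PyTorch 1.9.1) behave the same. PyTorch is imported only if it is installed; `torch.version.cuda` reports the CUDA version PyTorch was built with, which can differ from the system CUDA toolkit.

```python
import sys
from typing import Optional

# Versions this README lists as tested (other versions may also work).
TESTED = {"python": "3.8", "torch": "1.9.0", "cuda": "11.1"}


def major_minor(version: str) -> tuple:
    """Return (major, minor) from strings such as '1.9.0+cu111' or '3.8.10'."""
    core = version.split("+")[0]  # drop local build tags like '+cu111'
    parts = core.split(".")
    return int(parts[0]), int(parts[1])


def compare_env(python: str, torch: Optional[str], cuda: Optional[str]) -> list:
    """Return notes on where the environment differs from TESTED (major.minor only)."""
    notes = []
    found = {"python": python, "torch": torch, "cuda": cuda}
    for name, tested in TESTED.items():
        value = found[name]
        if value is None:
            notes.append(f"{name}: not found (tested with {tested})")
        elif major_minor(value) != major_minor(tested):
            notes.append(f"{name}: {value} differs from tested {tested}")
    return notes


if __name__ == "__main__":
    py = "{}.{}.{}".format(*sys.version_info[:3])
    try:
        import torch  # optional: only needed to read the installed versions
        torch_ver, cuda_ver = torch.__version__, torch.version.cuda
    except ImportError:
        torch_ver, cuda_ver = None, None
    for line in compare_env(py, torch_ver, cuda_ver) or ["matches tested versions"]:
        print(line)
```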

Please refer to `installation.md` for installation instructions.

Please refer to `data.md` for data preparation.

To train a model, run:

```bash
sh scripts/train.sh
```

Alternatively, run the training script directly:

```bash
python train_net_video.py --config-file <config-path> --num-gpus <num-gpus> OUTPUT_DIR <output-dir>
```

For example, to train the ResNet-101 model on RefCOCO with 2 GPUs:

```bash
python train_net_video.py --config-file configs/refcoco/VLFormer_R101_bs8_100k.yaml --num-gpus 2 OUTPUT_DIR output/refcoco-RN101
```
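The trailing `OUTPUT_DIR output/refcoco-RN101` in the training commands is a `KEY VALUE` config override rather than a command-line flag. Assuming `train_net_video.py` follows the detectron2 convention (an assumption; check the script), these trailing tokens are read in pairs and merged into the loaded config with `merge_from_list`, which requires an even number of tokens. The hypothetical helper `overrides_from_opts` below only illustrates that pairing; it is not the repository's code.

```python
def overrides_from_opts(opts):
    """Pair a flat ['KEY', 'VALUE', ...] list into a dict of config overrides.

    Hypothetical helper mirroring the pairing done by yacs/detectron2
    `merge_from_list`. Values stay as strings here; the real merge also
    parses each value and checks it against the existing config entry's type.
    """
    if len(opts) % 2 != 0:
        raise ValueError(f"expected KEY VALUE pairs, got {len(opts)} items: {opts}")
    keys, values = opts[0::2], opts[1::2]  # even positions are keys, odd are values
    return dict(zip(keys, values))


if __name__ == "__main__":
    # Trailing tokens from the RefCOCO ResNet-101 training example.
    opts = ["OUTPUT_DIR", "output/refcoco-RN101"]
    print(overrides_from_opts(opts))  # {'OUTPUT_DIR': 'output/refcoco-RN101'}
```

Dotted keys such as `MODEL.WEIGHTS` address nested config entries; in this sketch they are simply kept as string keys.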

To resume a previous training run, add the `--resume` flag.

To evaluate a trained model, run:

```bash
python train_net_video.py --config-file <config-path> --num-gpus <num-gpus> --eval-only OUTPUT_DIR <output-dir> MODEL.WEIGHTS <weight-path>
```
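Flags such as `--resume` and `--eval-only` are ordinary command-line options, while `OUTPUT_DIR ...` and `MODEL.WEIGHTS ...` are config overrides. Assuming the script uses a detectron2-style `default_argument_parser` (an assumption, not confirmed here), the overrides are collected with `argparse.REMAINDER`, so every flag should come before the first override key; a flag placed after it would likely be swallowed into the override list. The minimal stand-in parser below is hypothetical and defines only the flags used in this README.

```python
import argparse


def make_parser():
    """Minimal stand-in for the script's argument parser.

    Assumption: modeled on detectron2's `default_argument_parser`; the real
    parser defines more options (e.g. for multi-machine launches).
    """
    parser = argparse.ArgumentParser(description="hypothetical train_net_video.py parser")
    parser.add_argument("--config-file", default="", metavar="FILE")
    parser.add_argument("--resume", action="store_true")
    parser.add_argument("--eval-only", action="store_true")
    parser.add_argument("--num-gpus", type=int, default=1)
    # Everything from the first KEY onward is collected as config overrides.
    parser.add_argument("opts", nargs=argparse.REMAINDER, default=None)
    return parser


if __name__ == "__main__":
    argv = [
        "--config-file", "configs/refcoco/VLFormer_R101_bs8_100k.yaml",
        "--num-gpus", "2",
        "--resume",  # flags go before the KEY VALUE overrides
        "OUTPUT_DIR", "output/refcoco-RN101",
    ]
    args = make_parser().parse_args(argv)
    print(args.resume, args.num_gpus, args.opts)
    # True 2 ['OUTPUT_DIR', 'output/refcoco-RN101']
```

Under that assumption, resuming the RefCOCO ResNet-101 run would look like `python train_net_video.py --config-file configs/refcoco/VLFormer_R101_bs8_100k.yaml --num-gpus 2 --resume OUTPUT_DIR output/refcoco-RN101`.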