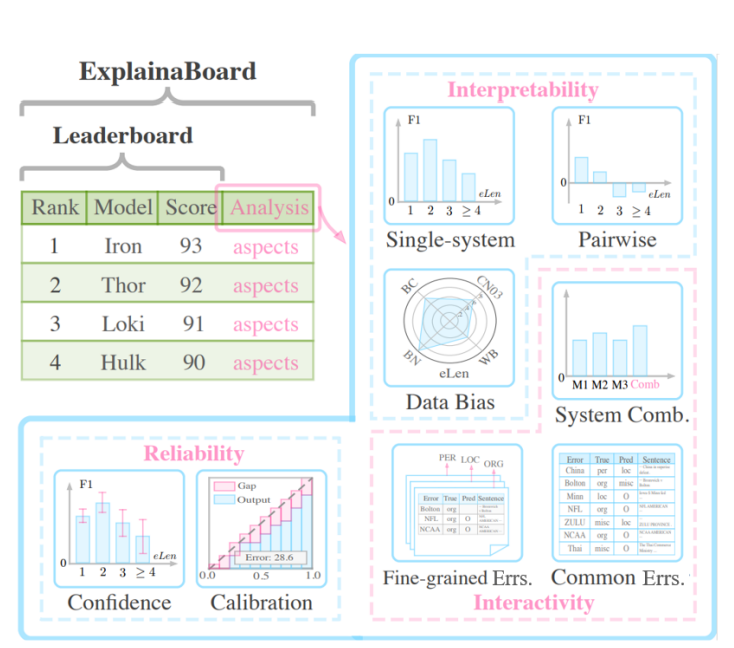

When developing a natural language processing (NLP) or AI system, one of the hardest things is often understanding where your system works and where it fails, and deciding what to do next. ExplainaBoard is a tool that inspects your system outputs, identifies what is working and what is not, and helps inspire ideas about where to go next.

It offers several ways to evaluate and understand your systems:

- Single-system Analysis: What is a system good or bad at?

- Pairwise Analysis: Where is one system better (or worse) than another?

- Fine-grained Error Analysis: On what examples do errors occur?

- Holistic Leaderboards and Benchmarks: Which systems perform best for a particular task?
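To make single-system, pairwise, and fine-grained analysis more concrete, here is a minimal, generic Python sketch. It is not ExplainaBoard's API or implementation. It groups test examples into buckets by a feature (here, input length in words), computes accuracy per bucket for one system, and then subtracts two systems' bucket accuracies to see where one beats the other. The functions `bucket_accuracy`, `pairwise_diff`, and `sentence_length`, the bucket edges, and the toy data are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical record format: (input text, gold label, predicted label).
Example = Tuple[str, str, str]


def bucket_accuracy(
    examples: Sequence[Example],
    feature: Callable[[str], float],
    edges: Sequence[float],
) -> Dict[str, float]:
    """Accuracy per bucket, where bucket i covers feature values in [edges[i], edges[i+1]).

    Examples whose feature value falls outside every bucket are skipped.
    """
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for text, gold, pred in examples:
        value = feature(text)
        for lo, hi in zip(edges, edges[1:]):
            if lo <= value < hi:
                key = f"[{lo},{hi})"
                total[key] += 1
                correct[key] += int(gold == pred)
                break
    # Only buckets that received at least one example appear in the result.
    return {key: correct[key] / total[key] for key in total}


def pairwise_diff(acc_a: Dict[str, float], acc_b: Dict[str, float]) -> Dict[str, float]:
    """Per-bucket accuracy of system A minus system B (positive means A is better)."""
    return {key: acc_a[key] - acc_b[key] for key in acc_a if key in acc_b}


def sentence_length(text: str) -> float:
    """Hypothetical feature: number of whitespace-separated tokens."""
    return float(len(text.split()))


# Toy outputs of two hypothetical systems on the same three test examples.
system_a: List[Example] = [
    ("good movie", "pos", "pos"),
    ("a very long and rather boring film", "neg", "pos"),
    ("bad", "neg", "neg"),
]
system_b: List[Example] = [
    ("good movie", "pos", "neg"),
    ("a very long and rather boring film", "neg", "neg"),
    ("bad", "neg", "neg"),
]

edges = [0, 3, 100]  # buckets: [0,3) tokens and [3,100) tokens
acc_a = bucket_accuracy(system_a, sentence_length, edges)  # {'[0,3)': 1.0, '[3,100)': 0.0}
acc_b = bucket_accuracy(system_b, sentence_length, edges)  # {'[0,3)': 0.5, '[3,100)': 1.0}
print(pairwise_diff(acc_a, acc_b))  # {'[0,3)': 0.5, '[3,100)': -1.0}
```

Both toy systems have the same overall accuracy (2 out of 3), yet the bucketed view shows system A doing better on short inputs and system B on the long one. A single aggregate score hides this kind of pattern.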

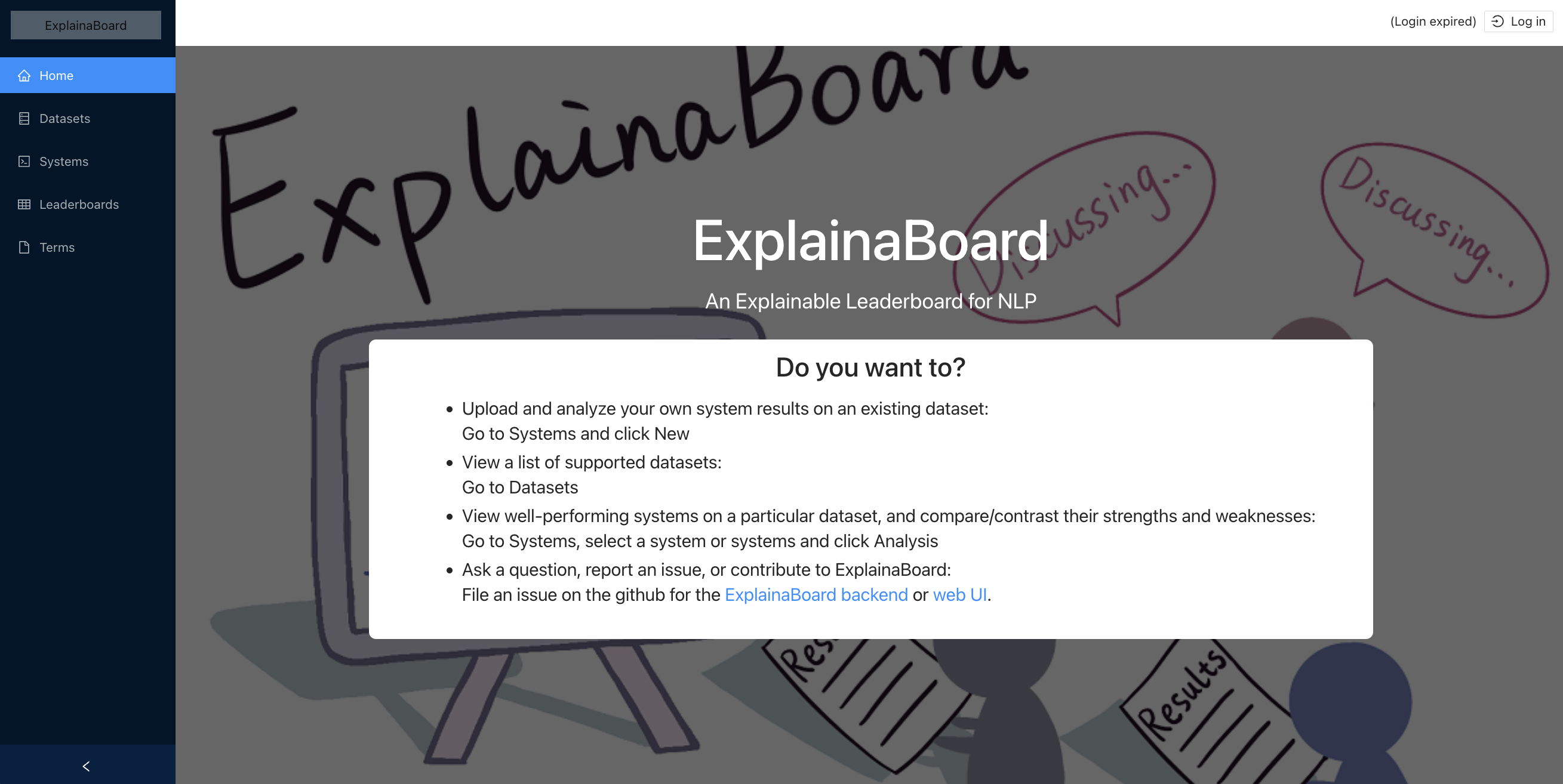

ExplainaBoard can be used online or offline. For most users, we recommend the online interface, as it is more interactive and makes it easier to get started.

The web interface lets you browse existing system outputs as well as evaluate and analyze your own.

If you would like to evaluate and analyze your own systems programmatically, you can use the ExplainaBoard client.

Power users who want to use ExplainaBoard offline should first follow the installation directions below and then take a look at our CLI examples.

Install Method 1 - Standard Use: Simple installation from PyPI (Python 3 only)

    pip install --upgrade pip  # we recommend the newest version of pip
    pip install explainaboard
    python -m spacy download en_core_web_sm  # if you plan to use the AspectBasedSentimentClassificationProcessor

Install Method 2 - Development: Install from source and develop locally (Python 3 only)

    # Clone the repository
    git clone https://github.com/neulab/ExplainaBoard.git
    cd ExplainaBoard
    # Install the required dependencies and dev dependencies
    pip install ".[dev]"
    python -m spacy download en_core_web_sm
    # Install the git pre-commit hooks
    pre-commit install

- Testing: To run tests, run `python -m unittest`.

- Linting and Code Style: This project uses flake8 (linter) and black (formatter). Both are enforced in the pre-commit hook and in the CI pipeline.
  - Run `python -m black .` to format code.
  - Run `flake8` to lint code.
  - You can also configure your IDE to format and lint files automatically as you write code.
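
As a minimal sketch of what the testing command picks up: with no arguments, `python -m unittest` runs test discovery from the current directory and collects `unittest.TestCase` subclasses from modules whose names match `test*.py`. The file name `test_accuracy.py` and the `accuracy` helper below are hypothetical illustrations, not part of ExplainaBoard.

```python
# test_accuracy.py (hypothetical file name; discovery matches test*.py by default)
import unittest
from typing import Sequence


def accuracy(golds: Sequence[str], preds: Sequence[str]) -> float:
    """Hypothetical helper: fraction of predictions that match the gold labels."""
    if len(golds) != len(preds):
        raise ValueError("golds and preds must have the same length")
    if not golds:
        raise ValueError("cannot compute accuracy of an empty list")
    return sum(g == p for g, p in zip(golds, preds)) / len(golds)


class TestAccuracy(unittest.TestCase):
    def test_all_correct(self) -> None:
        self.assertEqual(accuracy(["pos", "neg"], ["pos", "neg"]), 1.0)

    def test_half_correct(self) -> None:
        self.assertAlmostEqual(accuracy(["pos", "neg"], ["pos", "pos"]), 0.5)

    def test_length_mismatch_raises(self) -> None:
        with self.assertRaises(ValueError):
            accuracy(["pos"], ["pos", "neg"])
```

Running `python -m unittest` from the directory containing such a file would run all three test methods.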

After trying things out in the CLI, you can read how to add new features, tasks, or file formats.

ExplainaBoard is developed by Carnegie Mellon University, Inspired Cognition Inc., and other collaborators. If you find it useful in your research, you can cite it as follows:

    @inproceedings{liu-etal-2021-explainaboard,
        title = "{E}xplaina{B}oard: An Explainable Leaderboard for {NLP}",
        author = "Liu, Pengfei and Fu, Jinlan and Xiao, Yang and Yuan, Weizhe and Chang, Shuaichen and Dai, Junqi and Liu, Yixin and Ye, Zihuiwen and Neubig, Graham",
        booktitle = "Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing: System Demonstrations",
        month = aug,
        year = "2021",
        address = "Online",
        publisher = "Association for Computational Linguistics",
        url = "https://aclanthology.org/2021.acl-demo.34",
        doi = "10.18653/v1/2021.acl-demo.34",
        pages = "280--289",
    }

We thank all authors who shared their system outputs with us: Ikuya Yamada, Stefan Schweter, Colin Raffel, Yang Liu, and Li Dong. We also thank Vijay Viswanathan, Yiran Chen, and Hiroaki Hayashi for useful discussion and feedback about ExplainaBoard.