This repository contains code and documentation for using DeepLabCut (DLC) to analyze the behavior of animals. Specifically, the code in this repository provides a pipeline for using DLC to track the movement of animal paws and analyze their behavior.


Open a Git terminal. If you haven't yet configured an SSH key for GitHub on your machine, generate a new one with the following command:

ssh-keygen

Navigate to your GitHub account settings. Click on "SSH and GPG keys" and then "New SSH key". Paste the public key that was generated in the first step and click "Add SSH key".


Set up your GitHub username and email on your local machine. You can do this with the following commands:

git config --global user.name "Your Name"

git config --global user.email "your-email@example.com"

Now you're ready to clone the repository. In your Git terminal, enter the following command to download the repository to your local machine:

git clone git@github.com:neurobiologylab/dlc-based-behavioral-analysis.git
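If you are unsure whether an SSH key already exists on your machine, a quick check can save you from generating a second one. The following is a minimal sketch, assuming keys are stored in the default `~/.ssh` folder and that public keys end in `.pub`; the helper name `public_key_names` is made up for this example.

```python
import os


def public_key_names(filenames):
    """Return the names that look like SSH public keys (they end in .pub)."""
    return sorted(name for name in filenames if name.endswith(".pub"))


# Assumption: keys are stored in the default location, ~/.ssh.
ssh_dir = os.path.expanduser("~/.ssh")
found = public_key_names(os.listdir(ssh_dir)) if os.path.isdir(ssh_dir) else []

if found:
    print("Existing public keys:", ", ".join(found))
else:
    print("No public key found; generate one before cloning over SSH.")
```

If a key is listed, you can paste its contents into GitHub instead of creating a new one.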

This section guides you through a step-by-step process to install DeepLabCut on a Windows machine. DeepLabCut is an open-source package primarily used for markerless pose estimation of animals in videos.

Anaconda is a free and open-source distribution of Python and R, primarily used for scientific computing. It simplifies package management and deployment. You can download and install Anaconda from the official Anaconda website.

Download the Conda environment file DLC-CPU.yaml.

Create a new folder named "DeepLabCut" in your preferred workspace directory.

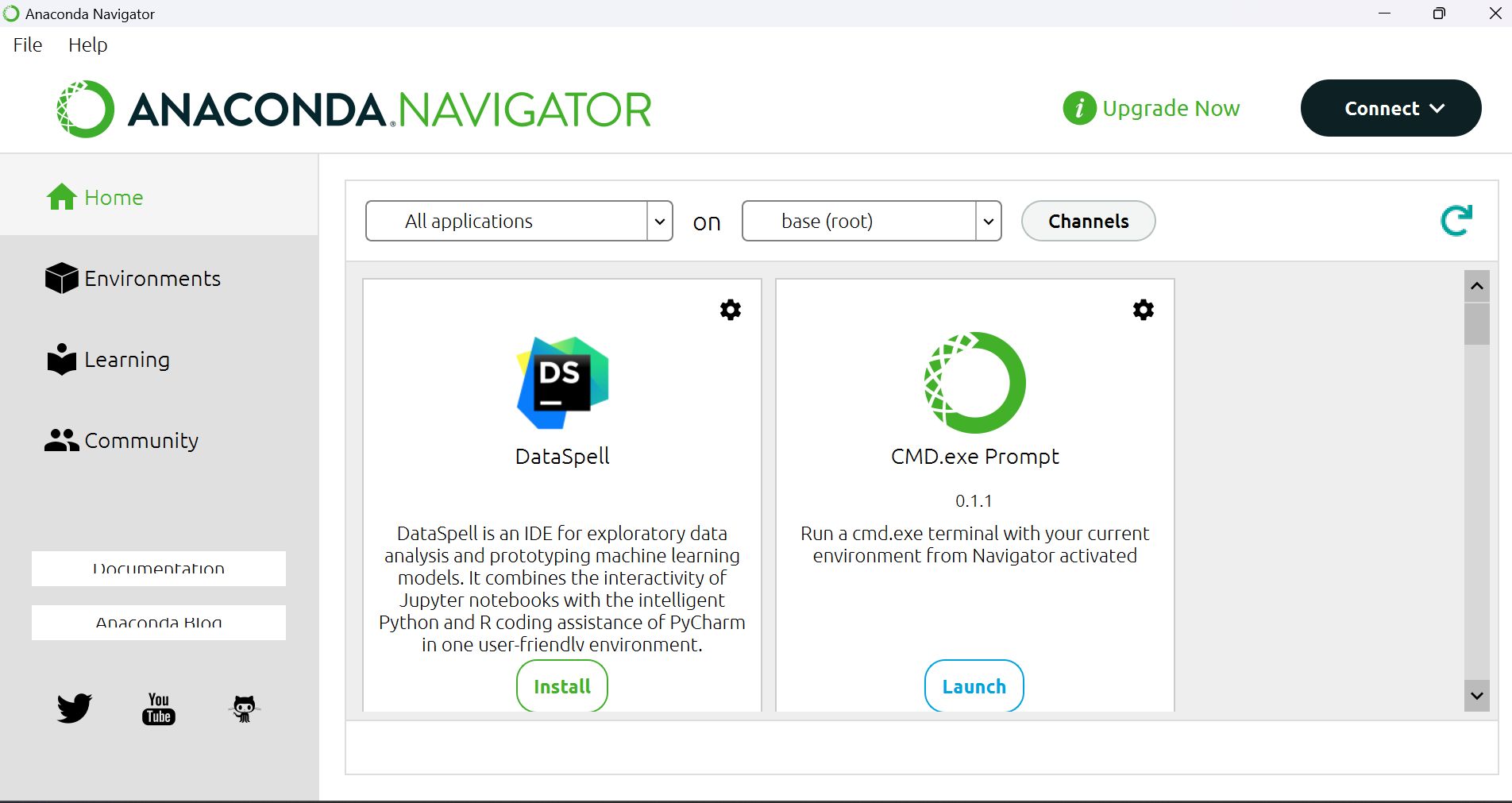

Start the Anaconda Navigator application.

In the Anaconda Navigator, launch the "CMD.exe Prompt" which will open a new command prompt window.

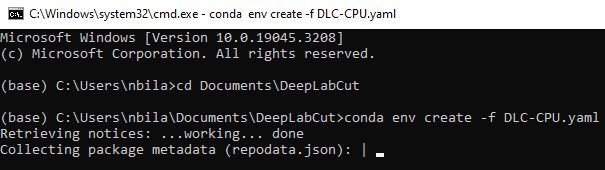

In the command prompt window, navigate to your "DeepLabCut" directory. For example, if your "DeepLabCut" folder is inside C:\your_workspace_directory, you would type:

cd C:\your_workspace_directory\DeepLabCut

In the "DeepLabCut" directory, use the downloaded .yaml file to create a new Conda environment:

conda env create -f DLC-CPU.yaml

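Activate the new Conda environment. The environment name comes from the `name:` field in the .yaml file; this guide assumes it is DEEPLABCUT: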

conda activate DEEPLABCUT

Initiate a DeepLabCut session using the command prompt:

python -m deeplabcut
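If the command above fails, it may help to confirm that the active environment actually contains the package. The following is a small sketch using only the Python standard library; `is_importable` is a name invented for this example, and the check only tells you whether the module can be found, not whether it works correctly.

```python
import importlib.util
import os


def is_importable(module_name):
    """Return True if module_name can be found in the current Python environment."""
    return importlib.util.find_spec(module_name) is not None


# CONDA_DEFAULT_ENV is set by conda to the name of the active environment.
print("Active conda environment:", os.environ.get("CONDA_DEFAULT_ENV", "(none)"))
print("deeplabcut importable:", is_importable("deeplabcut"))
```

If the second line prints `False`, the environment is probably not activated or the installation did not complete.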

For more installation tips, visit the official DeepLabCut documentation.

This guide provides detailed instructions on how to use DeepLabCut (DLC) for your video analysis tasks.

Start DeepLabCut:

Launch the "CMD.exe Prompt" from Anaconda Navigator, and enter the following commands:

conda activate DEEPLABCUT

python -m deeplabcut

This will open the DeepLabCut welcome window.

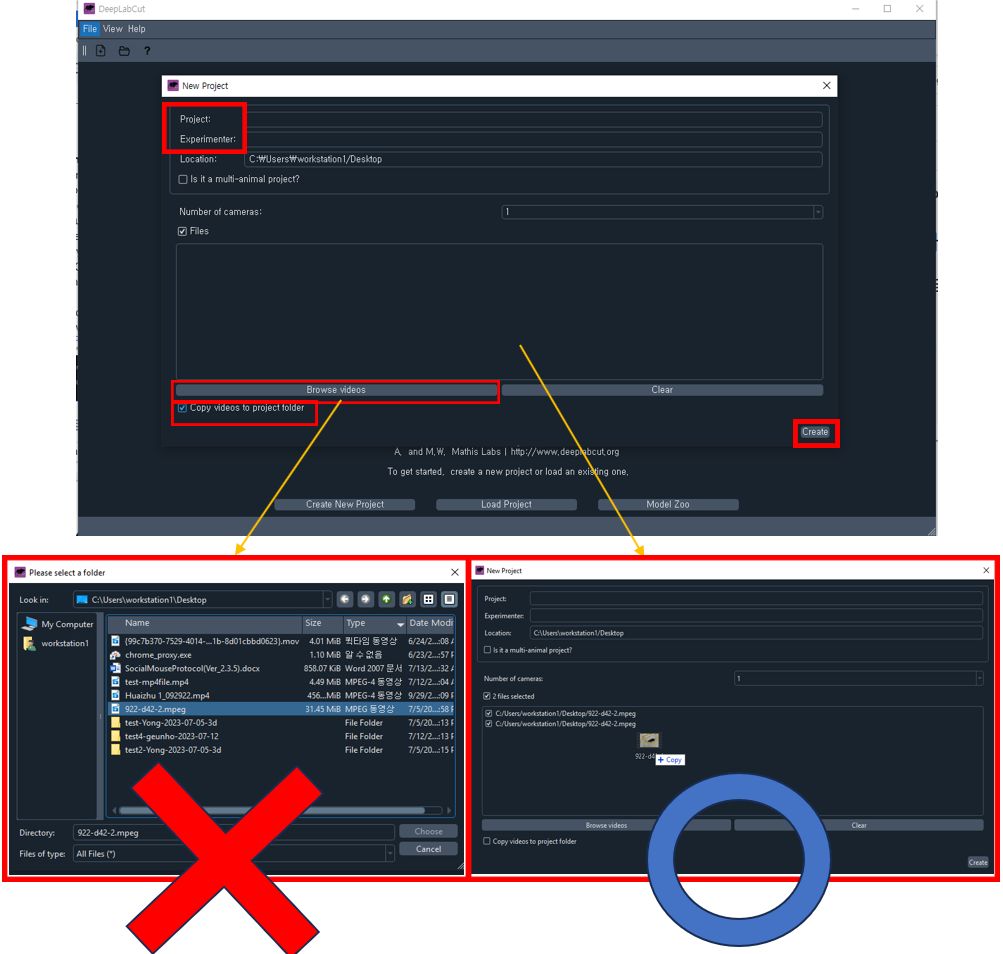

Create a new project:

- Click `Create New Project` on the welcome page.
- Fill out the project name and experimenter name, then check the `Copy videos to project folder` checkbox.
- Do not use the `Browse videos` button, which does not work (a bug). Use drag & drop to add your videos instead. Note: DLC may not recognize MPEG files correctly, so MP4 files are recommended. Do not change the video file location after this step.
- Once all fields are filled, click `Create`.

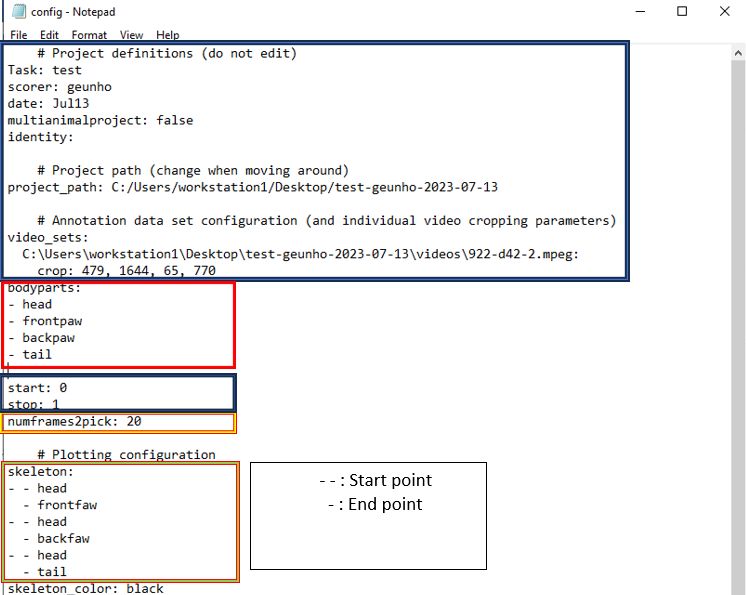

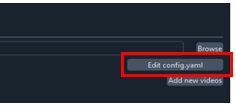

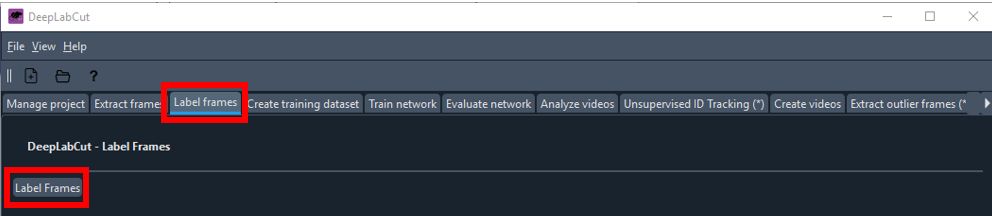
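Because MPEG files may not be recognized, it can be useful to sort your videos by extension before dragging them into the project. The sketch below is only an illustration: the file names are hypothetical, and `split_by_format` is a helper written for this example rather than part of DeepLabCut.

```python
from pathlib import PureWindowsPath


def split_by_format(video_paths, preferred=".mp4"):
    """Split paths into (preferred-format, other) lists by file extension."""
    ok, other = [], []
    for path in video_paths:
        suffix = PureWindowsPath(path).suffix.lower()
        (ok if suffix == preferred else other).append(path)
    return ok, other


# Hypothetical file names, for illustration only.
videos = [r"C:\videos\mouse1.mp4", r"C:\videos\mouse2.MPEG", r"C:\videos\mouse3.MP4"]
mp4_videos, to_convert = split_by_format(videos)
print("Ready to add:", mp4_videos)
print("Consider converting first:", to_convert)
```

The comparison ignores case, so `.MP4` and `.mp4` are treated the same way.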

Edit Configuration File:

- Locate the `config.yaml` file in your project directory and open it.
- Replace the `bodyparts` list with the labels you want to use for your project.
- The `numframes2pick` parameter specifies the number of frames to extract for labeling. For accurate analysis, it is recommended to pick a couple hundred frames.
- The `skeleton` parameter defines the connections between labels. These connections will be displayed in the final video.
- Save your changes (Ctrl+S), then click the `Edit config.yaml` button in DeepLabCut to verify that the lists have been updated.

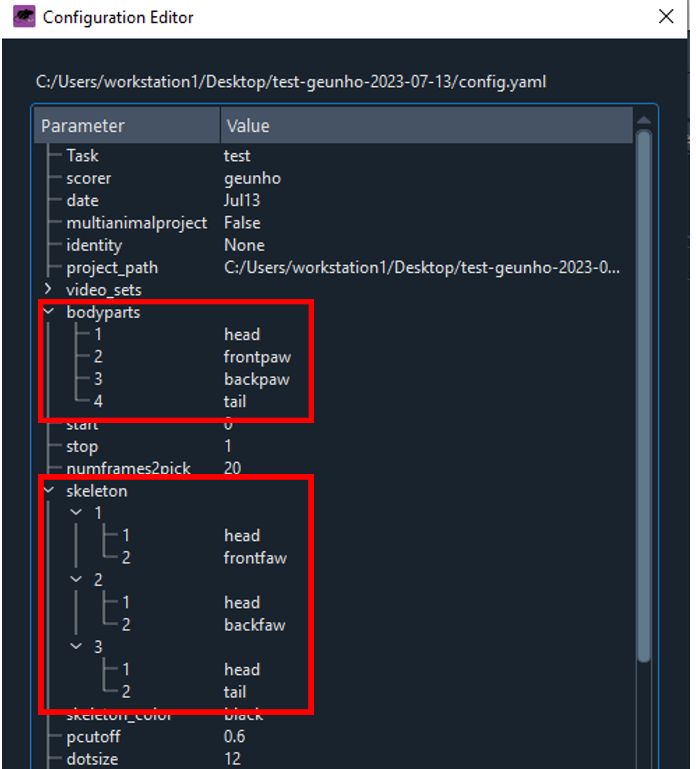
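A common slip when editing the configuration is a skeleton entry that names a label missing from the `bodyparts` list, for example because of a typo. The sketch below shows one way to catch this, assuming the two settings have already been read into plain Python lists; the values and the helper `unknown_skeleton_parts` are made up for illustration.

```python
def unknown_skeleton_parts(bodyparts, skeleton):
    """Return skeleton entries that are not listed in bodyparts, in first-seen order."""
    known = set(bodyparts)
    missing = []
    for link in skeleton:
        for part in link:
            if part not in known and part not in missing:
                missing.append(part)
    return missing


# Hypothetical values mirroring the bodyparts and skeleton entries of config.yaml.
bodyparts = ["head", "frontpaw", "backpaw", "tail"]
skeleton = [["head", "frontpaw"], ["frontpaw", "backpaw"], ["backpaw", "tial"]]

print(unknown_skeleton_parts(bodyparts, skeleton))  # -> ['tial']
```

An empty result means every skeleton connection refers to a label that exists.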

Extract Frames:

- Navigate to the `Extract Frames` tab in DeepLabCut.
- You can keep the default settings.

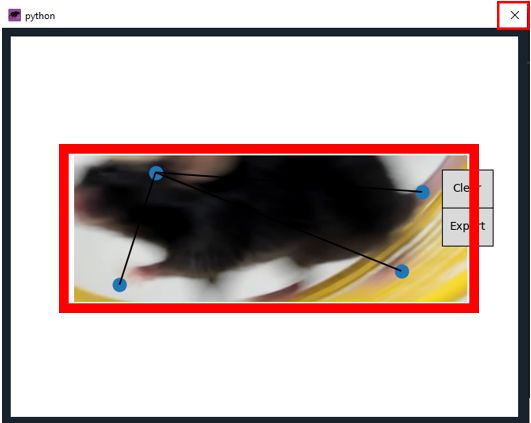

- To crop the frames, select `GUI` from `Frame cropping`, draw a rectangle around the area of interest in the GUI, then click `Crop`.
- Once you're done, `Extract Frames` will run. When it finishes, click `ok` and go to the next step.

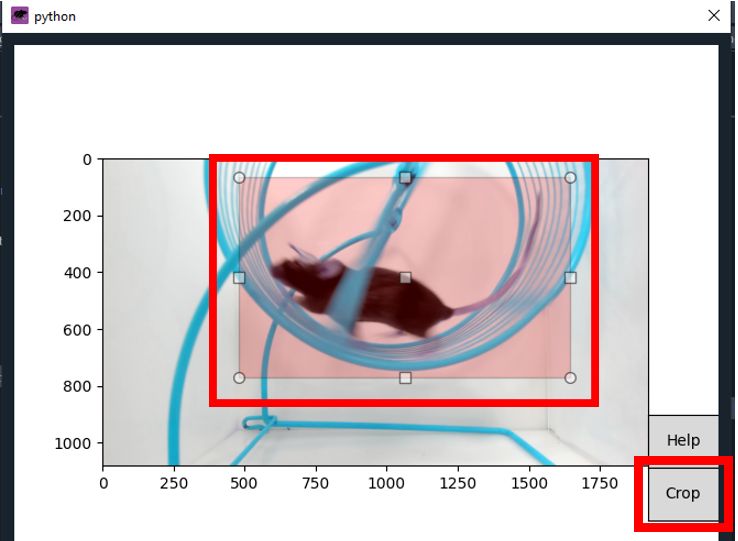

Label Frames:

- Navigate to the `Label Frames` tab.
- Click the `Label Frames` button to open the frame labeling interface.
- Load the frames you extracted in the previous step.

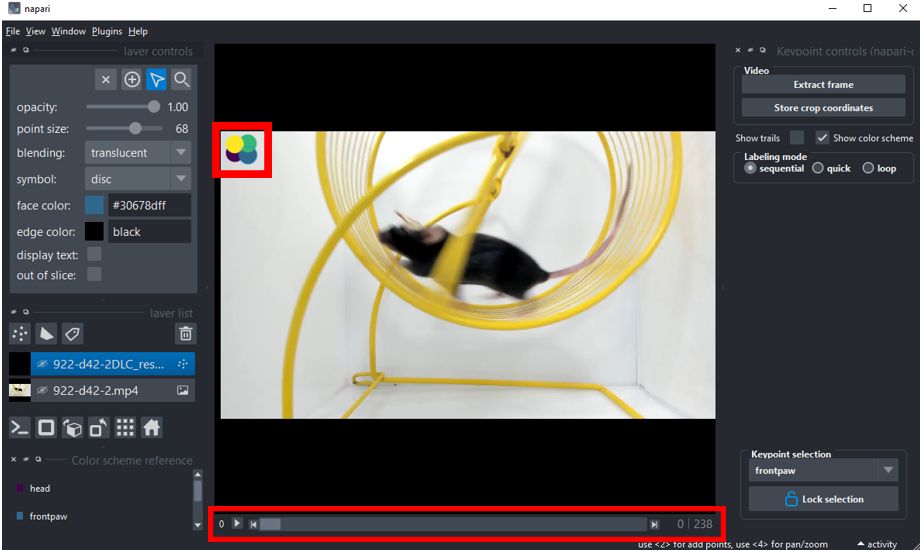

The labeling interface offers several features that make labeling your images more convenient and efficient. Here's a quick rundown of each feature:

- Image Display Area: This is where your selected image is displayed for labeling.
- `+` Button: This button is used to mark a new label on the image. You can use either left or right mouse clicks.
- Arrow Button: This button is used to select an existing label and either reposition or delete it. Multiple points can be selected and repositioned or deleted simultaneously.
- Navigation Bar: Use this tool or your keyboard's arrow keys to move to the next or previous image.
- Lock Selection: This feature is particularly useful when you have multiple points to label on each frame, such as 'head', 'frontpaw', 'backpaw', and 'tail'. If you lock the selection to 'head', for example, 'head' stays selected for labeling as you navigate to any other frame using the navigation bar. If you unlock the selection, labeling proceeds in the default order 'head' > 'frontpaw' > 'backpaw' > 'tail' as you move to the next frame.
- Save Button (Ctrl+S): Regularly save your changes to prevent data loss, either by clicking the Save button or by pressing `Ctrl+S`.

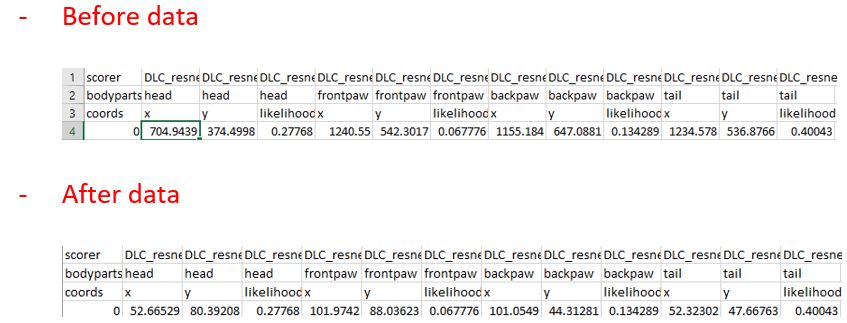

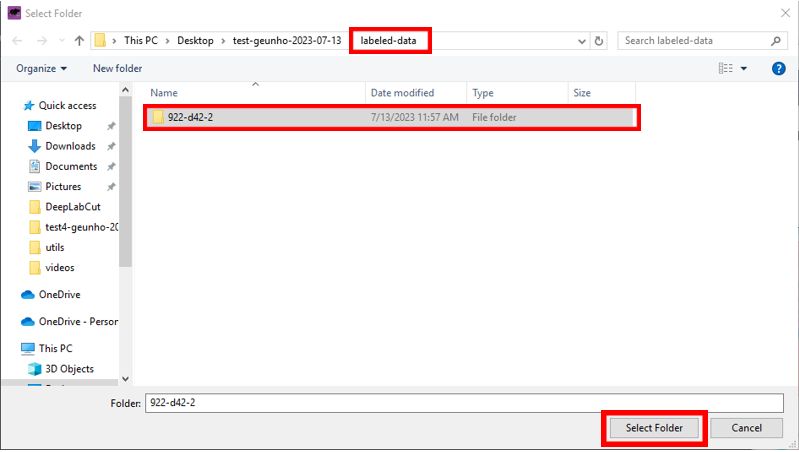

Post-Labeling Verification: After you have completed the labeling process, your annotated data is saved in both CSV and H5 formats. These files contain the coordinates of the labels for each labeled frame and can be found in the labeled-data directory within your project folder, in a subfolder named after your video file. Checking these files confirms that your labels have been saved correctly.

Understanding and effectively using these features can significantly streamline your labeling process, contributing to the overall accuracy and efficiency of your DeepLabCut project.
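One way to check the saved labels is to count how many frames carry a coordinate for each body part. The sketch below assumes the CSV has header rows whose first cells are `bodyparts` and `coords`, followed by one row per labeled frame; the sample text is made up, and the exact layout may differ between DeepLabCut versions, so treat this as a starting point rather than a definitive parser.

```python
import csv
import io


def count_labels(csv_text):
    """Count, per body part, how many frames have an x coordinate filled in."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    bodyparts_row = next(r for r in rows if r and r[0] == "bodyparts")
    coords_row = next(r for r in rows if r and r[0] == "coords")
    data_rows = rows[rows.index(coords_row) + 1:]

    counts = {}
    for col, coord in enumerate(coords_row):
        if coord != "x":
            continue
        part = bodyparts_row[col]
        counts[part] = sum(1 for r in data_rows if col < len(r) and r[col].strip())
    return counts


# Made-up sample in the assumed layout; real files are much longer.
sample = """scorer,YourName,YourName,YourName,YourName
bodyparts,head,head,tail,tail
coords,x,y,x,y
labeled-data/video_name/img0001.png,101.5,88.2,240.0,131.7
labeled-data/video_name/img0002.png,103.1,90.4,,
"""

print(count_labels(sample))  # -> {'head': 2, 'tail': 1}
```

A body part with a much lower count than the others was probably skipped on some frames, which may be intentional (for example, when it is occluded) or a sign that labels were not saved.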

Create a Training Dataset:

Navigate to the `Create Training Dataset` tab and review the following options:

- Shuffle: This sets the index of the shuffle, that is, the random split of the labeled frames into training and test sets.
- Network architecture: Choose the appropriate model architecture based on your requirements:
  - ResNet (Residual Networks): Known for "skip connections" and deep layers, ResNet effectively mitigates the vanishing gradient problem, making it suitable for complex tasks like image classification. However, it is computationally expensive and large in size.
  - MobileNet: Designed for mobile and embedded vision applications, MobileNet is computationally efficient and small in size due to its use of depthwise separable convolutions. It is a go-to choice for resource-constrained applications.
  - EfficientNet: EfficientNet balances accuracy and computational efficiency by using compound scaling and an improved version of MobileNet's depthwise convolution. It aims for high accuracy while remaining comparatively small and fast.
- Augmentation method: Select the image augmentation method. Augmentation techniques such as tensorpack and imgaug add variation to your training data, improving the model's ability to generalize.

You can keep the default settings, or select a particular network and augmentation method depending on your needs. After you choose the settings, click `Create Training Dataset`.

Train the Network:

Navigate to the `Train Network` tab and set the following parameters:

- Display iterations: This determines how frequently the training status is displayed in the terminal (shell window).
- Save iterations: This dictates how often the state of the trained network is saved.
- Maximum iterations: This is the total number of iterations that the training process should complete.

After setting these parameters, click `Start Training`. The training procedure will take some time to finish. You can keep track of its progress in the Anaconda Prompt. Once the training is finished, a completion notification window will pop up. Click 'OK' on this window to proceed to the next step.

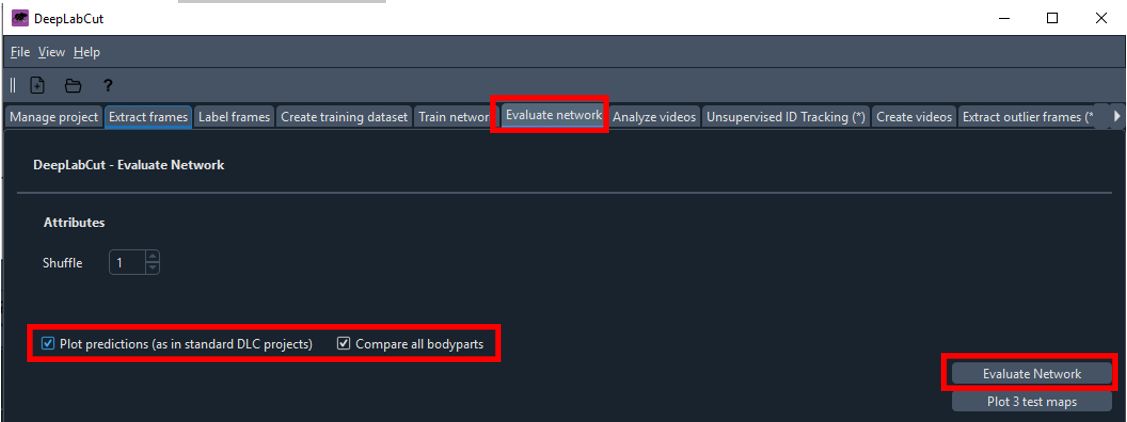
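To get a feel for how the three iteration settings interact, the following sketch works out how many status updates and saved snapshots a given combination would produce. It is plain arithmetic under the assumption that an update or save happens exactly every N iterations; the function name and the numbers are hypothetical and are not recommended values.

```python
def checkpoint_schedule(display_iters, save_iters, max_iters):
    """Return (number of status updates, list of iterations at which a snapshot is saved)."""
    updates = max_iters // display_iters
    snapshots = list(range(save_iters, max_iters + 1, save_iters))
    return updates, snapshots


# Hypothetical settings, not recommended values.
updates, snapshots = checkpoint_schedule(display_iters=1000, save_iters=50000, max_iters=200000)
print(updates)    # -> 200
print(snapshots)  # -> [50000, 100000, 150000, 200000]
```

If the save interval is larger than the maximum number of iterations, the snapshot list is empty, which is a hint that the settings should be revisited.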

Evaluate the Network:

- Navigate to the `Evaluate Network` tab.
- Check both the `Plot predictions` and `compare all bodyparts` options.
- Click `Evaluate Network`.

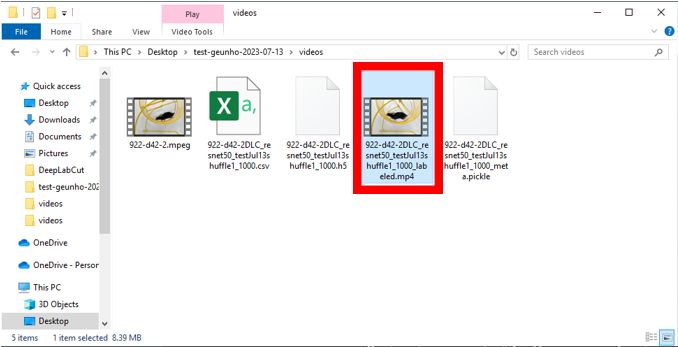

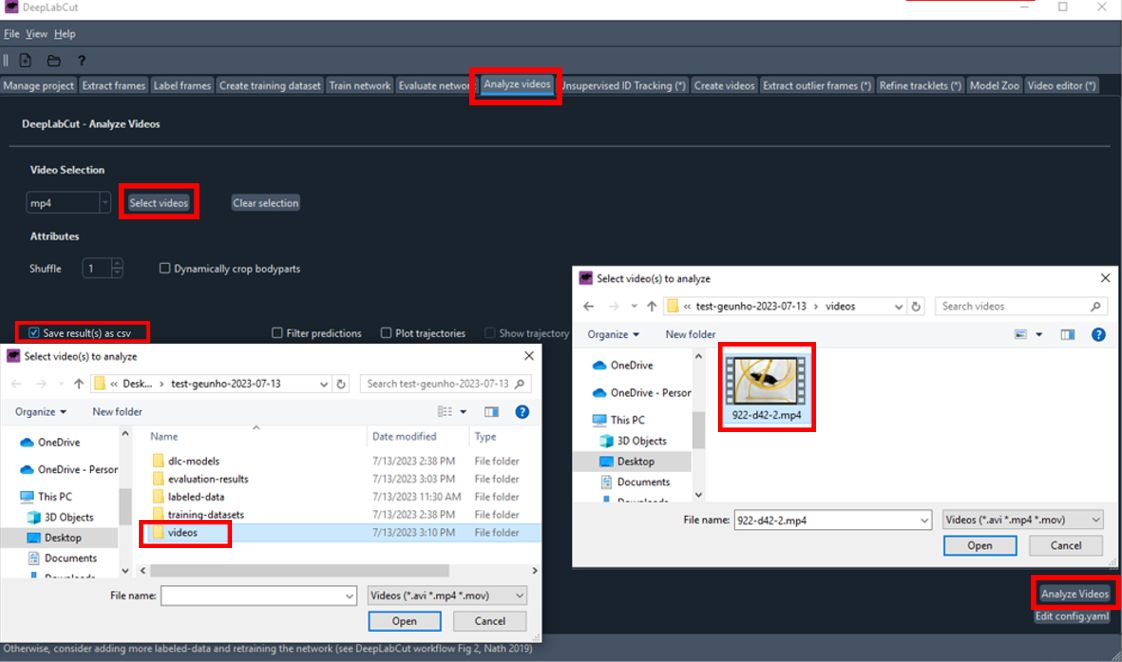

Analyze Videos:

- Navigate to the `Analyze Videos` tab.
- Click `Select videos` and choose the videos you want to analyze.
- Ensure `save_as_csv` is checked.
- Click `Analyze Videos`.
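With `save_as_csv` checked, the analysis results can be read back with a few lines of Python. The sketch below assumes the CSV has three header rows (scorer, body parts, and coordinate names `x`, `y`, `likelihood`) followed by one row per frame; the sample text and the scorer name `net` are made up, and `load_tracks` is a helper written for this example.

```python
import csv
import io


def load_tracks(csv_text):
    """Parse analysis CSV text into {bodypart: [(x, y, likelihood), ...]}."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    bodyparts_row, coords_row = rows[1], rows[2]
    tracks = {}
    for row in rows[3:]:
        point = {}
        # Column 0 holds the frame index; the remaining columns hold values.
        for col in range(1, len(row)):
            point.setdefault(bodyparts_row[col], {})[coords_row[col]] = float(row[col])
        for part, values in point.items():
            tracks.setdefault(part, []).append(
                (values["x"], values["y"], values["likelihood"])
            )
    return tracks


# Made-up sample in the assumed layout.
sample = """scorer,net,net,net,net,net,net
bodyparts,frontpaw,frontpaw,frontpaw,backpaw,backpaw,backpaw
coords,x,y,likelihood,x,y,likelihood
0,10.0,20.0,0.99,50.0,60.0,0.95
1,13.0,24.0,0.98,50.5,60.5,0.40
"""

tracks = load_tracks(sample)
print(tracks["frontpaw"][0])  # -> (10.0, 20.0, 0.99)
```

To use it on a real file, read the file's text first and pass it to `load_tracks`.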

Create Labeled Videos:

- Navigate to the `Create Videos` tab.
- Add the videos you analyzed in the previous step. The system typically selects the videos automatically; if not, add them manually in the same manner as in the previous step.
- Check both the `Plot all body parts` and `Draw skeleton` options.
- Click the `Build Skeleton` button to validate that the points are properly connected, then close the pop-up window.
- Finally, click `Create Videos`. The system will now generate the labeled videos for you.

Extract Outliers (optional):

- If the analysis results are not satisfactory, navigate to the `Extract Outlier Frames` tab.
- Add the videos you want to refine.
- Specify the extraction algorithm. If you choose `manual`, a new interface will open, allowing you to go through each frame of the video and mark frames that need to be relabeled.
- Click `Extract Outlier Frames`.
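To decide which frames are worth relabeling, one common heuristic is to look for frames where the prediction confidence is low. The sketch below illustrates that idea only; it is not DeepLabCut's implementation, and the threshold name `p_bound`, its value, and the likelihood numbers are assumptions made for this example.

```python
def uncertain_frames(likelihoods, p_bound=0.6):
    """Return indices of frames whose likelihood is below p_bound."""
    return [i for i, p in enumerate(likelihoods) if p < p_bound]


# Made-up likelihood values for one body part across six frames.
likelihoods = [0.99, 0.97, 0.35, 0.92, 0.10, 0.88]
print(uncertain_frames(likelihoods))  # -> [2, 4]
```

Frames flagged this way are reasonable candidates to inspect when marking frames manually.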

Refine Labels (optional):

- If you have outlier frames, navigate to the `Refine Labels` tab.
- Load the frames, move the mislabeled points to their correct positions, then save your changes.
- You can verify your changes by checking the `CollectedData_YourName.csv` file in the `labeled-data/video_name` directory in your project folder.
- Once you're done, click `Merge datasets`.

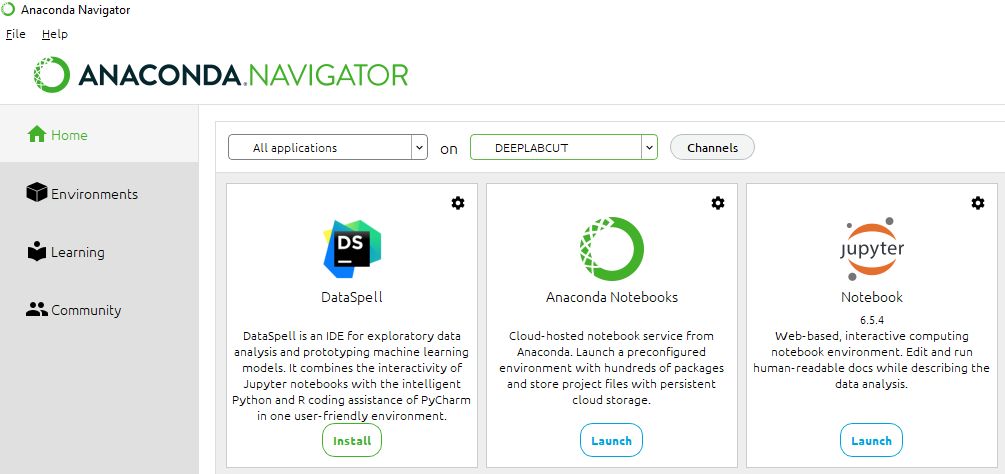

- Start the Anaconda Navigator.

Launch the Anaconda Navigator on your system. Within the Anaconda Navigator interface, install (if not already installed) and launch "Jupyter Notebook". This will open a new tab in your default web browser with the Jupyter Notebook file explorer.

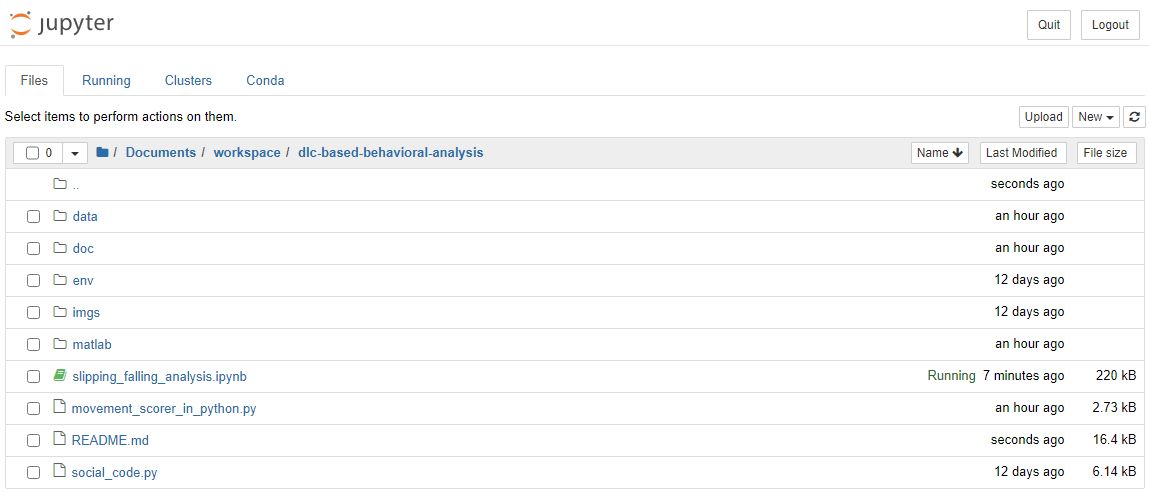

- Open the Notebook:

In the Jupyter Notebook file explorer tab in your browser, navigate to the directory where you cloned the dlc-based-behavioral-analysis repository. Find and open the notebook file.

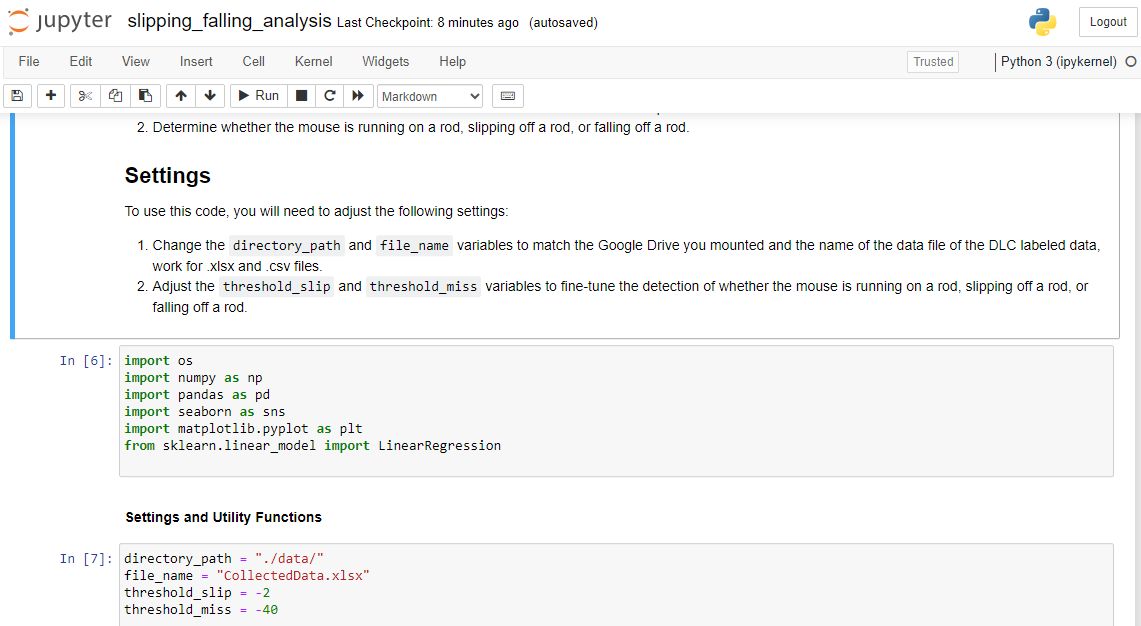

- Run the Notebook:

With the notebook file open, locate the cell with the path to the data file. Replace this with the correct path to the data file you wish to analyze. After updating the path, run the code in the notebook by selecting the cell and pressing 'Shift' + 'Enter'. This will execute the cell and output the results beneath it.

Congratulations! You have successfully used DeepLabCut to analyze your videos. For further analysis, you can import the CSV files created during the analysis into your preferred data analysis software.

To use this code, you must first create a DLC project for your animal behavior data. Once you have created a project and labeled your data, you can use the scripts in this repository to analyze the data and generate visualizations.

The main script for analyzing the data is analyze_behavior.ipynb, which takes a DLC project file as input and outputs data on paw movement and behavior. The script generates various visualizations to help you understand the data, including time series plots of paw movement and behavioral analysis plots.
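As an illustration of the kind of paw movement measure such an analysis might compute, the sketch below derives per-frame speed from tracked coordinates. It is not taken from the notebook; the function name, coordinates, and frame rate are made up, and the result is in pixels per second because no pixel-to-distance calibration is assumed.

```python
import math


def frame_speeds(xs, ys, fps):
    """Return the speed (pixels per second) between each pair of consecutive frames."""
    return [
        math.hypot(x1 - x0, y1 - y0) * fps
        for (x0, y0), (x1, y1) in zip(zip(xs, ys), zip(xs[1:], ys[1:]))
    ]


# Made-up paw coordinates (pixels) and frame rate.
xs = [10.0, 13.0, 13.0, 19.0]
ys = [20.0, 24.0, 24.0, 32.0]
print(frame_speeds(xs, ys, fps=30))  # -> [150.0, 0.0, 300.0]
```

The output has one fewer entry than the input because each speed describes the step between two neighboring frames.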

Contributions to this project are welcome. If you find a bug or have a suggestion for how to improve the code, please open an issue or submit a pull request.