The major contributors to this repository include Shi Yan and Lizhen Wang.

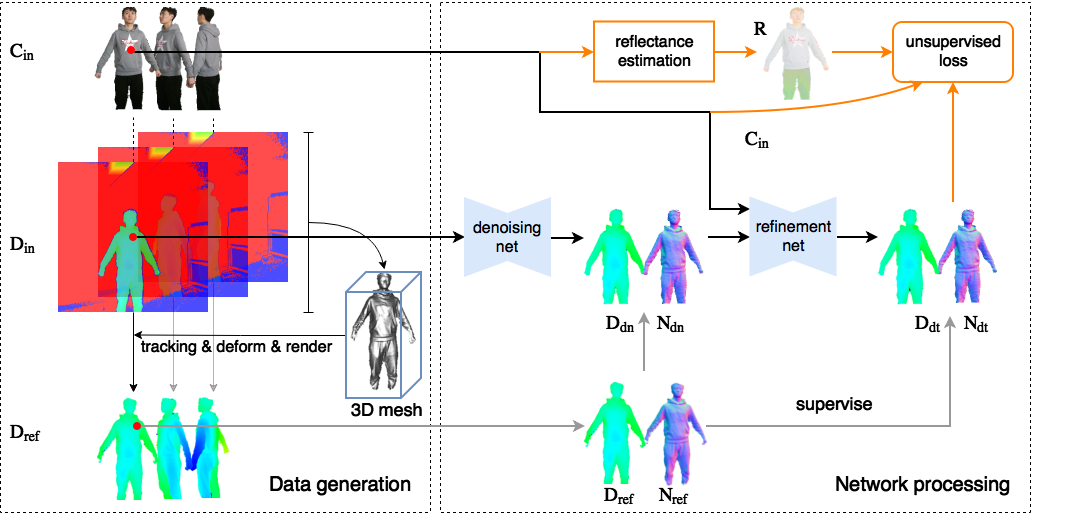

DDRNet is a cascaded Depth Denoising and Refinement Network that outperforms state-of-the-art techniques.

DDRNet is described in our ECCV 2018 paper. Notably:

- DDRNet leverages multi-frame fused geometry and the accompanying high-quality color image through a joint training strategy.

- Each sub-network focuses on improving quality in a different frequency domain.

- It combines supervised and unsupervised learning to address the lack of ground-truth training data.

Requirements:

- Python > 2.7

- TensorFlow >= 1.3.0

- numpy, scipy, scikit-image

Any NVIDIA GPU with at least 5 GB of memory suffices for testing. For training, we recommend 4 GPUs with 12 GB of memory each.
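Version requirements such as `TensorFlow >= 1.3.0` should be checked numerically rather than as plain strings, because `"1.13.1" >= "1.3.0"` is false under lexicographic comparison. A minimal sketch, assuming simple dotted release versions; `parse_version` and `meets_minimum` are hypothetical helpers, not part of this repository:

```python
def parse_version(version):
    """Turn a dotted release string like '1.13.1' into a tuple of ints (1, 13, 1).

    Assumes numeric components; a suffix such as 'rc0' is cut off at the
    first non-digit character of its component.
    """
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True if `installed` >= `minimum`, comparing component by component."""
    a, b = parse_version(installed), parse_version(minimum)
    # Pad the shorter tuple with zeros so '1.3' compares equal to '1.3.0'.
    length = max(len(a), len(b))
    a += (0,) * (length - len(a))
    b += (0,) * (length - len(b))
    return a >= b
```

In practice you would pass the installed version string (for example `tensorflow.__version__`) as `installed` and `"1.3.0"` as `minimum`.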

Clone the repository:

```sh
git clone https://github.com/neycyanshi/DDRNet.git
```


Download the dataset or prepare your own data. The dataset folder is organized as follows:

```
dataset
├── 20170907
│   ├── group1
│   │   ├── depth_map
│   │   ├── high_quality_depth (depth_ref)
│   │   ├── depth_filled
│   │   ├── color_map
│   │   ├── mask
│   │   └── albedo
│   ├── group2
│   └── ...
├── 20170910
└── ...
```

`color_map` and `depth_map` are the captured and aligned raw data. `high_quality_depth` (`depth_ref`, D_ref) is generated using a fusion method and paired with `depth_map`. `depth_filled`, `mask` and `albedo` are generated by preprocessing (optional).
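Before training, it can help to walk the layout above and report groups that lack a required subfolder. A minimal sketch, assuming `depth_map`, `high_quality_depth` and `color_map` are the required folders and the preprocessed ones are optional; `find_incomplete_groups` is a hypothetical helper:

```python
import os

# Raw and reference folders from the layout above; depth_filled, mask and
# albedo come from optional preprocessing and are not checked by default.
REQUIRED_SUBDIRS = ("depth_map", "high_quality_depth", "color_map")


def find_incomplete_groups(dataset_root, required=REQUIRED_SUBDIRS):
    """Return {group_path: [missing subfolders]} for each <dataset_root>/<date>/<group>/."""
    incomplete = {}
    for date in sorted(os.listdir(dataset_root)):
        date_dir = os.path.join(dataset_root, date)
        if not os.path.isdir(date_dir):
            continue
        for group in sorted(os.listdir(date_dir)):
            group_dir = os.path.join(date_dir, group)
            if not os.path.isdir(group_dir):
                continue
            missing = [name for name in required
                       if not os.path.isdir(os.path.join(group_dir, name))]
            if missing:
                incomplete[group_dir] = missing
    return incomplete
```

For test-only data, where `depth_ref` is not needed, pass `required=("depth_map", "color_map")`.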


Prepare index files for training or testing.

`train.csv` has at least 3 columns; pre-computed `mask` and `albedo` are optional and can be added as the 4th and 5th columns. `test.csv` is similar, but the `depth_ref` path is not necessary.
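The index files can be generated by walking the dataset folder. A minimal sketch under several assumptions that are not spelled out here: the first three columns are the `depth_map`, `high_quality_depth` (`depth_ref`) and `color_map` paths in that order, corresponding frames share the same file name across subfolders, and the file has no header row. Check these against the repository's data loader before relying on it; `write_index_csv` is a hypothetical helper:

```python
import csv
import os


def write_index_csv(dataset_root, out_path, include_ref=True,
                    optional_dirs=("mask", "albedo")):
    """Write one CSV row per frame under <dataset_root>/<date>/<group>/depth_map/.

    ASSUMED row layout: depth_map, depth_ref (high_quality_depth), color_map,
    followed by mask and albedo paths when both files exist. Frames are
    matched by identical file names across subfolders.
    Returns the number of rows written.
    """
    columns = ["depth_map"] + (["high_quality_depth"] if include_ref else []) + ["color_map"]
    rows = []
    for date in sorted(os.listdir(dataset_root)):
        date_dir = os.path.join(dataset_root, date)
        if not os.path.isdir(date_dir):
            continue
        for group in sorted(os.listdir(date_dir)):
            group_dir = os.path.join(date_dir, group)
            depth_dir = os.path.join(group_dir, "depth_map")
            if not os.path.isdir(depth_dir):
                continue
            for name in sorted(os.listdir(depth_dir)):
                paths = [os.path.join(group_dir, c, name) for c in columns]
                if not all(os.path.isfile(p) for p in paths):
                    continue  # skip frames without a complete set of inputs
                extra = [os.path.join(group_dir, d, name) for d in optional_dirs]
                if all(os.path.isfile(p) for p in extra):
                    paths += extra  # optional 4th and 5th columns
                rows.append(paths)
    with open(out_path, "w", newline="") as f:
        csv.writer(f).writerows(rows)
    return len(rows)
```

For example, `write_index_csv("dataset", "train.csv")` for training and `write_index_csv("dataset", "test.csv", include_ref=False)` for testing, where the second call assumes that `test.csv` simply omits the `depth_ref` column.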

(Optional) Preprocess

`depth_filled` and `mask`: fill small holes in the depth map and compute a salient mask using the following command:

```sh
python data_utils/dilate_erode.py dataset/20170907
```

`albedo`: compute the albedo offline using methods such as the Retinex algorithm or reflectance filtering.
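Both preprocessing steps can be approximated in a few lines of NumPy. This is a hedged sketch, not the repository's `data_utils/dilate_erode.py`: it assumes zero-valued pixels mark missing depth, fills small holes by grey dilation (taking the maximum valid neighbour), builds the mask by eroding the valid-depth region, and uses single-scale Retinex (log image minus the log of its Gaussian blur) as one simple albedo estimate. All function names and default parameters are hypothetical:

```python
import numpy as np


def _neighbourhood_stack(img, radius, pad_value):
    """Stack the (2r+1)^2 shifted copies of `img`, padding borders with `pad_value`."""
    padded = np.pad(img, radius, mode="constant", constant_values=pad_value)
    h, w = img.shape
    size = 2 * radius + 1
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(size) for dx in range(size)])


def fill_small_holes(depth, radius=1, iterations=2):
    """Fill zero-valued holes with the maximum valid neighbour (grey dilation).

    Only holes narrower than roughly 2 * radius * iterations pixels close completely.
    """
    filled = depth.astype(np.float32)  # astype returns a copy
    for _ in range(iterations):
        holes = filled == 0
        if not holes.any():
            break
        dilated = _neighbourhood_stack(filled, radius, 0.0).max(axis=0)
        filled[holes] = dilated[holes]
    return filled


def salient_mask(depth, radius=1):
    """Erode the valid-depth region (depth > 0) to drop noisy boundary pixels."""
    valid = depth > 0
    return _neighbourhood_stack(valid, radius, False).all(axis=0)


def single_scale_retinex(gray, sigma=15.0, eps=1e-6):
    """Reflectance estimate log(I) - log(G_sigma * I) for a single-channel image."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    img = gray.astype(np.float64) + eps
    blurred = img
    for axis in (0, 1):  # separable Gaussian blur with edge padding
        pad = [(0, 0), (0, 0)]
        pad[axis] = (radius, radius)
        padded = np.pad(blurred, pad, mode="edge")
        blurred = np.apply_along_axis(
            lambda v: np.convolve(v, kernel, mode="valid"), axis, padded)
    return np.log(img) - np.log(blurred)
```

Dilation with the maximum tends to fill holes with the farther (larger) depth; using the minimum instead would favour the nearer surface. Which one suits the data depends on where the holes occur.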

- Download our pre-trained model from Google Drive or Baidu Pan and put it under the folder `log/cscd/noBN_L1_sd100_B16/`. We refer to this checkpoint directory as `$CKPT_DIR`. Make sure it looks like this:

  ```
  log/cscd/noBN_L1_sd100_B16/checkpoint
  log/cscd/noBN_L1_sd100_B16/graph.pbtxt
  log/cscd/noBN_L1_sd100_B16/noBN_L1_sd100_B16-6000.meta
  log/cscd/noBN_L1_sd100_B16/noBN_L1_sd100_B16-6000.index
  log/cscd/noBN_L1_sd100_B16/noBN_L1_sd100_B16-6000.data-00000-of-00001
  ```

- Edit `eval.sh` by modifying `checkpoint_dir` and `csv_path` to yours.

- Run the test script and save results to the `$RESULT_DIR` directory. The denoised depth maps (D_dn) `dn_frame_%6d.png` and refined depth maps (D_dt) `dt_frame_%6d.png` will be saved.

  ```sh
  sh eval.sh $RESULT_DIR/  # RESULT_DIR=sample
  ```
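Before running `eval.sh`, it can help to confirm that `$CKPT_DIR` is complete; afterwards, it is convenient to pair each denoised frame with its refined counterpart. A minimal standard-library sketch: it assumes the TensorFlow `checkpoint` state file contains the usual `model_checkpoint_path: "..."` line, and, because the exact padding produced by `%6d` is not confirmed here, it accepts frame numbers with leading zeros or spaces. Both function names are hypothetical; inside a TensorFlow environment, `tf.train.latest_checkpoint` resolves the checkpoint prefix for you:

```python
import os
import re

# Files listed for the pre-trained model, relative to the checkpoint prefix.
CKPT_SUFFIXES = (".index", ".meta", ".data-00000-of-00001")


def missing_checkpoint_files(ckpt_dir):
    """Return the expected checkpoint files that are absent from `ckpt_dir`."""
    state_path = os.path.join(ckpt_dir, "checkpoint")
    if not os.path.isfile(state_path):
        return [state_path]
    prefix = None
    with open(state_path) as f:
        for line in f:
            m = re.match(r'\s*model_checkpoint_path:\s*"([^"]+)"', line)
            if m:
                prefix = m.group(1)
                break
    if prefix is None:
        raise ValueError("no model_checkpoint_path entry in " + state_path)
    # A relative prefix is resolved against the checkpoint directory.
    if not os.path.isabs(prefix):
        prefix = os.path.join(ckpt_dir, prefix)
    return [prefix + s for s in CKPT_SUFFIXES if not os.path.isfile(prefix + s)]


def pair_results(result_dir):
    """Return sorted (frame, dn_path, dt_path) tuples for frames with both outputs."""
    pattern = re.compile(r"^(dn|dt)_frame_ *(\d+)\.png$")
    found = {}
    for name in os.listdir(result_dir):
        m = pattern.match(name)
        if m:
            kind, frame = m.group(1), int(m.group(2))
            found.setdefault(frame, {})[kind] = os.path.join(result_dir, name)
    return [(frame, paths["dn"], paths["dt"])
            for frame, paths in sorted(found.items())
            if "dn" in paths and "dt" in paths]
```

An empty list from `missing_checkpoint_files("log/cscd/noBN_L1_sd100_B16")` means every file in the listing above was found.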

- `train.sh` is an example of how to train this network; please edit `index_file` and `dataset_dir` to yours.

- Run the training script and save checkpoints to the specified directory. Here `$LOG_DIR` is the parent directory of `$EXPM_DIR`. Logs, the network graph and model parameters (checkpoints) are saved in `$EXPM_DIR`. `$GPU_ID` is the selected GPU id; if `GPU_ID=0`, training is done on the first GPU.

  ```sh
  sh train.sh $LOG_DIR/$EXPM_DIR/ $GPU_ID  # LOG_DIR=cscd EXPM_DIR=noBN_L1_sd100_B16
  tensorboard --logdir=log/$LOG_DIR/$EXPM_DIR --port=6006  # visualizing training
  ```
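Saved checkpoints follow the `<name>-<step>.index` naming seen in the pre-trained model (for example `noBN_L1_sd100_B16-6000.index`). A minimal sketch for listing the training steps saved in `$EXPM_DIR`, so you can choose one to evaluate; it assumes that naming scheme, and `saved_steps` is a hypothetical helper:

```python
import os
import re


def saved_steps(expm_dir):
    """Return (step, checkpoint_prefix) pairs sorted by integer step."""
    pattern = re.compile(r"^(.+)-(\d+)\.index$")
    steps = []
    for name in os.listdir(expm_dir):
        m = pattern.match(name)
        if m:
            prefix = os.path.join(expm_dir, name[: -len(".index")])
            steps.append((int(m.group(2)), prefix))
    return sorted(steps)
```

Sorting by the integer step matters: compared as strings, `...-10000.index` would sort before `...-6000.index`.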

If you find DDRNet useful in your research, please consider citing:

```bibtex
@inproceedings{yan2018DDRNet,
  author    = {Shi Yan and Chenglei Wu and Lizhen Wang and Feng Xu and Liang An and Kaiwen Guo and Yebin Liu},
  title     = {DDRNet: Depth Map Denoising and Refinement for Consumer Depth Cameras Using Cascaded CNNs},
  booktitle = {ECCV},
  year      = {2018}
}
```

Code has been tested under:

- Ubuntu 16.04 with 3 Maxwell Titan X GPUs and an Intel i7-6900K CPU @ 3.20GHz

- Ubuntu 14.04 with a GTX 1070 GPU and Intel Xeon CPU E3-1231 v3 @ 3.40GHz